Final Project Proposal: The Learning Camera

Camera Cogitatio

Current research in the cognitive sciences and computer vision relies on raster images captured by digital cameras as a common medium. These digital images, which represent a scene as an array of pixels, are post-processed computationally to extract specific types of information. Researchers design algorithms that extract these features to accomplish tasks such as face recognition, object recognition, scene interpretation, and learning visual concepts from examples. However, this approach, employed by scientists across multiple fields, does not reflect the actual nature of the representations formed during human visual perception.

To address this gap, a new architecture can be designed to produce first prototypes of a camera that generates new types of representations, which may more closely resemble those of human vision.

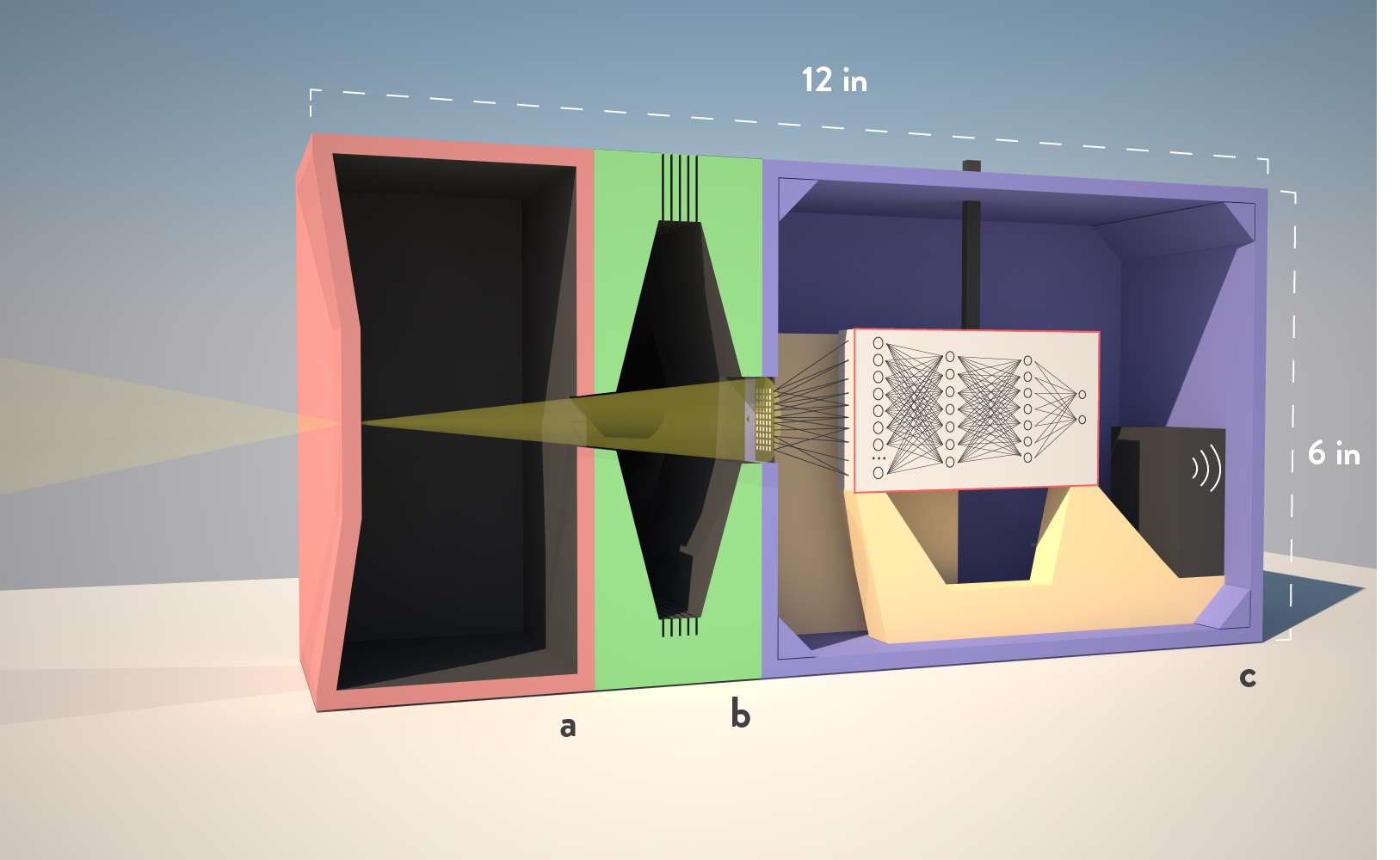

Within a 6 x 6 x 12 inch plywood box, light collected through a pinhole will be filtered and directed onto a plate of phototransistors. The pattern of currents generated across the phototransistors will provide the input to an artificial neural network (ANN) running on the board's microprocessors. Each exposure of the camera to a visual scene will produce a pattern specific to that scene, and the ANN will learn to extract structural features from these exposures by updating its internal parameters. As a result, the camera will learn to classify its exposures according to user-defined criteria; for example, it could be trained to classify basic shapes, ordinary objects, and simple architectural drawings.
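The proposal does not fix the ANN architecture, the plate's resolution, or the training rule. As a minimal sketch under those assumptions, the Python below simulates a hypothetical 4 x 4 phototransistor plate, treats each exposure as a vector of normalized currents, and trains a single-layer multiclass perceptron (the simplest ANN, swapped in here for illustration) to tell a centered vertical bar from a centered horizontal bar. The grid size, bar patterns, noise level, and the names `make_reading`, `train_perceptron`, `predict`, and `accuracy` are all hypothetical, not the camera's actual design.

```python
import random

# Hypothetical 4 x 4 plate of phototransistors (16 sensors); the proposal
# does not specify the plate's resolution.
ROWS, COLS = 4, 4
VERTICAL, HORIZONTAL = 0, 1  # hypothetical class labels


def make_reading(label, rng, bright=1.0, dark=0.1, noise=0.05):
    """Simulate normalized phototransistor currents for one exposure.

    Assumption: a vertical bar lights columns 1-2, a horizontal bar lights
    rows 1-2, and each sensor gets uniform noise in [-noise, noise].
    """
    reading = []
    for r in range(ROWS):
        for c in range(COLS):
            lit = (c in (1, 2)) if label == VERTICAL else (r in (1, 2))
            base = bright if lit else dark
            reading.append(base + rng.uniform(-noise, noise))
    return reading


def predict(weights, reading):
    """Return the class whose weight vector scores highest (bias input = 1)."""
    x = reading + [1.0]
    scores = [sum(w * xi for w, xi in zip(wc, x)) for wc in weights]
    # max() keeps the first maximal index, so ties go to the lower class.
    return max(range(len(scores)), key=lambda k: scores[k])


def train_perceptron(samples, labels, n_classes, epochs=50, lr=0.1, seed=0):
    """Multiclass perceptron: one weight vector (plus bias) per class."""
    rng = random.Random(seed)
    n_inputs = len(samples[0]) + 1  # +1 for the bias input
    weights = [[0.0] * n_inputs for _ in range(n_classes)]
    order = list(range(len(samples)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            pred = predict(weights, samples[i])
            if pred != labels[i]:
                # Pull the true class toward the input, push the wrong one away.
                x = samples[i] + [1.0]
                for j, xj in enumerate(x):
                    weights[labels[i]][j] += lr * xj
                    weights[pred][j] -= lr * xj
    return weights


def accuracy(weights, samples, labels):
    """Fraction of exposures whose predicted class matches the label."""
    hits = sum(predict(weights, s) == y for s, y in zip(samples, labels))
    return hits / len(samples)


if __name__ == "__main__":
    rng = random.Random(42)
    labels = [VERTICAL, HORIZONTAL] * 10  # 20 simulated exposures
    samples = [make_reading(y, rng) for y in labels]
    weights = train_perceptron(samples, labels, n_classes=2)
    print("training accuracy:", accuracy(weights, samples, labels))
    print("fresh exposure ->", predict(weights, make_reading(HORIZONTAL, rng)))
```

A single-layer perceptron can only separate linearly separable patterns; a bar that may appear in any column versus any row, for instance, is already beyond it, so the real camera would likely need at least one hidden layer. The perceptron is used here only because its learning rule fits in a few lines and is simple enough to port to a small microprocessor.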