This week I focused on making the real-time "hologram video" of the shocked object. I used Python with the Tornado web framework and the image processing library OpenCV. There were two parts to this week: creating the local server for the video streaming (Tornado) and the actual image processing (OpenCV). I worked on each part separately and combined them at the end.

IDEATION AND PLANNING

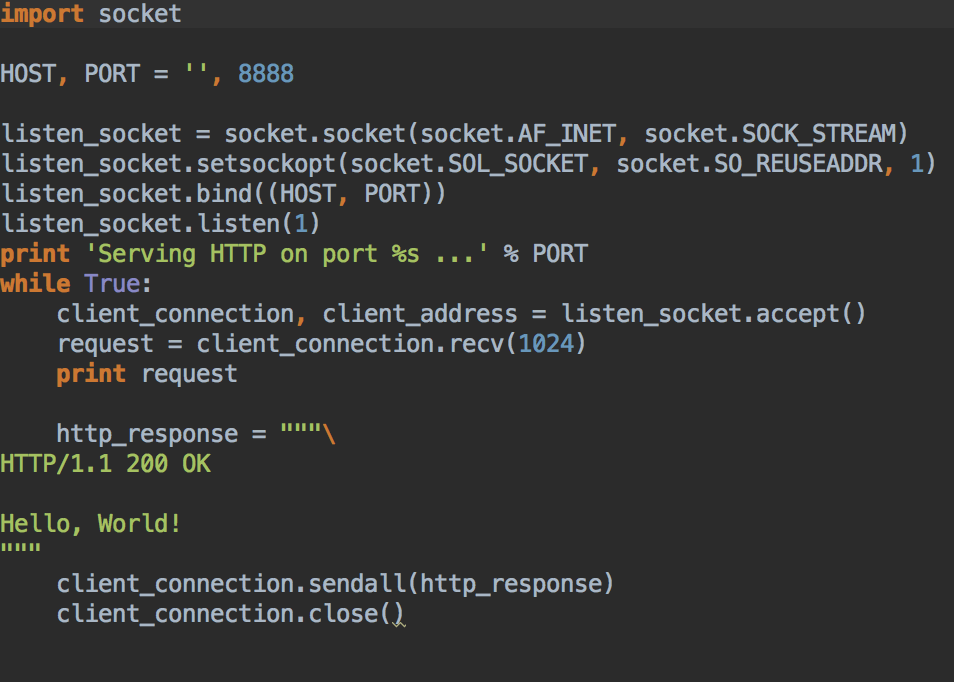

The first thing I needed to do was establish a local server on my computer, so that I would have a place to ultimately stream the video to. I imported the socket module into Python, bound the socket to localhost on port 8888, and started listening with the listen() function. In the main function, a while loop accepted a connection whenever a web client visited the localhost URL at port 8888.

And, as is customary with any code, the first thing to do was print "Hello world!"
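As a rough illustration of that setup, here is a minimal sketch of a socket server that answers every browser request with "Hello world!". The names `build_response` and `serve`, the `max_requests` parameter, and the exact HTTP headers are my own assumptions for illustration, not the code I actually ran.

```python
import socket

HOST = "localhost"
PORT = 8888  # the port mentioned in the text


def build_response(body: str) -> bytes:
    """Wrap a text body in a minimal HTTP/1.1 response (hypothetical helper)."""
    payload = body.encode("utf-8")
    header = (
        "HTTP/1.1 200 OK\r\n"
        "Content-Type: text/html; charset=utf-8\r\n"
        f"Content-Length: {len(payload)}\r\n"
        "Connection: close\r\n"
        "\r\n"
    )
    return header.encode("ascii") + payload


def serve(host: str = HOST, port: int = PORT, max_requests=None) -> None:
    """Accept connections in a loop and answer each one with 'Hello world!'.

    max_requests is an illustrative knob: None means loop forever.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        # Allow quick restarts without waiting for the port to be released.
        server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        server.bind((host, port))
        server.listen()
        handled = 0
        while max_requests is None or handled < max_requests:
            conn, _addr = server.accept()
            with conn:
                conn.recv(1024)  # read the request; its contents are ignored
                conn.sendall(build_response("Hello world!"))
            handled += 1
```

Calling `serve()` and then opening localhost:8888 in a browser should show the greeting. The loop blocks on `accept()`, so it handles one client at a time.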

EXECUTION

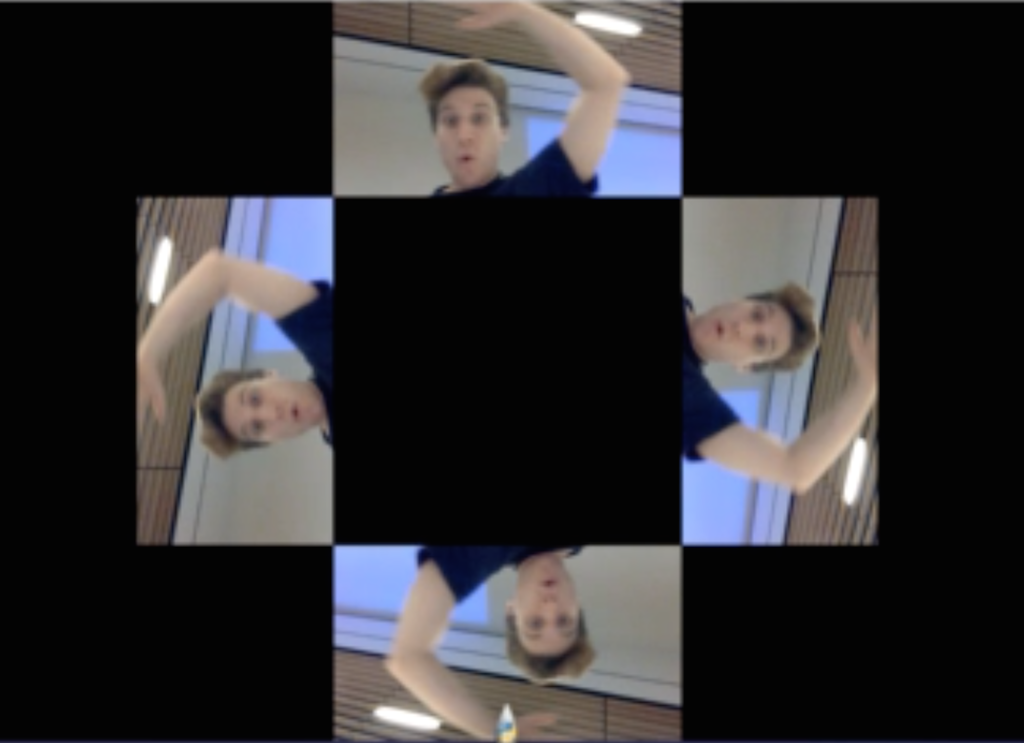

Hologram videos consist of four reflected images rotated 90 degrees with respect to each other. When viewed with a thin-shell plastic pyramid on top, the light from each of the reflected images bounces off the 45-degree pyramid plane into the center to create a pseudo-hologram. In reality this is an extension of the Pepper's ghost effect I spoke of in week 0.

For image processing, I wanted to start simple. I downloaded a few OpenCV tutorials and started playing around with capturing images from a webcam and manipulating them.

Here you can see the captured webcam image and a perspective shift. I started out using a perspective shift with zero skew to make a pure rotation, but then realized there is an OpenCV function, cv2.getRotationMatrix2D(), that creates a 2D rotation matrix for me!
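To show what that rotation matrix contains, here is a small sketch that rebuilds the 2x3 affine matrix from the formula given in the OpenCV documentation for cv2.getRotationMatrix2D(), using only the standard library. The function names `rotation_matrix_2d` and `apply_affine` are hypothetical helpers for illustration. In the real pipeline the matrix would come from OpenCV and be passed to cv2.warpAffine().

```python
import math


def rotation_matrix_2d(center, angle_deg, scale=1.0):
    """Build the 2x3 matrix that rotates about `center`.

    Follows the documented OpenCV convention: the angle is in degrees and
    positive values rotate counter-clockwise with a top-left image origin.
    """
    cx, cy = center
    theta = math.radians(angle_deg)
    alpha = scale * math.cos(theta)
    beta = scale * math.sin(theta)
    return [
        [alpha, beta, (1 - alpha) * cx - beta * cy],
        [-beta, alpha, beta * cx + (1 - alpha) * cy],
    ]


def apply_affine(matrix, point):
    """Map one (x, y) point through a 2x3 affine matrix."""
    x, y = point
    return (
        matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
        matrix[1][0] * x + matrix[1][1] * y + matrix[1][2],
    )


# Rotating a 300 x 300 image by 90 degrees about its center:
m = rotation_matrix_2d((150, 150), 90)
print(apply_affine(m, (150, 150)))  # the center stays where it is
print(apply_affine(m, (300, 150)))  # the right edge moves to the top edge
```

The translation column is what keeps the chosen center fixed, which is why a rotation about the image center does not need a separate shift step.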

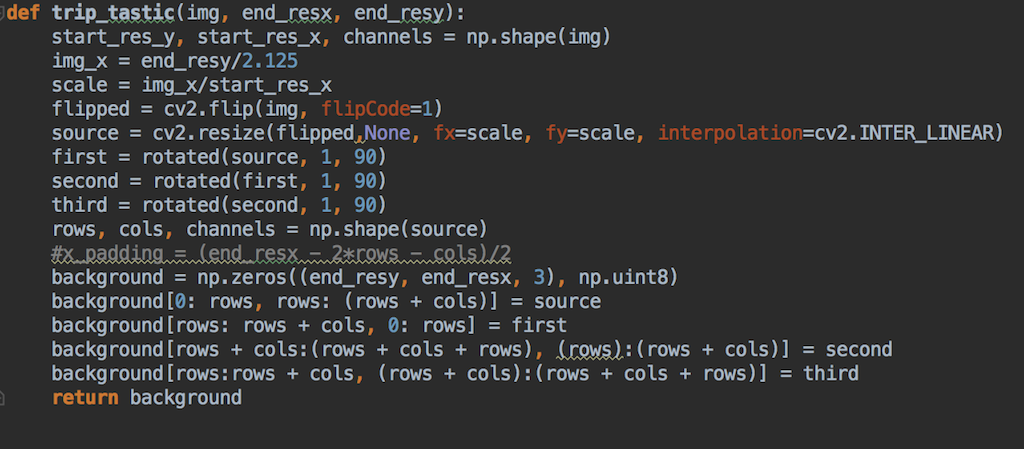

I then wrote the Python code called "trip tastic" to make the hologram video composed of the four reflected images. The code can be broken down into the following steps: (1) take in the image by establishing a cv2.VideoCapture object, (2) scale the image down to fit the screen size by converting the photo into a numpy array and truncating the matrix, (3) flip the image with cv2.flip() and a flipCode of -1, (4) rotate the image, (5) initialize a black background with numpy.zeros(), and (6) copy and paste the rotated image and its counterparts onto the background, and voila! A trippy experience indeed.
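Steps (2) through (6) can be sketched with numpy alone. This is not the trip tastic code itself: the function name `make_hologram_frame`, the 3 x 3 cross layout, and the tile size are assumptions, and np.rot90() is swapped in for the OpenCV rotation because the four views only need multiples of 90 degrees. A synthetic frame stands in for the webcam image from step (1).

```python
import numpy as np


def make_hologram_frame(frame: np.ndarray, size: int = 100) -> np.ndarray:
    """Arrange four rotated copies of `frame` in a cross on a black canvas.

    `frame` is a height x width x 3 image at least `size` pixels on each side.
    """
    # (2) Truncate the array to a square tile.
    tile = frame[:size, :size]
    # (3) Flip around both axes, the same result as cv2.flip(img, -1).
    tile = tile[::-1, ::-1]
    # (5) Black background, three tiles wide and three tiles tall.
    canvas = np.zeros((3 * size, 3 * size, 3), dtype=frame.dtype)
    # (4) + (6) Rotate in 90-degree steps and paste each copy into place.
    canvas[0:size, size:2 * size] = tile                      # top
    canvas[size:2 * size, 0:size] = np.rot90(tile, 1)         # left
    canvas[2 * size:, size:2 * size] = np.rot90(tile, 2)      # bottom
    canvas[size:2 * size, 2 * size:] = np.rot90(tile, 3)      # right
    return canvas


# Synthetic stand-in for a webcam frame (480 x 640, 3 color channels).
fake_frame = np.random.randint(0, 256, (480, 640, 3), dtype=np.uint8)
hologram = make_hologram_frame(fake_frame, size=100)
print(hologram.shape)
```

The corners and the center of the canvas stay black, which is where the pyramid sits when the frame is shown on a flat screen.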

Here's a screen capture of a webcam feed of me doing a lil' dance in lab :) with the trip tastic code going.

The next step for me was to integrate the two pieces: the server code and the image processing code. I made a master script that included a triptastic function and then live-streamed the video to localhost:8001. Again, starting simple, I first took just a webcam screenshot and uploaded it to localhost:8001.

And now a real-time hologram video of a quick wink uploaded to the server :). The main problem I noticed when playing around with this code is that the latency is highly dependent on the image quality and size. It became very much not ideal once the picture was larger than 500 x 500 pixels at a quality of 75%. After playing around a bit, I found the optimum was a smaller picture, 300 x 300 pixels, at 75% quality. I also added a cv2.waitKey() call of 10 ms per loop to give the computer time to process the images. Values above or below 10 ms introduced more delay.

Just for fun, I made a pseudo-animation using a for loop going from 0 to 360 degrees, changing the angle passed to OpenCV's rotation matrix on each pass. Notably, this increased the latency of the response.

The final step was to test out with my own iPhone to see if I could connect to the localhost in my browser and see the real-time hologram video from the webcam feed.

FINAL REMARKS

This week was a lot of fun getting to dive into Python and learn a whole new language, as well as image manipulation with OpenCV. OpenCV is incredibly clean, high-level, and intuitive, so I really enjoyed using it. I did, however, realize I would have two main challenges with my real-time hologram video: first, the latency associated with processing would put the video out of sync with the audio, and second (relatedly), the quality of the photos would need to be greatly reduced to keep latency minimal, thereby reducing the crispness of the effect. Regardless, though, I think the optimum I reached served as a successful and interesting proof of concept.