The Design Process

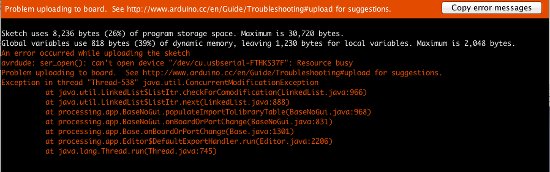

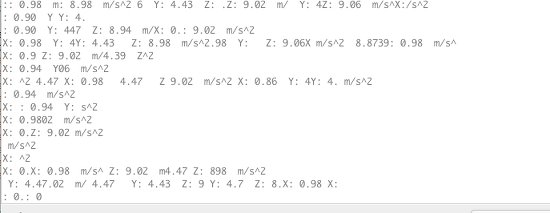

The largest takeaway from this week is that I improved my knowledge of serial communication. My breakout board uses I2C, and most of my time was spent on communication issues. Basically, I ran into a lot of problems unless I set everything up in a specific order. Once the Fabduino is plugged in with the FTDI cable, I need to upload the code. This worked about 75% of the time. When it didn't work, I unplugged it and plugged it back in, and then it worked. Max cannot be open during the upload, as it otherwise blocks the Arduino upload. Then, I check the serial monitor to see if the accelerometer is reporting accurate data. If so, the serial monitor must be closed before running the patch. I did not know this, and for the life of me could not figure out why the patch would work sometimes and not others. Sometimes it would run, but the data was jumbled and made no sense.

Error message when too many programs are trying to call serial data

Jumbled accelerometer data when Max is open while trying to load Arduino code

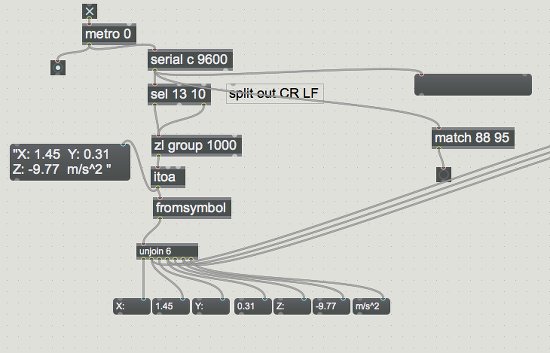

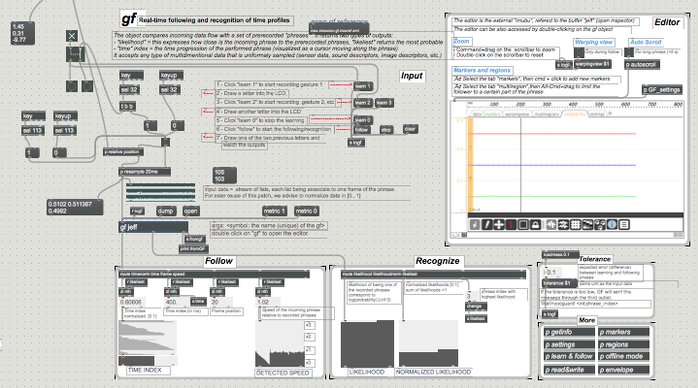

The first part of the patch takes the x, y, z data from the accelerometer and breaks the incoming string into a mix of strings and floats. That data is then fed into the gF gesture tracking patch developed by IRCAM (Institut de Recherche et Coordination Acoustique/Musique), a French avant-garde electroacoustic music research center.
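To show what that string-splitting step amounts to outside of Max, here is a minimal Python sketch. The line format (`x: 0.12 y: -0.98 z: 0.05`) and the function name `parse_accel_line` are assumptions for illustration only; the actual format depends on what the Arduino sketch prints.

```python
def parse_accel_line(line):
    """Split one serial line into text labels and float readings.

    Assumes a hypothetical, space-separated format such as
    'x: 0.12 y: -0.98 z: 0.05'.
    """
    labels, values = [], []
    for token in line.strip().split():
        try:
            # Anything that parses as a number is treated as a reading.
            values.append(float(token))
        except ValueError:
            # Everything else (e.g. 'x:') is kept as a string label.
            labels.append(token)
    return labels, values


labels, values = parse_accel_line("x: 0.12 y: -0.98 z: 0.05\r\n")
print(labels, values)
```

Only the float list would need to go on to the gesture patch; the labels are just useful for checking that the axes arrive in the expected order.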

Part of the Max patch that pulls serial data from accelerometer

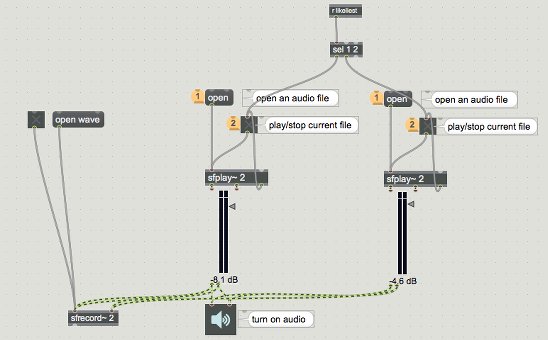

The second patch pulls audio tracks from freesound.org (LittleRobotSoundFactory's track, uagadugu's track, freesfx's match track), and plays them when the recorded gesture is recognized.

Part of the patch that pulls audio tracks when gesture is triggered

I adapted the patch from IRCAM to read accelerometer data. In short, you "teach" the patch each gesture, and then it follows the data, trying to match the data with the gestures you record.
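The teach-then-match idea can be sketched in a few lines of Python. This is not how gF works internally (it does something more sophisticated and follows the gesture as it unfolds); the sketch swaps in a plain nearest-template comparison, and the names `GestureMatcher`, `teach`, and `match` are hypothetical.

```python
import math


def resample(samples, n=16):
    """Pick n evenly spaced (x, y, z) samples so recordings of any length compare."""
    if len(samples) == 1:
        return list(samples) * n
    step = (len(samples) - 1) / (n - 1)
    return [samples[round(i * step)] for i in range(n)]


def distance(a, b):
    """Mean Euclidean distance between two gestures of equal length."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


class GestureMatcher:
    def __init__(self):
        self.templates = {}

    def teach(self, name, samples):
        """Store a recorded gesture (a non-empty list of (x, y, z) tuples)."""
        self.templates[name] = resample(samples)

    def match(self, samples):
        """Return the name of the closest taught gesture, or None if none are taught."""
        if not self.templates:
            return None
        probe = resample(samples)
        return min(self.templates, key=lambda name: distance(self.templates[name], probe))


matcher = GestureMatcher()
matcher.teach("shake", [(1, 0, 0), (-1, 0, 0)] * 4)
matcher.teach("lift", [(0, 0, z / 10) for z in range(10)])
print(matcher.match([(0, 0, z / 20) for z in range(20)]))
```

A comparison like this always returns the nearest gesture, even when nothing really matches, so a real version would also want a distance threshold before triggering a sound.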

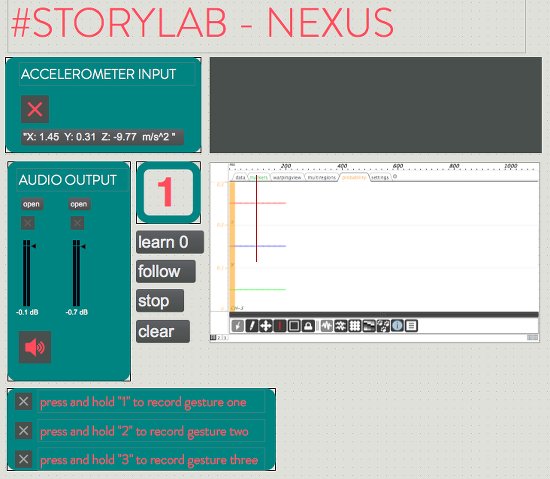

Gesture following patch adapted from gF patch from IRCAM

All in all, much more needs to be tweaked so that it doesn't take 5 or 6 tries to get the patch to recognize the gesture. I also still need to work out how to get it to read the serial data coming from the output. Ideally, I can set up an if statement in Max that asks the program to pull a particular sound track when the values are within a certain range.
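As a rough idea of what that range check could look like, here is a small Python sketch rather than a Max patch. The track names and the ranges (in g) are made up for illustration, and `pick_track` is a hypothetical helper, not something from the patch.

```python
# Hypothetical ranges (in g) and track names, chosen only for illustration.
# Each entry: (track name, x range, y range, z range)
TRACK_RANGES = [
    ("robot.wav", (-0.2, 0.2), (-0.2, 0.2), (0.8, 1.2)),  # board lying flat
    ("match.wav", (0.8, 1.2), (-0.2, 0.2), (-0.2, 0.2)),  # board tipped on its side
]


def pick_track(x, y, z, ranges=TRACK_RANGES):
    """Return the first track whose x, y, and z ranges all contain the reading."""
    for name, (x_lo, x_hi), (y_lo, y_hi), (z_lo, z_hi) in ranges:
        if x_lo <= x <= x_hi and y_lo <= y <= y_hi and z_lo <= z <= z_hi:
            return name
    return None  # no range matched, so no sound is triggered


print(pick_track(0.0, 0.1, 1.0))
print(pick_track(0.5, 0.5, 0.5))
```

Keeping the ranges in one table makes it easier to tune them after watching real accelerometer values in the serial monitor.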