Interface and application programming

For this week I decided to create a camera interface between Point gray camera and operating system and extract objects in the image. As the first step I make use of the software development kit in order to use libraries and function that communicate with the camera under different programs. Moreover I used OpenCV library that was discussed in the class to perform some image analysis.The camera provides high resolution images (2.3MP), at high frame rates (163 fps). I started by looking at the program provided by the company, and quickly realized that there is a trade off between imaging speed and writing speed. A back of envelope made it clear that the disk writing speed limits how fast I could acquire images. A full frame is 1920 x 1200 pixels, or 2304000 pixels. Assuming that each pixel value is encoded by 16 bits, the resulting image is 4.6 MB. At 100 frames per seconds, this is close to 460 MB/s. Given this constraints, I decided to use SSD hard drive with increases the bus speed beyond the requirement of the camera.

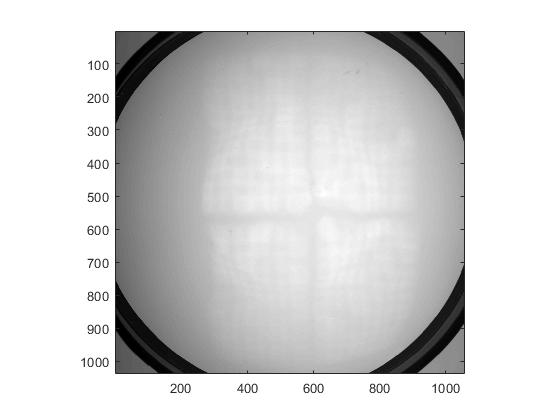

I first started using Matlab, and image processing toolbox to capture image from the camera. Even though the native configuration of the camera allows for variable fps, Matlab limits the fps. Moreover, during writing images, the software would write most of the images into computer RAM, which limits any online processing. I changed the writing format by allowing writing on the disk. Below is the code that I implemented in Matlab for communicating and capturing images from the camera.

vid = videoinput('pointgrey', 1, 'F7_BayerRG8_1920x1200_Mode0');

src = getselectedsource(vid);

vid.ReturnedColorspace = 'grayscale';

src.ExposureMode = 'Manual';

src.FrameRateMode = 'Manual';

src.GainMode = 'Manual';

src.Strobe1 = 'On';

src.Strobe1 = 'Off';

src.WhiteBalanceRBMode = 'Off';

vid.LoggingMode = 'disk&memory';

preview(vid);

vid.ROIPosition = [433 81 966 1045];

triggerconfig(vid, 'manual');

% TriggerRepeat is zero based and is always one

% less than the number of triggers.

vid.TriggerRepeat = 59;

vid.FramesPerTrigger = 100;

diskLogger = VideoWriter('G:\test_20171125\testMode0_0001.mp4', 'MPEG-4');

diskLogger.FrameRate = 100;

diskLogger.Quality = 100;

vid.DiskLogger = diskLogger;

flushdata(vid,'triggers');

start(vid);

islogging(vid);

trigger(vid);

%

flushdata(vid,'triggers');

delete(vid)

The code implements a number of operations. First it defines a video object and specify the bit coding and encoding. Next it defines the physical properties of the camera as well as mode of writing. In order to reduce the size of the data pipeline, I reduced the ROI in the camera to only capture the region of the screen with important features. I used this code to capture images of free behaving zebrafish larvae in the arena.

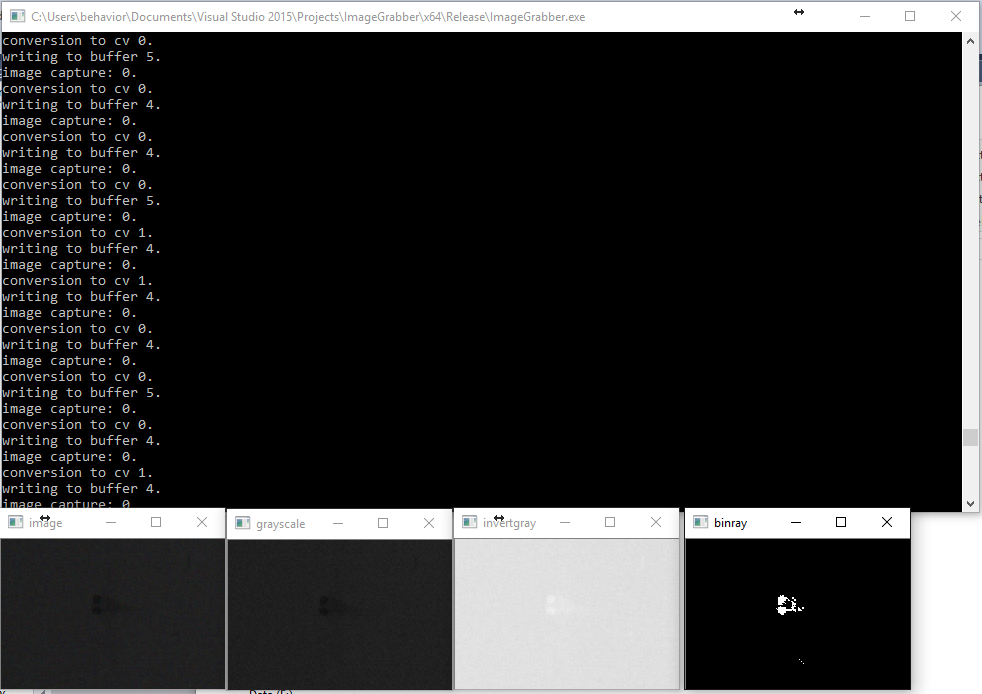

Even though Matlab was easy to code, the speed was an issue in image processing and capturing. I tried Labview for a short time after I realized that the the capturing requires a licence. As results I switch to C++ for programming. C++ in conjunction with opencv and flycapture sdk provides a flexible framework to capture and analyze images online. I started by modifying a previous code for image display, and created a pipeline for analysis as well as saving the images. There was an initial issue with finding the libraries and linking them to the C++ project in visual studio 2015. Three main libraries were needed,C and C++ library, flycapture sdk, and opencv. After installation, the hpp files and lib files needed to be linked to the program

// I had to change the symbols in include commad to make it compatible with html format

#include "FlyCapture2.h"

#include "opencv2/core/core.hpp"

#include "opencv2/opencv.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "PointGreyCamera.h"

#include "iostream"

#include "base.h"

#include "chrono"

using namespace FlyCapture2;

int main()

{

Error error;

Camera camera;

CameraInfo camInfo;

// add buffer frame

FC2Config BufferFrame;

Camera cam;

cam.GetConfiguration(&BufferFrame);

BufferFrame.numBuffers = 300;

BufferFrame.grabMode = BUFFER_FRAMES;

BufferFrame.highPerformanceRetrieveBuffer = true;

cam.SetConfiguration(&BufferFrame);

// Connect the camera

error = camera.Connect(0);

if (error != PGRERROR_OK)

{

std::cout << "Failed to connect to camera" << std::endl;

return false;

}

// Get the camera info and print it out

error = camera.GetCameraInfo(&camInfo);

if (error != PGRERROR_OK)

{

std::cout << "Failed to get camera info from camera" << std::endl;

return false;

}

std::cout << camInfo.vendorName << " "

<< camInfo.modelName << " "

<< camInfo.serialNumber << std::endl;

error = camera.StartCapture();

if (error == PGRERROR_ISOCH_BANDWIDTH_EXCEEDED)

{

std::cout << "Bandwidth exceeded" << std::endl;

return false;

}

else if (error != PGRERROR_OK)

{

std::cout << "Failed to start image capture" << std::endl;

return false;

}

// capture loop

char key = 0;

int k = 0;

char buffer[40];

char dateStr[9];

char timeStr[9];

_strdate(dateStr);

_strtime(timeStr);

auto start = std::chrono::steady_clock::now();

while (key != 'q')

{

// Get the image

auto start1 = std::chrono::steady_clock::now();

Image rawImage;

Error error = camera.RetrieveBuffer(&rawImage);

if (error != PGRERROR_OK)

{

std::cout << "capture error" << std::endl;

continue;

}

// convert to rgb

Image rgbImage;

rawImage.Convert(FlyCapture2::PIXEL_FORMAT_BGR, &rgbImage);

auto end1 = std::chrono::steady_clock::now();

auto elapsed1 = std::chrono::duration_cast(end1 - start1);

std::cout << "image capture: " << elapsed1.count() << "." << std::endl;

// convert to OpenCV Mat

unsigned int rowBytes = (double)rgbImage.GetReceivedDataSize() / (double)rgbImage.GetRows();

cv::Mat image = cv::Mat(rgbImage.GetRows(), rgbImage.GetCols(), CV_8UC3, rgbImage.GetData(), rowBytes);

// create a grayscale matrix

cv::Mat grayscale (image.size(), CV_8U);

// make grayscale image

cv::cvtColor(image, grayscale, CV_BGR2GRAY);

// create inverse grayscale

cv::Mat invertGray(image.size(), CV_8U);

// make invese grayscale image

cv::subtract(cv::Scalar::all(255), grayscale, invertGray);

// create a binary image

cv::Mat binrayImage(grayscale.size(), CV_8U);

// make binary image

cv::threshold(invertGray, binrayImage, 175, 255, cv::THRESH_BINARY);

//cv::adaptiveThreshold(invertGray, binrayImage, 150, cv::ADAPTIVE_THRESH_GAUSSIAN_C, cv::THRESH_BINARY, 11, 2);

//cv::imshow("grayscale", grayscale);

cv::imshow("binray", binrayImage);

//cv::imshow("invertgray", invertGray);

auto end2 = std::chrono::steady_clock::now();

auto elapsed2 = std::chrono::duration_cast(end2 - start1);

std::cout << "conversion to cv " << elapsed2.count() << "." << std::endl;

//sprintf(buffer, "G:/testC/image_%d.jpg", k);

auto end3 = std::chrono::steady_clock::now();

auto elapsed3 = std::chrono::duration_cast(end3 - start);

sprintf(buffer, "G:/test_20171128_2/image_%d_%d.jpg",elapsed3.count(), k);

//printf(buffer);

cv::imshow("image", image);

cv::imwrite(buffer, image);

auto end4 = std::chrono::steady_clock::now();

auto elapsed4 = std::chrono::duration_cast(end4 - start1);

std::cout << "writing to buffer " << elapsed4.count() << "." << std::endl;

key = cv::waitKey(1);

k=k+1;

}

error = camera.StopCapture();

if (error != PGRERROR_OK)

{

// This may fail when the camera was removed, so don't show

// an error message

}

camera.Disconnect();

return 0;

}

With this pipeline, I was able to first record and save the at very fast sample rate> 100 Hz. I used the chronos library to measure the timing of operations with microsecond precision. Moreover, I did image analysis to detect the boundary of the fish online. I used openCV library for this purpose and used it to first acquire the images, and then create a grayscale version, threshold them and binarize the image. Finally I used blackwhite morphing process to identify the boundary.

The reconstruction does accurately capture the physical boundary. I need to use operation such as black/white morph to increase the peformance of online reconstruction.