How To Make (almost) Anything

interface & application programming

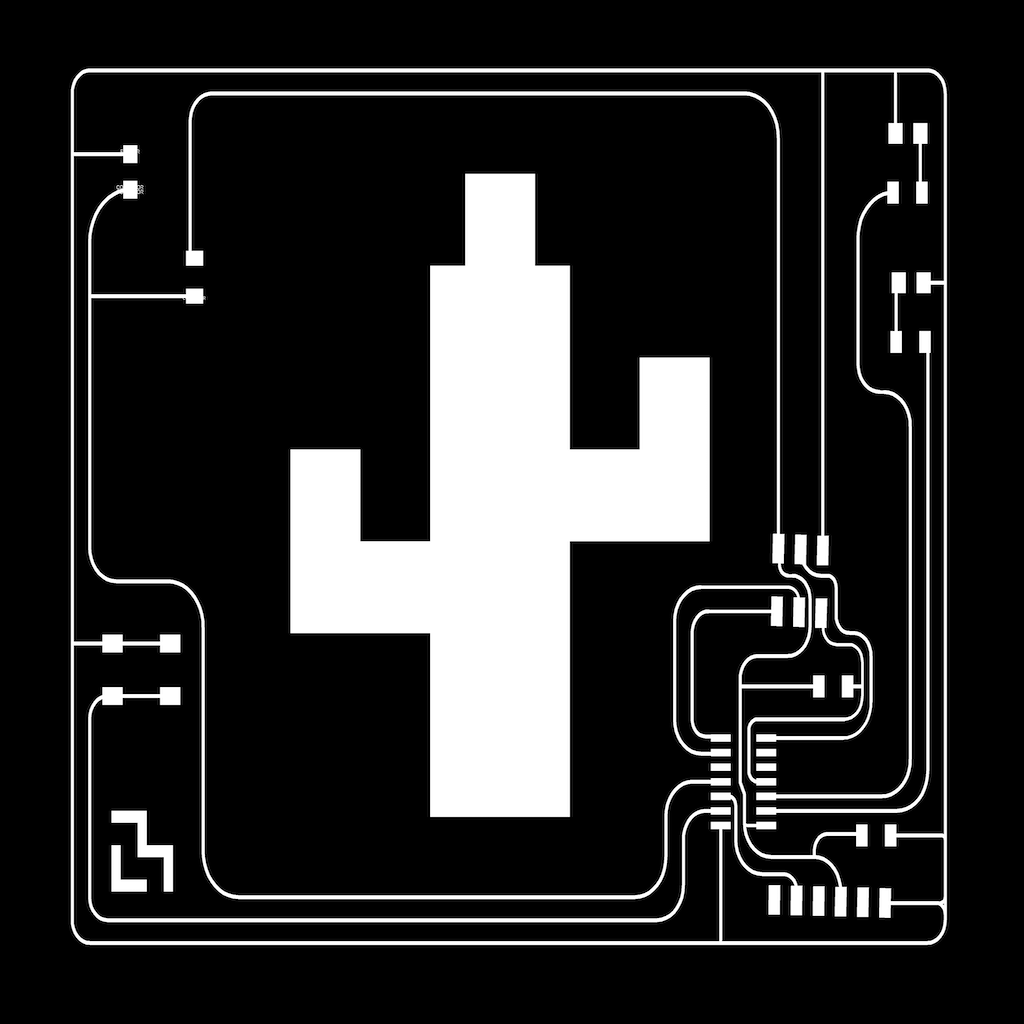

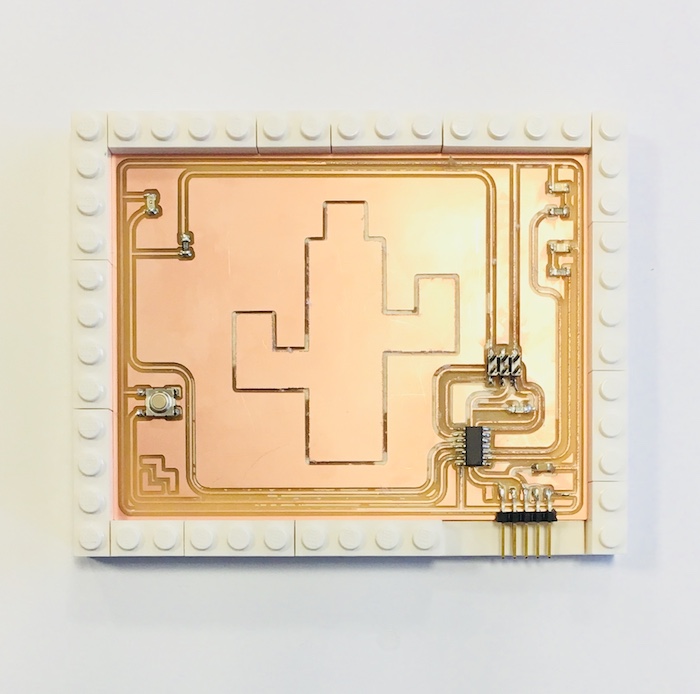

AUGMENTED REALITY FOR CACTUS CIRCUITS

Code: https://github.com/aberke/ar-cactus-circuits

TASK

write an application that interfaces with an input &/or output device that you made.

I’ve designed and programmed a number of interfaces and applications, but never any involving augmented reality (AR). This seemed like the right week to try.

BUILDING WITH AR

There are several AR frameworks and tools to choose from:

- ARKit (by Apple for iOS)

- ARCore (by Google for Android)

- Unity + Vuforia

I used ARKit.

PREREQUISITES / DEVELOPMENT ENVIRONMENT

To make my development environment compatible with both my iPhone and Apple’s ARKit, I:

- Upgraded my Mac

- Downloaded Xcode 10.1

RESOURCES

I read and worked through tutorials to help me understand Xcode, ARKit, and Swift (the iOS programming language). Swift is funny looking.

- Tutorial to get acquainted with Xcode + ARKit + my phone: How to Get Started With Apple’s ARKit Augmented Reality Platform

- Helpful resource for understanding Xcode scenes, SceneKit, and .scn files: https://www.appcoda.com/arkit-image-recognition/

- https://blog.pusher.com/building-an-ar-app-with-arkit-and-scenekit/

I mashed a few tutorials together and modified them towards my project goals. I tested my work by pointing my phone at images I wanted it to detect.

But what is all this code doing?

Helpful Apple documentation explaining ARSCNView and related classes: https://developer.apple.com/documentation/arkit/building_your_first_ar_experience

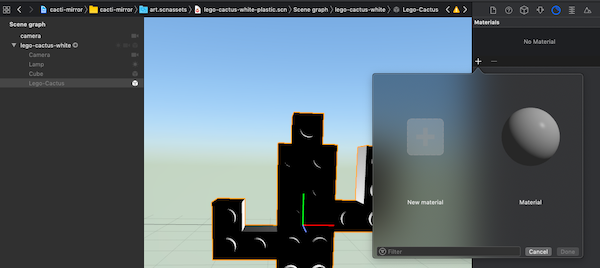

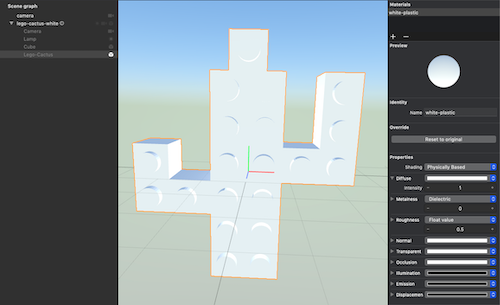

AR MODELS

Models to drop into scenes must be in the Xcode project as .scn files.

Making my own .scn files to overlay on top of detected images:

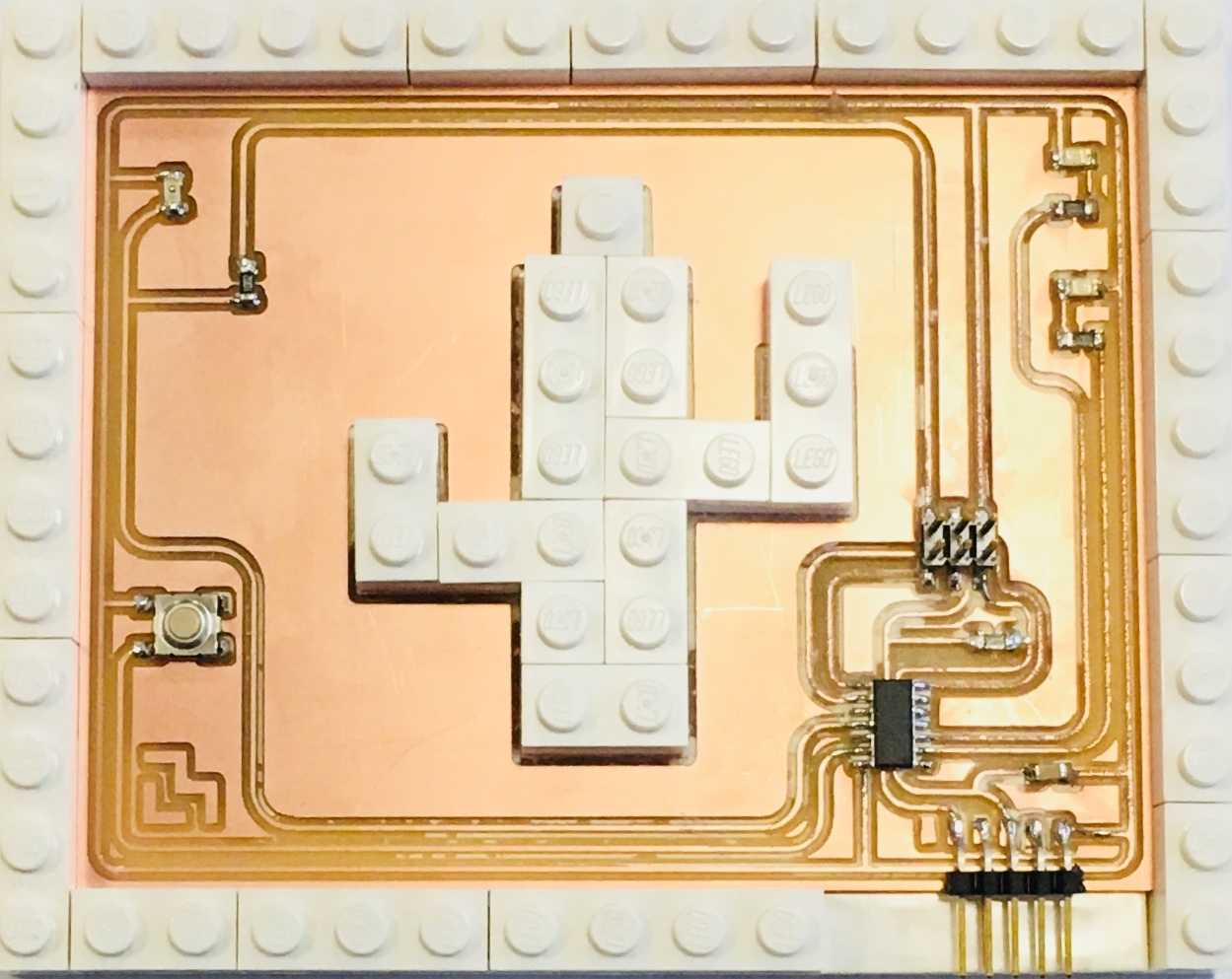

- Make a 3D model of Lego cacti to use

- Export as a .dae file

- Fusion 360 does not support .dae export, so I downloaded Blender (free)

- Created model in Fusion 360 → export as STL → import to Blender → export as .dae file → save to Xcode project

- In Xcode: File → New → create new .scn file from exported file

- Import into Xcode project

The materials attached to the object did not import correctly into Xcode. I used Xcode’s native SceneKit editor to attach the materials again.
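Once the .scn file is in the project, it can be loaded at runtime and one of its nodes pulled out for use in the AR scene. This is only a minimal sketch and not necessarily how the code in the repository does it: `loadModel` is a hypothetical helper, and the file name `art.scnassets/cactus.scn` and node name `cactus` in the usage line are assumptions.

```swift
import SceneKit

/// Loads a .scn file from the app bundle and returns the node with the given name.
/// Hypothetical helper for illustration; returns nil if the file or node is missing.
func loadModel(sceneFile: String, nodeName: String) -> SCNNode? {
    // SCNScene(named:) is failable: it returns nil when the file is not in the bundle.
    guard let scene = SCNScene(named: sceneFile) else { return nil }
    // Search the whole node tree, since exported models are often nested.
    return scene.rootNode.childNode(withName: nodeName, recursively: true)
}

// Usage (assumed names): 
// let cactus = loadModel(sceneFile: "art.scnassets/cactus.scn", nodeName: "cactus")
```

Returning an optional keeps a missing or renamed asset from crashing the app; the caller can simply skip placing the model.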

Model Sizing

- Correctly sizing the model makes positioning it in a scene much simpler.

- SceneKit uses meters as its unit

- https://medium.com/s23nyc-tech/getting-started-with-arkit-and-scenekit-76814862cc75
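Because SceneKit reads a model's raw coordinates as meters, a model drawn in other units needs a uniform scale factor. Here is a rough sketch of that arithmetic. `uniformScale` is a hypothetical helper (not a SceneKit API), and the numbers in the usage line (a model 80 units tall that should appear 5 cm tall) are made-up example values.

```swift
/// Returns the uniform scale factor that makes a model whose raw height is
/// `rawModelHeight` (in whatever units it was exported with) appear
/// `targetHeightMeters` tall once SceneKit treats those raw numbers as meters.
/// Hypothetical helper for illustration only.
func uniformScale(rawModelHeight: Double, targetHeightMeters: Double) -> Double {
    precondition(rawModelHeight > 0, "model height must be positive")
    return targetHeightMeters / rawModelHeight
}

// Example values (assumed): a model 80 units tall, shown 0.05 m tall.
let scale = uniformScale(rawModelHeight: 80, targetHeightMeters: 0.05)
```

The same factor would then be applied to all three axes of the node's `scale` so the model keeps its proportions.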

ICONS

To make icons for the “app” I used the Icon Set Creator tool: https://itunes.apple.com/us/app/icon-set-creator/id939343785

AR TARGETS & DETECTION

Apple documentation for ARKit reference images: https://developer.apple.com/documentation/arkit/recognizing_images_in_an_ar_experience

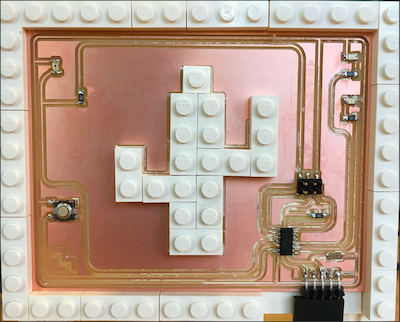

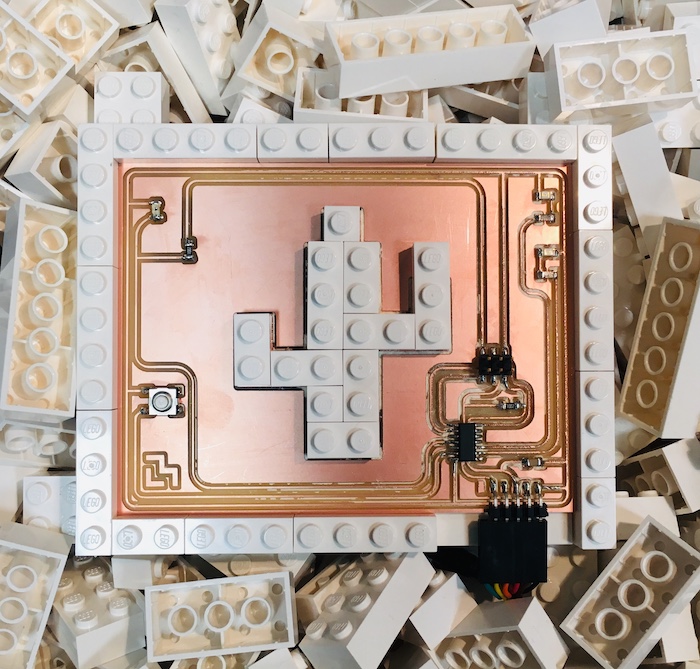

The AR target I wanted to detect is the cactus circuit board. I wanted the AR behavior to differ depending on whether the lights are:

1. All off

2. One on/one off

3. All on

So I took photos of each state, so that the different photos could serve as different AR target reference images.

For example, when the reference image with all lights off (1) is detected, the app does behavior 1; when the reference image with all lights on (3) is detected, it does behavior 3.

Reference images:

1. All off

2. One on/one off

3. All on

I had to use images of just the lights, and not the board, because otherwise the reference images were too similar for the AR app to detect their differences.
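The idea of one reference image per light state can be sketched as follows. This is an illustration under assumptions rather than the code from the repository: the image names, the `LightState` enum, the `LightStateDetector` class, and the asset group name "AR Resources" are all hypothetical. The ARKit part only compiles for iOS, so it sits behind a platform check.

```swift
import Foundation

/// The three board states. Raw values are assumed reference-image names.
enum LightState: String, CaseIterable {
    case allOff = "lights-all-off"
    case oneOn = "lights-one-on"
    case allOn = "lights-all-on"

    /// Placeholder label for the behavior tied to each state.
    var behavior: String {
        switch self {
        case .allOff: return "behavior 1"
        case .oneOn:  return "behavior 2"
        case .allOn:  return "behavior 3"
        }
    }
}

/// Maps a detected reference image's (optional) name to a light state.
func lightState(forImageNamed name: String?) -> LightState? {
    guard let name = name else { return nil }
    return LightState(rawValue: name)
}

#if os(iOS)
import ARKit

final class LightStateDetector: NSObject, ARSCNViewDelegate {
    /// Starts a session that looks for the images in an asset catalog group.
    func run(on sceneView: ARSCNView) {
        let configuration = ARWorldTrackingConfiguration()
        // "AR Resources" is an assumed group name in the asset catalog.
        if let images = ARReferenceImage.referenceImages(inGroupNamed: "AR Resources",
                                                         bundle: nil) {
            configuration.detectionImages = images
        }
        sceneView.delegate = self
        sceneView.session.run(configuration)
    }

    /// ARKit calls this when it adds a node for a newly detected anchor.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let imageAnchor = anchor as? ARImageAnchor,
              let state = lightState(forImageNamed: imageAnchor.referenceImage.name)
        else { return }
        // A real app would attach different content to `node` per state.
        print("Detected \(state.rawValue): run \(state.behavior)")
    }
}
#endif
```

Keeping the name-to-state mapping in plain Swift means it can be checked without a device, while the delegate stays a thin wrapper around it.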

POSITIONING THE (CACTUS) MODEL IN THE SCENE

- Helpful tutorial: https://www.appcoda.com/arkit-horizontal-plane/

- Helpful tutorial for repositioning the cactus after the AR target moves in the scene: https://hackernoon.com/arkit-tutorial-image-recognition-and-virtual-content-transform-91484ceaf5d5

RESULT

In the end… my images of the lights were too small and too difficult for ARKit to identify or disambiguate, especially when the ambient lighting fluctuated.

So I cut scope and just showed one setting, where a cactus hovered and spun.
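A hover-and-spin effect like this can be built from SceneKit actions. The sketch below is one possible way to do it, not necessarily what the project's code does; `addHoverAndSpin` is a hypothetical helper, and the hover height and timing defaults are assumed values.

```swift
import SceneKit

/// Attaches an endless hover-and-spin animation to `node`.
/// Hypothetical helper; default height (in meters) and period are assumptions.
func addHoverAndSpin(to node: SCNNode,
                     hoverHeight: CGFloat = 0.02,
                     period: TimeInterval = 2.0) {
    // Bob up, then back down, easing at both ends of each move.
    let up = SCNAction.moveBy(x: 0, y: hoverHeight, z: 0, duration: period / 2)
    up.timingMode = .easeInEaseOut
    let down = SCNAction.moveBy(x: 0, y: -hoverHeight, z: 0, duration: period / 2)
    down.timingMode = .easeInEaseOut
    let hover = SCNAction.repeatForever(.sequence([up, down]))

    // One full turn around the vertical (y) axis every two periods.
    let spin = SCNAction.repeatForever(
        .rotateBy(x: 0, y: 2 * .pi, z: 0, duration: period * 2))

    // Separate keys let either animation be removed independently later.
    node.runAction(hover, forKey: "hover")
    node.runAction(spin, forKey: "spin")
}
```

Running the two actions under separate keys, rather than grouping them, makes it possible to stop the spin while leaving the hover in place.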

Along the way, I learned about using the Xcode development environment, coding in Swift, and the limitations of AR.

Distribution?

It would be nice to share this “app”, but I will not be able to submit it to the Apple App Store for distribution because it is too simple and does not adhere to Apple’s guidelines.

From https://developer.apple.com/app-store/review/guidelines/#minimum-functionality:

4.2.1 Apps using ARKit should provide rich and integrated augmented reality experiences; merely dropping a model into an AR view or replaying animation is not enough.

SOURCE CODE

All code is on GitHub: https://github.com/aberke/ar-cactus-circuits