How To Make Almost Anything, Neil Gershenfeld

MIT Center For Bits And Atoms

Fab labs share an evolving inventory of core capabilities to make (almost) anything, allowing people and projects to be shared. These are my projects.

PARKS 2.0: Public Park Sensors

What does it do?

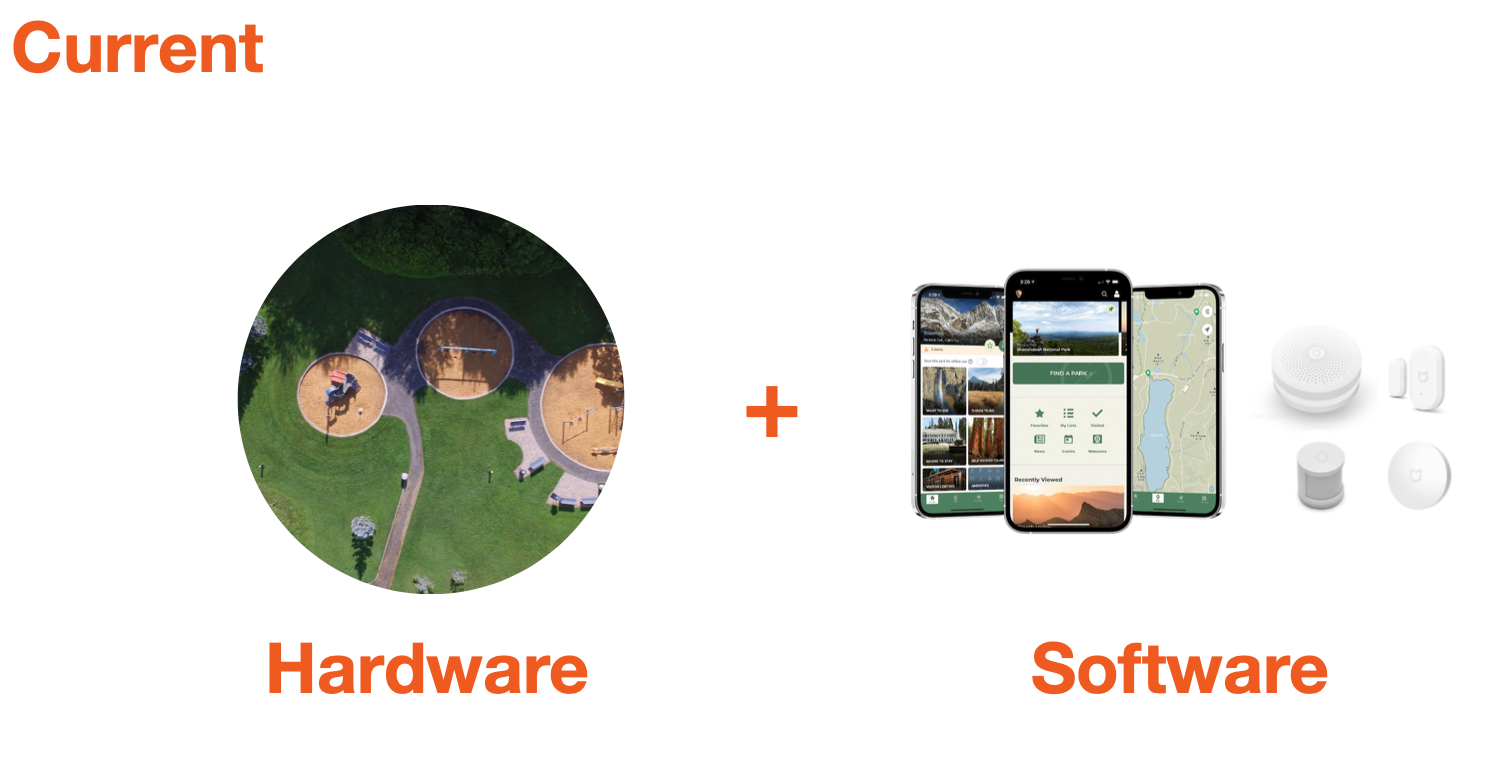

It detects motion using a PIR (passive infrared) sensor, which turns on a webserver-enabled ESP32-CAM. The camera's video stream is then analyzed with real-time object recognition to detect and monitor activity in urban parks. It can identify how long people, dogs and other animals remained in the park, and what sorts of objects they brought with them or used there. This sensor doesn’t collect any private information. It also measures soil moisture and temperature in the park and streams those readings to a platform. The sensor is meant to add an “operating system” to the “hardware” currently in urban parks and provide important, actionable data to the Open Space Planning Department in the City of Cambridge. It is designed to be deployed in multiple parks at once, monitoring rain, soil quality, and public use, so the city can better serve citizens and maximize encounters in, and use of, this social infrastructure.
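The PIR-triggered behavior can be pictured as a small state machine: motion switches the camera on, and it stays on for a while after the last trigger. The Python simulation below is a minimal sketch under assumptions that are not in the original: a fixed hold time after the last motion event, and the hypothetical names `HOLD_SECONDS`, `CameraGate` and `camera_schedule`. On the real device this logic would live in the Arduino firmware, not in Python.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical hold time: how long the camera stays on after the last PIR trigger.
HOLD_SECONDS = 30.0


@dataclass
class CameraGate:
    """Keeps the camera/webserver on while motion was seen within `hold` seconds."""
    hold: float = HOLD_SECONDS
    last_motion: Optional[float] = None

    def update(self, t: float, motion: bool) -> bool:
        """Feed one PIR sample taken at time t (seconds); return True if the camera should be on."""
        if motion:
            self.last_motion = t
        return self.last_motion is not None and (t - self.last_motion) <= self.hold


def camera_schedule(samples: List[Tuple[float, bool]], hold: float = HOLD_SECONDS) -> List[bool]:
    """Return the camera on/off state for each (time, motion) PIR sample."""
    gate = CameraGate(hold=hold)
    return [gate.update(t, m) for t, m in samples]


if __name__ == "__main__":
    pir = [(0, False), (5, True), (20, False), (40, False), (60, True)]
    print(camera_schedule(pir, hold=30))  # [False, True, True, False, True]
```

Gating the camera this way keeps the power-hungry Wi-Fi streaming off when the park is empty, which matters for a battery-powered unit.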

Who's done what beforehand?

Eco-counter has developed automated bike and pedestrian counters that allow parks and recreation managers to understand when and how parks are being used, so they can better deploy their resources. This Eco-counter uses PYRO, a passive-infrared pyroelectric technology, to determine the number of people in a park. There are also higher-end projects that attempt to use LIDAR technology to map movement and monitor parks. When I started this project, I ordered two LIDAR sensors; however, they didn’t arrive in time for me to iterate with them, so I decided to use an ESP32-CAM with real-time object detection instead.

What did you design?

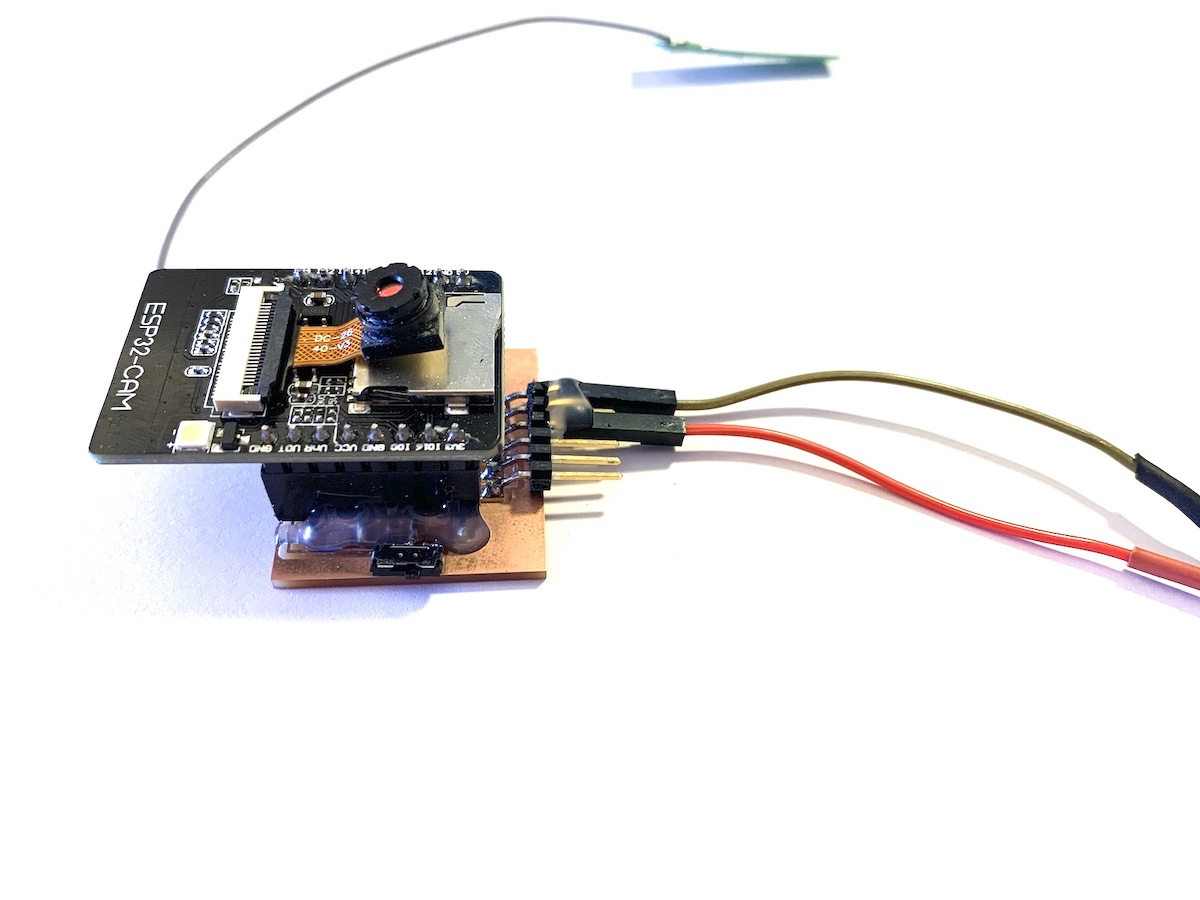

I designed the enclosure and the board that hosts the ESP32-CAM, the PCB that measures soil moisture and temperature, and the integration of all the components. I also adapted several existing programs to link the ESP32-CAM with a local webserver and run real-time object detection on its stream. Finally, I designed the interface that lets a viewer monitor the number of objects detected in the urban park and make decisions based on these numbers.

What materials and components were used?

A custom-designed PCB, an ESP32-CAM, an external battery to power all the components, acrylic and PLA for the enclosure, wood for a stand, an antenna, a temperature sensor, and a soil moisture sensor.

Where did they come from?

Most components came from the Harvard Fab Lab. The LIDAR sensors, ordered to explore an alternative to the ESP32-CAM object detection approach, came from Digi-Key.

What parts and systems were made?

As mentioned, this project uses a temperature sensor, a soil moisture sensor (which ended up not working and had to be dropped), and an ESP32-CAM that streams video, on which YOLO real-time object detection identifies different objects in public space. I also used 3D printing to test several possible cases, and laser-cut acrylic for an alternative enclosure design.

What processes were used?

I used a Roland milling machine to mill the PCB, KiCad for electronics design, and soldering to assemble the boards that control all the components. I also used the Arduino IDE to program the Wi-Fi connectivity and the temperature sensor, and Python to build the interface that runs object detection libraries on the video stream and counts the detected objects. Additionally, I used Rhino to design different iterations of the enclosure, which I then produced with 3D printing and laser cutting.
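The counting step of the Python interface could look roughly like the sketch below. It assumes, which the original does not specify, that the YOLO detector has already turned each video frame into a list of class labels; the function name `summarize_detections` and the `fps` parameter are hypothetical. It reports raw detections alongside frames-present, since a person visible in many frames is detected once per frame.

```python
from collections import Counter
from typing import Dict, List


def summarize_detections(frames: List[List[str]], fps: float) -> Dict[str, Dict[str, float]]:
    """Summarize per-frame detector output.

    frames: one list of class labels per frame, e.g. [["person", "dog"], ["person"]].
    For each label, returns:
      - frames: number of frames in which the label appeared at least once
      - raw_detections: total detections (re-counts the same individual every frame)
      - seconds_visible: approximate time in view, frames / fps
    """
    frames_present: Counter = Counter()
    raw_detections: Counter = Counter()
    for labels in frames:
        raw_detections.update(labels)
        frames_present.update(set(labels))  # count each label at most once per frame
    return {
        label: {
            "frames": frames_present[label],
            "raw_detections": raw_detections[label],
            "seconds_visible": frames_present[label] / fps,
        }
        for label in sorted(frames_present)
    }


if __name__ == "__main__":
    stream = [["person", "dog"], ["person", "person"], ["person"], []]
    print(summarize_detections(stream, fps=2)["person"])
    # {'frames': 3, 'raw_detections': 4, 'seconds_visible': 1.5}
```

Time in view is the quantity this per-frame approach can estimate reliably; raw detection totals grow with frame rate, not with the number of visitors.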

What questions were answered?

A surprising number of questions were answered. First, an ESP32 is not powerful enough to run real-time object detection; this must be done externally, on a more powerful device or in the cloud. Second, as more and more computing moves to the cloud, sensors can be quite small, since they only need to sense and collect data, not process it. Third, even if sensors can be small, enclosures sometimes need to be bigger than necessary so that people can identify the object and relate to it, especially in public space. As I designed the enclosure, I realized I could make a very small one for the ESP32 and PCB with the specifications I wanted. However, since I wanted to deploy this in urban parks, a small enclosure wouldn’t be desirable, especially with a camera involved: the device would have to be as visible as possible.

What worked? What didn't?

The initial idea, to develop a sensor that could work without compromising users’ privacy or requiring a lot of computing power, didn’t work. I had thought about using LIDAR sensors to map the environment and track users without identifying them or involving any cameras (for privacy reasons), but Nathan suggested this would be extremely difficult and that I should scale down my project given the current circumstances. I’m still interested in testing LIDAR, so I ordered some sensors to explore, knowing I would not be able to execute the project with that technology.

Another aspect that didn’t work was determining the exact number of people in multiple parks at once. Because real-time object detection runs frame by frame, a single person is detected multiple times in the same video stream. This made me realize that I could only determine how long a user or object was in the frame, or whether a user or object was in the park, but not count the number of distinct objects or users. After some research, I learned there is a whole field dedicated to this problem (multi-object tracking).
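To illustrate the idea behind multi-object tracking, here is a deliberately naive greedy nearest-centroid tracker. It is not what this project used, and it is far simpler than production trackers: it assumes detections arrive as (x, y) box centers in pixels, and `track_centroids` and `max_distance` are hypothetical names and values. Each detection inherits the ID of the nearest unmatched track from the previous frame if it is close enough; otherwise it starts a new track. Counting distinct IDs approximates distinct individuals.

```python
import math
from itertools import count
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def track_centroids(frames: List[List[Point]], max_distance: float = 50.0) -> int:
    """Greedy nearest-centroid tracker; returns the number of distinct track IDs.

    frames: one list of (x, y) detection centers per frame.
    Limitation: a track with no detection in a frame is dropped, so someone who
    is missed for even one frame comes back as a new ID (no occlusion handling).
    """
    next_id = count()
    previous: Dict[int, Point] = {}  # track id -> position in the previous frame
    seen_ids = set()
    for detections in frames:
        current: Dict[int, Point] = {}
        unmatched = dict(previous)  # tracks not yet claimed in this frame
        for x, y in detections:
            best_id, best_dist = None, max_distance
            for tid, (px, py) in unmatched.items():
                d = math.hypot(x - px, y - py)
                if d <= best_dist:
                    best_id, best_dist = tid, d
            if best_id is None:
                best_id = next(next_id)  # nothing close enough: start a new track
            else:
                del unmatched[best_id]  # each track matches at most one detection
            current[best_id] = (x, y)
            seen_ids.add(best_id)
        previous = current
    return len(seen_ids)


if __name__ == "__main__":
    # A person walking plus a dog that appears in the second frame.
    frames = [[(100, 100)], [(110, 102), (400, 300)], [(121, 105), (405, 298)]]
    print(sum(len(f) for f in frames))  # 5 raw detections
    print(track_centroids(frames))      # 2 distinct tracks
```

The gap between 5 raw detections and 2 tracks is the over-counting problem described above; real trackers add motion prediction and appearance features to survive occlusions and crowds.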

How was it evaluated?

This project was evaluated by temporarily setting it up in a public park to test whether it could detect and recognize different objects there and the ways in which they were used. I stayed next to it, so I could compare the results in the interface with what I witnessed myself. Additionally, on demo day, I deployed it in two different locations in the MIT Media Lab to compare how people moved around the 6th floor and see whether any meaningful data could be extracted (for example, more people identified near the food tables).

What are the implications?

The main implication is that almost anyone can now implement real-time object identification, using very small cameras that stream footage to a computer or to the cloud. This actually concerned me a great deal. As the project progressed, I started to worry about the project itself, and whether such an object should exist. Although my purpose was to provide important data about park usage, temperature and moisture levels, this technology could be misused. It could be used, for example, to police public spaces, if it is not already used in that way. I’m surprised there aren’t more conversations about the harms of using object detection in cameras in public spaces. In the future, I would like to explore determining activity in parks using only audio levels. I understand this is one way biologists estimate insect and animal populations, so it might be a better approach that maintains privacy and keeps cameras out of public space.
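As a starting point for the audio-only idea, a microphone's loudness can be summarized as an RMS level in dBFS per time window, and activity estimated as the share of windows above a threshold. This is a minimal sketch, not a validated method: it assumes samples normalized to the range -1.0 to 1.0, and the function names and the -40 dBFS threshold are hypothetical and would need calibration against on-site observation.

```python
import math
from typing import List, Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """Return the RMS level of normalized samples (-1.0..1.0) in dBFS (0 dBFS = full scale)."""
    if not samples:
        raise ValueError("need at least one sample")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def activity_fraction(windows: List[Sequence[float]], threshold_db: float = -40.0) -> float:
    """Fraction of audio windows louder than threshold_db (hypothetical threshold)."""
    if not windows:
        return 0.0
    levels = [rms_dbfs(w) for w in windows]
    return sum(level > threshold_db for level in levels) / len(levels)


if __name__ == "__main__":
    quiet = [0.0] * 4
    voices = [0.5, -0.5] * 2    # about -6 dBFS
    breeze = [0.001, -0.001]    # -60 dBFS
    print(activity_fraction([quiet, voices, breeze]))  # one of three windows is "active"
```

Only aggregate levels leave the device in this scheme, which is what would make it more privacy-preserving than video; it cannot tell people from dogs or traffic without further analysis.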