information in physical systems

4.1

Verify that the entropy function satisfies the following properties.

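1) Continuity

The entropy is defined as $H(x) = -\sum_x p(x) \log p(x)$, where $p(x)$ is a probability, so it takes values between 0 and 1. The logarithm is continuous on $(0, 1]$, and $p \log p \to 0$ as $p \to 0$, so with the convention $0 \log 0 = 0$ each term is continuous on $[0, 1]$. A finite sum of continuous functions is continuous, so $H(x)$ is a continuous function of the probabilities.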

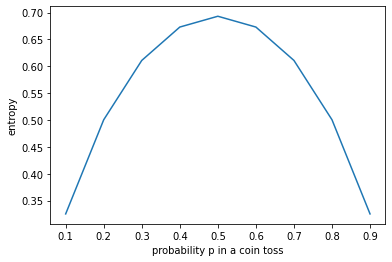

    import matplotlib.pyplot as plt
    import numpy as np

    # Coin toss: heads has probability p, tails has probability 1 - p.
    # Stay strictly inside (0, 1) so that log(0) is never evaluated.
    head_probability = np.linspace(0.001, 0.999, 999)

    # Binary entropy, using the natural logarithm (units of nats).
    entropy_of_information = (-head_probability * np.log(head_probability)
                              - (1 - head_probability) * np.log(1 - head_probability))

    plt.plot(head_probability, entropy_of_information)
    plt.xlabel("probability p in a coin toss")
    plt.ylabel("entropy (nats)")
    plt.show()

4) Independence

If $x$ and $y$ are independent, then $p(x|y) = p(x)$, so Bayes' rule $p(x, y) = p(x|y)\,p(y)$ gives $p(x, y) = p(x)\,p(y)$. Therefore,

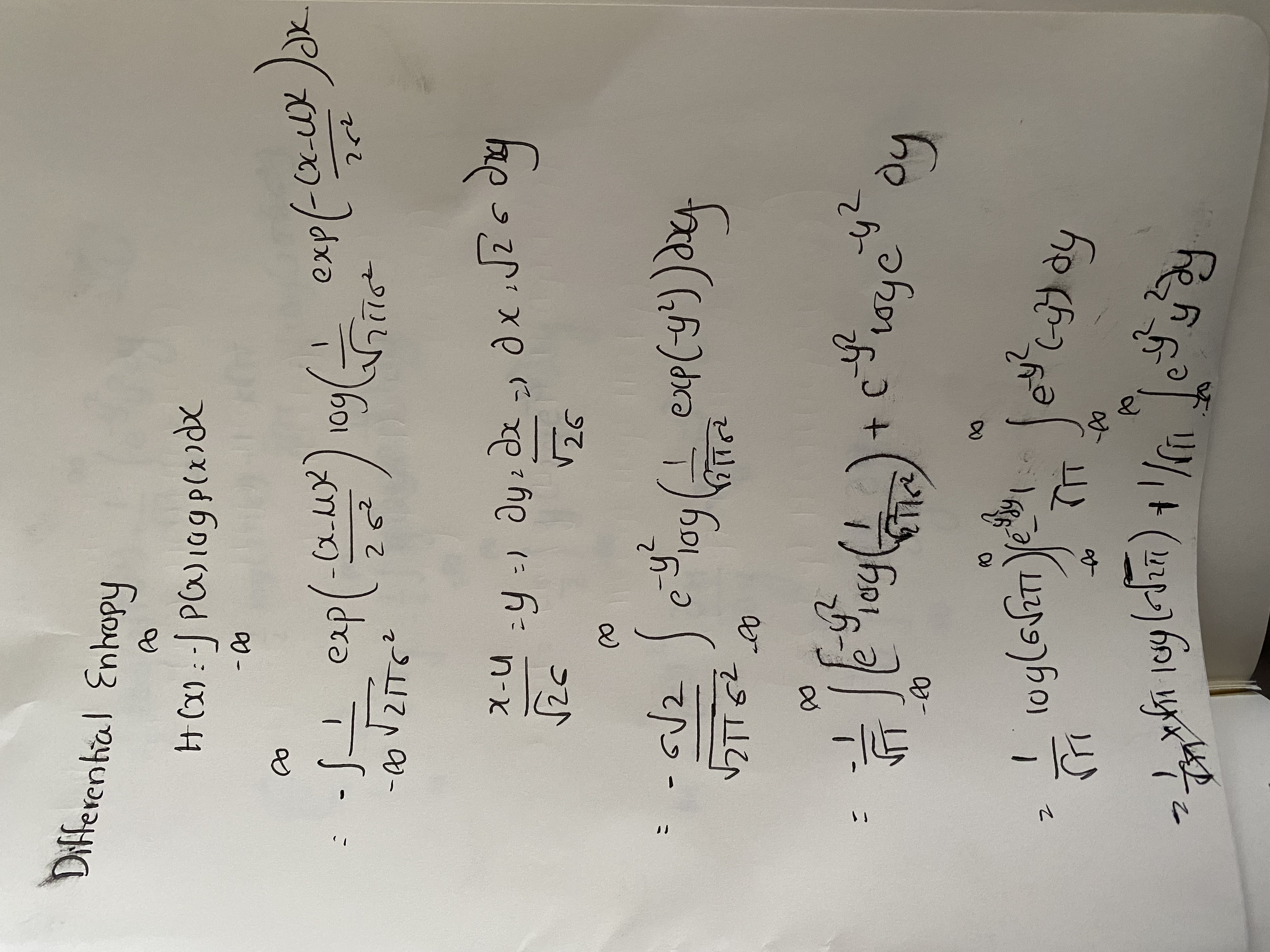

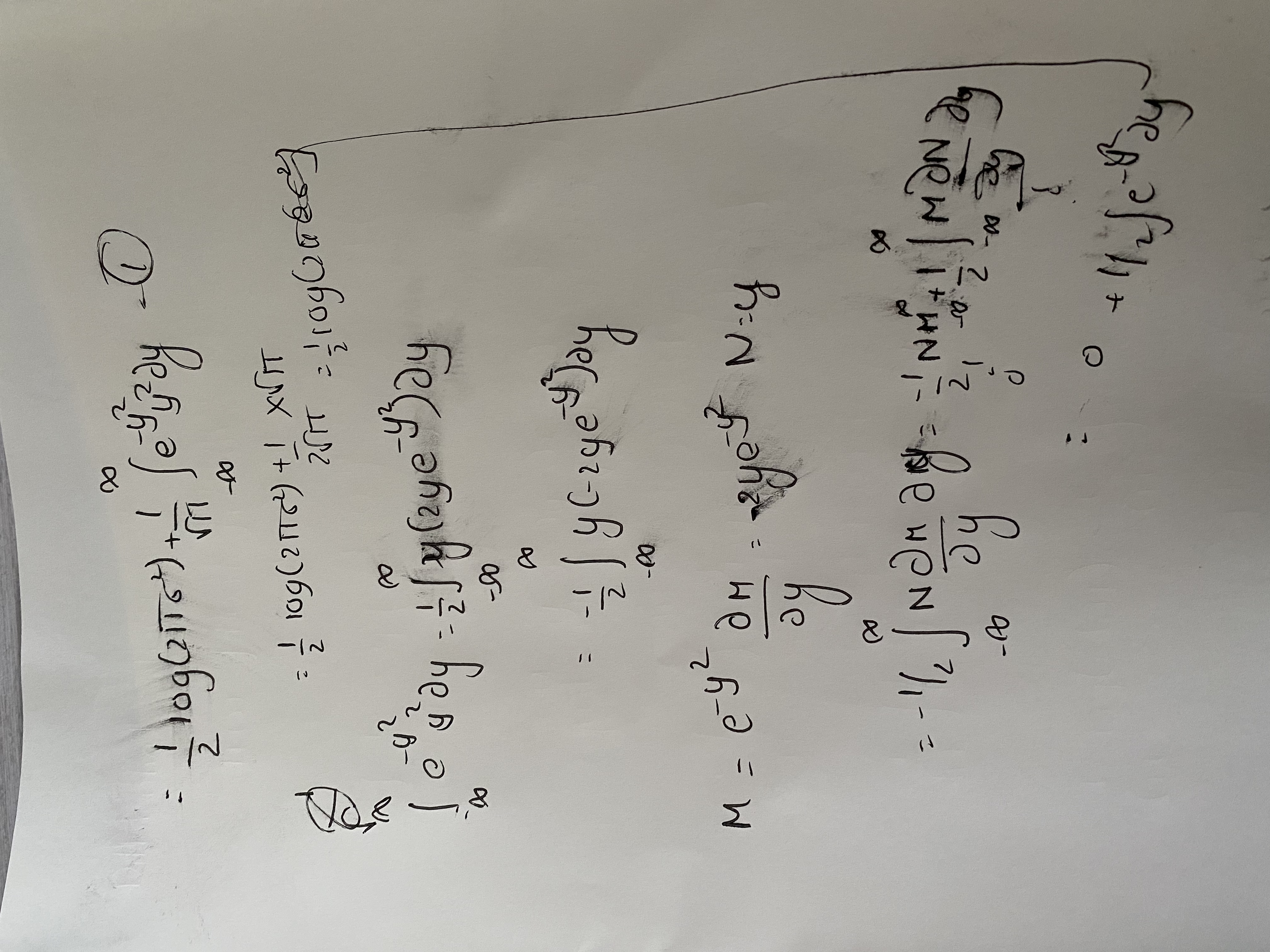
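The additivity of entropy for independent variables can be checked numerically. The sketch below is only an illustration: the marginal distributions `p_x` and `p_y` are made-up values, and `entropy` is a helper defined here, not something from the notes.

```python
import numpy as np

def entropy(p):
    """Shannon entropy in bits of a probability array, with 0*log(0) taken as 0."""
    p = np.asarray(p, dtype=float).ravel()
    nonzero = p[p > 0]
    return float(-np.sum(nonzero * np.log2(nonzero)))

# Hypothetical marginals for two independent variables x and y.
p_x = np.array([0.5, 0.25, 0.25])
p_y = np.array([0.9, 0.1])

# Independence means the joint distribution is the outer product p(x) p(y).
p_xy = np.outer(p_x, p_y)

h_x, h_y, h_xy = entropy(p_x), entropy(p_y), entropy(p_xy)
print("H(x) + H(y) =", h_x + h_y)
print("H(x, y)     =", h_xy)
```

The two printed values should agree up to floating-point rounding.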

4.2

4.3

A binary channel has a small probability $\epsilon$ of making a bit error.

(a) Majority voting means sending each bit repeatedly and decoding to the value that appears most often at the channel output $y$. For example, to send a 1 with five repetitions you send 11111.

If a bit is sent three times, the received words 000, 001, 010, and 100 decode to 0, while 011, 101, 110, and 111 decode to 1. If we send a 0 three times, the decoded bit is wrong only when two of the received bits are 1 or all three are 1; in every other case majority voting ensures that the bit is decoded as 0. The same holds for a 1 by symmetry. So the probability of error is

$$\epsilon_1 = 3\epsilon^2(1 - \epsilon) + \epsilon^3 = 3\epsilon^2 - 2\epsilon^3 \approx 3\epsilon^2$$
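As a sanity check on the counting argument, the sketch below enumerates every pattern of bit flips for a three-fold repetition and sums the probability of the patterns that a majority vote decodes wrongly. The value `eps = 0.01` and the function name `majority_error` are illustrative assumptions.

```python
from itertools import product

def majority_error(eps, n=3):
    """Probability that a majority vote over n independent copies is wrong."""
    total = 0.0
    for flips in product([0, 1], repeat=n):  # 1 marks a flipped bit
        k = sum(flips)
        if k > n // 2:  # more than half the copies are wrong
            total += eps**k * (1 - eps) ** (n - k)
    return total

eps = 0.01  # hypothetical single-bit error probability
exact = majority_error(eps)
closed_form = 3 * eps**2 - 2 * eps**3
print(exact, closed_form, 3 * eps**2)
```

The enumerated value should match $3\epsilon^2 - 2\epsilon^3$, and both should be close to $3\epsilon^2$ when $\epsilon$ is small.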

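(b) Majority voting on top of majority voting

Now each of the three votes is itself the result of a three-bit majority vote, so nine bits are sent in total. When sending 0, the outer vote is wrong when the inner decoded bits are 011, 110, 101, or 111. We can repeat the earlier step with $\epsilon$ replaced by the inner error probability $\epsilon_1 = 3\epsilon^2 - 2\epsilon^3$, so the error probability is now $\epsilon_2 = 3\epsilon_1^2 - 2\epsilon_1^3$. If $\epsilon$ is small then $\epsilon_1 \approx 3\epsilon^2$, so the error is now $\epsilon_2 \approx 3(3\epsilon^2)^2 = 27\epsilon^4$.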

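(c) If we keep doing this $N$ times, $3^N$ bits are needed and each level maps the error probability $\epsilon_k$ to $\epsilon_{k+1} \approx 3\epsilon_k^2$. The error probability therefore becomes a multiple of $\epsilon^{2^N}$: $\epsilon_N \approx 3^{2^N - 1}\epsilon^{2^N} = (3\epsilon)^{2^N}/3$.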
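The nested scheme can be explored by iterating the three-way vote. The sketch below assumes that each level applies the single-vote error $3\epsilon^2 - 2\epsilon^3$ to the output of the level beneath it, and compares the result with the small-$\epsilon$ approximation $3^{2^N - 1}\epsilon^{2^N}$. The starting value `eps = 1e-3` and the function names are illustrative.

```python
def vote(eps):
    """Error probability after one three-way majority vote."""
    return 3 * eps**2 - 2 * eps**3

def nested_error(eps, levels):
    """Error probability after `levels` nested three-way majority votes."""
    for _ in range(levels):
        eps = vote(eps)
    return eps

eps = 1e-3  # hypothetical single-bit error probability
for n in range(1, 4):
    exact = nested_error(eps, n)
    approx = 3 ** (2**n - 1) * eps ** (2**n)
    print(f"levels={n}  bits={3**n}  exact={exact:.4e}  approx={approx:.4e}")
```

The number of bits grows as $3^N$, while the exponent of $\epsilon$ doubles with each level.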

4.4

4.5

(a) Going back to the earlier class, the SNR in dB is $10\log_{10}(S/N)$, so 20 dB corresponds to a power ratio of 100. Going back to 4.29, the channel capacity is $C = 3300 \log_2(1 + 100) \approx 21972$ bits/s, or about 22 kbit/s.

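(b) Substitute the target capacity $C$ in Gbit/s into 4.29 with $\Delta f = 3300$ Hz, solve for the SNR, and convert to dB:

$$\frac{S}{N} = 2^{C/\Delta f} - 1, \qquad \text{in dB} = 10\log_{10}\left(2^{C/\Delta f} - 1\right)$$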
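Both parts can be reproduced with a few lines of Python. This is a sketch under assumptions: the notes do not state the target rate for part (b), so 1 Gbit/s is used here as an assumed value, and the function names are hypothetical. Because $2^{C/\Delta f}$ overflows a floating-point number for such a large ratio, the dB value is computed in logarithmic form.

```python
import math

def capacity_bits_per_s(bandwidth_hz, snr_db):
    """Capacity C = bandwidth * log2(1 + S/N) for an SNR given in dB."""
    snr = 10 ** (snr_db / 10)  # convert dB to a power ratio
    return bandwidth_hz * math.log2(1 + snr)

def required_snr_db(bandwidth_hz, capacity):
    """SNR in dB needed for a given capacity, evaluated without overflow."""
    exponent = capacity / bandwidth_hz  # must be positive
    # 10*log10(2**e - 1) = 10*(e*log10(2) + log10(1 - 2**-e))
    return 10 * (exponent * math.log10(2) + math.log10(1 - 2.0 ** -exponent))

print(capacity_bits_per_s(3300, 20))  # part (a)
print(required_snr_db(3300, 1e9))     # part (b), assuming C = 1 Gbit/s
```

For a large ratio $C/\Delta f$ the correction term is negligible and the required SNR in dB is close to $10\,(C/\Delta f)\log_{10} 2$.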

4.6

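Making an unbiased estimator of the mean $x_0$. The samples $x_1, \ldots, x_n$ are drawn from a Gaussian distribution with variance $\sigma^2$ and mean $x_0$.

If $f$ is an unbiased estimator then $E[f] = x_0$. For the sample mean,

$$E[f] = E\left[\frac{1}{n}\sum_{i=1}^n x_i\right] = \frac{1}{n}\sum_{i=1}^n E[x_i] = \frac{1}{n}\, n x_0 = x_0$$

Therefore $f$ is an unbiased estimator of $x_0$.

The Cramér-Rao lower bound gives the minimum variance of an unbiased estimator, which in our case is $f(x_1, x_2, \ldots, x_n) = \frac{1}{n}\sum_{i=1}^n x_i$.

The Fisher information from 4.32 is the variance of the score:

$$J = E\left[\left(\frac{\partial}{\partial x_0}\log p(x_1, \ldots, x_n)\right)^2\right] = E\left[\left(\sum_{i=1}^n \frac{x_i - x_0}{\sigma^2}\right)^2\right] = \frac{n\sigma^2}{\sigma^4} = \frac{n}{\sigma^2}$$

From 4.34, $\sigma_f^2 \geq 1/J = \sigma^2/n$.

The variance of the estimator is

$$E[f^2] - E[f]^2 = \frac{1}{n^2}\sum_{i=1}^n \mathrm{Var}(x_i) + \text{covariance terms} = \frac{n}{n^2}\sigma^2 + 0 = \frac{\sigma^2}{n}$$

The covariance terms vanish because the samples are independent, so the estimator attains the Cramér-Rao bound.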
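A quick Monte Carlo experiment can illustrate both claims: that the sample mean is unbiased, and that its variance sits at $\sigma^2/n$. The values of `x0`, `sigma`, `n`, and `trials` below are arbitrary choices for illustration, not numbers from the problem.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical parameters: true mean, standard deviation, sample size, repetitions.
x0, sigma, n, trials = 2.0, 1.5, 10, 200_000

# Each row is one experiment of n independent Gaussian samples.
samples = rng.normal(loc=x0, scale=sigma, size=(trials, n))
estimates = samples.mean(axis=1)  # the estimator f for each experiment

empirical_mean = estimates.mean()
empirical_var = estimates.var()
cramer_rao_bound = sigma**2 / n   # 1/J with J = n / sigma^2

print("mean of estimator:    ", empirical_mean)
print("variance of estimator:", empirical_var)
print("Cramer-Rao bound:     ", cramer_rao_bound)
```

With enough trials, the mean of the estimates should settle near `x0` and their variance near `sigma**2 / n`.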

Link - https://www.notion.so/alienrobotfromthefutures/Final-Project-65ca8d3338ab4ee48e30fa6b5e1fca93