Speech to Reality : Making Words Reality

Speech to Text to Mesh to Voxel to Toolpath to Assembly to Reality

Project Description

Speech-to-text-to-mesh-to-voxel-to-toolpath-robotic assembly

The ability to turn spoken words into physical objects could represent a significant shift in how we interact with the material world. In this framework, spoken words are more than just expressions of thought; words have the power to create and change reality.

Imagine saying 'I want a chair', and you get a chair in less than 5 minutes. The ease of using speech as an input makes this technology highly accessible, even to those without technical expertise in 3D modeling or fabrication. It democratizes the process of design and manufacturing, allowing more people to customize and create objects according to their needs or preferences. By producing items on demand and reusing voxel modules, we can reduce waste associated with mass production and inventory storage.

This project is in collabroation with Se Hwan Jeon. Special thanks to Neil Gren, Miana Smith and Center for Bits and Atoms for the lab space and the material. I am grateful for the opportunity to work with them.

The ability to turn spoken words into physical objects could represent a significant shift in how we interact with the material world. In this framework, spoken words are more than just expressions of thought; words have the power to create and change reality.

Imagine saying 'I want a chair', and you get a chair in less than 5 minutes. The ease of using speech as an input makes this technology highly accessible, even to those without technical expertise in 3D modeling or fabrication. It democratizes the process of design and manufacturing, allowing more people to customize and create objects according to their needs or preferences. By producing items on demand and reusing voxel modules, we can reduce waste associated with mass production and inventory storage.

This project is in collabroation with Se Hwan Jeon. Special thanks to Neil Gren, Miana Smith and Center for Bits and Atoms for the lab space and the material. I am grateful for the opportunity to work with them.

Workflow

Steps

Examples

Operating the Robot

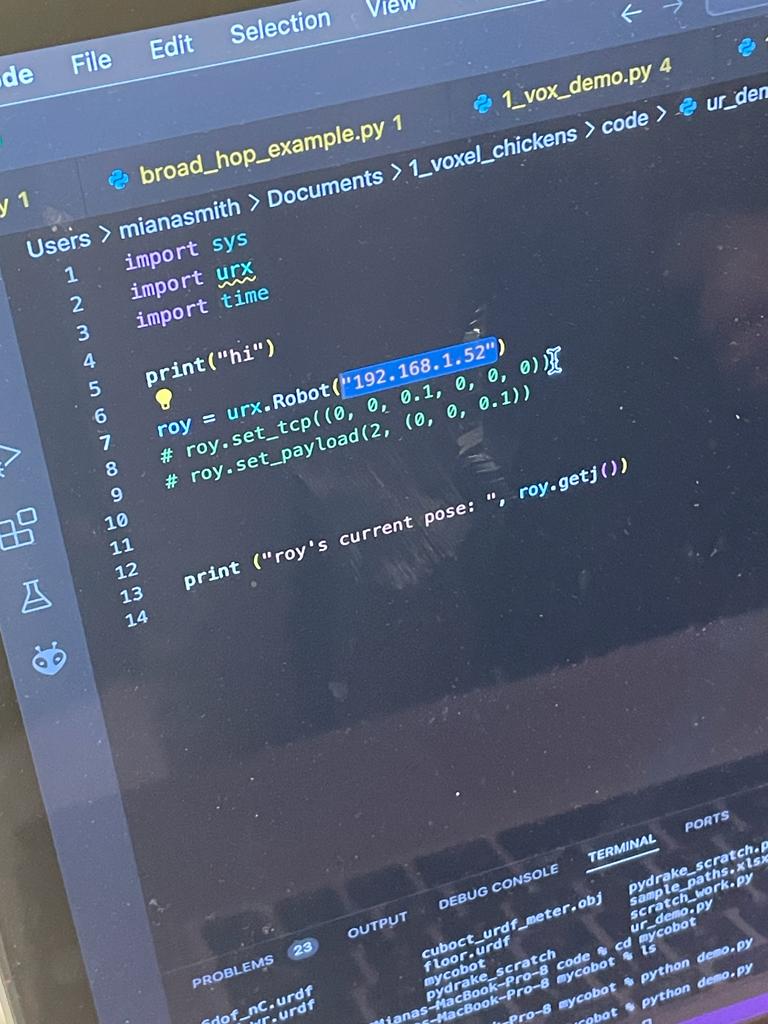

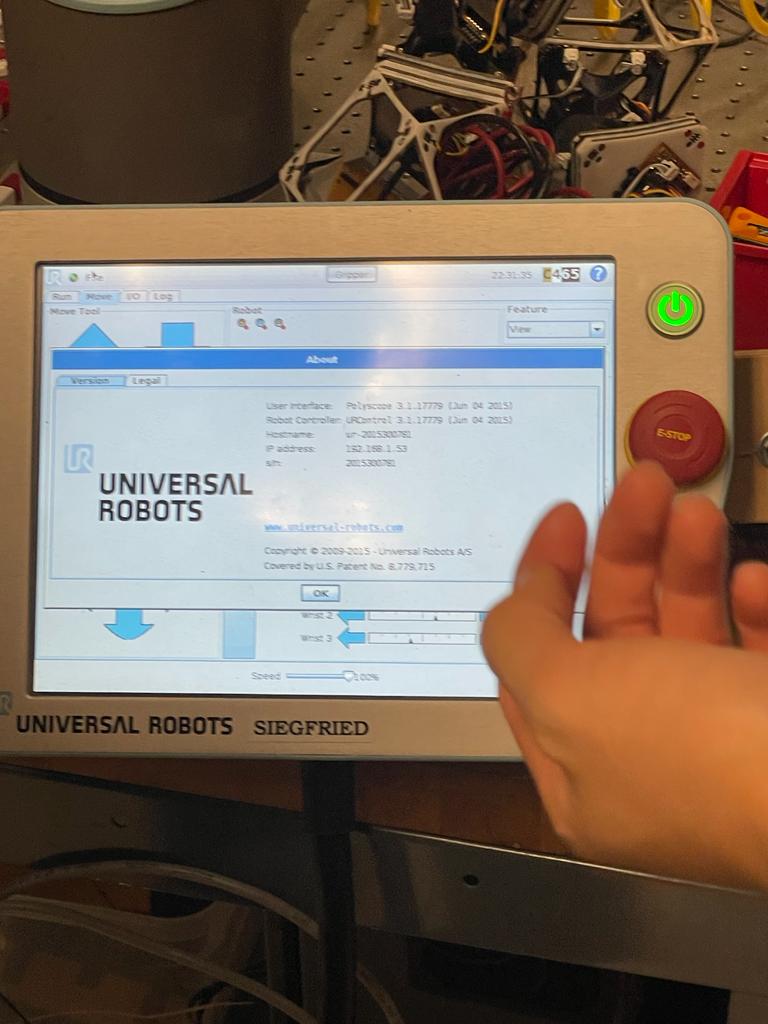

Robot IP address

Robot IP address

Menu / Controls

Menu / Controls

Speech to Text - Accessing Microphone and Speech Detection

Code

Code

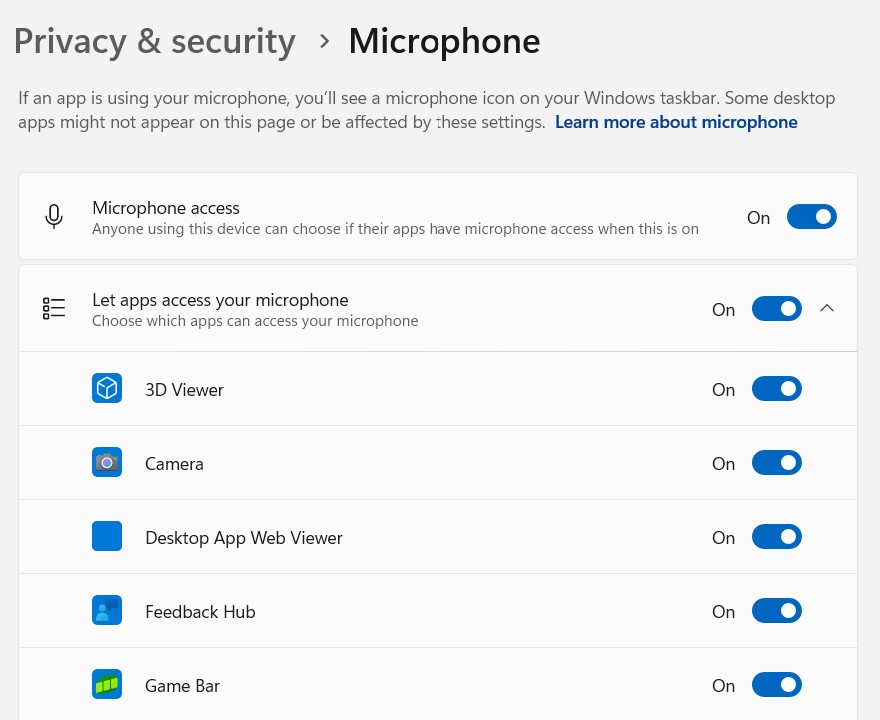

Make sure microphone is enadbled

Make sure microphone is enadbled

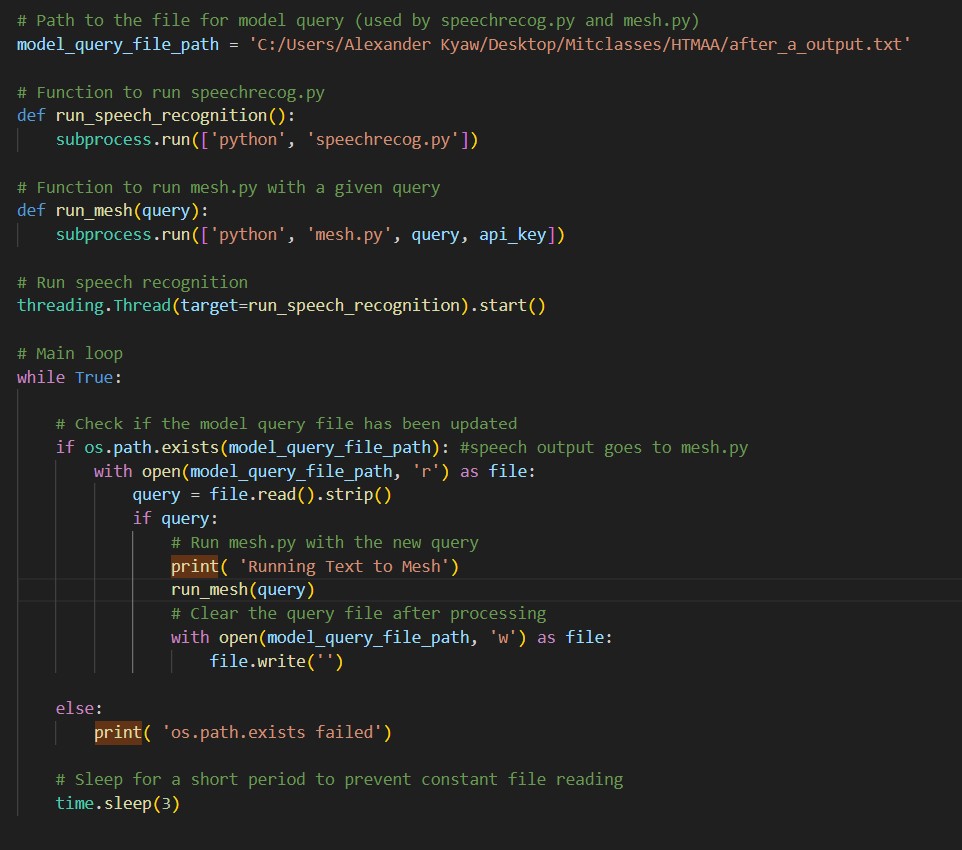

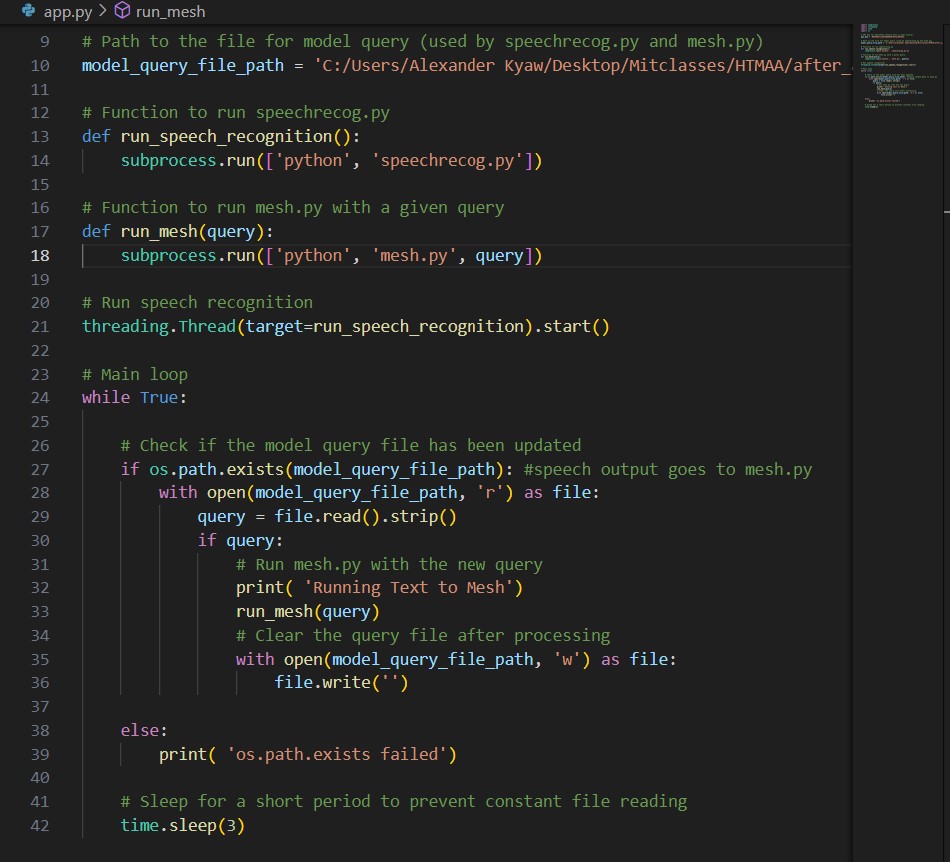

Text to Mesh - Command Line Interface / app.py

Command line interface

Command line interface

Generate voxels based on the size and location.

Generate voxels based on the size and location.

.

. Code for the Speech recogntion .

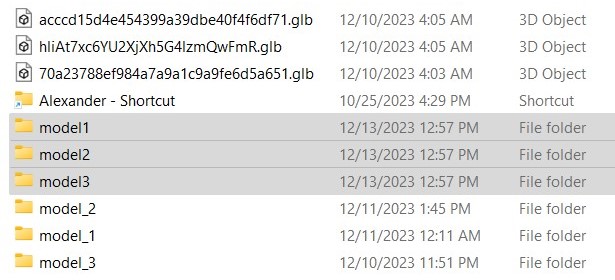

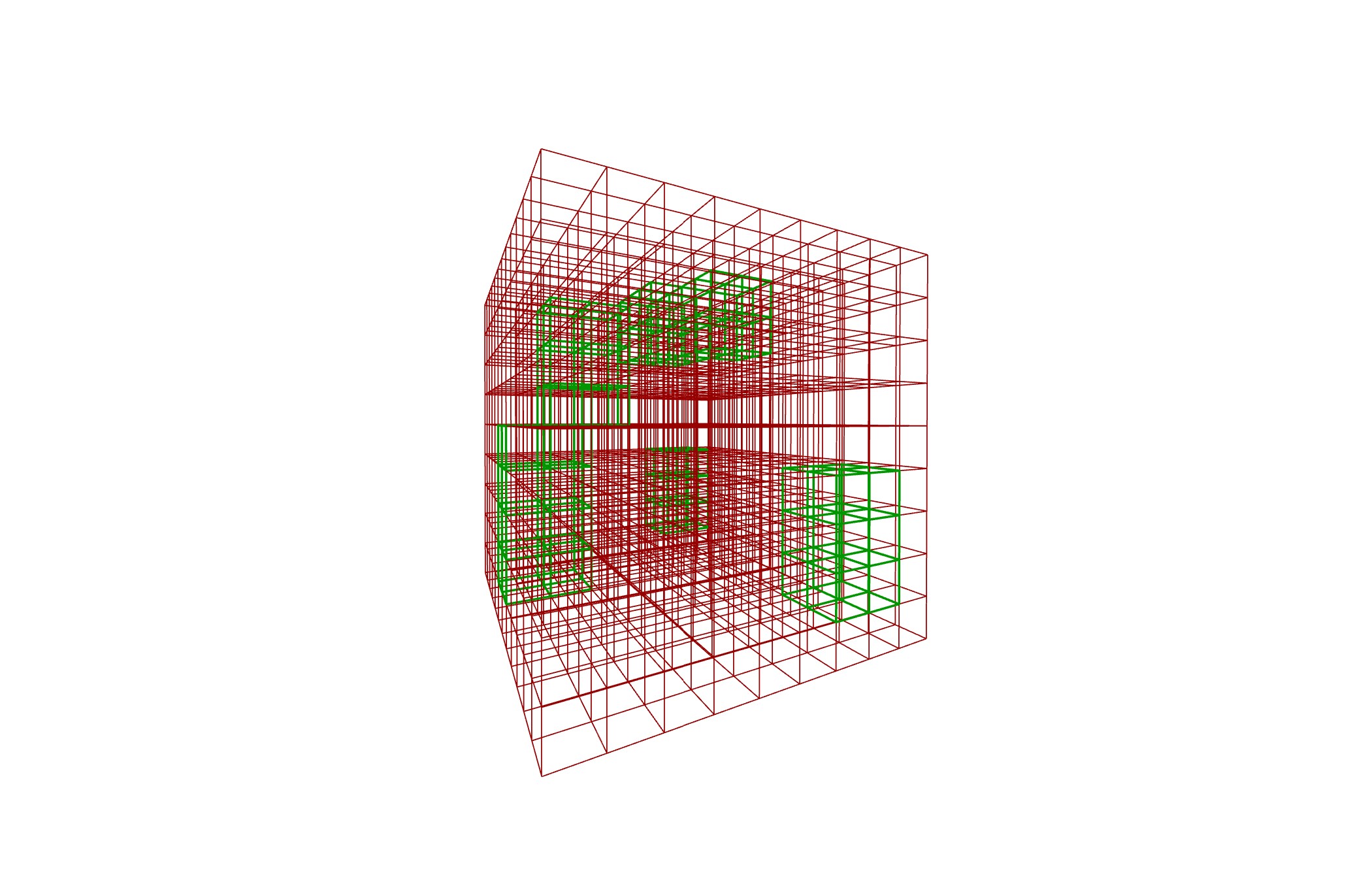

Voxelization - Informed Sorting / Assembly Sequence

Subdivisions based on voxel dimensions

Subdivisions based on voxel dimensions

Voxel Representation

Voxel Representation

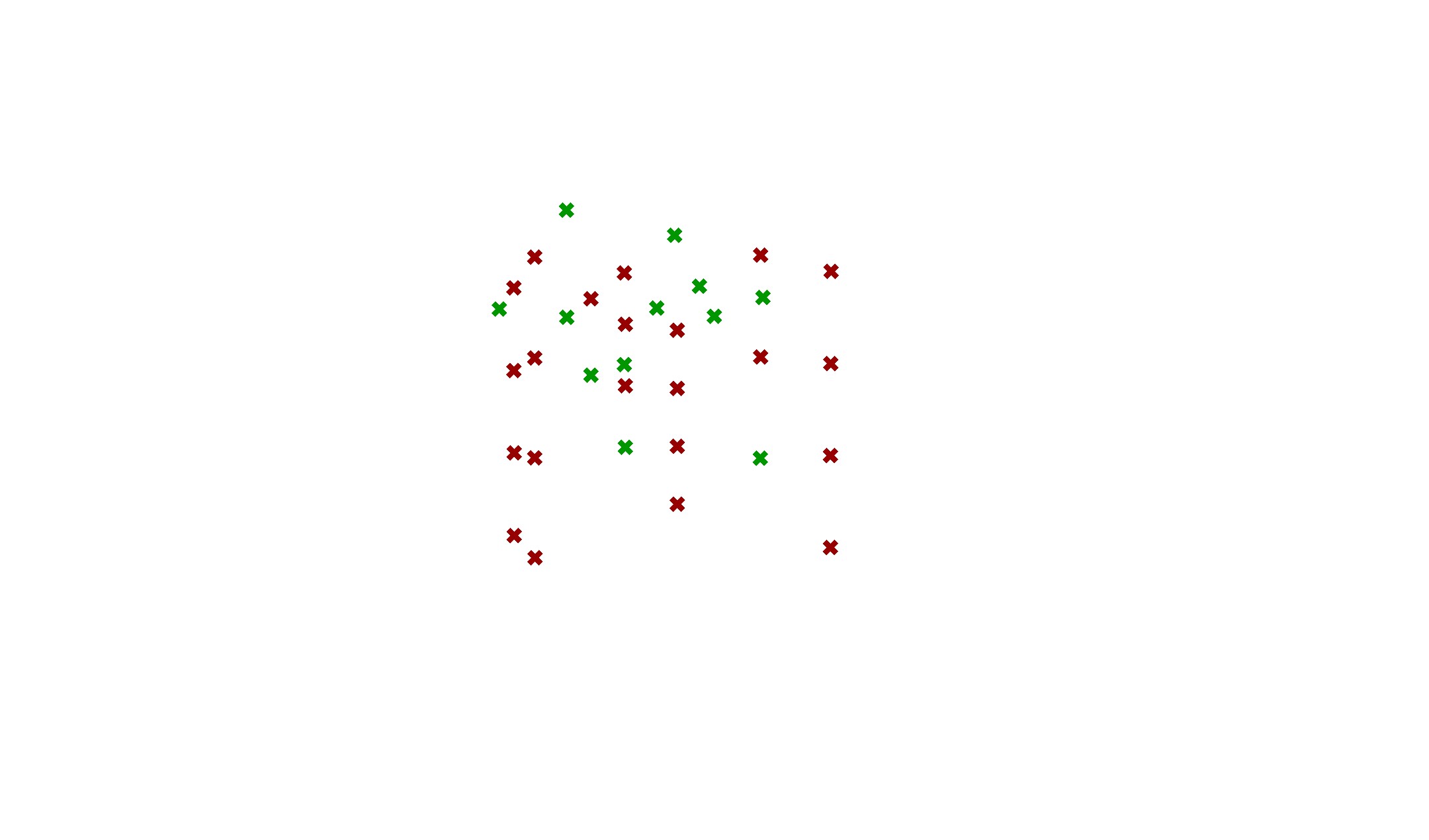

Red = Overhanging Voxels. These voxels are assembled last

Red = Overhanging Voxels. These voxels are assembled last

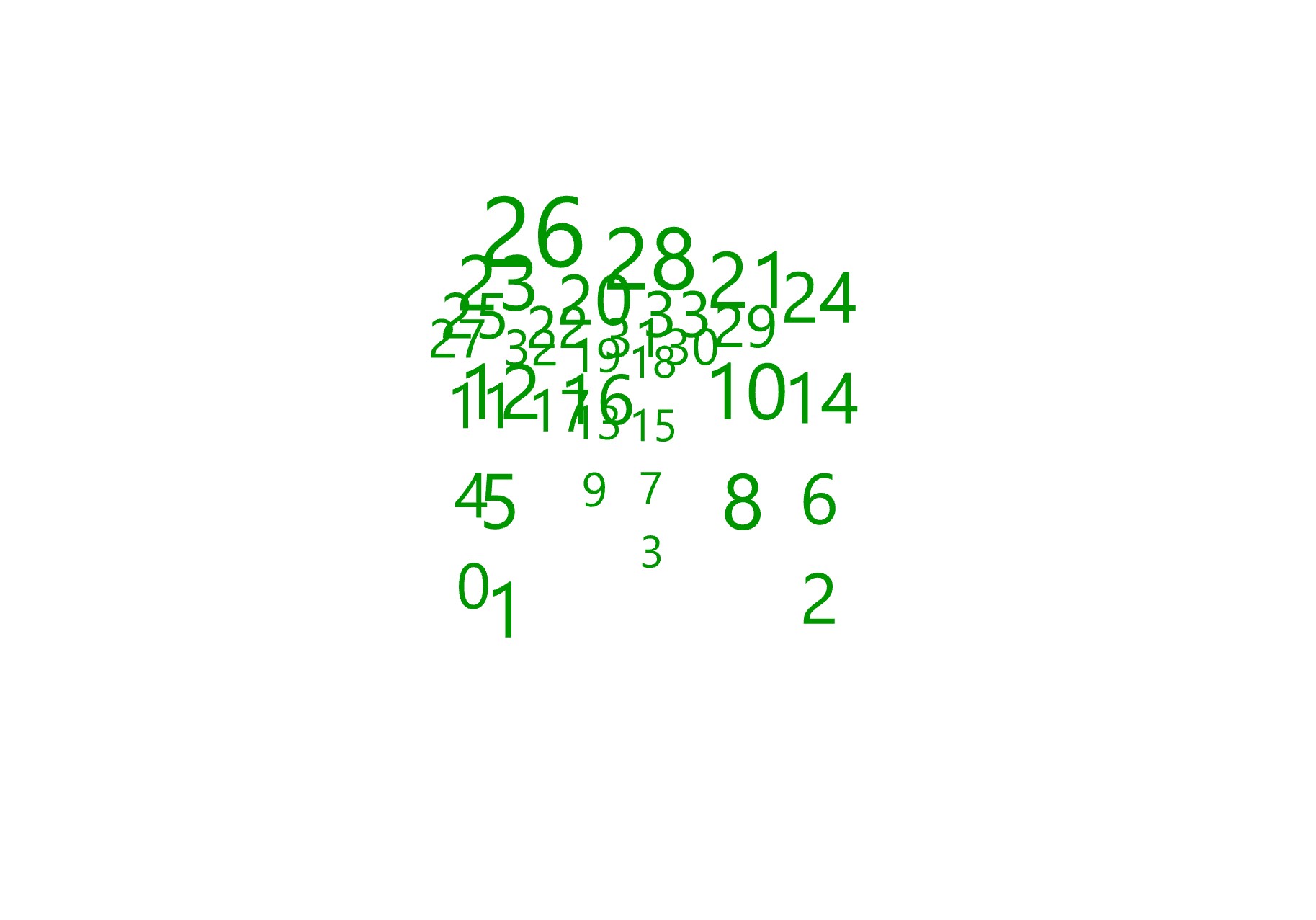

Labeled based on assembly order - Accounting for Overhangin Voxels

Labeled based on assembly order - Accounting for Overhangin Voxels

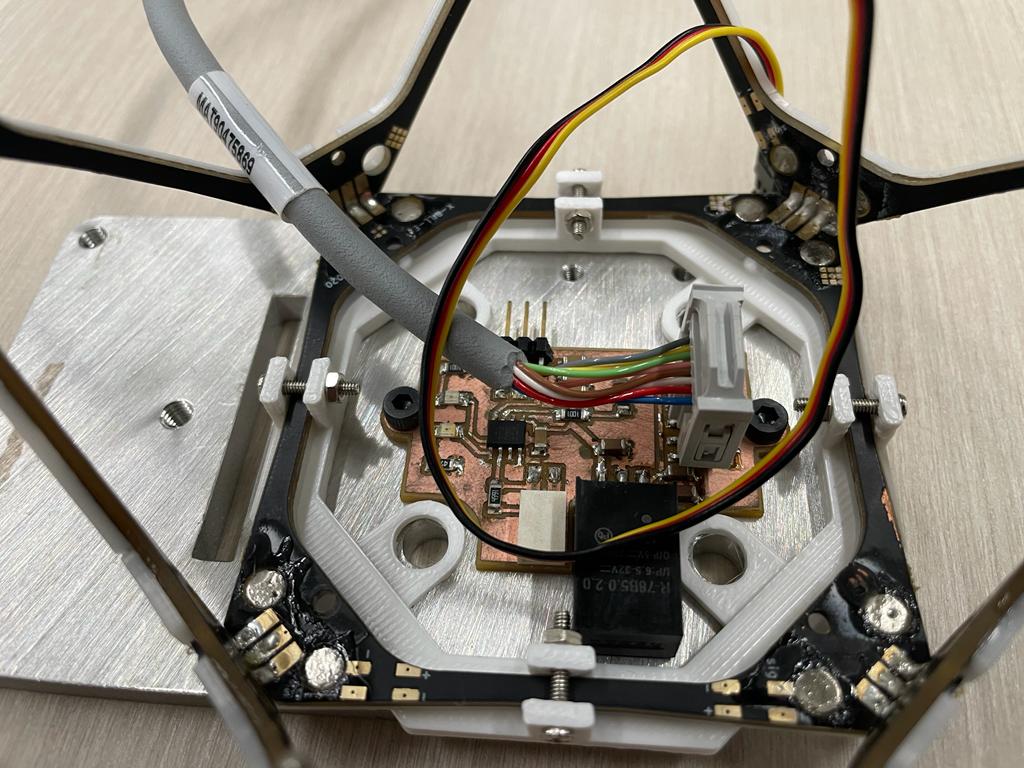

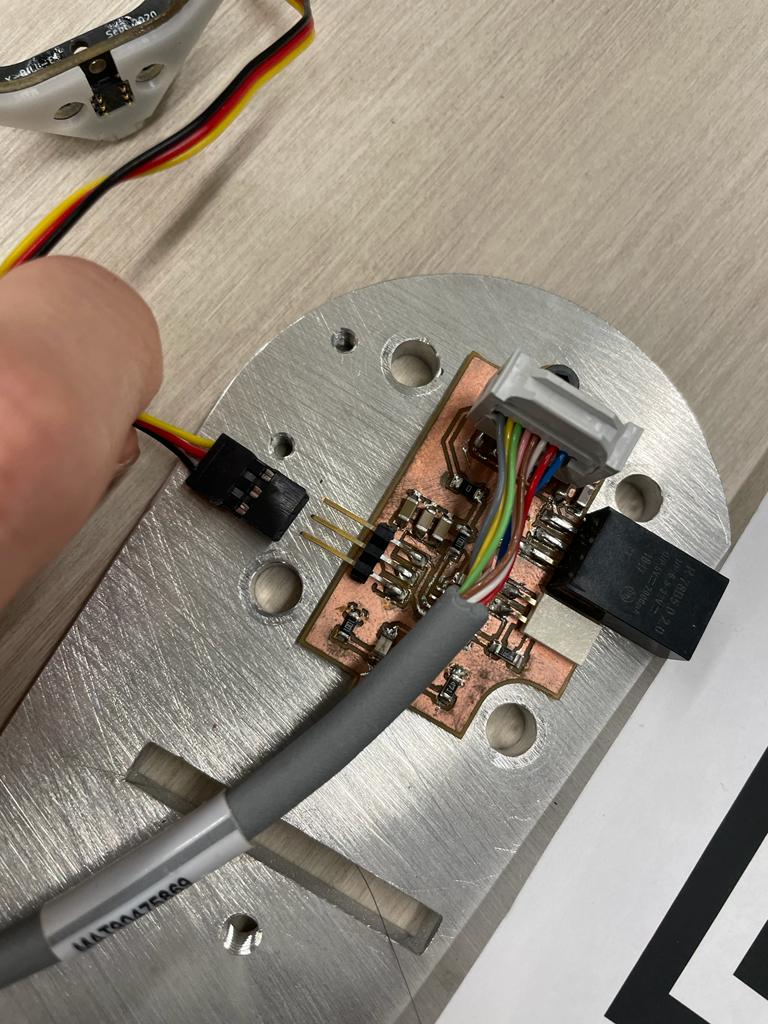

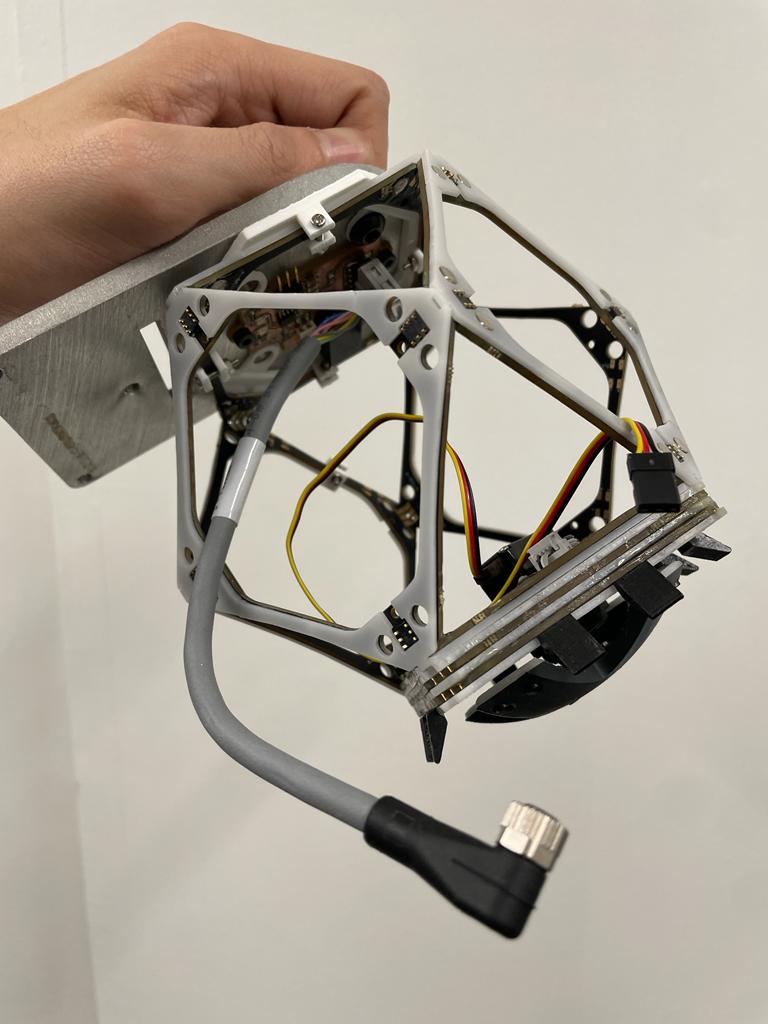

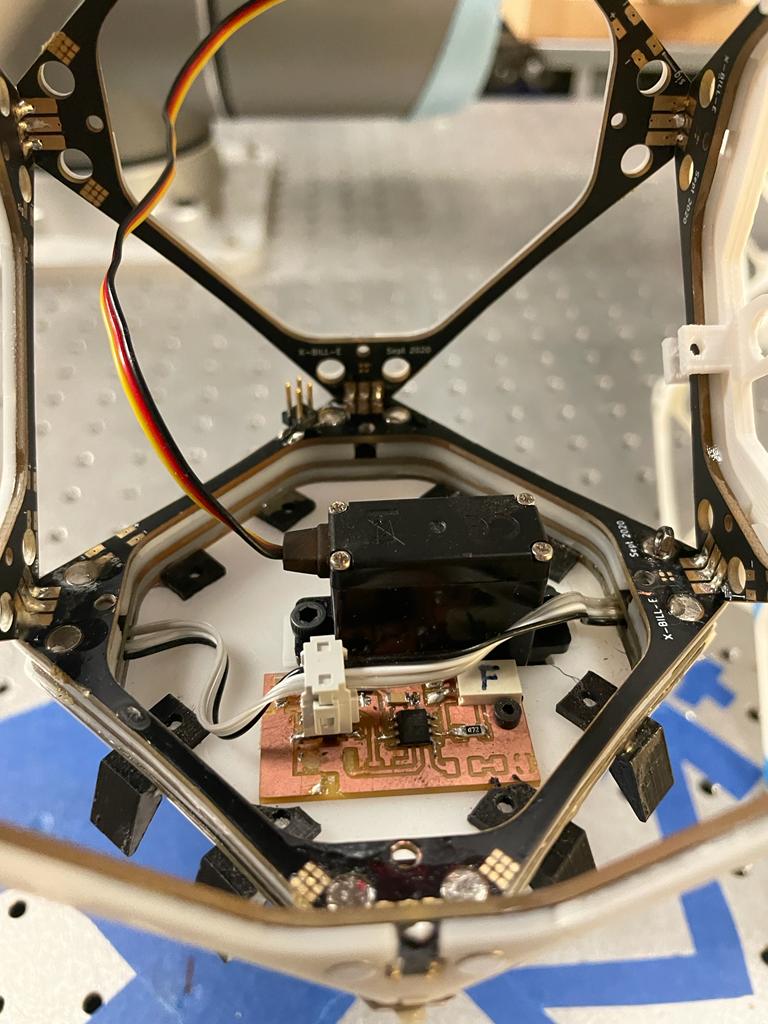

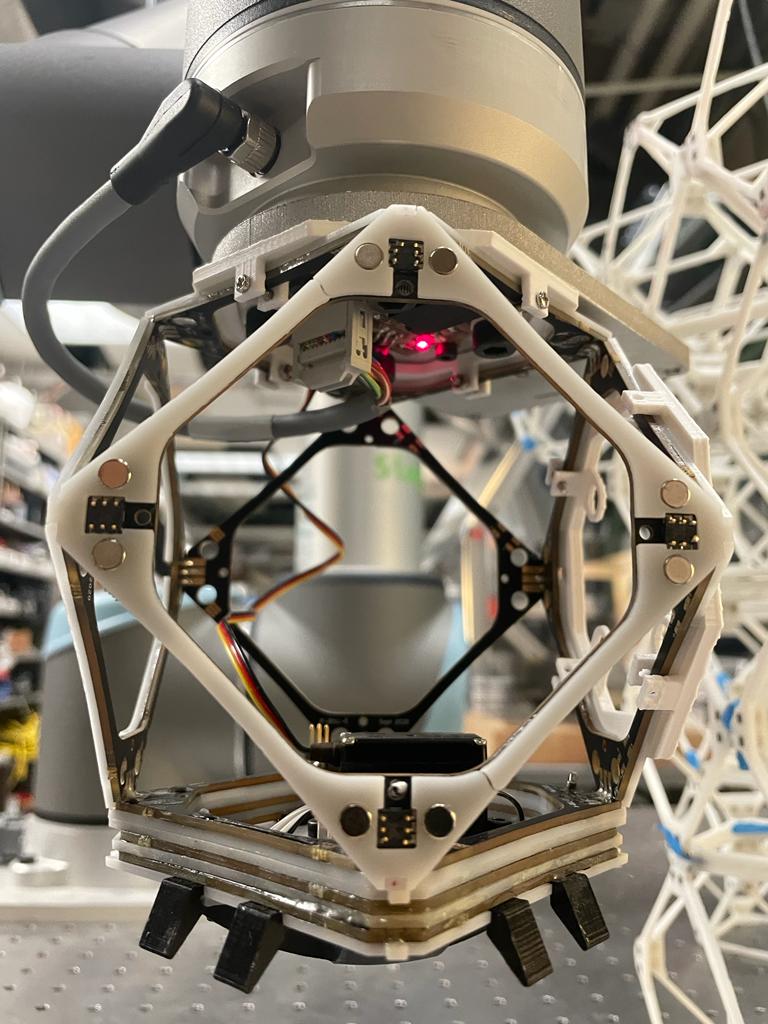

End Effector Fabrication

3D printing

3D printing

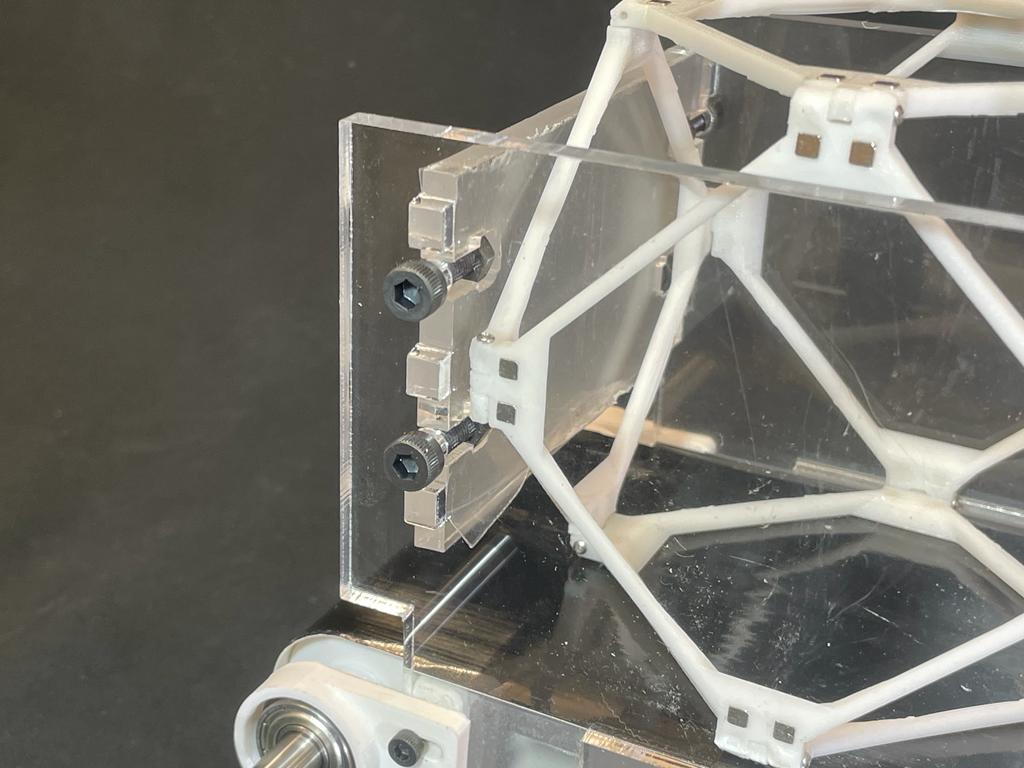

Connection to robot plate

Connection to robot plate

Connection to electronics

Connection to electronics

Attached

Attached

End Effector Connection

.

.

Assembly - Finding Origin

.

Assembly Full Documentation

.

Assembly

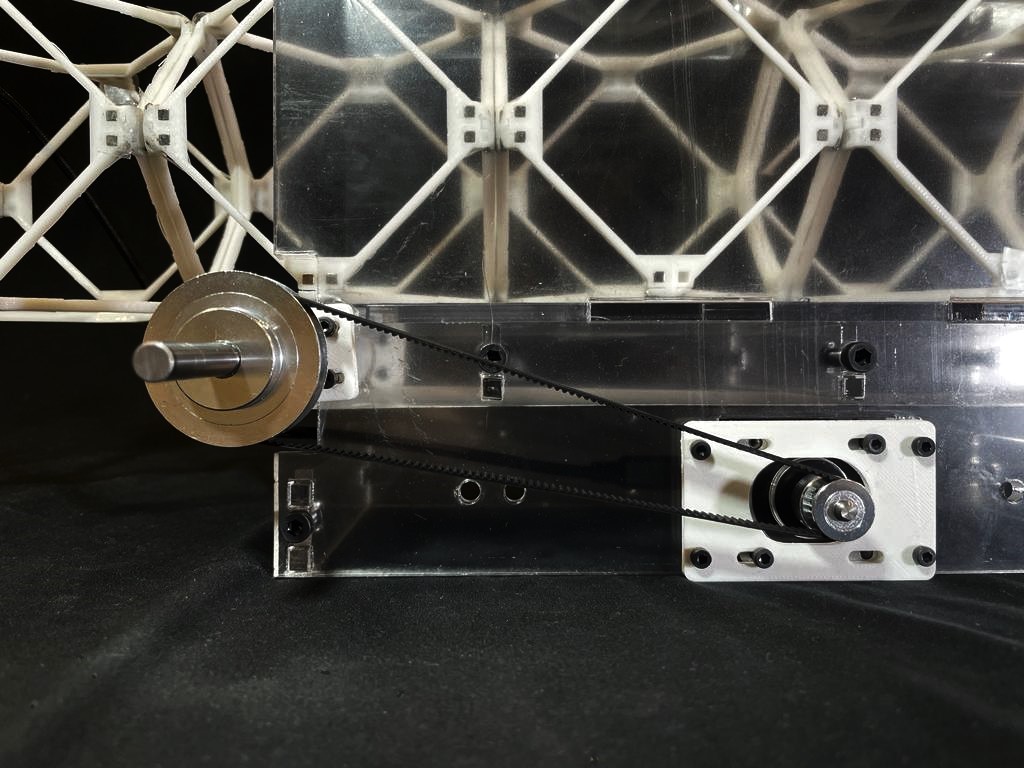

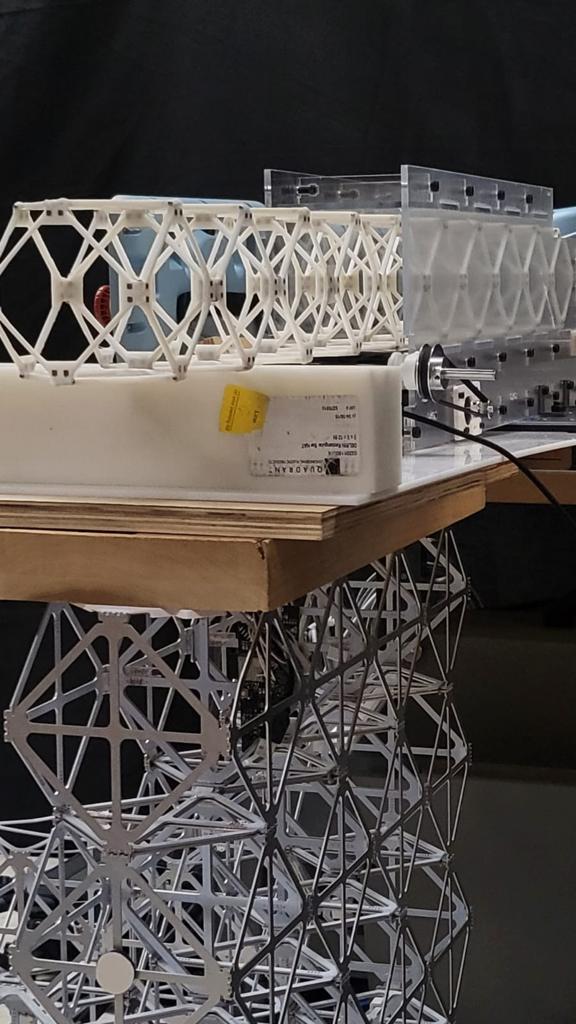

Conveyor Belt

Making a Conveyor Belt for the Voxels

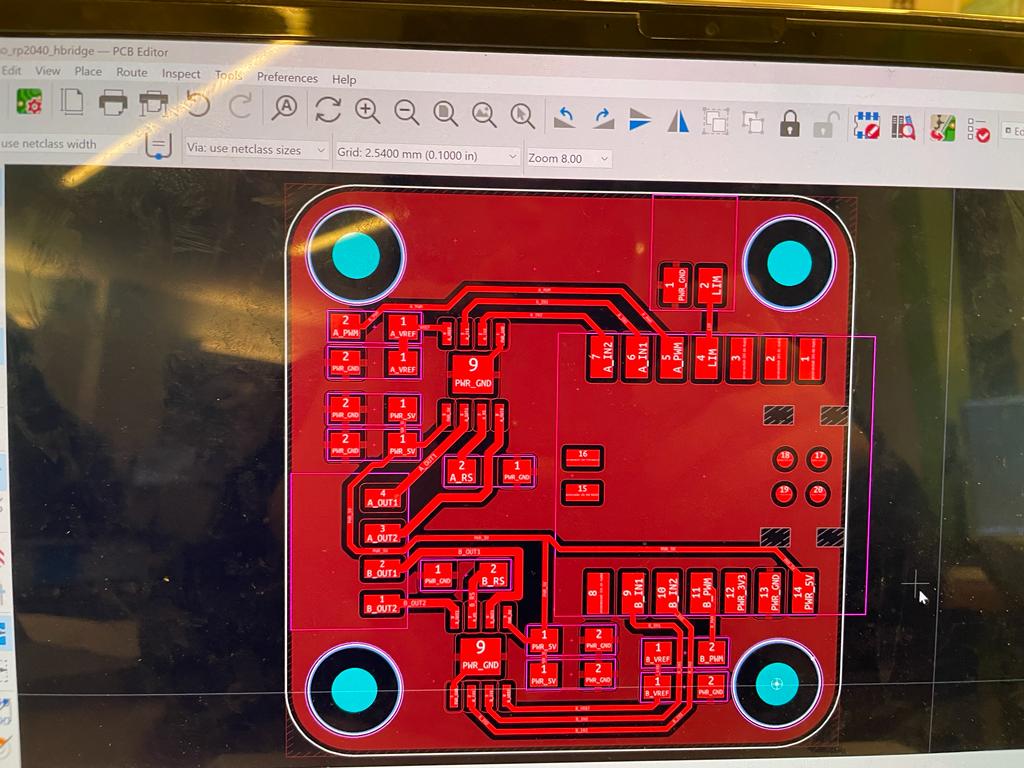

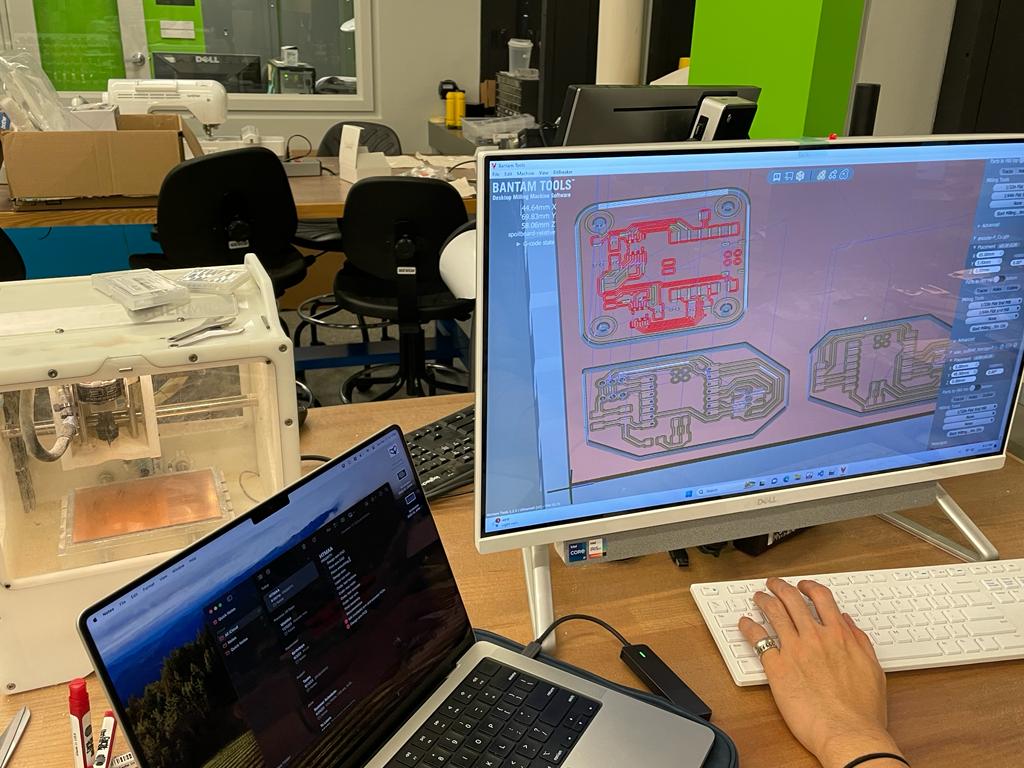

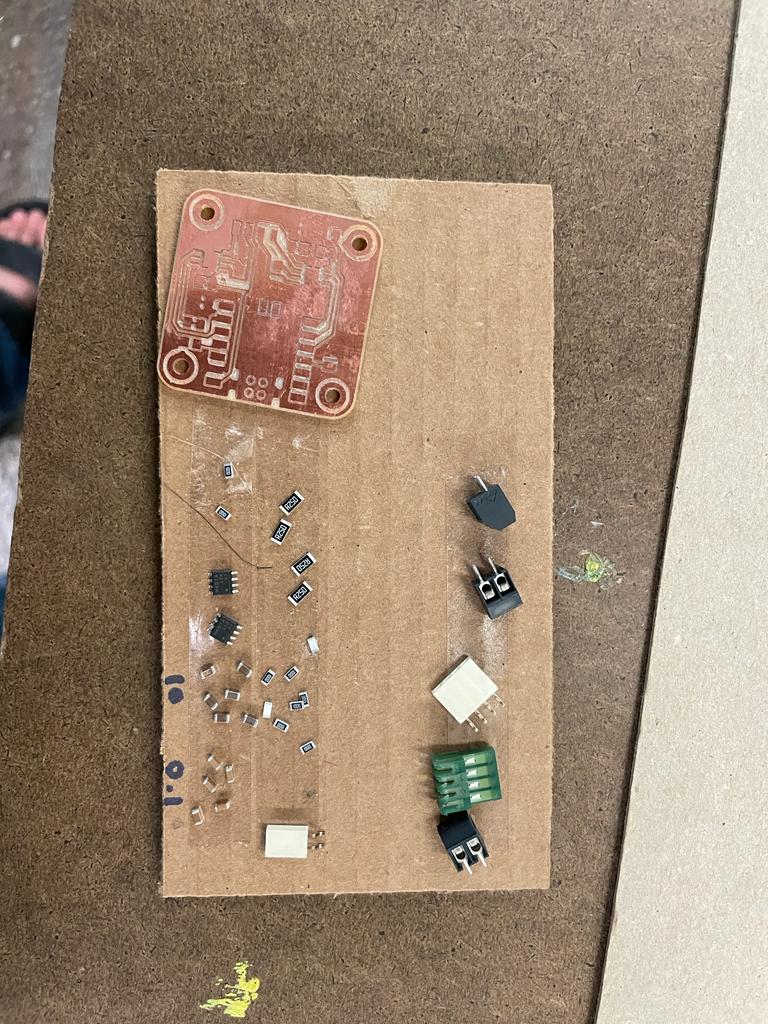

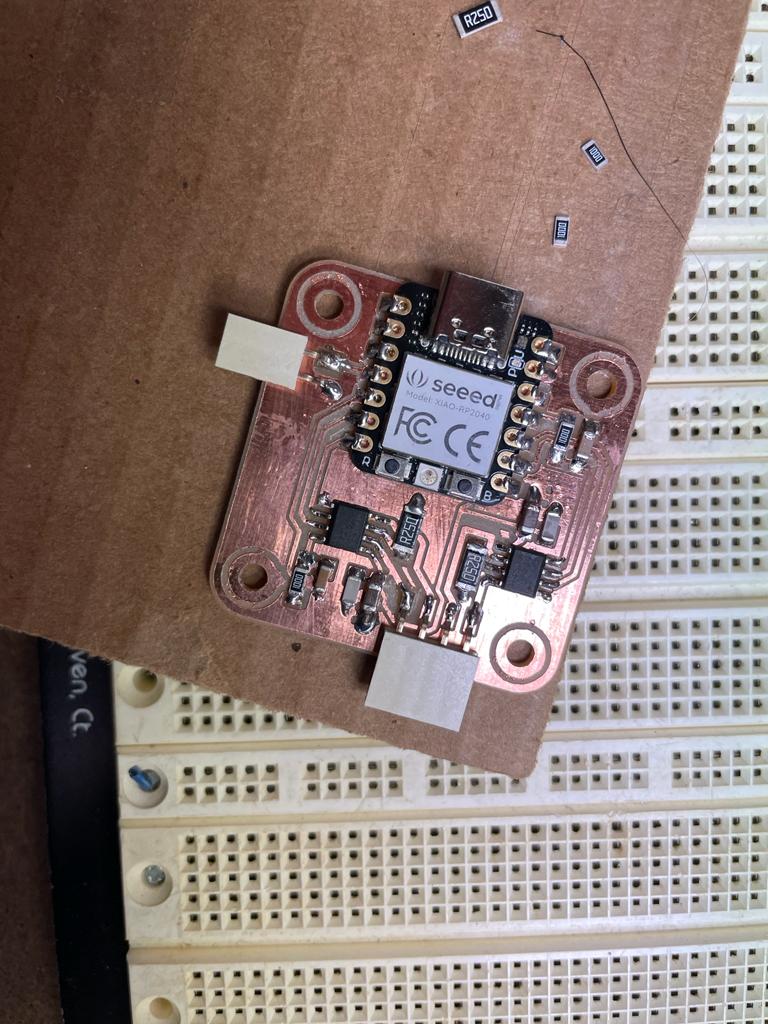

PCB Milling in KiCAD

KiCad Design - help from Quentin

KiCad Design - help from Quentin

SVG output

SVG output

PCB Toolpath

PCB Toolpath

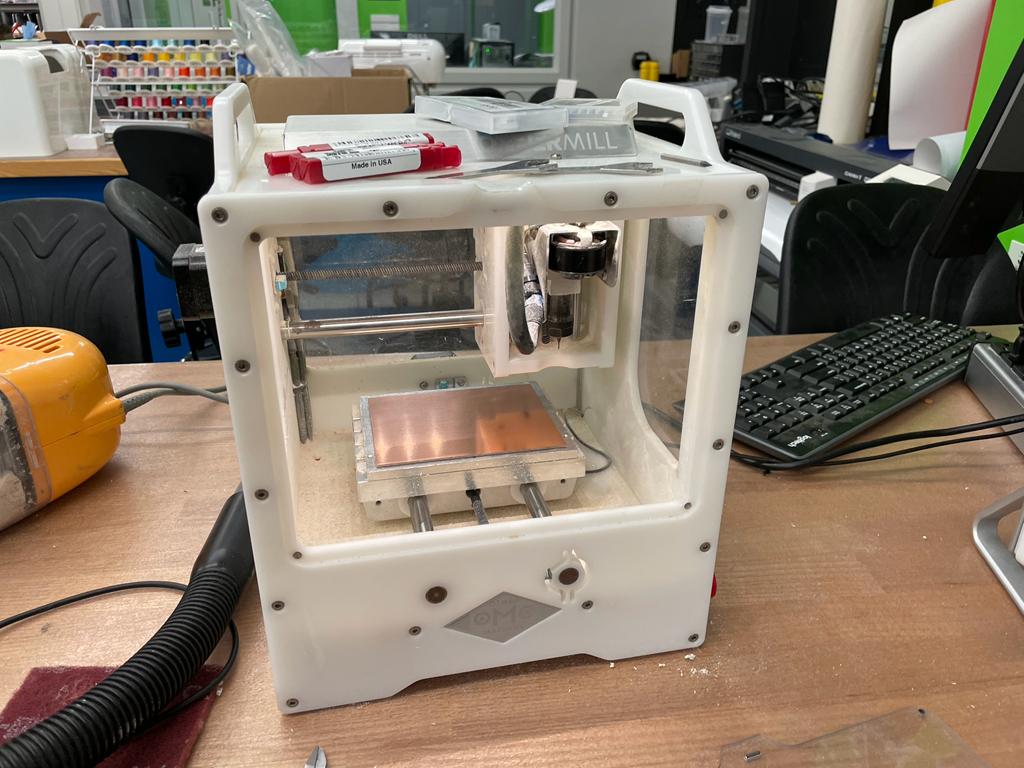

Actual milling

Actual milling

Test Conveyor Machine

Voxels + conveyor belt

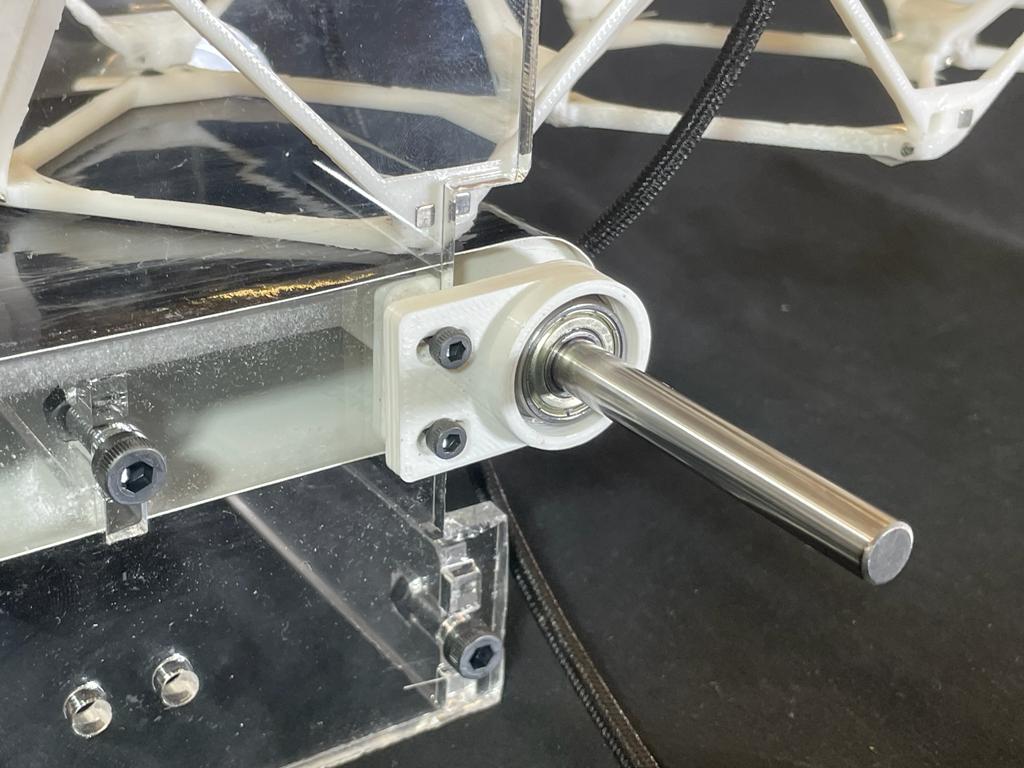

Mechanism Design

Liner Shaft Ball Bearing

Liner Shaft Ball Bearing

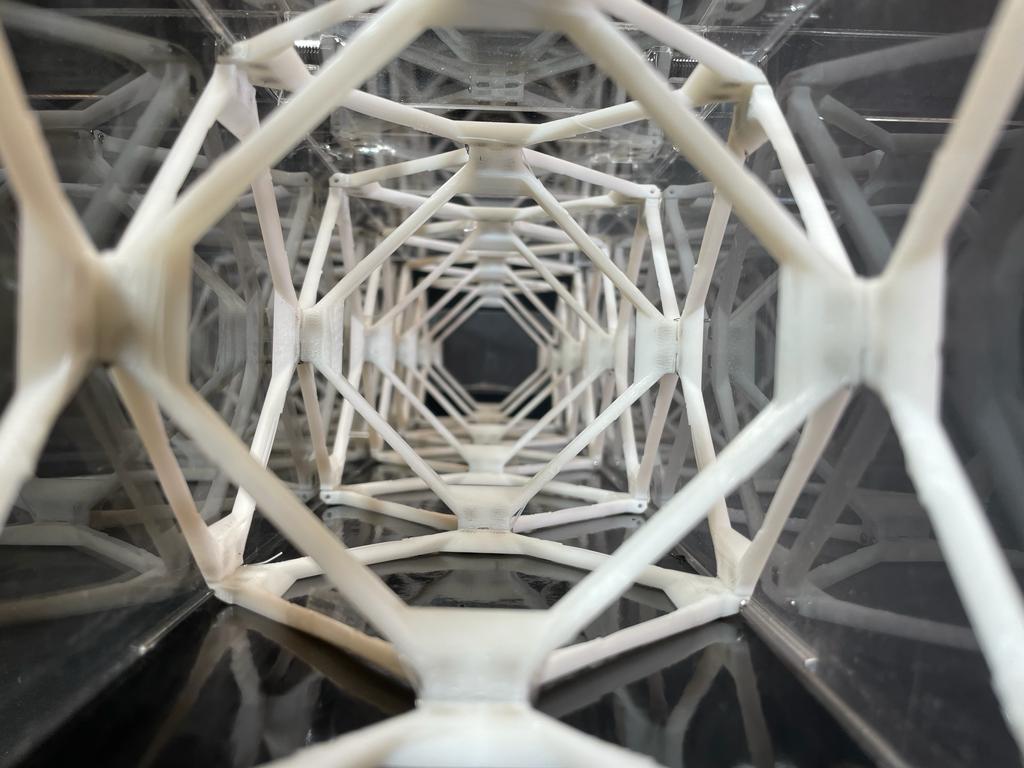

Voxel Container

Voxel Container

Pulley system

Pulley system

Voxels in Container

Voxels in Container

Demo Day

Documentation

PCB Soldering

All the parts

All the parts

.

.

Coding in Micropython

This is similar to the code fromn machine week

import machine

import time

def init():

global i_m

val_A, val_B = lookup_AB[i_m]

pin_A1.value(val_A)

pin_A2.value(not val_A)

pin_B1.value(val_B)

pin_B2.value(not val_B)

def move(steps, dt_ms=2):

global i_m

n_steps = abs(steps)

direction = steps >= 0

delta = 1 if direction else -1

for i in range(n_steps):

i_m = (i_m+delta) % 4

val_A, val_B = lookup_AB[i_m]

pin_A1.value(val_A)

pin_A2.value(not val_A)

pin_B1.value(val_B)

pin_B2.value(not val_B)

time.sleep_ms(dt_ms)

pin_A1 = machine.Pin(6, machine.Pin.OUT)

pin_A2 = machine.Pin(7, machine.Pin.OUT)

pin_B1 = machine.Pin(28, machine.Pin.OUT)

pin_B2 = machine.Pin(4, machine.Pin.OUT)

pin_A_pwm = machine.Pin(27, machine.Pin.OUT)

pin_B_pwm = machine.Pin(29, machine.Pin.OUT)

# duty 0-65535

pwm_A = machine.PWM(pin_A_pwm, freq=5000000, duty_u16=19660)

pwm_B = machine.PWM(pin_B_pwm, freq=5000000, duty_u16=19660)

lookup_AB = [

[1, 0],

[1, 1],

[0, 1],

[0, 0]

]

i_m = 0

System Integration - Resetting the X-Y-Z of the voxel source coordinates

Conveyor belt is mounted. All coordinates have to be set to fit this location

Using the voxel as a stand for even more voxels !

Updated Python Code for Robot Manipulation

The code for robot + converyor belt

Using the voxel as a stand for even more voxels !

Updated Python Code for Robot Manipulation

The code for robot + converyor belt

import urx

import time

import numpy as np

# Fixed parameters

PI = 3.1415926535

movement_plane_height = 0.4

source_coordinate = [0.08, 0.15, 0.042]

in_to_m = 0.0254

voxel_xy_offset = [-0.03, -0.15, -0.035]

# Load in xyz coordinates of voxels, organized from bottom to top layer

voxel_coordinates = [

[6.0, 2, 4], [14, 14, 4], [2, 14, 4], [6.0, 2, 8.0], #0-5

[14, 14, 8.0], [2, 14, 8.0], [14, 10, 8.0], [14, 14, 12], [6.0, 2, 12], #6-11

[10, 2, 12], [2, 14, 12], [14, 10, 12],[2, 10, 12], [10, 6.0, 12], [6.0, 6.0, 12], #12-17

[2, 14, 16], [2, 10, 16], [10, 6.0, 16], [14, 10, 16], [6.0, 6.0, 16], [10, 2, 16], #18-23

[14, 14, 16], [6.0, 2, 16], [14, 2, 16], [2, 2, 16], #24-30

]

for i in range(len(voxel_coordinates)):

for xyz in range(3):

if xyz == 1:

voxel_coordinates[i][xyz] = -voxel_coordinates[i][xyz]

elif xyz == 2:

voxel_coordinates[i][xyz] -= 4

# scale:

voxel_coordinates[i][xyz] = voxel_coordinates[i][xyz] * in_to_m

# offset

voxel_coordinates[i][xyz] += voxel_xy_offset[xyz]

print(voxel_coordinates)

# Setup robot as needed

robot = urx.Robot("192.168.1.53")

robot.set_tcp((0, 0, 0.1, 0, 0, 0))

robot.set_payload(2, (0, 0, 0.1))

acc_cmd = 2 # acc. and vel. for all movements

vel_cmd = 2

time.sleep(0.2) # leave some time to robot to process the setup commands

# Helper functions for convenience

def deg2rad(deg):

rad = []

for i in range(len(deg)):

rad.append(deg[i] * PI / 180)

return rad

def translateEndEffectorGlobal(robot, p_des, p_root):

p_cmd = robot.getl()

for i in range(len(p_des)):

p_cmd[i] = p_des[i] + p_root[i]

p_cmd[3:6] = p_root[3:6]

print("Moving to global position: ", p_cmd)

robot.movel(p_cmd, acc=acc_cmd, vel=vel_cmd)

def translateEndEffectorZGlobal(robot, p_z, p_root):

p_cmd = robot.getl()

p_cmd[2] = p_z + p_root[2]

p_cmd[3:6] = p_root[3:6]

print("Moving to global Z position: ", p_cmd[2])

robot.movel(p_cmd, acc=acc_cmd, vel=vel_cmd)

def translateEndEffectorXYGlobal(robot, p_xy, p_root):

p_cmd = robot.getl()

for i in range(len(p_xy)):

p_cmd[i] = p_xy[i] + p_root[i]

p_cmd[3:6] = p_root[3:6]

print("Moving to global position: ", p_xy)

robot.movel(p_cmd, acc=acc_cmd, vel=vel_cmd)

def grabSourceBlock(robot, p_root):

source_coordinate_xy = source_coordinate[0:2]

source_coordinate_z = source_coordinate[2]

translateEndEffectorZGlobal(robot, movement_plane_height, p_root)

translateEndEffectorXYGlobal(robot, source_coordinate_xy, p_root)

translateEndEffectorZGlobal(robot, source_coordinate_z, p_root)

robot.send_program('set_tool_digital_out(0, False)')

time.sleep(0.1)

translateEndEffectorZGlobal(robot, movement_plane_height, p_root)

def placeSourceBlock(robot, p_des, p_root):

translateEndEffectorZGlobal(robot, movement_plane_height, p_root)

translateEndEffectorXYGlobal(robot, p_des[0:2], p_root)

translateEndEffectorZGlobal(robot, p_des[2], p_root)

robot.send_program('set_tool_digital_out(0, True)')

time.sleep(0.1)

translateEndEffectorZGlobal(robot, movement_plane_height, p_root)

if __name__ == '__main__':

# Startup procedure

print("Starting program...")

j_root = deg2rad([0, -90, 135, 225, -90, 45])

print("Robot root joint angles: ", j_root)

print("Moving to home...")

robot.movej(j_root, acc=acc_cmd, vel=vel_cmd)

robot.send_program('set_tool_digital_out(0, True)') # open tool as default

p_root = robot.getl() # global root position on startup

print("Robot global root position: ", p_root)

# Main code

for coord in voxel_coordinates:

grabSourceBlock(robot, p_root)

placeSourceBlock(robot, coord, p_root)

#translateEndEffectorZGlobal(robot, movement_plane_height, p_root)

#translateEndEffectorXYGlobal(robot, [0.08, 0.15], p_root)

#translateEndEffectorZGlobal(robot, 0.063, p_root)

# Shutdown procedure

input("Press enter to shutdown...")

print("Returning to root position and orientation...")

translateEndEffectorZGlobal(robot, movement_plane_height, p_root)

translateEndEffectorXYGlobal(robot, [0, 0], p_root)

robot.movej(j_root, acc=acc_cmd, vel=vel_cmd)

robot.send_program('set_tool_digital_out(0, True)') # open tool as default

print("Program finished.")

time.sleep(0.5)

robot.close()