This week I am testing the limits of embedded software and hardware.

The idea is to implement a voxel renderer that runs entirely on the Raspberry Pi Pico, in real(-ish) time, and draws to a small LCD. Basically, Minecraft on a really cheap microcontroller.

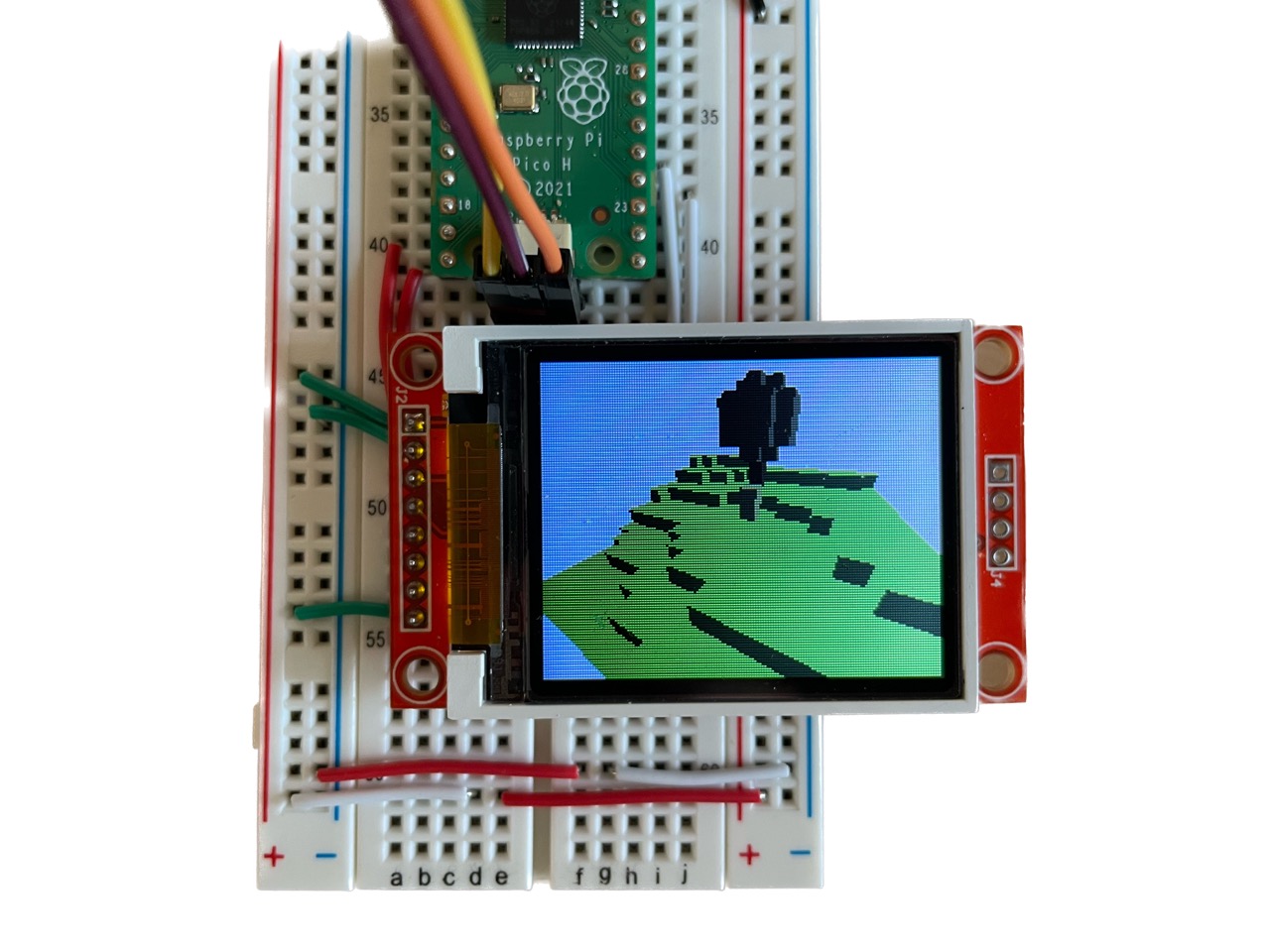

Raspberry Pi Pico, courtesy of hackster.io

So what are we working with? Well, the datasheet for the RP2040 gives us these numbers: two ARM Cortex-M0+ cores clocked at up to 133MHz, and 264kB of on-chip SRAM.

That is... incredibly low. Don't get me wrong, it's perfectly respectable for a modern microcontroller, but it doesn't even begin to compare to the minimum specs for running Minecraft. To put it into perspective, my laptop (which barely hits 30fps) has a 3.49GHz processor with many more cores and 16GB of RAM. That's over 26 times the clock speed, before even accounting for the extra cores! And 264kB is about 3 seconds of compressed audio, or a 200px by 300px PNG file.

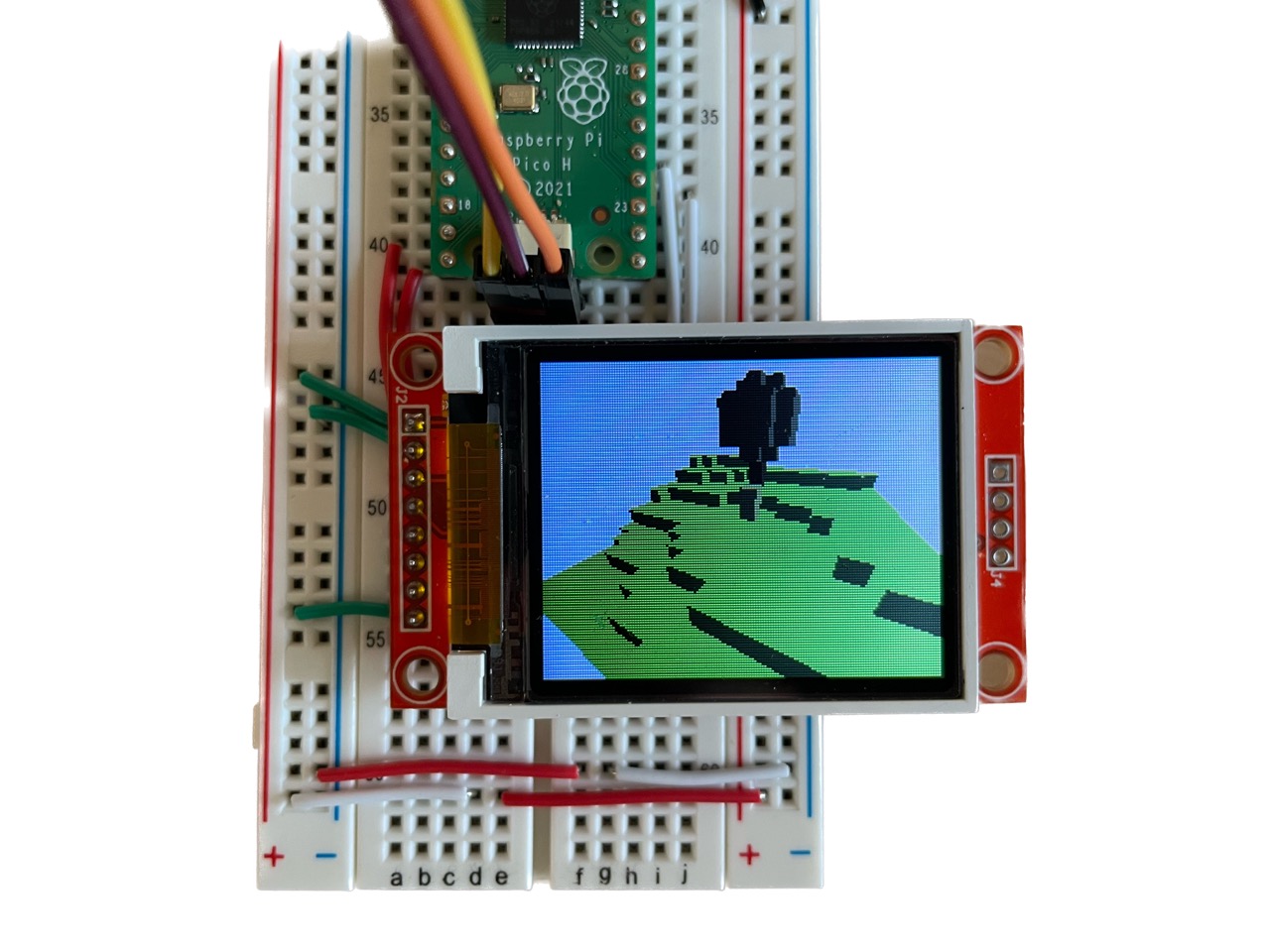

First, I needed to get an LCD display working with the Pico. The lab had some of these 1.8" Adafruit breakout boards for the ST7735 lying around, so I went with that. It has a resolution of 160x128 pixels, which is perfect for my use case; having more pixels to draw would just make the project harder. So I created a Rust project using the RP2040 HAL crate, wrote the boilerplate for some ST7735 drivers I found online, andddd:

The issue here isn't the flicker. I programmed the green LED to toggle whenever the CPU finishes updating the screen, and since the video above is in real time, we've got a big problem: just pushing pixels to the display takes ages! Ideally, the green LED should toggle so fast that it looks static to the human eye, and the CPU should be busy for only a fraction of that time. Ok, let's go down this rabbit hole.

I decided to write my own drivers for the ST7735 (the chip on the LCD display), loosely based on the datasheet and these two libraries. The idea is very simple: Pico <-> SPI <-> ST7735, where SPI refers to the serial protocol (as opposed to e.g. I2C). Then it's just a matter of sending the right bytes (commands, data) in the right order.
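To make "the right bytes in the right order" concrete, here is a minimal sketch of how ST7735 traffic is framed. It assumes 4-wire SPI with a separate D/C pin, driven low for command bytes and high for parameter and pixel bytes. The opcodes are the standard ST7735 ones from the datasheet. `Bus` is a hypothetical stand-in for the SPI peripheral plus the D/C GPIO. It only records what would go over the wire, so this is not a working driver and not the author's code.

```rust
// ST7735 command opcodes (same numbering as the MIPI DCS command set).
const SWRESET: u8 = 0x01; // software reset
const SLPOUT: u8 = 0x11; // leave sleep mode
const COLMOD: u8 = 0x3A; // set interface pixel format
const DISPON: u8 = 0x29; // display on
const CASET: u8 = 0x2A; // column address set
const RASET: u8 = 0x2B; // row address set
const RAMWR: u8 = 0x2C; // start writing pixel data to display RAM

/// One byte on the wire, tagged with the level of the D/C pin.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Byte {
    Cmd(u8),  // D/C low: byte is a command
    Data(u8), // D/C high: byte is a parameter or pixel data
}

/// Hypothetical stand-in for SPI + D/C GPIO: records the transmitted sequence.
#[derive(Default)]
struct Bus {
    log: Vec<Byte>,
}

impl Bus {
    /// Send a command byte followed by its parameter bytes.
    fn command(&mut self, cmd: u8, params: &[u8]) {
        self.log.push(Byte::Cmd(cmd));
        self.log.extend(params.iter().map(|&b| Byte::Data(b)));
    }
}

/// Bare-bones init. Real hardware also needs delays after SWRESET and
/// SLPOUT, plus panel-specific tuning commands, which are omitted here.
fn init(bus: &mut Bus) {
    bus.command(SWRESET, &[]);
    bus.command(SLPOUT, &[]);
    bus.command(COLMOD, &[0x05]); // 0x05 selects 16 bits per pixel (RGB565)
    bus.command(DISPON, &[]);
}

/// Select the inclusive rectangle (x0, y0)..=(x1, y1) and start a RAM write;
/// every byte sent after this is treated as pixel data.
fn set_window(bus: &mut Bus, x0: u16, y0: u16, x1: u16, y1: u16) {
    let span = |a: u16, b: u16| {
        let [a_hi, a_lo] = a.to_be_bytes();
        let [b_hi, b_lo] = b.to_be_bytes();
        [a_hi, a_lo, b_hi, b_lo] // start and end, high byte first
    };
    bus.command(CASET, &span(x0, x1));
    bus.command(RASET, &span(y0, y1));
    bus.command(RAMWR, &[]);
}

fn main() {
    let mut bus = Bus::default();
    init(&mut bus);
    set_window(&mut bus, 0, 0, 127, 159); // the full 128x160 panel
    println!("{:?}", bus.log);
}
```

After `RAMWR`, a full frame is simply a long run of data bytes. That run is the part worth offloading from the CPU.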

The biggest issue with just about every ST7735 driver is that it blocks the CPU while pixels are being transmitted to the screen. It's possible to just increase the SPI baudrate to drop that time from a dozen milliseconds to just a few, but that's still a considerable amount of time for a processor. What I'd like is for the CPU to be free to render the next frame while the current frame is being transmitted over SPI.
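As a back-of-the-envelope check on those numbers, the sketch below computes how long one full frame occupies the SPI bus. It assumes 160x128 pixels at 16 bits each (RGB565) and one bit per SPI clock with no gaps between bytes, so real transfers will be somewhat slower. The clock rates in `main` are illustrative, not the ones used in the project.

```rust
const WIDTH: u64 = 160;
const HEIGHT: u64 = 128;
const BITS_PER_PIXEL: u64 = 16; // assumption: RGB565

/// Milliseconds needed to clock one full frame out at `spi_hz`.
/// With a blocking driver, this is also how long the CPU sits idle.
fn frame_time_ms(spi_hz: u64) -> f64 {
    let bits = WIDTH * HEIGHT * BITS_PER_PIXEL; // 327,680 bits per frame
    bits as f64 * 1000.0 / spi_hz as f64
}

fn main() {
    for hz in [4_000_000, 16_000_000, 32_000_000, 62_500_000] {
        println!("{:>5.1} MHz -> {:6.2} ms/frame", hz as f64 / 1e6, frame_time_ms(hz));
    }
}
```

Even at tens of MHz this is several milliseconds per frame, which would otherwise be spent rendering.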

In comes direct memory access (DMA). It's dedicated hardware on the chip that does nothing but copy memory around, e.g. SRAM <-> SRAM, SPI <-> SRAM, PIO <-> SRAM, etc. It isn't exclusive to the RP2040, but for some reason the ST7735 drivers I found online don't make use of it. For my project, I store a contiguous screen buffer (i.e. 128 x 160 pixels) that the CPU can take its time updating. Meanwhile, DMA transmits that buffer over SPI to the display. So from the CPU's point of view, it's like displaying every pixel at once rather than one at a time.
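Here is a rough sketch of what that contiguous buffer costs in SRAM. It assumes 16-bit RGB565 pixels, which matches the common ST7735 16-bit mode. `rgb565` is a hypothetical helper for packing colours and is not taken from the project.

```rust
use std::mem::size_of;

const SRAM_BYTES: usize = 264 * 1024; // RP2040 on-chip SRAM
const WIDTH: usize = 128;
const HEIGHT: usize = 160;

/// A full screen of RGB565 pixels, stored contiguously so a single DMA
/// transfer can stream the whole thing to the SPI peripheral.
type Frame = [u16; WIDTH * HEIGHT];

/// Pack 8-bit-per-channel RGB into RGB565 (5 bits red, 6 green, 5 blue).
fn rgb565(r: u8, g: u8, b: u8) -> u16 {
    (((r as u16) & 0xF8) << 8) | (((g as u16) & 0xFC) << 3) | ((b as u16) >> 3)
}

fn main() {
    let frame_bytes = size_of::<Frame>();
    println!("one frame:  {} bytes", frame_bytes);
    println!(
        "two frames: {} bytes ({:.1}% of SRAM)",
        2 * frame_bytes,
        100.0 * (2 * frame_bytes) as f64 / SRAM_BYTES as f64
    );
    // If DMA feeds the SPI one byte at a time, a little-endian u16 would go
    // out low byte first; storing pixels big-endian sends the high byte first.
    let sky: u16 = rgb565(135, 206, 235).to_be();
    println!("sky pixel as stored: {:#06x}", sky);
}
```

One frame comes to 40,960 bytes, so even two of them use less than a third of the SRAM. The byte-order note only applies if the SPI is run in 8-bit mode. Configuring 16-bit transfers is another way to get the same result.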

The immediate speed benefits from this are pretty good, but more importantly, the CPU is only busy for nanoseconds per frame, just long enough to kick off the transfer. Big upgrade!

A common technique in computer graphics is double buffering. As the name implies, it involves using two screen buffers: one for drawing and the other for display. What does this mean? Well, with one buffer you're stuck running the CPU render -> DMA display -> CPU render -> etc. pipeline sequentially, because updating the screen buffer while it's being uploaded results in visual artifacts. With two buffers, the CPU and DMA touch mutually exclusive memory and "swap" when both are done with the current frame.
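The swap can be modelled on a desktop machine as a small sketch. On the Pico, the display side would be a DMA channel, not a thread. Here, std threads and channels stand in for the CPU and the DMA, and `render` is a hypothetical placeholder for the real drawing code. Exactly two buffers circulate. Each one is owned by either the renderer or the "DMA" side at any moment, which is the mutual exclusion described above.

```rust
use std::sync::mpsc;
use std::thread;

const PIXELS: usize = 128 * 160;

/// Hypothetical stand-in for the CPU's rendering work.
fn render(buf: &mut [u16], frame: u16) {
    buf.fill(frame);
}

/// Push `frames` frames through a two-buffer pipeline and return the first
/// pixel of each frame, in the order the "display" side received them.
fn run(frames: u16) -> Vec<u16> {
    let (to_display, displayed) = mpsc::channel::<Vec<u16>>();
    let (to_free, free) = mpsc::channel::<Vec<u16>>();

    // Two buffers in circulation: one being drawn, one being sent.
    to_free.send(vec![0; PIXELS]).unwrap();
    to_free.send(vec![0; PIXELS]).unwrap();

    // Stand-in for the DMA: "transmit" a finished buffer, then hand it back.
    let dma = thread::spawn(move || {
        let mut seen = Vec::new();
        for buf in displayed {
            seen.push(buf[0]); // pretend this is the SPI transfer
            let _ = to_free.send(buf); // return the buffer to the renderer
        }
        seen
    });

    for frame in 0..frames {
        let mut buf = free.recv().unwrap(); // wait for a buffer not being displayed
        render(&mut buf, frame);
        to_display.send(buf).unwrap(); // the "swap": hand it to the display side
    }
    drop(to_display); // no more frames, so the DMA thread's loop ends
    dma.join().unwrap()
}

fn main() {
    println!("{:?}", run(5));
}
```

Because each `Vec` is moved through a channel rather than shared, Rust's ownership rules make "draw into the buffer that's being uploaded" a compile error, not a visual artifact.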

I spent a whole day writing ST7735 drivers and, speed-wise, they're way better than the alternatives online. The only issue is that they didn't work:

It turns out the display I used was broken... when I replaced it:

Much better. There's still some noticeable flicker, which is due to the lack of vsync (i.e. the pixels being uploaded are racing the scanline updating the physical LCD), but it's good enough. In practice, you wouldn't dramatically change the whole screen (green <-> plane picture) like this anyway.

There are three rendering methods I was considering for this project.

I chose raytracing because the screen I'm drawing to is relatively small, so there aren't many rays to cast. Voxels also require a considerable amount of geometry, which is expensive to store in 264kB of SRAM, or computationally inefficient to rebuild if we don't cache it. Finally, raytracing in a voxel grid is very efficient (in fact, it's used as an optimization for more complex raytracers).

The first step in raytracing is ray casting. Ray casting means shooting one ray into the world and reporting what it hit, whereas raytracing shoots a whole bunch of those rays to create an image. So once ray casting is implemented, the rest is trivial. I chose this algorithm (A. Woo) and used this implementation as a reference.
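For readers who haven't seen grid traversal before, here is a minimal f32 sketch in the style of Amanatides & Woo. I'm assuming that is what the "A. Woo" reference points to. This is not the author's code. The world is passed as a hypothetical `solid` closure, and the ray is assumed to start inside an empty voxel. The idea is to step from voxel to voxel, each time crossing whichever axis boundary the ray reaches first.

```rust
/// Result of a ray cast: the voxel hit, and the axis the ray crossed to enter
/// it (0 = x, 1 = y, 2 = z), which later tells us which face was hit.
#[derive(Debug, PartialEq)]
struct Hit {
    voxel: [i32; 3],
    axis: usize,
}

/// Walk the grid along `origin + t * dir`, one voxel at a time, until a solid
/// voxel is found or `max_steps` voxels have been visited.
fn cast(origin: [f32; 3], dir: [f32; 3], solid: impl Fn([i32; 3]) -> bool, max_steps: u32) -> Option<Hit> {
    let mut voxel = origin.map(|c| c.floor() as i32);
    let mut step = [0i32; 3];
    let mut t_max = [f32::INFINITY; 3]; // t where the ray crosses the next boundary, per axis
    let mut t_delta = [f32::INFINITY; 3]; // t needed to cross one whole voxel, per axis

    for i in 0..3 {
        if dir[i] > 0.0 {
            step[i] = 1;
            t_delta[i] = 1.0 / dir[i];
            t_max[i] = (voxel[i] as f32 + 1.0 - origin[i]) * t_delta[i];
        } else if dir[i] < 0.0 {
            step[i] = -1;
            t_delta[i] = -1.0 / dir[i];
            t_max[i] = (origin[i] - voxel[i] as f32) * t_delta[i];
        }
        // dir[i] == 0: the ray never crosses this axis, so t_max stays infinite.
    }

    for _ in 0..max_steps {
        // Advance along whichever axis reaches its next boundary first.
        let axis = if t_max[0] < t_max[1] {
            if t_max[0] < t_max[2] { 0 } else { 2 }
        } else if t_max[1] < t_max[2] {
            1
        } else {
            2
        };
        voxel[axis] += step[axis];
        t_max[axis] += t_delta[axis];
        if solid(voxel) {
            return Some(Hit { voxel, axis });
        }
    }
    None
}

fn main() {
    // A single block at (3, 0, 0), looked at from inside voxel (0, 0, 0).
    let solid = |v: [i32; 3]| v == [3, 0, 0];
    println!("{:?}", cast([0.5, 0.5, 0.5], [1.0, 0.0, 0.0], solid, 32));
}
```

Each step costs a comparison or two and an addition, so the work per ray grows with the number of voxels crossed, not with the size of the scene.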

I hard-coded a basic 3D model into the program, then animated the camera to move forward:

So the first implementation is really slow. Why? Well, it turns out that the RP2040 doesn't have a floating-point unit (FPU), so any operation involving f32 or f64 is emulated in software. This is really expensive, and these types are designed for a level of precision that is wasted when your output is a 160 x 128 screen.

I found this paper which compares floating point to fixed point arithmetic on the Pico. Fixed point is implemented with integer operations so it's a lot faster at the cost of precision and range.

To test this, I implemented a 32-bit Fixed type with a 15-bit fractional part. Depending on the use case, it may make more sense to shift the split between integer and fractional bits, or even drop down to a 16-bit representation, but I started with the simplest option. Even so, this drop-in replacement is a lot faster:
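For reference, here is a minimal sketch of such a type: an `i32` holding the value scaled by 2^15. The method names (`from_int`, `from_f32`, `to_f32`, `floor`) are my own choices, not necessarily the author's API. Addition and subtraction are plain integer ops. Multiplication widens to 64 bits so the intermediate product can't overflow, then shifts the extra fractional bits back out.

```rust
use std::ops::{Add, Div, Mul, Neg, Sub};

/// 32-bit fixed point with 15 fractional bits: value = raw / 2^15.
/// Range is roughly -65536..65536 with a resolution of about 0.00003.
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
struct Fixed(i32);

impl Fixed {
    const FRAC_BITS: u32 = 15;
    const ONE: Fixed = Fixed(1 << Self::FRAC_BITS);

    fn from_int(n: i32) -> Self { Fixed(n << Self::FRAC_BITS) }
    fn from_f32(x: f32) -> Self { Fixed((x * (1 << Self::FRAC_BITS) as f32) as i32) }
    fn to_f32(self) -> f32 { self.0 as f32 / (1 << Self::FRAC_BITS) as f32 }
    /// Arithmetic right shift rounds toward negative infinity, like f32::floor.
    fn floor(self) -> i32 { self.0 >> Self::FRAC_BITS }
}

impl Add for Fixed {
    type Output = Fixed;
    fn add(self, o: Fixed) -> Fixed { Fixed(self.0 + o.0) }
}

impl Sub for Fixed {
    type Output = Fixed;
    fn sub(self, o: Fixed) -> Fixed { Fixed(self.0 - o.0) }
}

impl Neg for Fixed {
    type Output = Fixed;
    fn neg(self) -> Fixed { Fixed(-self.0) }
}

impl Mul for Fixed {
    type Output = Fixed;
    fn mul(self, o: Fixed) -> Fixed {
        // The product of two Q15 values has 30 fractional bits; drop 15 of them.
        Fixed(((self.0 as i64 * o.0 as i64) >> Self::FRAC_BITS) as i32)
    }
}

impl Div for Fixed {
    type Output = Fixed;
    fn div(self, o: Fixed) -> Fixed {
        // Pre-shift the dividend so the quotient keeps 15 fractional bits.
        Fixed((((self.0 as i64) << Self::FRAC_BITS) / o.0 as i64) as i32)
    }
}

fn main() {
    let x = Fixed::from_f32(1.25);
    let y = Fixed::from_int(-2);
    println!("{} {} {}", (x * y).to_f32(), (x / y).to_f32(), (-x + Fixed::ONE).to_f32());
}
```

Conversions to and from f32 are only needed at the edges, for example when setting up the camera. Everything in the inner ray loop can stay in integer arithmetic.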

The Pico has two cores, and so far I've been using just one. So let's add what is probably the most obvious "optimization." With raytracing, this is trivial: core0 renders the first half of the screen, and core1 renders the rest. These regions can operate independently and don't overlap:
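The split can be sketched in plain Rust. In this sketch, a scoped thread stands in for core1 and the calling thread plays core0. On the Pico, core1 would instead be started through the HAL's multicore support, which isn't shown here. `shade` is a hypothetical placeholder for per-pixel ray casting. `split_at_mut` hands each side a disjoint half of the frame, so the "no overlap" guarantee is enforced by the compiler.

```rust
use std::thread;

const WIDTH: usize = 128;
const HEIGHT: usize = 160;

/// Hypothetical per-pixel work; in the real renderer a ray is cast here.
fn shade(x: usize, y: usize) -> u16 {
    (x ^ y) as u16
}

/// Fill a run of whole rows; `first_row` is the screen row the slice starts at.
fn render_rows(rows: &mut [u16], first_row: usize) {
    for (i, px) in rows.iter_mut().enumerate() {
        *px = shade(i % WIDTH, first_row + i / WIDTH);
    }
}

/// "core0" (this thread) renders the first half of the screen while
/// "core1" (a scoped thread) renders the rest.
fn render_frame(frame: &mut [u16]) {
    let (top, bottom) = frame.split_at_mut(WIDTH * (HEIGHT / 2));
    thread::scope(|s| {
        s.spawn(move || render_rows(bottom, HEIGHT / 2)); // "core1"
        render_rows(top, 0); // "core0"
    }); // the scope waits for "core1" before returning
}

fn main() {
    let mut frame = vec![0u16; WIDTH * HEIGHT];
    render_frame(&mut frame);
    println!("last pixel: {}", frame[WIDTH * HEIGHT - 1]);
}
```

A fixed half/half split works well when both halves cost about the same. If one half of the scene is much busier, interleaving rows between the two workers is a common alternative for balancing the load.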

One last touch is simply making the processor faster. The Pico runs at 125MHz unless you tell it otherwise, but it's generally considered safe to double that, and some people have even reached 436MHz! Doubling the clock makes performance about twice as good, but I didn't want to push it further since that risks damaging the hardware.
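Where do those clock numbers come from? The system clock is produced by a PLL. To the best of my understanding of the RP2040 datasheet, f_sys = 12MHz × FBDIV / (POSTDIV1 × POSTDIV2) with REFDIV = 1. This assumes the Pico's 12MHz crystal, FBDIV in 16–320, a VCO between 750 and 1600MHz, and post-dividers in 1–7. Treat those limits as assumptions to check against the datasheet. The sketch below only computes candidate settings. It does not show the HAL calls that apply them.

```rust
const XOSC_MHZ: u32 = 12; // assumption: the Pico's 12 MHz crystal, REFDIV = 1

/// System clock in MHz for a PLL setting, or None if any parameter is outside
/// the (assumed) valid ranges or the result isn't a whole number of MHz.
fn sys_clock_mhz(fbdiv: u32, postdiv1: u32, postdiv2: u32) -> Option<u32> {
    let vco = XOSC_MHZ * fbdiv;
    let valid = (16..=320).contains(&fbdiv)
        && (750..=1600).contains(&vco)
        && (1..=7).contains(&postdiv1)
        && (1..=7).contains(&postdiv2)
        && vco % (postdiv1 * postdiv2) == 0;
    if valid { Some(vco / (postdiv1 * postdiv2)) } else { None }
}

/// Brute-force search for (FBDIV, POSTDIV1, POSTDIV2) hitting `target_mhz`
/// exactly; returns the first match found.
fn find_pll(target_mhz: u32) -> Option<(u32, u32, u32)> {
    for fbdiv in (16..=320).rev() {
        for pd1 in 1..=7 {
            for pd2 in 1..=pd1 {
                if sys_clock_mhz(fbdiv, pd1, pd2) == Some(target_mhz) {
                    return Some((fbdiv, pd1, pd2));
                }
            }
        }
    }
    None
}

fn main() {
    // Same 1500 MHz VCO, different second post-divider.
    println!("{:?} {:?}", sys_clock_mhz(125, 6, 2), sys_clock_mhz(125, 6, 1));
    for mhz in [125, 250, 436] {
        println!("{} MHz -> {:?}", mhz, find_pll(mhz));
    }
}
```

The 125MHz and 250MHz settings can share the same 1500MHz VCO (1500 / (6 × 2) and 1500 / 6), so "doubling the clock" can be as small a change as one post-divider.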

If you're not convinced everything I've been showing is actually 3D, here is the same model spinning:

I was out during the weekend and had all of two days to start and finish the project. So I started running out of time and had to put aside my dream of running Minecraft on the Pico. Instead, I'll completely forgo the gameplay and focus on the graphics. But to make it cool, I created a Minecraft plugin using Spigot that communicates with the Pico and sends it world data:

I'm running a modded server that transmits whatever chunk the player is in to the Pico
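The post doesn't describe how world data travels from the plugin to the Pico. The following is therefore a purely hypothetical wire format for one 16×16×16 chunk section, with a decoder for the Pico side. The magic byte, field order, block-index layout, and the `Section`/`encode`/`decode` names are all my own assumptions. The Java plugin would have to emit the same bytes, and the transport (serial or otherwise) is left out.

```rust
use std::convert::TryInto;

// Hypothetical packet layout (not the author's protocol):
//   [0]        magic byte 0xC7
//   [1..5]     chunk X, i32 little-endian
//   [5..9]     chunk Z, i32 little-endian
//   [9..4105]  4096 block IDs, one byte each, index = (y * 16 + z) * 16 + x
const MAGIC: u8 = 0xC7;
const SIDE: usize = 16;
const BLOCKS: usize = SIDE * SIDE * SIDE;
const PACKET_LEN: usize = 1 + 4 + 4 + BLOCKS;

struct Section {
    chunk_x: i32,
    chunk_z: i32,
    blocks: [u8; BLOCKS], // 4 KiB, small enough to keep resident in SRAM
}

impl Section {
    fn block(&self, x: usize, y: usize, z: usize) -> u8 {
        self.blocks[(y * SIDE + z) * SIDE + x]
    }
}

/// Sender side (shown in Rust for symmetry; the plugin itself is Java).
fn encode(s: &Section) -> Vec<u8> {
    let mut out = Vec::with_capacity(PACKET_LEN);
    out.push(MAGIC);
    out.extend_from_slice(&s.chunk_x.to_le_bytes());
    out.extend_from_slice(&s.chunk_z.to_le_bytes());
    out.extend_from_slice(&s.blocks);
    out
}

/// Receiver side: reject anything with the wrong length or magic byte.
fn decode(p: &[u8]) -> Option<Section> {
    if p.len() != PACKET_LEN || p[0] != MAGIC {
        return None;
    }
    let chunk_x = i32::from_le_bytes(p[1..5].try_into().ok()?);
    let chunk_z = i32::from_le_bytes(p[5..9].try_into().ok()?);
    let blocks: [u8; BLOCKS] = p[9..].try_into().ok()?;
    Some(Section { chunk_x, chunk_z, blocks })
}

fn main() {
    let mut blocks = [0u8; BLOCKS];
    blocks[0] = 1; // e.g. block ID 1 at x = y = z = 0
    let packet = encode(&Section { chunk_x: 2, chunk_z: -3, blocks });
    let section = decode(&packet).expect("well-formed packet");
    println!("chunk ({}, {}), first block {}", section.chunk_x, section.chunk_z, section.block(0, 0, 0));
}
```

Sending raw block IDs keeps the Pico side trivial. The ray caster's `solid` test then becomes a single array lookup.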

Finally, I added simple Lambert shading, which makes it look a lot nicer:
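For the curious, Lambert shading scales a surface's brightness by the cosine of the angle between its normal and the direction to the light, clamped at zero for faces pointing away. With voxels, the normal comes almost for free: it's the axis the ray crossed to enter the hit voxel, pointing against the ray's step direction. Below is a small sketch under two assumptions of my own: RGB565 colours and an ambient term, so faces turned away from the light don't go fully black. The post doesn't say whether the author uses an ambient term.

```rust
/// Lambertian intensity: dot(normal, to_light), clamped so back faces get 0.
/// Both vectors are assumed to be unit length.
fn lambert(normal: [f32; 3], to_light: [f32; 3]) -> f32 {
    let dot: f32 = normal.iter().zip(&to_light).map(|(a, b)| a * b).sum();
    dot.max(0.0)
}

/// Face normal for a voxel hit: a ray stepping +1 along `axis` enters the
/// voxel through its negative face, so the normal points the other way.
fn face_normal(axis: usize, step: i32) -> [f32; 3] {
    let mut n = [0.0; 3];
    n[axis] = -(step as f32);
    n
}

/// Scale an RGB565 colour by `ambient + (1 - ambient) * intensity`.
fn shade_rgb565(color: u16, intensity: f32, ambient: f32) -> u16 {
    let k = (ambient + (1.0 - ambient) * intensity.clamp(0.0, 1.0)).clamp(0.0, 1.0);
    let scale = |channel: u16| (channel as f32 * k).round() as u16;
    let r = scale((color >> 11) & 0x1F);
    let g = scale((color >> 5) & 0x3F);
    let b = scale(color & 0x1F);
    (r << 11) | (g << 5) | b
}

fn main() {
    // Light coming from above and slightly to the side, normalised by hand.
    let len = (0.3f32 * 0.3 + 1.0 + 0.2 * 0.2).sqrt();
    let to_light = [0.3 / len, 1.0 / len, 0.2 / len];
    let grass = 0x07E0; // pure green in RGB565
    for (axis, step) in [(1, -1), (0, 1), (2, -1)] {
        let i = lambert(face_normal(axis, step), to_light);
        println!("axis {} step {:+}: {:#06x}", axis, step, shade_rgb565(grass, i, 0.2));
    }
}
```

Because a voxel face normal has only one non-zero component, the dot product collapses to reading a single component of the light vector. With a fixed light direction, there are only six possible brightness levels, which could easily be precomputed.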

Download the source code + uf2 (170kB)