Week 4: 3D Printing

xdd44, Sep. 28, 2024

Test of 3D Printer (Group work with Zhi Ray Wang)

We used the Arch Shop's Sindoh 3DWOX 1 printer with PLA filament.

We both had experience with 3D printing, but we had never printed objects directly on a shaft, so we decided to run a test first.

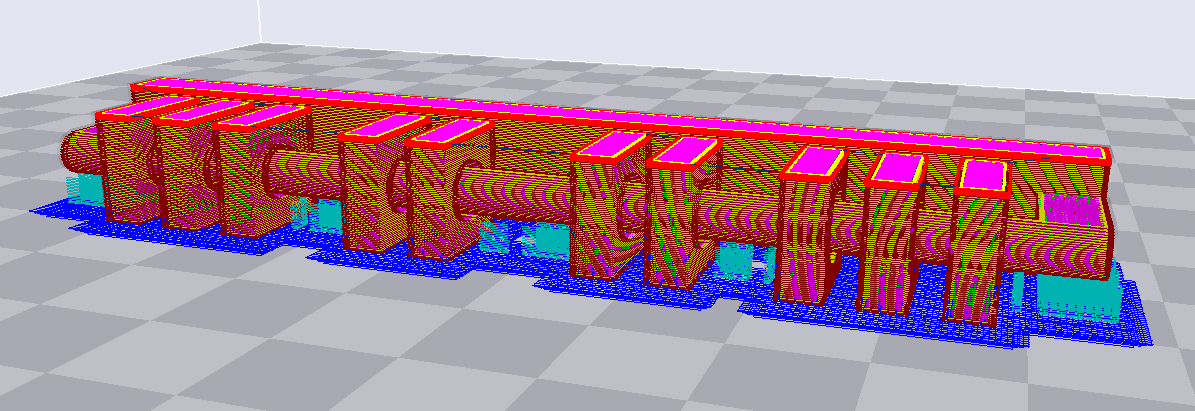

We used the default 3DWOX Desktop settings for Sindoh PLA, but changed the layer height to 0.15 mm to get a smoother round shaft. Because the shaft spans about 8 cm without support, we set the nozzle temperature to 195°C so the filament would solidify faster, and spaced the objects along the shaft so they would act as additional supports during printing. The gap between the objects and the shaft varied from 0.1 mm to 1 mm.
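To plan a clearance test like this, it helps to list the bore diameter each test object needs. The sketch below assumes the stated gap is radial (added on every side of the shaft) and uses a hypothetical 5 mm shaft with 0.1 mm steps; neither value is given in the text above.

```python
def clearance_test_bores(shaft_diameter, gaps):
    """Map each radial gap (mm) to the bore diameter (mm) of the object printed around the shaft."""
    # Assumption: a radial gap g is added on each side of the shaft, so the bore grows by 2 * g.
    return {gap: round(shaft_diameter + 2 * gap, 3) for gap in gaps}


# Hypothetical values: a 5 mm shaft and gaps from 0.1 mm to 1.0 mm in 0.1 mm steps.
GAPS = [round(0.1 * k, 1) for k in range(1, 11)]
bores = clearance_test_bores(5.0, GAPS)
for gap, bore in bores.items():
    print(f"gap {gap:.1f} mm -> bore diameter {bore:.2f} mm")
```

If the gap in the CAD model was instead measured on the diameter, drop the factor of 2.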

Printing preview

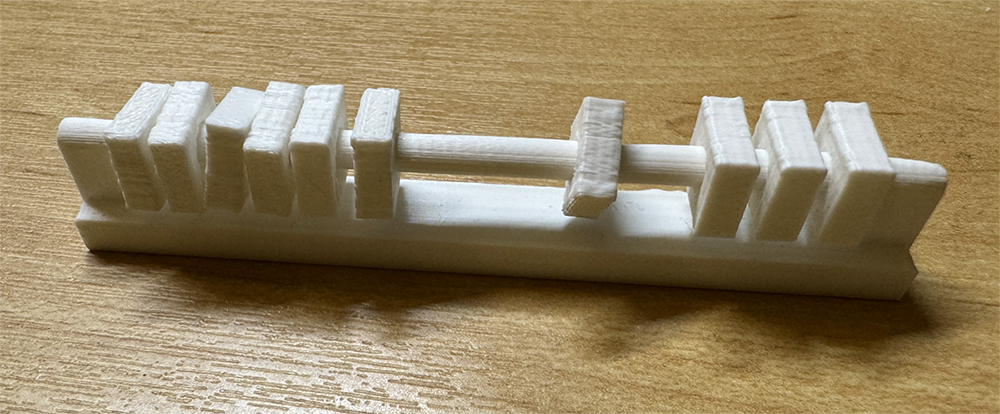

The test showed that objects with gaps of 0.4 mm to 1 mm could all move and rotate freely (if loosely) on the shaft, while objects with gaps of 0.1 mm to 0.3 mm were stuck to the shaft completely.
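One way to turn this result into a design value is to take the smallest gap that still moved freely and check that every larger gap also moved, so the cutoff is clean rather than a lucky print. A minimal sketch; the 0.1 mm step size is an assumption, since only the range endpoints are reported above.

```python
def smallest_free_gap(results):
    """Return (smallest gap that moved freely, whether every larger gap also moved)."""
    free = sorted(gap for gap, moved in results.items() if moved)
    if not free:
        return None, False
    threshold = free[0]
    # If any gap at or above the threshold stuck, the result is not a clean cutoff.
    consistent = all(moved for gap, moved in results.items() if gap >= threshold)
    return threshold, consistent


# Observed outcome, assuming 0.1 mm steps: 0.1-0.3 mm stuck, 0.4-1.0 mm moved freely.
observed = {round(0.1 * k, 1): k >= 4 for k in range(1, 11)}
print(smallest_free_gap(observed))  # (0.4, True)
```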

The unsupported span of the shaft also affected the result slightly: the bottom lines of the shaft sagged a little, which made the objects move a bit jerkily over that section.

Objects moved along the shaft

Sagging bottom lines along the span

3D Printing of a Design

Building on our clearance test, I realized I could print a bracelet with the beads already on it. I also wanted to see whether the "shaft", in this case the bracelet body, could constrain the orientation of the beads.

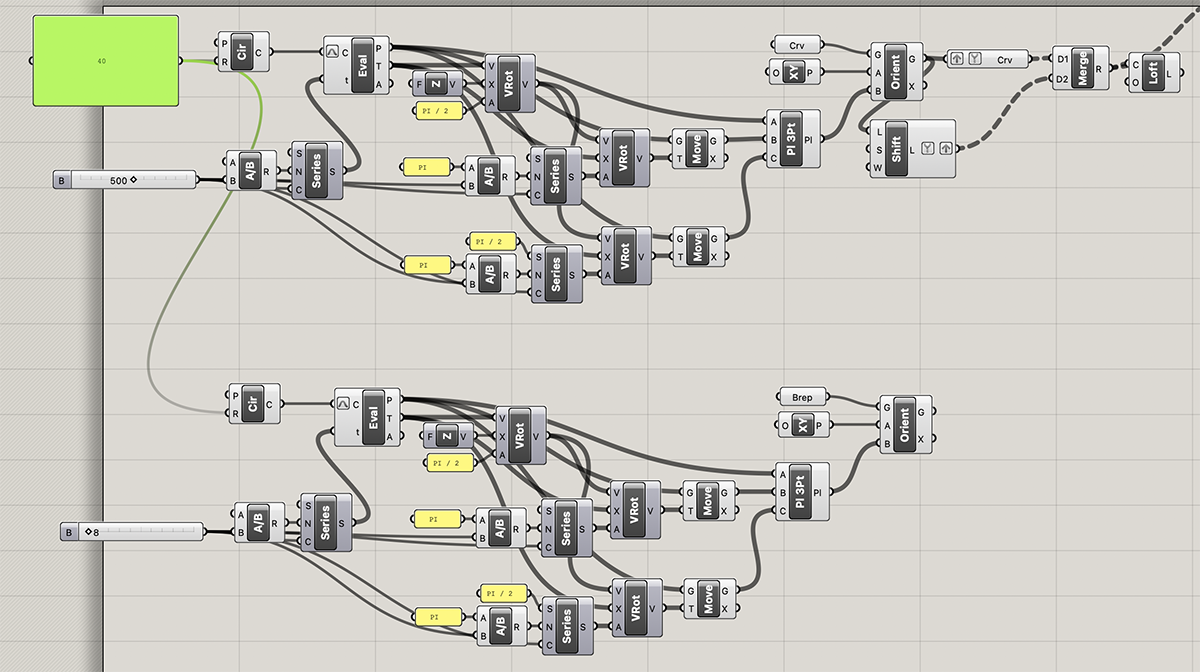

I decided to make a Möbius ring, so that a bead is flipped over after it slides one full loop around the ring. I wrote a Grasshopper script to construct the ring:
1. Draw the section of the ring.
2. Get the vectors along the circle and rotate them progressively from 0° to 180°.
3. Orient copies of the section curve to the vectors.
4. Loft through the curves.
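The Grasshopper definition itself cannot be reproduced as text, but steps 1 to 3 can be sketched in plain Python to show the geometry. This is an illustrative reconstruction, not the actual script: it assumes a rectangular section and hypothetical dimensions (30 mm radius, 8 × 3 mm section), and it returns the section corner points that a loft (step 4, done in Rhino/Grasshopper) would pass through.

```python
import math


def mobius_sections(radius=30.0, width=8.0, thickness=3.0, n_sections=64):
    """Return n_sections + 1 sections, each a list of four (x, y, z) corner points.

    Each section is a width x thickness rectangle (hypothetical sizes, mm) placed
    on a circle and twisted by half the sweep angle, so the twist goes from
    0 to 180 degrees over one full turn.
    """
    # Step 1: rectangle corners in the section's local (radial, vertical) plane.
    corners = [(-width / 2, -thickness / 2), (width / 2, -thickness / 2),
               (width / 2, thickness / 2), (-width / 2, thickness / 2)]
    sections = []
    for k in range(n_sections + 1):
        theta = 2 * math.pi * k / n_sections  # step 2: position along the circle
        phi = theta / 2                       # twist grows from 0 to pi (180 degrees)
        section = []
        for u, v in corners:
            # Rotate the corner inside the section plane by phi.
            ru = u * math.cos(phi) - v * math.sin(phi)
            rv = u * math.sin(phi) + v * math.cos(phi)
            # Step 3: orient the section on the circle (radial offset ru, height rv).
            x = (radius + ru) * math.cos(theta)
            y = (radius + ru) * math.sin(theta)
            section.append((x, y, rv))
        sections.append(section)
    return sections


secs = mobius_sections()
print(len(secs), "sections of", len(secs[0]), "corners each")
```

Because the twist reaches exactly 180° after one turn, the last section is the first one turned upside down (corner i lands on corner i + 2), which is why the loft closes into a single-sided band and a bead returns flipped.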

Grasshopper script to construct the ring and the beads.

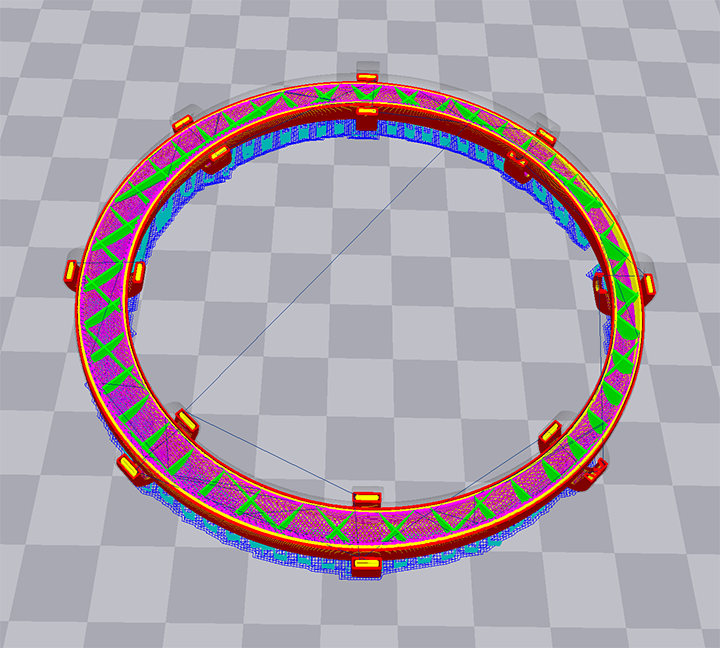

3DWOX preview

It turned out I had added too much support, which left the model very rough. After I sanded it, the beads could slide freely.

Printed bracelet

The heart-shaped holes, flipped.

3D Scanning of an Object

This summer I worked with Prof. Takehiko Nagakura on his Design Heritage project in Turkey, which I believe is more interesting to include here than redoing a simple 3D scan with my phone.

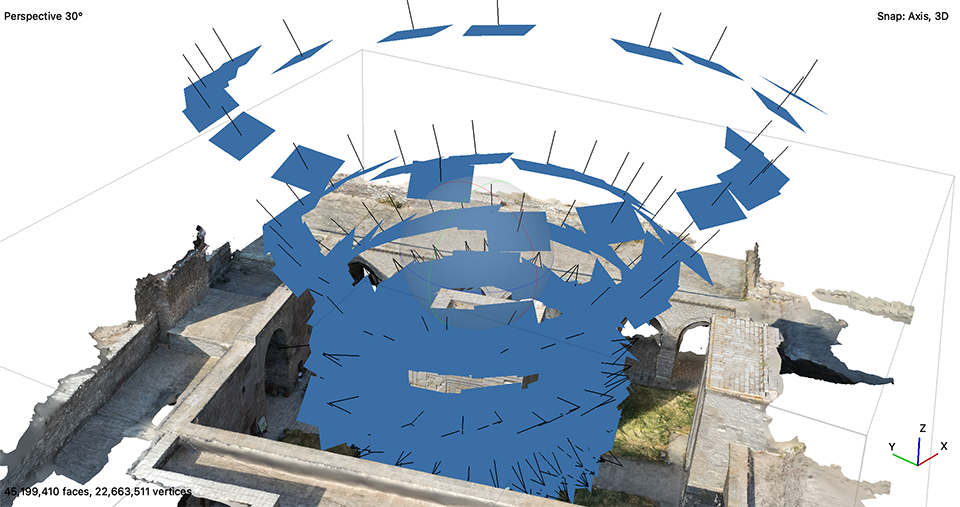

The project scans and digitally reconstructs heritage sites, so that anyone can explore the ancient buildings on a laptop or in VR. During the trip, I served as the drone operator, taking photos ("scanning") of the heritage buildings so that the team could reconstruct them in Metashape. Takehiko has been following the new 3D reconstruction models as they emerge, and recently suggested that I test NeRF and Gaussian Splatting, two increasingly popular models that approach 3D reconstruction in a completely new way.
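What makes NeRF "new" compared with Metashape's photogrammetry is that it does not build a mesh directly. It learns a density and a color at every point in space and renders each pixel by compositing samples along the camera ray. The sketch below shows only that compositing step for one ray, following the volume-rendering formula from the original NeRF paper; the densities and colors here are made-up inputs rather than the output of a trained network.

```python
import math


def composite_ray(sigmas, colors, deltas):
    """Alpha-composite samples along one ray, front to back, NeRF style.

    sigmas: volume density at each sample
    colors: (r, g, b) emitted color at each sample
    deltas: distance between consecutive samples
    Returns (rgb, accumulated_opacity).
    """
    transmittance = 1.0  # fraction of light that still reaches the camera
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # this sample's contribution to the pixel
        for c in range(3):
            rgb[c] += weight * color[c]
        transmittance *= 1.0 - alpha            # light left after passing this segment
    return tuple(rgb), 1.0 - transmittance


# Made-up ray: two empty samples, then a dense red surface.
pixel, opacity = composite_ray([0.0, 0.0, 1e3],
                               [(0, 0, 1), (0, 1, 0), (1, 0, 0)],
                               [0.1, 0.1, 0.1])
print(pixel, opacity)
```

Training adjusts the densities and colors until rendered pixels match the photos. Gaussian Splatting uses a similar front-to-back alpha blending, but over depth-sorted 3D Gaussians instead of samples along a ray.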

Below I first show the photos I used and the Metashape processing result. I selected 455 photos from my drone scan of Sultan Han.

455 photos of the central structure of Sultan Han

Metashape processing result, showing the estimated camera positions and the resulting mesh

I used a NeRF model from nerfstudio, a tool that integrates multiple models and provides a viewer. I used Metashape for the camera position estimation, since the COLMAP pipeline built into nerfstudio took much longer. Camera estimation took around 2 hours, but training took only half an hour and gave promising results.
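The two stages (import the Metashape cameras, then train) map onto nerfstudio's command-line tools. A minimal sketch, assuming the camera positions were exported from Metashape as an XML file and that the default `nerfacto` method was used; the text does not name the model, and all paths below are hypothetical.

```python
import subprocess
from pathlib import Path


def nerfstudio_commands(images: Path, metashape_xml: Path, out_dir: Path,
                        method: str = "nerfacto"):
    """Build the nerfstudio CLI calls: convert Metashape cameras, then train."""
    # Reuse Metashape's camera estimates instead of running COLMAP.
    process = ["ns-process-data", "metashape",
               "--data", str(images),
               "--xml", str(metashape_xml),
               "--output-dir", str(out_dir)]
    # Train on the processed dataset (method is an assumption; nerfacto is the default).
    train = ["ns-train", method, "--data", str(out_dir)]
    return [process, train]


def run_commands(commands):
    """Run each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

For example, `run_commands(nerfstudio_commands(Path("sultan_han/images"), Path("sultan_han/cameras.xml"), Path("sultan_han/ns")))` would process and then train, assuming nerfstudio is installed and those hypothetical paths exist.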

NeRF reconstruction result, which preserves the sky and the surrounding environment