Week 8

Input Devices

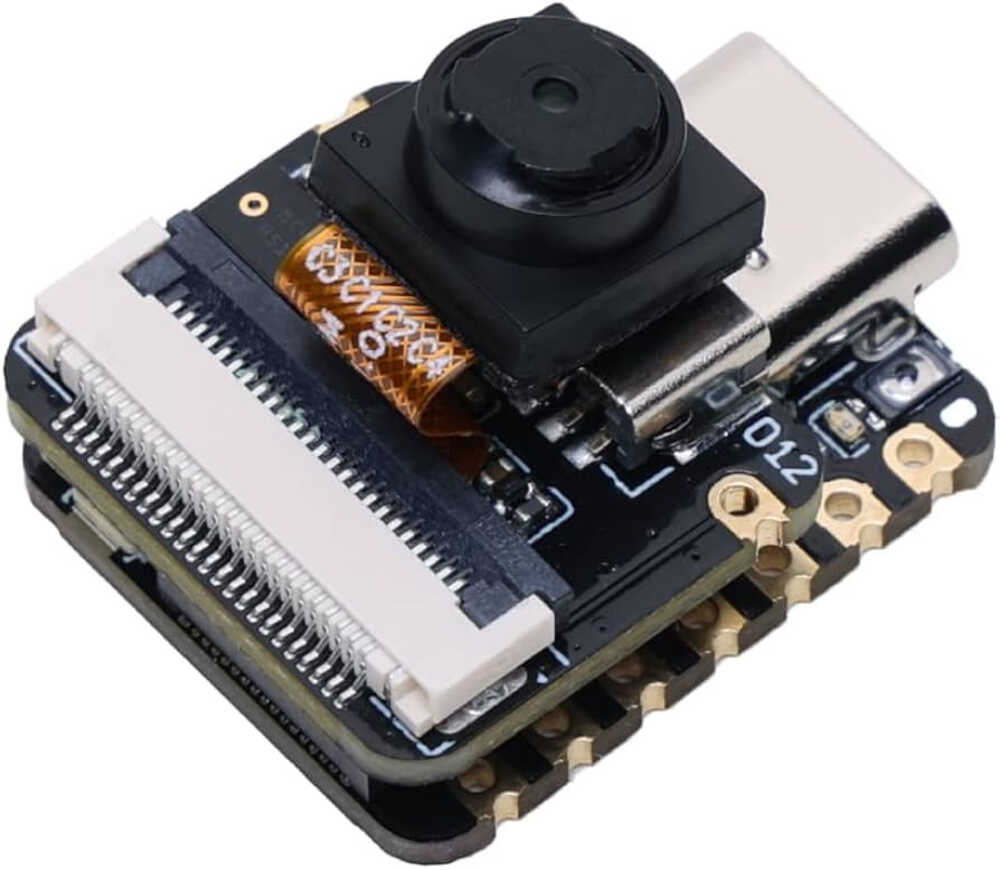

I thought the XIAO camera modules looked really cool, so I wanted to do a project that incorporated one of them. I quickly became fixated on the idea of making a banana detector that takes an image from the camera and determines whether or not it contains a banana. It seemed simple enough, but it ended up being a whole lot more challenging than I expected.

Before I get into all the details of my various attempts at banana detection, I'll start by talking about the camera, since I guess that was supposed to be the focus of the week.

Left: the camera module on an ESP32. Right: an example image captured with it.

As you can see, the image quality is not the best, but I was still happy to be able to read input, and the camera is still very impressive for its size and ease of use.

I started by focusing on how to detect a banana. I've taken intro to machine learning and I know what a CNN is, so how hard could this be? The answer: very. I explored various tutorials, tried to train my own CNN, and looked for models light enough to run on an ESP32. I tried a mix of PyTorch, torchvision (PyTorch's computer vision library), and OpenCV.

At the point when I was struggling the most with this, I decided to see if I could just look at the pixel values and pick out yellow. Unfortunately, bananas are not always the same shade, and beige-colored walls and floors are close enough to yellow that this was not very successful.
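For reference, here is a minimal sketch of the yellow-picking idea. It converts each pixel to HSV with Python's colorsys module and counts pixels inside a "yellow" box. The hue, saturation, and brightness thresholds and the 10% cutoff are assumptions chosen for illustration, not values from my experiments.

```python
import colorsys

# Illustrative thresholds (assumptions). colorsys returns hue in [0, 1),
# so pure yellow (60 degrees) is about 60/360 = 0.167.
HUE_MIN, HUE_MAX = 40 / 360, 70 / 360
SAT_MIN = 0.35  # reject washed-out colors such as pale beige
VAL_MIN = 0.30  # reject very dark pixels


def is_yellow(r, g, b):
    """Return True if an 8-bit RGB pixel falls inside the yellow HSV box."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return HUE_MIN <= h <= HUE_MAX and s >= SAT_MIN and v >= VAL_MIN


def yellow_fraction(pixels):
    """Fraction of (r, g, b) pixels classified as yellow."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    return sum(is_yellow(*p) for p in pixels) / len(pixels)


def looks_like_banana(pixels, min_fraction=0.10):
    """Crude guess: 'banana' if at least min_fraction of pixels are yellow."""
    return yellow_fraction(pixels) >= min_fraction
```

A saturation floor rejects some pale beige, but a warmer or more saturated wall, or a change in lighting, can push pixels across these fixed boundaries. That is why a pure color threshold was so unreliable.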

Then I went back to my computer vision idea and decided it would be OK to run an ML model on my computer, with the ESP32 connected to it and sending the image in. I found a segmentation model that includes bananas among the classes in its pretrained weights, so I started using that.
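Whatever model is used, its output still has to be turned into a yes/no answer for the ESP32. The sketch below assumes the detections have already been converted into (label, confidence) pairs. This is a hypothetical format, for example built from a COCO-trained model, since COCO's class list includes "banana". The 0.5 threshold is also an assumption.

```python
from typing import Iterable, Tuple

# Hypothetical detection format: (class label, confidence in [0, 1]).
Detection = Tuple[str, float]


def banana_seen(detections: Iterable[Detection], threshold: float = 0.5) -> bool:
    """True if any detection is labeled 'banana' with confidence >= threshold."""
    return any(label == "banana" and conf >= threshold for label, conf in detections)
```

Raising the threshold trades missed bananas for fewer false alarms on yellow-ish objects.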

Then came the part about sending the image. I wanted to create a server running on my computer using Python and http.server. I made the server handle POST requests, run detection on the image, and write a response back to the ESP32 telling it whether or not there was a banana.

This kinda worked, but the detection was still pretty meh and it took a while. I decided to see if there was a better vision model I could use. Yuval recommended YOLO and it came up a lot in my googling, so I decided to give it a go. After looking through various tutorials, I found an example, made a few changes, and added it to my server code; this ended up being basically just out-of-the-box YOLOv5. It worked well and was faster, but it didn't seem much more accurate. Then I realized that in many cases the bottleneck had been sending the actual image from the ESP32 to the server, because it's a lot of bytes.
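One simple way to confirm where the time goes is to time each stage of the request separately. The sketch below is a small, generic timing helper built on time.perf_counter. The stage names, and the idea of wrapping the body read and the model call in a server handler, are assumptions for illustration.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, results):
    """Record the wall-clock duration of the with-block in results[label]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start


# Example usage inside a handler (hypothetical stage names):
#   stages = {}
#   with timed("receive", stages):
#       image = self.rfile.read(length)
#   with timed("detect", stages):
#       found = detect_banana(image)
#   print(stages)
```

If "receive" dominates, a faster model won't help much. That matches what I eventually found with YOLOv5.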

Then I tried to figure out how to improve the networking part, which meant looking a little ahead at networking week. The first thing I suspected was that I was reconnecting to the server every time I wanted to send a message. I came up with a few ideas to solve this. The first was to make the TCP connection persistent so it wouldn't disconnect and reconnect every time. This kinda worked, but at times I had trouble getting it to connect at all (probably because I didn't close the connection correctly).
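With a persistent connection, many images share one TCP stream, so the receiver needs a way to tell where one image ends and the next begins. The sketch below assumes a 4-byte big-endian length prefix before each message. That framing is my choice for illustration, not necessarily what the ESP32 code does, and the Python client side stands in for the ESP32.

```python
import socket
import struct

HEADER = struct.Struct(">I")  # assumed framing: 4-byte big-endian length prefix


def recv_exact(sock, n):
    """Read exactly n bytes, or raise ConnectionError if the peer closes early."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed connection")
        buf.extend(chunk)
    return bytes(buf)


def send_frame(sock, payload):
    """Send one length-prefixed message."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_frame(sock):
    """Receive one length-prefixed message."""
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, length)


def serve_one_client(conn, detect):
    """Answer many images over one connection until the client disconnects."""
    with conn:  # always close our end, even if the client vanished
        while True:
            try:
                image = recv_frame(conn)
            except ConnectionError:
                break  # client went away; the caller can accept() a new one
            send_frame(conn, b"1" if detect(image) else b"0")
```

The with-block makes sure the server closes its end when the client leaves. Connections left half-open are one plausible reason reconnects can fail intermittently.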

At this point I started questioning whether TCP was the right way to go at all, so I explored WebSockets and UDP. I tried an Arduino WebSocket library I found but couldn't really get it working. Then I dug through the HTMAA site a little more and took some inspiration from the UDP examples to send messages over UDP instead. The main drawback of UDP is packet loss, which I thought wouldn't be a problem because a skipped frame is not important. However, the ESP32 has an MTU of 1500, which meant I could only send packets with a maximum size of 1436 bytes. So to send a whole image, I would have to stitch the packets together.
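To stitch packets back together, each datagram has to say which frame it belongs to and where its bytes go. Here is a minimal sketch of splitting an image into datagrams of at most 1436 bytes and reassembling them. The 6-byte header (frame id, packet index, packet count) is an assumed layout for illustration.

```python
import math
import struct

MAX_DATAGRAM = 1436                 # payload limit quoted above
HEADER = struct.Struct(">HHH")      # assumed: frame id, packet index, packet count
CHUNK = MAX_DATAGRAM - HEADER.size  # image bytes carried per packet


def packetize(frame_id, image):
    """Split an image into numbered datagrams no larger than MAX_DATAGRAM."""
    count = max(1, math.ceil(len(image) / CHUNK))
    fid = frame_id & 0xFFFF  # keep the id within 16 bits
    return [
        HEADER.pack(fid, i, count) + image[i * CHUNK:(i + 1) * CHUNK]
        for i in range(count)
    ]


def reassemble(packets, frame_id):
    """Rebuild one frame from packets in any order.

    Returns (image, missing): image is None if any packet index is missing.
    Packets belonging to other frames are ignored."""
    chunks, count = {}, None
    for pkt in packets:
        fid, index, total = HEADER.unpack_from(pkt)
        if fid != (frame_id & 0xFFFF):
            continue
        chunks[index], count = pkt[HEADER.size:], total
    if count is None:
        return None, []
    missing = [i for i in range(count) if i not in chunks]
    if missing:
        return None, missing
    return b"".join(chunks[i] for i in range(count)), []
```

Returning the list of missing indices, instead of pasting whatever arrived into a buffer, is what separates a dropped frame from a stripey one.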

I tried a myriad of approaches to reassembling the packets. I started by adding a packet number as a header, then used that number to figure out which bytes of the image each packet corresponded to and wrote them into place as the packets were received. This led to stripey images, because some packets would be received and others not. I tried resending each packet a few times and sending the packets in a random order; some of this helped, but I still couldn't get a good image. Then I tried a request/response structure where the server would say "give me packet 5" and the client would send packet 5. The client would only read a new frame when it was asked for packet 0. This gave me good images but was still slow, with a round trip time of ~5 s.
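The request/response scheme can be sketched with plain UDP sockets. In this sketch, camera_loop stands in for the ESP32 and fetch_frame is the server. Several details are assumptions: the 2-byte request, the 4-byte reply header (index, total count), the STOP code, and the retry count. The one-request-per-packet round trip is what makes it reliable, and also what makes it slow.

```python
import math
import socket
import struct

REQUEST = struct.Struct(">H")   # assumed: server asks for one packet index
REPLY = struct.Struct(">HH")    # assumed: packet index, total packet count
CHUNK = 1436 - REPLY.size       # stay within the 1436-byte limit above
STOP = 0xFFFF                   # hypothetical "shut down" request


def camera_loop(sock, get_frame):
    """ESP32 stand-in: answer 'give me packet i'; packet 0 grabs a new frame."""
    image = b""
    while True:
        data, addr = sock.recvfrom(REQUEST.size)
        (index,) = REQUEST.unpack(data)
        if index == STOP:
            return
        if index == 0:
            image = get_frame()
        count = max(1, math.ceil(len(image) / CHUNK))
        chunk = image[index * CHUNK:(index + 1) * CHUNK]
        sock.sendto(REPLY.pack(index, count) + chunk, addr)


def fetch_frame(sock, camera_addr, retries=5):
    """Server side: request packets one at a time, retrying on timeout.

    sock must have a timeout set (sock.settimeout) for retries to trigger."""
    chunks, count, index = {}, 1, 0
    while index < count:
        for _ in range(retries):
            sock.sendto(REQUEST.pack(index), camera_addr)
            try:
                data, _ = sock.recvfrom(REPLY.size + CHUNK)
            except socket.timeout:
                continue  # request or reply lost: ask again
            got, total = REPLY.unpack_from(data)
            if got == index:  # ignore stale replies to earlier requests
                chunks[index], count = data[REPLY.size:], total
                break
        else:
            raise TimeoutError(f"packet {index} never arrived")
        index += 1
    return b"".join(chunks[i] for i in range(count))
```

Every packet costs a full round trip, so total time grows with the number of packets times the network latency. A persistent TCP stream avoids paying that cost per packet.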

So I went back to TCP, made some tweaks, and got it working somewhat better. ESP32 client code can be found here and server code is here.

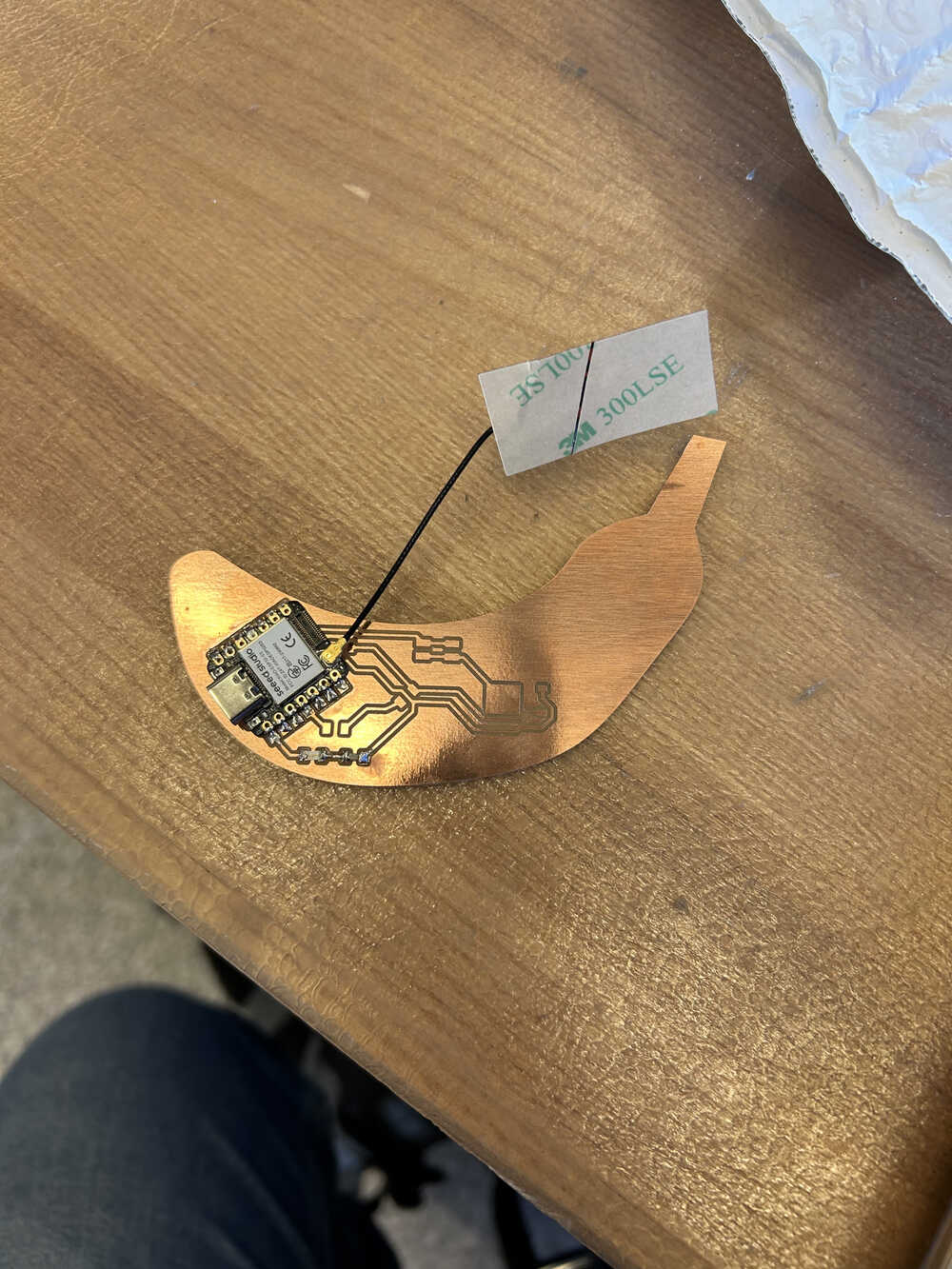

Also, sometime in the middle of all this struggle, I designed and produced a banana-shaped PCB for my ESP32. I added an LED that turns on when a banana is seen.

Left: the milled board. Right: the board with the ESP32-S3, LED, and resistor soldered on.

I'm also planning to use this board next week for output devices, so I left a spot to add a speaker.

It kinda works! The LED turns on when it sees the banana and off when the banana goes away. I didn't wait quite long enough before pausing the video, but I promise it went off.

my conclusion for the week: embedded-programming + networking + computer-vision + perfectionism = pain.