HOW TO DOT ALMOST ANYTHING

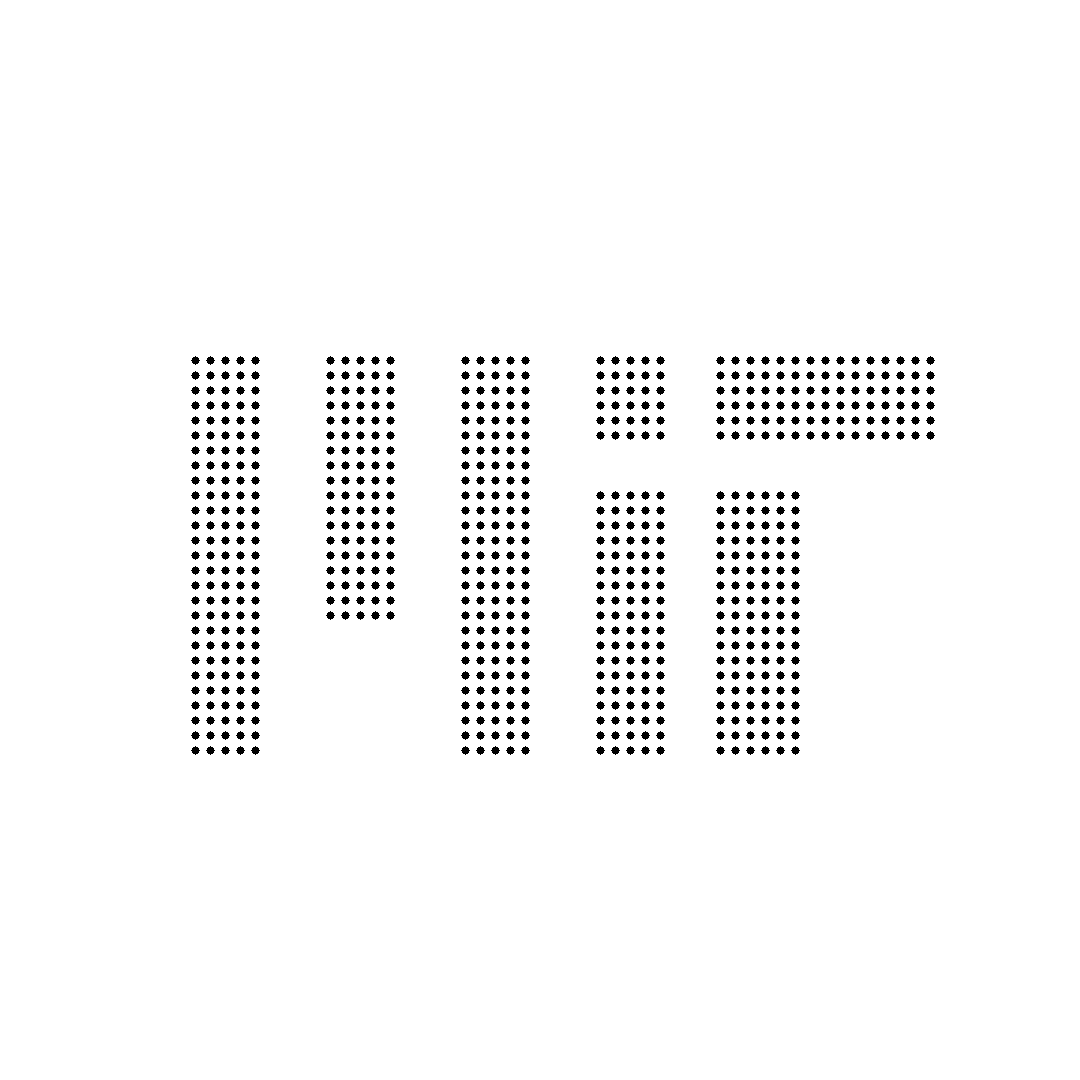

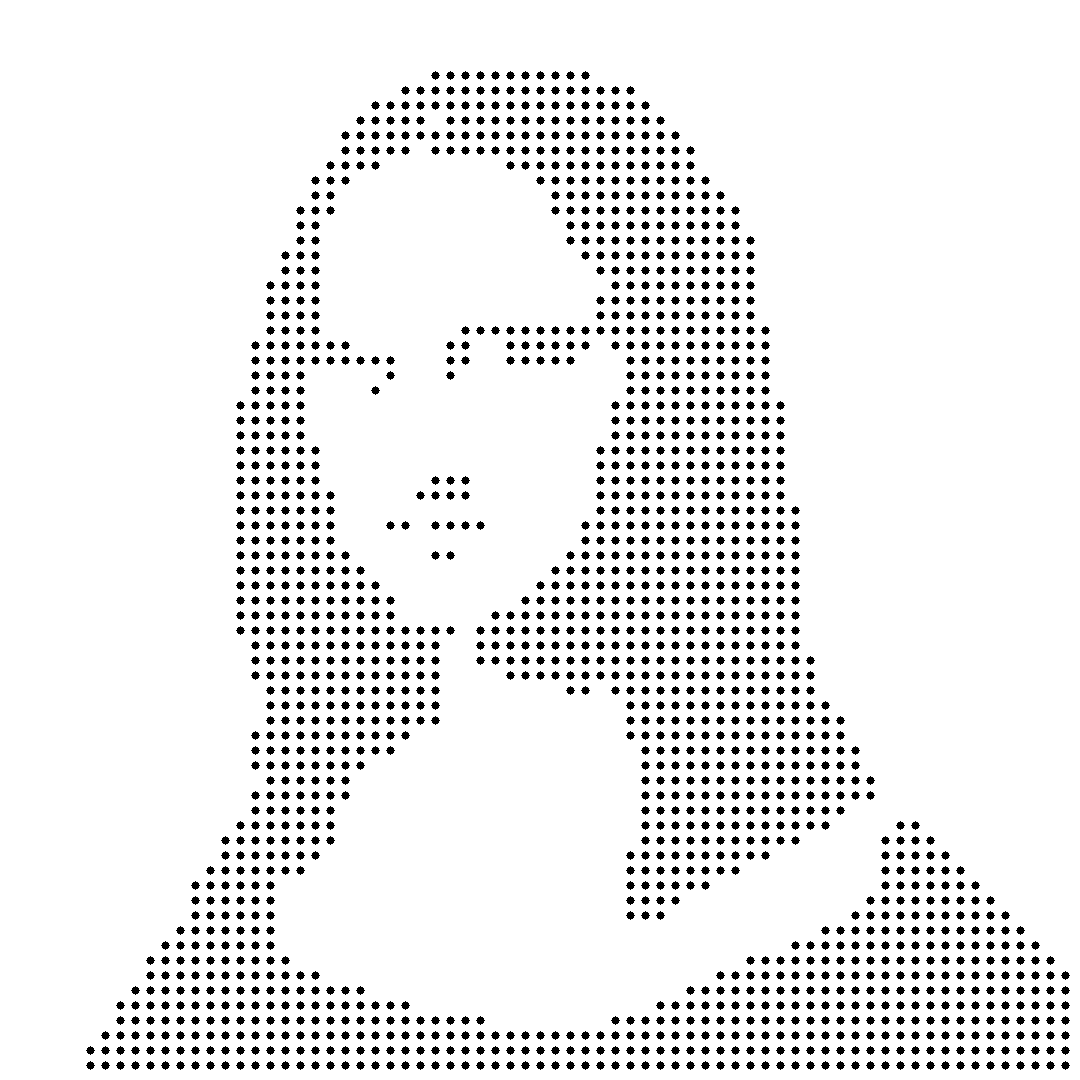

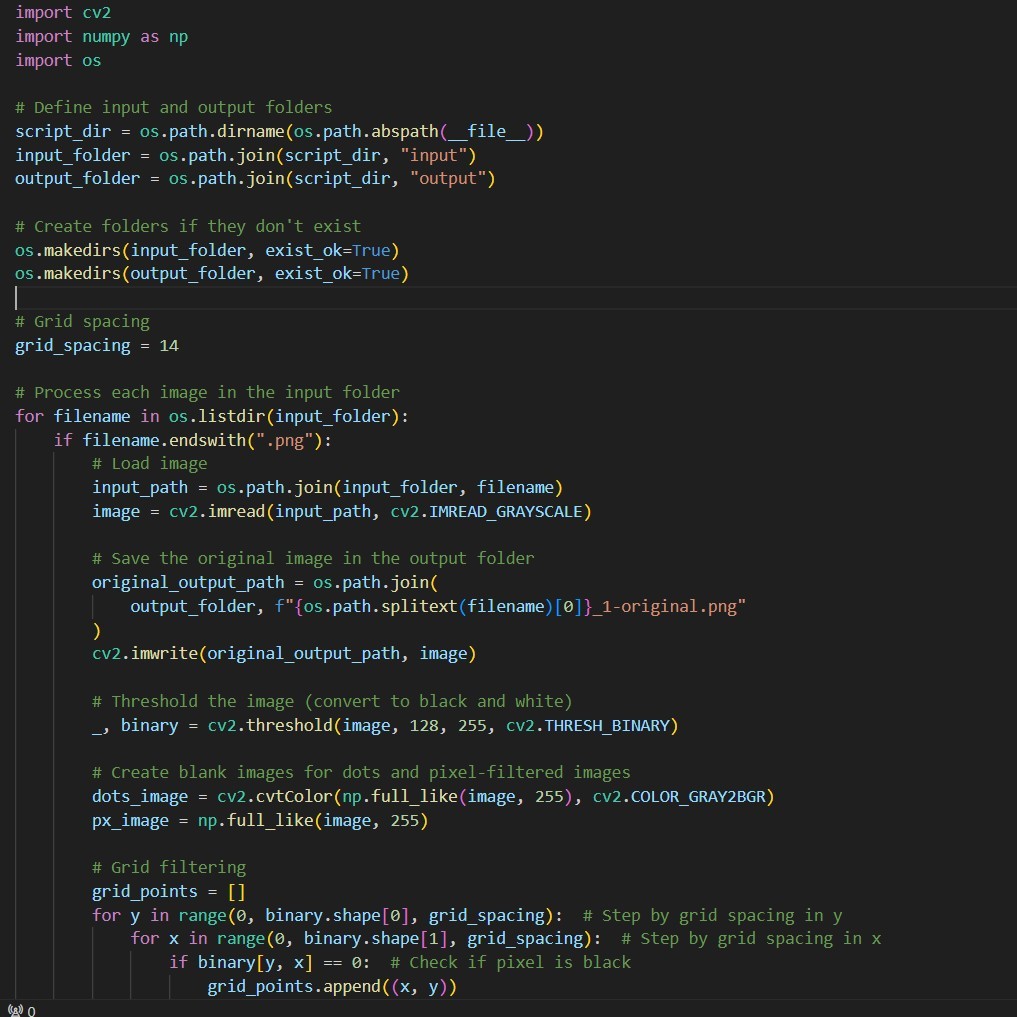

01 - Image to Px Coordinates

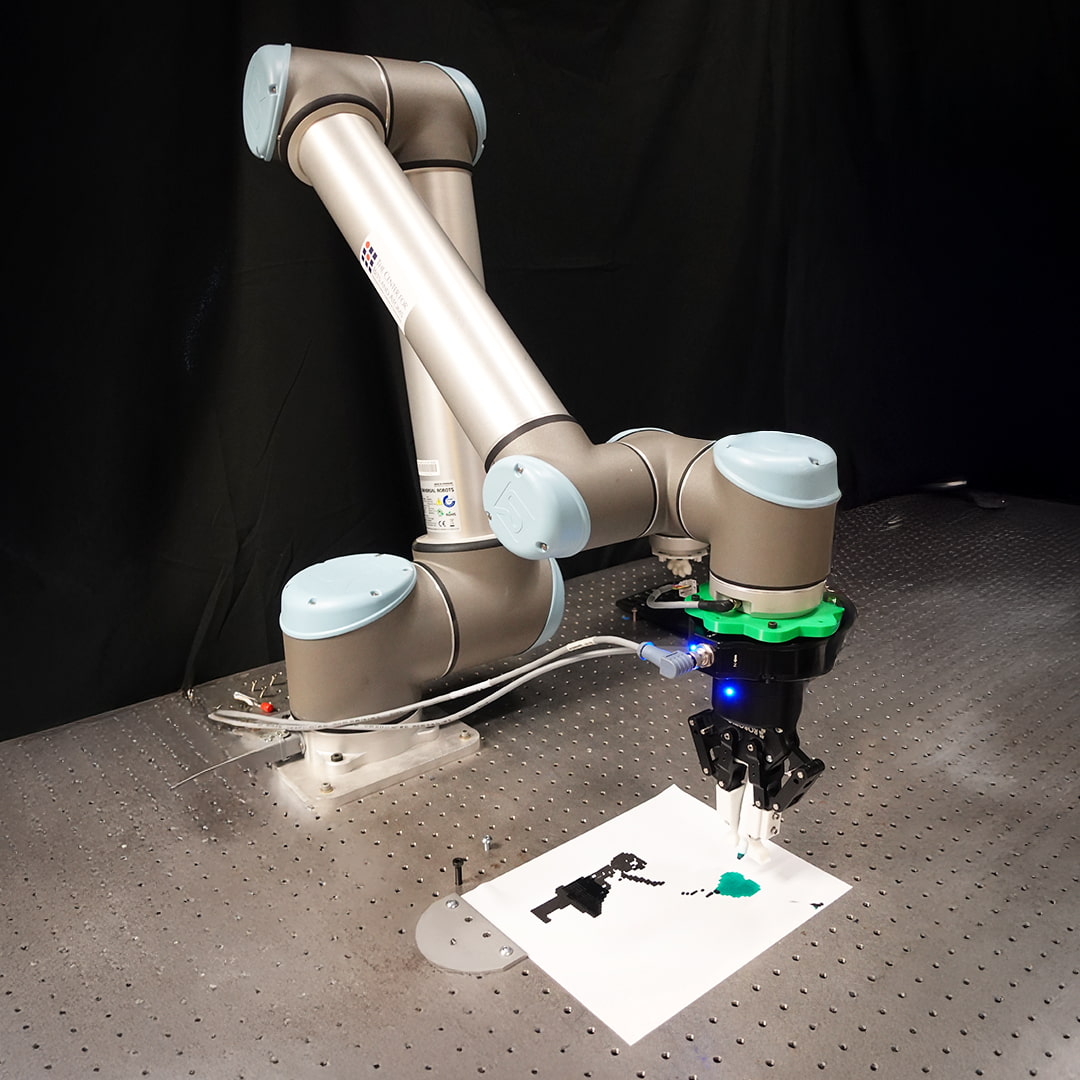

For wildcard week, I joined forces with Wang Shi Ray. We developed a workflow that takes a digital image and makes the UR10 robotic arm draw it as dots on a sheet of paper.

First, we created a script that takes in an image, extracts the x and y px positions of all pixels whose opacity is 50% or more, and filters those pixels at a constant rate to get a grid of separated pixels in the dark areas of the image.
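The filtering step can be sketched roughly as follows. This is a minimal illustration, not our actual script: the function name `sample_opaque_pixels`, the `step` parameter, and the choice to read "constant rate" as "every `step`-th row and column" are assumptions. The alpha channel is passed in as a plain list of rows with values from 0 to 255, so loading the image itself (for example with an imaging library) is left out.

```python
def sample_opaque_pixels(alpha_rows, threshold=128, step=4):
    """Return (x, y) px coordinates, sampled on a regular grid,
    where the alpha value is at least `threshold`.

    alpha_rows: list of rows, each a list of alpha values (0-255).
    threshold:  128 is the smallest 8-bit value that is >= 50% opacity.
    step:       hypothetical sampling interval in pixels (assumption).
    """
    points = []
    for y in range(0, len(alpha_rows), step):
        row = alpha_rows[y]
        for x in range(0, len(row), step):
            if row[x] >= threshold:
                points.append((x, y))
    return points


# Tiny 4x4 example: only every 2nd row and column is inspected.
alpha = [
    [255, 0, 255, 0],
    [0, 0, 0, 0],
    [255, 255, 0, 0],
    [0, 0, 0, 0],
]
print(sample_opaque_pixels(alpha, step=2))  # [(0, 0), (2, 0), (0, 2)]
```

A larger `step` gives fewer, more widely spaced dots, which shortens the drawing time at the cost of detail.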

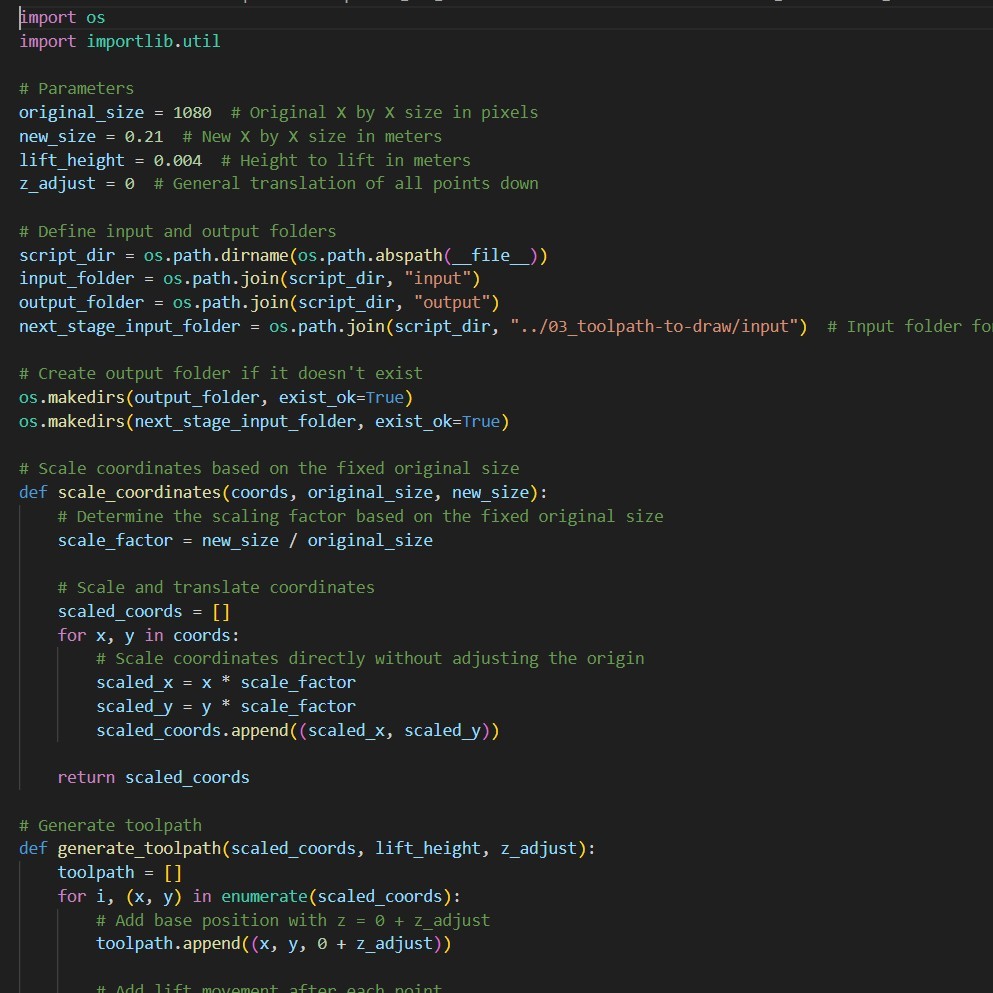

02 - Px Coordinates to Toolpath Positions

Then, we developed a second script that takes in the filtered px coordinates output by the previous script, scales them to the size of the real drawing surface (in this case a sheet of paper), and adds in-between coordinates with a higher z value (so that the robot does not draw a line between points), effectively creating the toolpath coordinates for the UR10.
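As a rough sketch of this step, assuming each dot becomes three waypoints (hover above, touch down, lift back up): the function name `pixels_to_toolpath`, the A4 paper size in meters, and the `z_up` and `z_down` heights are illustrative assumptions, not the values we used. The image y axis is kept as-is rather than flipped.

```python
def pixels_to_toolpath(points, image_size, paper_size=(0.210, 0.297),
                       z_down=0.0, z_up=0.02):
    """Scale px coordinates to paper coordinates (meters) and add
    raised waypoints so the pen lifts between dots.

    points:     list of (x, y) px coordinates.
    image_size: (width, height) of the source image in pixels.
    paper_size: (width, height) of the paper in meters (A4 assumed).
    z_down:     pen height when touching the paper (assumption).
    z_up:       pen height when travelling between dots (assumption).
    """
    img_w, img_h = image_size
    paper_w, paper_h = paper_size
    # One uniform scale factor keeps the image's aspect ratio.
    scale = min(paper_w / img_w, paper_h / img_h)

    path = []
    for px, py in points:
        x, y = px * scale, py * scale
        path.append((x, y, z_up))    # arrive above the dot
        path.append((x, y, z_down))  # touch the paper
        path.append((x, y, z_up))    # lift before moving on
    return path


dots = [(0, 0), (100, 50)]
for waypoint in pixels_to_toolpath(dots, image_size=(100, 100),
                                   paper_size=(0.2, 0.3)):
    print(waypoint)
```

Because every dot ends on a raised waypoint and the next one starts on a raised waypoint, the travel between dots always happens above the paper.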

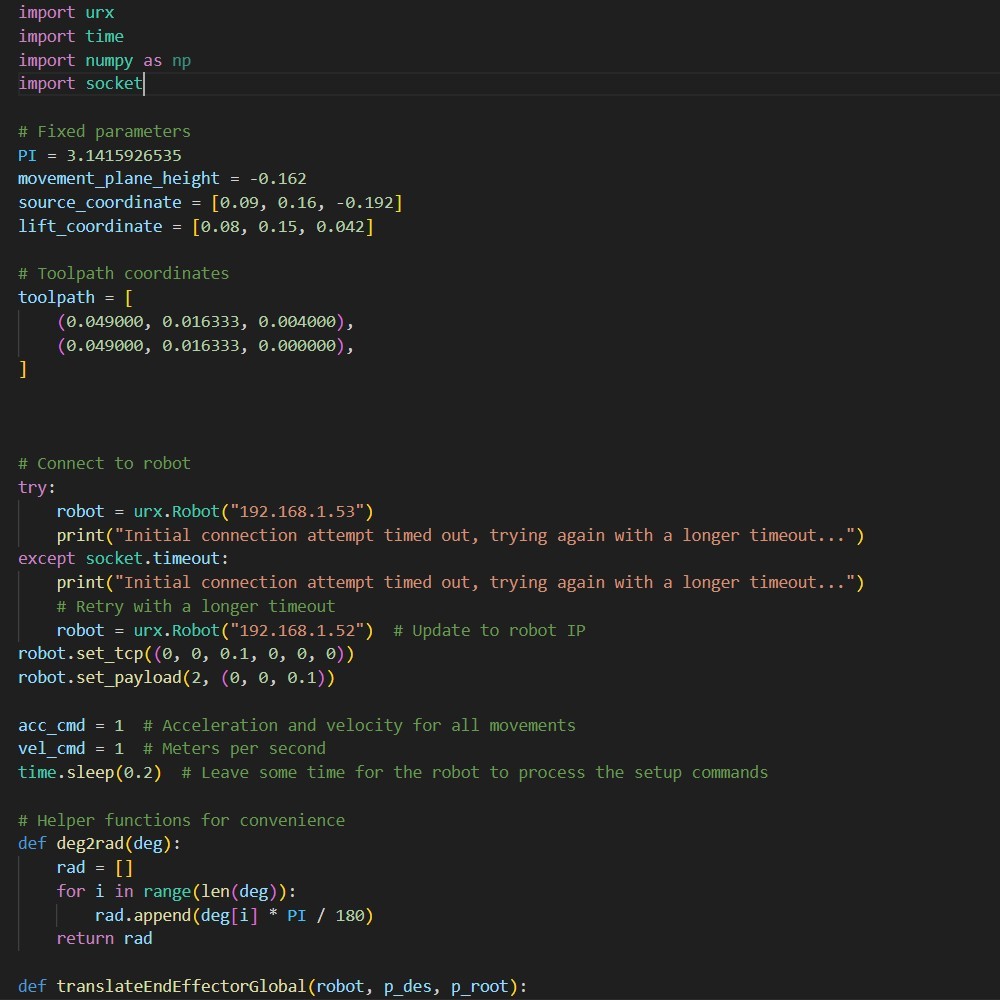

03 - Toolpath Positions to Robot Control

Finally, we took the base script provided by Alex Kyaw, added our list of coordinates for linear movement, installed the gripper on the UR10, measured the distance from the center of the arm to the tip of the pen, and added a z-adjust distance to process our coordinates. With this, we could run the script and watch the UR10 dot any image we fed it onto the paper.
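The base script itself is not reproduced here, so the following is only a sketch of how a z-adjust could be applied and how waypoints could be turned into linear moves. It formats each waypoint as a URScript `movel` command string and does not talk to a robot. The function name `to_urscript_moves`, the paper origin, the pen length, the tool orientation, and the speed and acceleration values are all placeholder assumptions that would need to be measured and checked on the real setup.

```python
def to_urscript_moves(path, origin=(0.4, -0.2, 0.0), pen_length=0.15,
                      orientation=(0.0, 3.1416, 0.0), accel=0.5, speed=0.1):
    """Format toolpath waypoints as URScript movel command strings.

    path:        list of (x, y, z) waypoints in meters, relative to the paper.
    origin:      paper corner in the robot base frame (assumption).
    pen_length:  z-adjust so the pen tip, not the tool flange,
                 reaches the waypoint height (assumption).
    orientation: rotation vector for a tool pointing down (assumption).
    """
    ox, oy, oz = origin
    rx, ry, rz = orientation
    commands = []
    for x, y, z in path:
        tx = ox + x
        ty = oy + y
        tz = oz + z + pen_length  # raise the flange by the pen length
        commands.append(
            f"movel(p[{tx:.4f}, {ty:.4f}, {tz:.4f}, "
            f"{rx:.4f}, {ry:.4f}, {rz:.4f}], a={accel}, v={speed})"
        )
    return commands


example_path = [(0.0, 0.0, 0.02), (0.0, 0.0, 0.0), (0.0, 0.0, 0.02)]
for command in to_urscript_moves(example_path):
    print(command)
```

Running the motion slowly at first, with the pen raised well above the paper, is a sensible way to check the offsets before letting the pen touch down.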