week 13

interface and application programming

The assignment for this week was to write an application that interfaces a user with an input and/or output device that you made.

I wanted to keep things fairly simple this week, as I'm traveling for a conference and am away from the lab. I had no idea what I wanted to do for this assignment, so I spent several hours tinkering with the tools (specifically graphics and interfaces) provided as examples on the HTMAA website instead of making any real progress or decisions. Normally that isn't an issue (and is possibly a good thing), but the timing wasn't great since I'd be away the entire week.

In the end, I decided to use the existing webcam on my Mac to program a motion detector and some way of visualizing said motion. This isn't particularly relevant to my final project (read: it is not relevant at all), but I figured using the built-in webcam would simplify things with limited resources while still satisfying the input requirement of this assignment, per the inputs page.

For turning video and audio input into output, we were given many example tools: SDL, Pygame, PySDL2, openFrameworks, ofpython, TouchDesigner, and SuperCollider, among others. I decided to go with Pygame.

I first started off with something simple in VS Code: I wanted to create an interface that would mirror the motion detected from the camera.

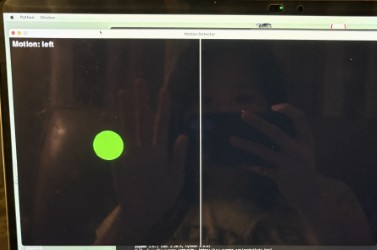

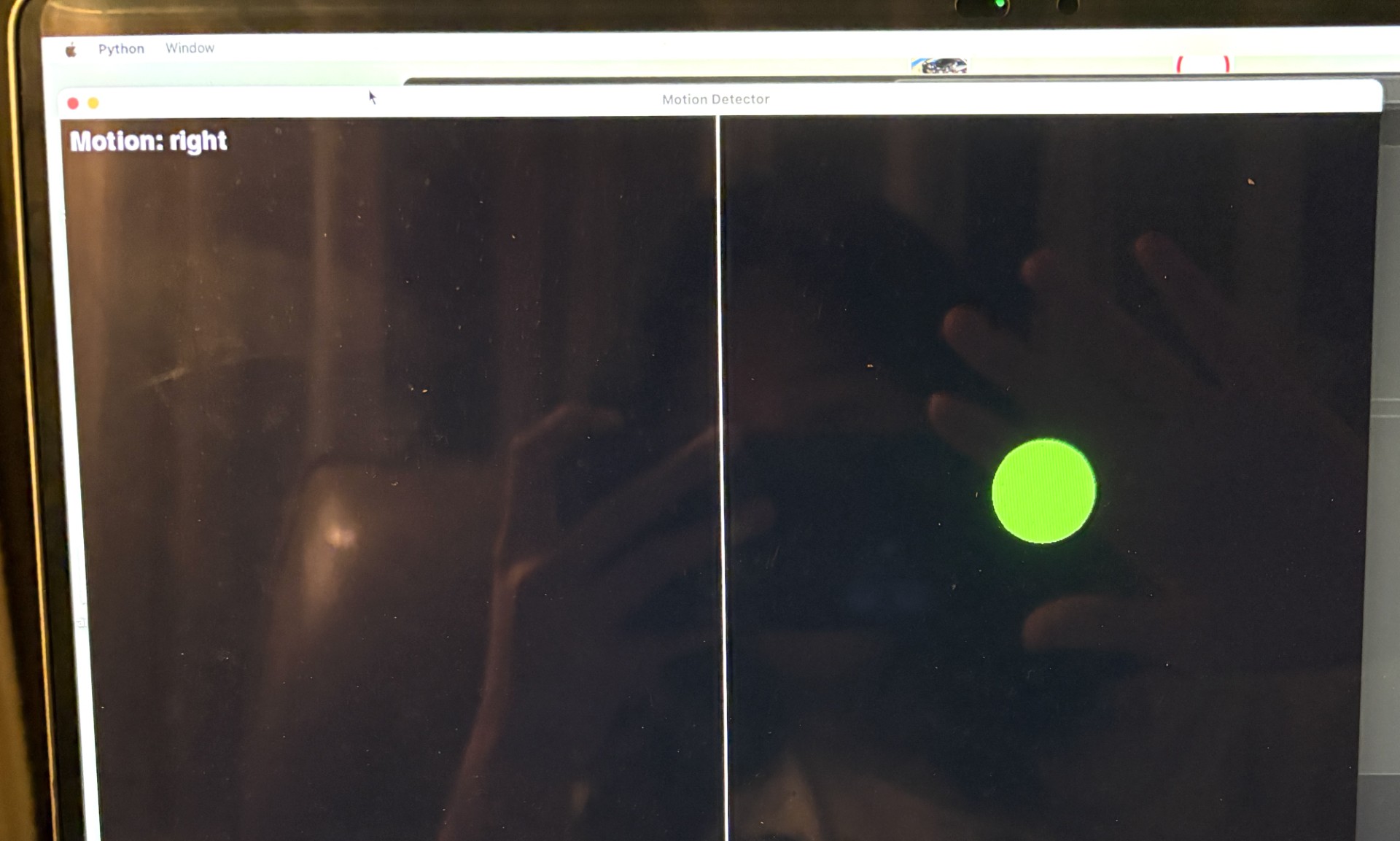

The idea was to take webcam input (the motion data) and process it in real time using OpenCV. The output would be an interactive visualization, drawn with Pygame, that gives real-time feedback through visual elements (for now, geometric shapes), text updates, and a screen divided into left and right halves.

I've copied my code below:

```python
import sys

import cv2
import numpy as np
import pygame
from pygame import gfxdraw


class GestureController:
    def __init__(self):
        # 1. Initialize webcam
        self.cap = cv2.VideoCapture(0)  # Use default webcam (usually built-in)
        if not self.cap.isOpened():
            print("Error: Could not open webcam")
            sys.exit()

        # Get webcam frame size (bail out if the first read fails)
        ret, frame = self.cap.read()
        if not ret:
            print("Error: Could not read from webcam")
            self.cap.release()
            sys.exit()
        self.height, self.width = frame.shape[:2]

        # 2. Initialize Pygame for visualization
        pygame.init()
        self.screen = pygame.display.set_mode((self.width, self.height))
        pygame.display.set_caption("Motion Detector")

        # 3. Setup variables for motion detection
        self.prev_frame = None
        self.motion_threshold = 30  # Adjust this value based on your needs

        # Colors
        self.BLACK = (0, 0, 0)
        self.WHITE = (255, 255, 255)
        self.RED = (255, 0, 0)
        self.GREEN = (0, 255, 0)
        self.BLUE = (0, 0, 255)

    def process_frame(self):
        # 1. Capture frame
        ret, frame = self.cap.read()
        if not ret:
            return None, None

        # 2. Mirror frame (so movements are more intuitive)
        frame = cv2.flip(frame, 1)

        # 3. Convert to grayscale
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        # 4. Blur the image to reduce noise
        gray = cv2.GaussianBlur(gray, (21, 21), 0)

        # Initialize motion direction
        motion_direction = "none"

        # 5. Compare with previous frame
        if self.prev_frame is not None:
            # Calculate difference between frames
            frame_diff = cv2.absdiff(self.prev_frame, gray)

            # Apply threshold to get regions of significant movement
            thresh = cv2.threshold(frame_diff, self.motion_threshold, 255, cv2.THRESH_BINARY)[1]

            # Divide frame into left and right halves
            left_sum = np.sum(thresh[:, :self.width // 2])
            right_sum = np.sum(thresh[:, self.width // 2:])

            # Determine direction based on which half has more motion
            if left_sum > 10000 and left_sum > right_sum * 1.5:  # Adjust these values as needed
                motion_direction = "left"
            elif right_sum > 10000 and right_sum > left_sum * 1.5:
                motion_direction = "right"

        # 6. Store current frame for next iteration
        self.prev_frame = gray

        return frame, motion_direction

    def draw_visualization(self, motion_direction):
        # 1. Clear screen
        self.screen.fill(self.BLACK)

        # 2. Draw dividing line
        pygame.draw.line(self.screen, self.WHITE,
                         (self.width // 2, 0),
                         (self.width // 2, self.height),
                         2)

        # 3. Draw circles based on motion
        if motion_direction == "left":
            # Draw filled circle on left side
            gfxdraw.filled_circle(self.screen,
                                  self.width // 4, self.height // 2,
                                  50, self.GREEN)
        elif motion_direction == "right":
            # Draw filled circle on right side
            gfxdraw.filled_circle(self.screen,
                                  3 * self.width // 4, self.height // 2,
                                  50, self.GREEN)

        # 4. Add text showing current direction
        font = pygame.font.Font(None, 36)
        text = font.render(f"Motion: {motion_direction}", True, self.WHITE)
        self.screen.blit(text, (10, 10))

        # 5. Update display
        pygame.display.flip()

    def run(self):
        try:
            while True:
                # 1. Check for quit event (window close or the Q key)
                for event in pygame.event.get():
                    if event.type == pygame.QUIT:
                        return
                    if event.type == pygame.KEYDOWN:
                        if event.key == pygame.K_q:
                            return

                # 2. Process frame and get motion direction
                frame, motion_direction = self.process_frame()
                if frame is None:
                    break

                # 3. Update visualization
                self.draw_visualization(motion_direction)

                # 4. Control frame rate
                pygame.time.delay(30)
        finally:
            # Clean up, even when returning early from the loop
            self.cap.release()
            pygame.quit()


if __name__ == "__main__":
    controller = GestureController()
    controller.run()
```

The motion detection was actually much more sensitive than I'd expected! (Please excuse the extremely blurry photos and the gross computer screen...)
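One plausible contributor to that sensitivity: the thresholded image stores 255 for every changed pixel, so the `10000` cutoff in `process_frame` is crossed once roughly 40 pixels in one half change (10000 / 255 ≈ 39.2). Below is a minimal sketch of the same left/right decision with the cutoff expressed as a fraction of each half's pixels instead. It assumes NumPy only and uses synthetic frames in place of the webcam; `detect_direction` and its `min_fraction` parameter are hypothetical names that are not part of my code above, and the blur step is left out.

```python
import numpy as np


def detect_direction(prev_gray, gray, diff_threshold=30, min_fraction=0.01, ratio=1.5):
    """Classify motion as "left", "right", or "none" from two grayscale frames.

    min_fraction is a hypothetical knob: the share of pixels in one half
    that must change before any motion is reported.
    """
    # Widen to int16 so the subtraction cannot wrap around in uint8.
    diff = np.abs(gray.astype(np.int16) - prev_gray.astype(np.int16))
    # Boolean mask of changed pixels (same strict ">" rule as THRESH_BINARY).
    changed = diff > diff_threshold

    mid = gray.shape[1] // 2
    left = changed[:, :mid].mean()   # fraction of changed pixels, left half
    right = changed[:, mid:].mean()  # fraction of changed pixels, right half

    if left > min_fraction and left > right * ratio:
        return "left"
    if right > min_fraction and right > left * ratio:
        return "right"
    return "none"


# Synthetic 480x640 frames: a bright 100x100 patch appears in the left half.
prev_frame = np.zeros((480, 640), dtype=np.uint8)
frame = prev_frame.copy()
frame[200:300, 100:200] = 200
print(detect_direction(prev_frame, frame))
```

Working with a fraction rather than a raw sum keeps the cutoff meaningful if the camera resolution changes. The 1% default is a guess and would need tuning against a real webcam feed.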

Here's a video of it in motion (ft. the unmistakable hotel carpet in the background):

If I have time later, I'd like to add more to the visualization portion of this assignment: I think 'drawing' something virtually via motioning into a camera would be cool, or simply adding different types of colors and shapes depending on the type or velocity of the motion could also work to make the output more interesting.
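As a starting point for the velocity idea, one option is to treat the fraction of pixels that changed between frames as a rough proxy for motion speed and map it to a color. This is only a sketch under that assumption: `motion_to_color` and its `full_scale` parameter are hypothetical names, and the blue-to-red ramp is an arbitrary choice.

```python
def motion_to_color(fraction, full_scale=0.2):
    """Map a motion amount to an (R, G, B) tuple.

    fraction: share of pixels that changed between frames (an assumed
    proxy for speed). full_scale: hypothetical value treated as
    "maximum" motion; anything above it saturates to red.
    """
    # Clamp to [0, 1] so out-of-range input still yields a valid color.
    t = max(0.0, min(1.0, fraction / full_scale))
    # Linear ramp from blue (slow) to red (fast).
    return (round(255 * t), 0, round(255 * (1 - t)))


for amount in (0.0, 0.1, 0.5):
    print(amount, motion_to_color(amount))
```

The returned tuple could be passed to `gfxdraw.filled_circle` in `draw_visualization` in place of `self.GREEN`.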