This week focused on building an application that connects a user to the input and output devices I made. Rather than introducing a new system, I extended my final project by creating a web-based interface that makes the existing motion-to-light relationship visible and legible.

The physical system consists of two Seeed Studio XIAO ESP32-C3 boards communicating over Bluetooth Low Energy (BLE). One board lives inside the monolith and acts as the input: it detects motion using an RCWL-0516 radar sensor and sends a BLE notification when motion occurs. The second board lives inside the orb and acts as the output: it listens for that notification and triggers a non-blocking sunrise animation across five NeoPixel LEDs.

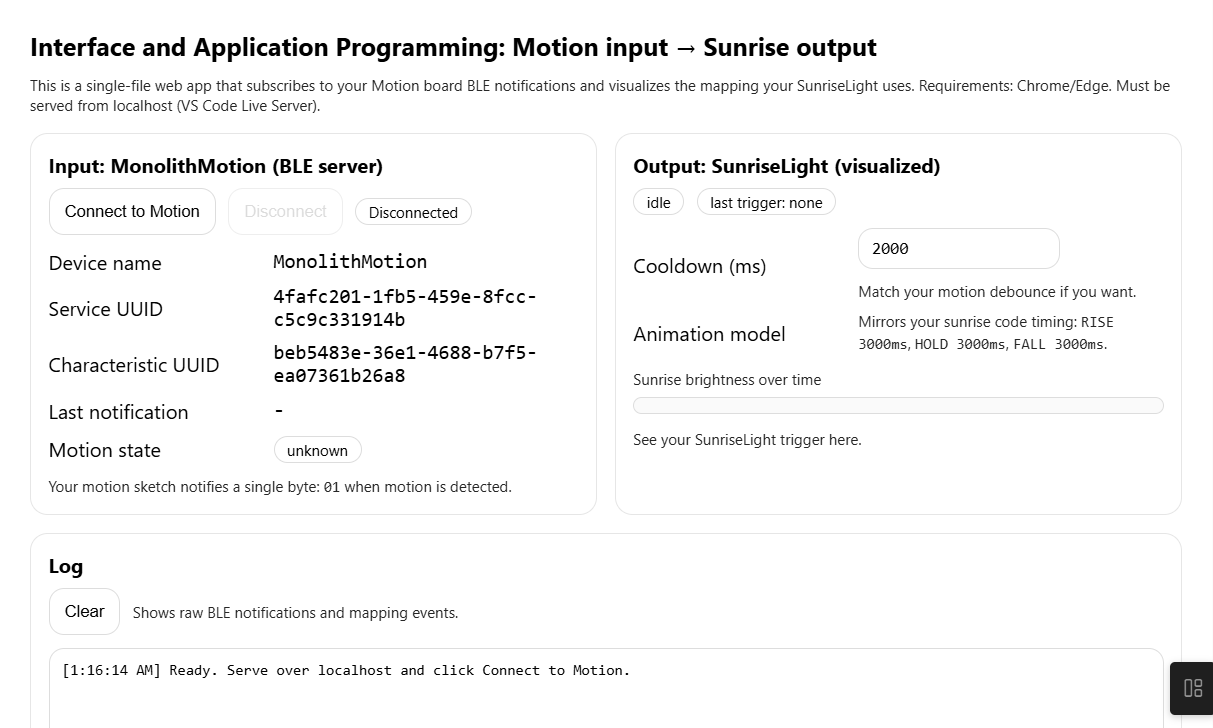

For this assignment, the application layer is a browser-based interface written in HTML, CSS, and JavaScript. The web app connects directly to the motion board using the Web Bluetooth API and subscribes to the same BLE characteristic that the orb listens to. I intentionally did not change anything in the motion or sunrise firmware.
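A minimal sketch of what the connect-and-subscribe step can look like with the Web Bluetooth API is below. The service and characteristic UUIDs are hypothetical placeholders; they would need to be replaced with the values defined in the motion board's firmware. The `bluetooth` parameter defaults to `navigator.bluetooth` and exists only so the function can be exercised with a stand-in object outside the browser.

```javascript
// Hypothetical UUIDs: replace with the ones the motion board's firmware defines.
const MOTION_SERVICE_UUID = "0000aaaa-0000-1000-8000-00805f9b34fb";
const MOTION_CHAR_UUID = "0000bbbb-0000-1000-8000-00805f9b34fb";

// Connects to the motion board and calls onNotify(dataView) for every notification.
// requestDevice must be triggered by a user gesture, such as a button click.
async function connectToMotionBoard(onNotify, bluetooth = navigator.bluetooth) {
  const device = await bluetooth.requestDevice({
    filters: [{ services: [MOTION_SERVICE_UUID] }],
  });
  const server = await device.gatt.connect();
  const service = await server.getPrimaryService(MOTION_SERVICE_UUID);
  const characteristic = await service.getCharacteristic(MOTION_CHAR_UUID);

  // Each notification arrives as a DataView on event.target.value.
  characteristic.addEventListener("characteristicvaluechanged", (event) => {
    onNotify(event.target.value);
  });
  await characteristic.startNotifications();
  return { device, characteristic };
}
```

Web Bluetooth is available in Chromium-based browsers (Chrome, Edge) and only in a secure context (HTTPS or `localhost`). Filtering by service assumes the board advertises that service UUID; if it does not, a `namePrefix` filter plus `optionalServices` is the usual alternative.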

When a motion notification is received (a single byte with a value of 0x01), the web application interprets it as an input event.
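Interpreting the payload comes down to one check on the `DataView` that Web Bluetooth delivers. This is a minimal sketch; the strict single-byte comparison is my assumption about how to treat unexpected payloads, and a looser check on just the first byte would also work.

```javascript
const MOTION_BYTE = 0x01;

// Returns true only for the one-byte motion payload 0x01; anything else is ignored.
function isMotionEvent(dataView) {
  return dataView.byteLength === 1 && dataView.getUint8(0) === MOTION_BYTE;
}
```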

That input is then mapped to a visual output in the browser, mirroring the timing and behavior of the physical sunrise.
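One way to mirror the sunrise in the browser is to describe it as a brightness envelope over time (rise, hold, fade) and sample it on every animation frame, which keeps the page responsive in the same spirit as the non-blocking firmware. The phase durations and the linear ramps below are hypothetical placeholders, not values taken from the orb firmware; they would need to be matched to the physical animation.

```javascript
// Hypothetical phase durations in milliseconds; tune to match the orb.
const SUNRISE_TIMING = { riseMs: 3000, holdMs: 2000, fadeMs: 3000 };

// Brightness in [0, 1] at a given time since the motion event (linear ramps assumed).
function sunriseLevel(elapsedMs, { riseMs, holdMs, fadeMs } = SUNRISE_TIMING) {
  if (elapsedMs < 0) return 0;
  if (elapsedMs < riseMs) return elapsedMs / riseMs; // brighten
  if (elapsedMs < riseMs + holdMs) return 1; // hold
  const fadeElapsed = elapsedMs - riseMs - holdMs;
  if (fadeElapsed < fadeMs) return 1 - fadeElapsed / fadeMs; // fade
  return 0; // finished
}

// Animates an element's opacity from the envelope without blocking the page.
function playSunrise(orbElement, timing = SUNRISE_TIMING) {
  const start = performance.now();
  const total = timing.riseMs + timing.holdMs + timing.fadeMs;
  function frame(now) {
    const elapsed = now - start;
    orbElement.style.opacity = String(sunriseLevel(elapsed, timing));
    if (elapsed < total) requestAnimationFrame(frame);
  }
  requestAnimationFrame(frame);
}
```

Keeping the envelope as a pure function of elapsed time means the same numbers can also drive a progress bar (`elapsed / total`), so every visual element stays in step with a single clock.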

The interface includes a real-time log of incoming BLE data, a simple progress visualization, and an orb-like light graphic that brightens, holds, and fades in sync with the physical LEDs. This makes the system’s behavior observable without interfering with the hardware itself.
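For the real-time log, a small bounded buffer that stores each payload as timestamped hex is enough. This is a sketch with a hypothetical `NotificationLog` class and an arbitrary default limit of 50 entries; writing with `textContent` keeps raw data from being interpreted as HTML.

```javascript
// Formats a payload as space-separated hex, e.g. "01" or "0a ff".
function toHex(dataView) {
  const bytes = [];
  for (let i = 0; i < dataView.byteLength; i++) {
    bytes.push(dataView.getUint8(i).toString(16).padStart(2, "0"));
  }
  return bytes.join(" ");
}

// Hypothetical helper: keeps only the most recent `limit` log lines.
class NotificationLog {
  constructor(limit = 50) {
    this.limit = limit;
    this.entries = [];
  }

  // Appends a timestamped hex line and drops the oldest lines past the limit.
  add(dataView, date = new Date()) {
    this.entries.push(`${date.toISOString()}  ${toHex(dataView)}`);
    if (this.entries.length > this.limit) {
      this.entries.splice(0, this.entries.length - this.limit);
    }
  }

  // Renders newest-first into an element such as a <pre>.
  render(element) {
    element.textContent = [...this.entries].reverse().join("\n");
  }
}
```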

One important constraint is that the sunrise board is a BLE client and does not expose a writable interface. Because of this, the web application does not control the LEDs directly. Instead, it acts as a readable layer that reflects the same trigger that drives the physical system.

What this assignment clarified for me is the role of an interface as an interpretive layer. The hardware already worked, but the web application makes the interaction understandable, especially when motion is subtle or when the orb is not immediately visible. The system feels less like a hidden mechanism and more like a conversation between objects, light, and a user.

Fun fact: my social media accounts are titled "audio-visual interface" as an Easter egg referencing .avi.