Week 3 - Embedded Programming

I spent this week on both the week’s assignment and my final project. We’ll look at both below.

This week’s assignment

Here is the assignment as per the course website.

group assignment:

• demonstrate and compare the toolchains and development workflows for available embedded architectures

individual assignment:

• browse through the data sheet for a microcontroller

• write and test a program for an embedded system using a microcontroller to interact (with local input &/or output devices) and communicate (with remote wired or wireless connections)

• extra credit: try different languages &/or development environments

Group Assignment

Ben, Jaqueline and I talked about different microcontrollers and ways to program them. Jaqueline told us how she’s using the Arduino IDE to program her SAMD21 board in C++. Ben is using both a Teensy 4.0 and an Adafruit Feather. He’s programming them with PlatformIO (a VS Code extension) and with CircuitPython (with the board mounted as a file system). I shared my experience with MicroPython and two ways I’ve used in the past to program ESP32 dev boards:

- Using VS Code and PyMakr (I don’t like this approach: it’s not reliable, and I spend more time debugging than programming)

- Mounting the board into WSL and using mpremote, either to work directly in the REPL on the board or to push Python files onto it. I’ll outline that approach in more detail further down.

Ben put together a PDF outlining our conversation: PDF - Group Assignment

Individual Assignment

This week was, again, a lot of fun. I made a board (or rather multiple boards) that can communicate with a web page to imitate sending messages from the Upside Down in Stranger Things. The video above gives you a first impression.

Before my time at the Media Lab, I already had experience with both soldering and embedded programming, though more with the latter than the former. I had no experience soldering very small components. So when it came to choosing a board for this week, I opted for the board with a SAMD21 footprint, as it would let me practice soldering fairly small joints.

Soldering

Admittedly, soldering Quentin’s QPad board was a bit of an emotional rollercoaster. At first I was very frustrated: I had used too much solder and essentially created one big short on each of the 4 sides of the microcontroller. It gave me an opportunity to learn how to use the desoldering wick and pump, though. For a while it felt like all I was accomplishing was soldering the desoldering wick onto the board. Eventually, I got the hang of it and finished soldering the SAMD21 microcontroller onto the board. The other components were very easy in comparison, with the exception of the micro-USB receptacle. It has a few very small contacts, but having learned from my earlier mistakes, I was able to solder it with less solder and a good amount of flux.

Quentin then helped me with flashing the required firmware onto the chip. Loading his code examples showed that the assembly of the board was successful and all components worked as expected. The initial frustration was gone and I left the lab very happy. I’m very keen on improving my soldering skills in the coming weeks and will look for opportunities to practice. Thank you, Quentin, for designing the practice board for us and helping me with questions and the final tests. Thank you, Alan, for your help with the equipment and soldering advice. I appreciate both of you.

Programming

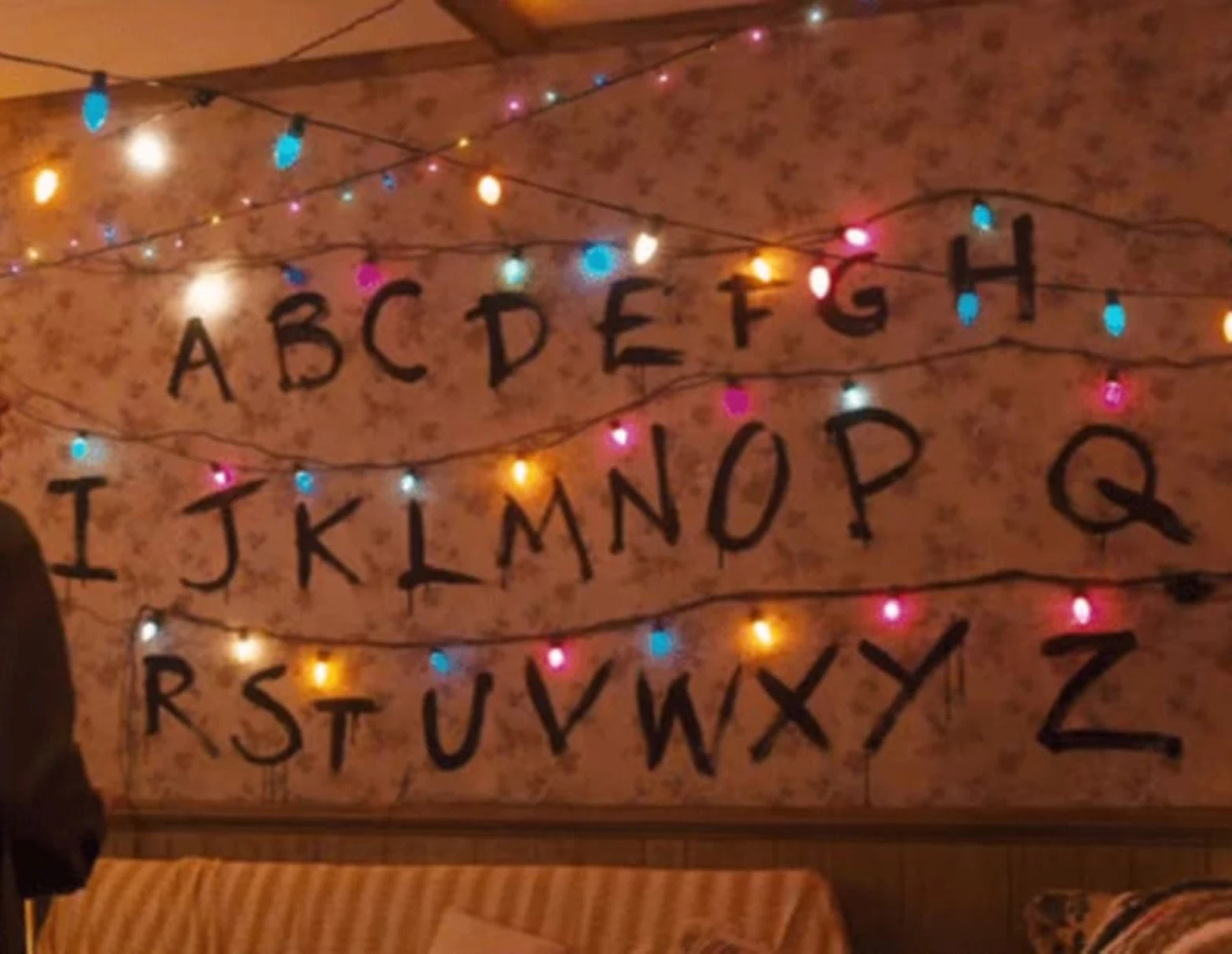

I felt much more at home when it came to programming the board. My idea for this week leans on a pop culture reference. In the first season of the show “Stranger Things”, one of the characters, Will Byers, gets lost in a parallel dimension. This place is later named “The Upside Down” by the characters in the show. His mom never gives up on him, and they find a way to communicate with each other: his mom hangs up a string of lights with a letter attached to each light bulb. Will is then able to switch on these light bulbs from the Upside Down and communicate letters and words to his mom that way.

I had hoped to use an ESP32 for this week’s assignment, but at the time of soldering none was available. The narrative of my idea is a little stronger if we imagine a wireless Bluetooth connection (ESP32s support Bluetooth; the SAMD21 I ended up using does not), but I think it still works and is a cute idea.

Imagine getting lost in the Upside Down and you could whip out your handy QPad board to communicate with your home dimension! Wouldn’t that be great?

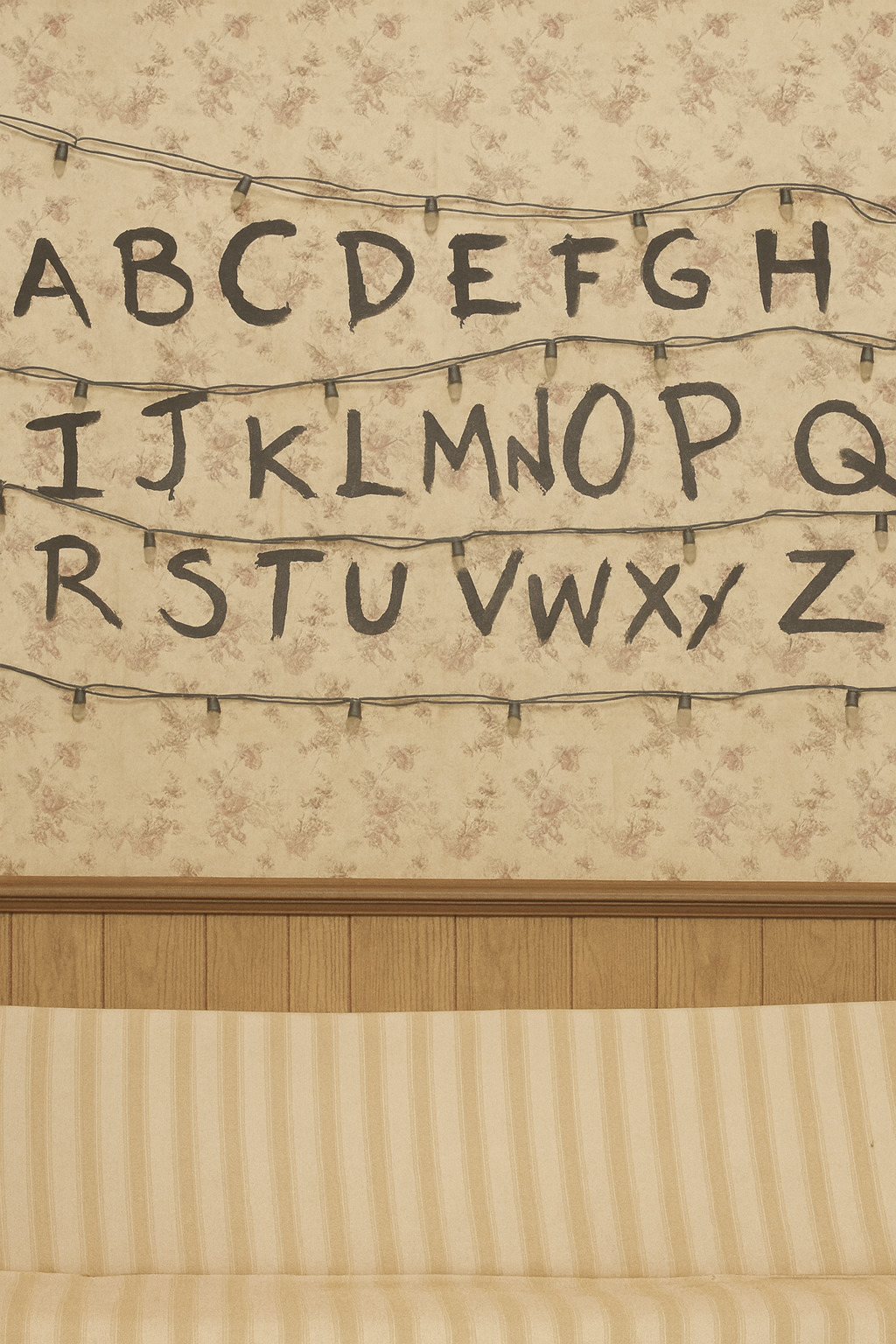

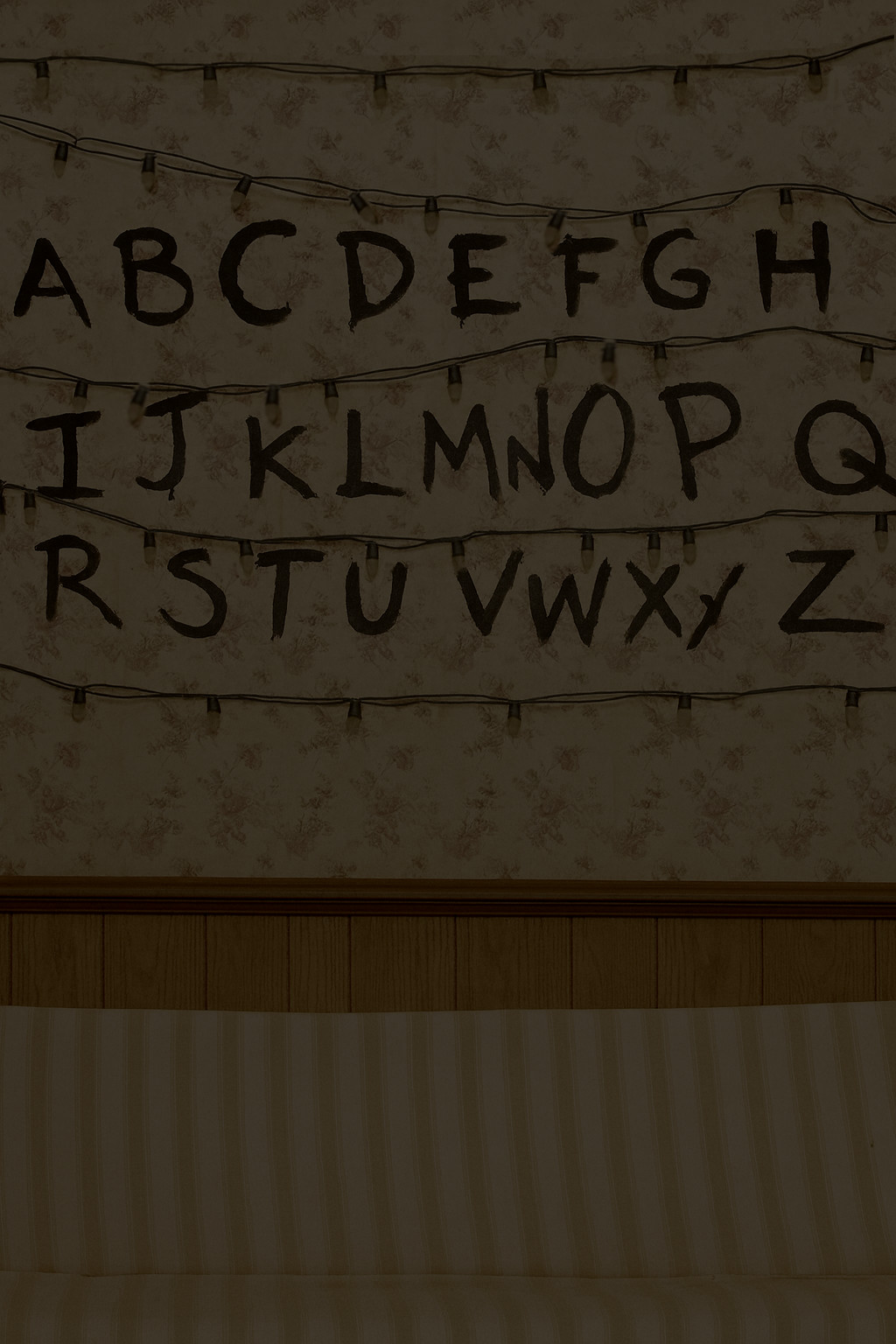

For the assignment I used AI for image generation. You can see a) the reference image I used, b) the image ChatGPT gave me, and c) the image I made from it myself. For that last step I added light bulbs for letters that didn’t have one, added more lights for decoration and adjusted the image brightness for mood.

Prompt used for image transformation, ChatGPT with GPT-5

Can you please look at the attached image and transform the image for me in the following ways:

- I want a orthographic frontal view of the wall with the letters and the lights.

- The lights need to be switched off

- Details like the shape of the light bulbs and the pattern on the wallpaper etc should be preserved.

- The shape and order of the letters also needs to be preserved.

I then also used AI to give me a starting point for the HTML and JS code required to make the light bulb message board work.

Prompt

Ok, cool. Can you give me some basic HTML that loads this image and then also places little blurry dots over the light bulbs and uses add or screen or so as a combination method? I want to be able to “switch on” the individual light bulbs.

The resulting setup was already very decent and helped a lot with getting this up and running fairly quickly.

Result

```html
<!doctype html>
<meta charset="utf-8" />
<title>Stranger Wall – bulb toggles</title>
<style>
  html,body{height:100%;margin:0;background:#111;display:grid;place-items:center}
  #stage{position:relative;image-rendering:auto}
  #wall{display:block;max-width:min(90vw,1100px);height:auto}
  canvas{position:absolute;inset:0;pointer-events:none}
  /* optional: a quick legend */
  .hint{position:fixed;bottom:10px;left:10px;color:#aaa;font:12px system-ui}
</style>

<div id="stage">
  <!-- Replace src with your exported ortho, lights-off image -->
  <img id="wall" src="wall_ortho_off.jpg" alt="Alphabet wall" />
  <canvas id="fx"></canvas>
</div>

<div class="hint">Click bulb to toggle • Alt-click logs coords for adding bulbs</div>

<script>
  const img = document.getElementById('wall');
  const cvs = document.getElementById('fx');
  const ctx = cvs.getContext('2d', { alpha: true, desynchronized: true });

  // Example bulb list (x,y in image pixels). Add as many as you want.
  // You can mix colors per bulb.
  const bulbs = [
    {x:120, y:115, r:36, color:'#ffd27a', on:false},
    {x:270, y:130, r:36, color:'#7ad6ff', on:false},
    {x:420, y:120, r:36, color:'#ffa0ff', on:false},
    {x:565, y:135, r:36, color:'#ffd27a', on:false},
    {x:720, y:120, r:36, color:'#7ad6ff', on:false},
    {x:870, y:135, r:36, color:'#ffd27a', on:false},
    {x:140, y:255, r:36, color:'#7ad6ff', on:false},
    {x:310, y:260, r:36, color:'#ffd27a', on:false},
    {x:490, y:270, r:36, color:'#ffa0ff', on:false},
    {x:660, y:260, r:36, color:'#ffd27a', on:false},
    {x:840, y:270, r:36, color:'#7ad6ff', on:false},
    {x:160, y:405, r:36, color:'#ffd27a', on:false},
    {x:360, y:410, r:36, color:'#7ad6ff', on:false},
    {x:560, y:415, r:36, color:'#ffd27a', on:false},
    {x:760, y:410, r:36, color:'#ffa0ff', on:false}
  ];

  let scale = 1, dpr = Math.max(1, window.devicePixelRatio || 1);

  // size canvas to the image on load/resize
  function fit() {
    const rect = img.getBoundingClientRect();
    cvs.style.width = rect.width + 'px';
    cvs.style.height = rect.height + 'px';
    cvs.width = Math.round(rect.width * dpr);
    cvs.height = Math.round(rect.height * dpr);
    scale = rect.width / img.naturalWidth;
    draw();
  }

  function draw() {
    ctx.clearRect(0,0,cvs.width,cvs.height);
    ctx.save();
    ctx.scale(dpr, dpr);
    ctx.globalCompositeOperation = 'screen'; // additive-like
    for (const b of bulbs) if (b.on) glow(b);
    ctx.restore();
  }

  function glow(b) {
    // position in CSS pixels
    const x = b.x * scale, y = b.y * scale;
    const r = (b.r || 36) * scale;

    // outer soft aura
    let g = ctx.createRadialGradient(x, y, 0, x, y, r*2.4);
    g.addColorStop(0, hexA(b.color, 0.36));
    g.addColorStop(0.35, hexA(b.color, 0.14));
    g.addColorStop(1, hexA(b.color, 0));
    ctx.fillStyle = g; ctx.beginPath(); ctx.arc(x,y,r*2.4,0,Math.PI*2); ctx.fill();

    // inner bright core
    g = ctx.createRadialGradient(x, y, 0, x, y, r);
    g.addColorStop(0, hexA('#ffffff', 0.85));
    g.addColorStop(1, hexA(b.color, 0));
    ctx.fillStyle = g; ctx.beginPath(); ctx.arc(x,y,r,0,Math.PI*2); ctx.fill();
  }

  // click to toggle nearest bulb
  img.addEventListener('click', (e) => {
    const rect = img.getBoundingClientRect();
    const x = (e.clientX - rect.left) / scale;
    const y = (e.clientY - rect.top ) / scale;
    if (e.altKey) { console.log(`{x:${x|0}, y:${y|0}, r:36, color:'#ffd27a', on:false},`); return; }
    let iBest = -1, dBest = 1e9;
    bulbs.forEach((b,i) => {
      const dx = b.x - x, dy = b.y - y;
      const d2 = dx*dx + dy*dy;
      if (d2 < dBest) { dBest = d2; iBest = i; }
    });
    if (iBest >= 0) { bulbs[iBest].on = !bulbs[iBest].on; draw(); }
  });

  img.decode ? img.decode().then(fit) : img.complete ? fit() : img.addEventListener('load', fit);
  window.addEventListener('resize', fit);

  // helper: hex -> rgba with alpha
  function hexA(hex, a=1){
    const h = hex.replace('#','');
    const [r,g,b] = h.length===3
      ? [...h].map(s => parseInt(s+s,16))
      : [h.slice(0,2),h.slice(2,4),h.slice(4,6)].map(s=>parseInt(s,16));
    return `rgba(${r},${g},${b},${a})`;
  }
</script>
```

This setup allowed me to quickly identify the locations of the light bulbs for initial testing. I then refactored the code manually to make it easier to address specific light bulbs that should be dynamic (as opposed to the static bulbs on the purely decorative strings). I also played with different blending modes and sizes of the lights. Additionally, I added an animation loop that allowed for a more dynamic feel. I was quite happy with the final look and feel of the page.

I then added the Web Serial API to my setup so that I could send commands from the SAMD21 microcontroller to activate individual lights. Note that this API is currently only available in Chromium-based browsers such as Chrome and Edge. The communication across this interface is very simple. The board sends the character (letter) that should light up, and the JavaScript on the page then figures out which bulb to address. The page logic also makes sure that switching on one light automatically switches off all the others.
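The lookup the page performs can be modeled outside the browser. The Python below is a minimal, hypothetical sketch of that logic (the real page is JavaScript). Each dynamic bulb carries the letter it belongs to, decorative bulbs carry none, and lighting a letter switches every other letter bulb off. The Bulb type, its fields and show_letter are illustrative names, not taken from my page. Ignoring anything that isn't a single letter is an assumption on my part: it keeps line endings from the serial port from switching the current light off.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Bulb:
    x: int                         # position in image pixels
    y: int
    letter: Optional[str] = None   # None marks a purely decorative bulb
    on: bool = False


def show_letter(bulbs: List[Bulb], received: str) -> Optional[Bulb]:
    """Light the bulb for `received` and switch all other letter bulbs off.

    Returns the bulb that was switched on, or None if the input was ignored
    or no bulb matches.
    """
    target = received.strip().lower()
    if len(target) != 1 or not target.isalpha():
        return None                # ignore line endings and other noise
    hit = None
    for b in bulbs:
        if b.letter is None:
            continue               # decorative bulbs are never touched
        b.on = (b.letter == target)
        if b.on:
            hit = b
    return hit


# Hypothetical wall: three letter bulbs plus one decorative bulb.
wall = [Bulb(120, 115, "a"), Bulb(270, 130, "b"), Bulb(420, 120, "c"), Bulb(565, 135)]
show_letter(wall, "A")
show_letter(wall, "c")
print([b.on for b in wall])  # [False, False, True, False]
```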

The code for the board was largely straightforward. I used the Arduino IDE and wrote the logic for the board in C++, basing it on Quentin’s examples for both the screen and the touch pad handling.

The only unintuitive part of this process was building a menu for the screen. I wanted a menu that allowed the following:

- Send pre-defined messages for demos

- Send individual letters for when someone is actually lost in the Upside Down and needs to send a custom message

- A settings section that lets the user change the speed at which the characters are sent in the case of pre-defined messages

I used ArduinoMenu for the menu definition and ran into a couple of issues. The menu declarations are built from C preprocessor macros, which obscure errors somewhat. If you make a mistake, you will most likely get error messages that have more to do with the macro system than with your actual mistake. I eventually figured out (with the help of ChatGPT) that each menu declaration needs at least 2 entries and can have at most 16. I violated both limits in my tests and got unhelpful messages saying that certain symbols weren’t declared. So, if you use ArduinoMenu, make sure you add at least two menu entries, even if you’re just testing.

I wanted to have all letters in a single menu, but because of the size limit, I had to split the letters across two menus. Now the user first has to choose whether they want to send a letter in the range A-M (first menu) or N-Z (second menu). I’m not particularly happy with that setup, but it works for now. There are probably much better ways to handle the letter selection.
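Taking the 2-to-16-entry limits described above at face value, the split can be computed instead of hard-coded. The Python below is a hypothetical helper (not part of ArduinoMenu, and only a sketch of the arithmetic). It divides a list of entries into the fewest submenus that respect the maximum and keeps them as even as possible. For the 26 letters, it reproduces the A-M / N-Z split.

```python
import math
import string
from typing import List, Sequence

# Entry limits as described in the text above; treat them as assumptions.
MIN_ENTRIES = 2
MAX_ENTRIES = 16


def split_menu(items: Sequence[str], lo: int = MIN_ENTRIES, hi: int = MAX_ENTRIES) -> List[List[str]]:
    """Split items into the fewest, most evenly sized submenus within [lo, hi] entries."""
    if len(items) < lo:
        raise ValueError(f"a menu needs at least {lo} entries")
    n_menus = math.ceil(len(items) / hi)          # fewest submenus that respect the maximum
    base, extra = divmod(len(items), n_menus)
    if base < lo:
        raise ValueError("cannot satisfy both the minimum and the maximum")
    chunks, start = [], 0
    for i in range(n_menus):
        size = base + (1 if i < extra else 0)     # the first `extra` submenus get one more entry
        chunks.append(list(items[start:start + size]))
        start += size
    return chunks


menus = split_menu(list(string.ascii_uppercase))
print([f"{m[0]}-{m[-1]}" for m in menus])  # ['A-M', 'N-Z']
```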

Below is a video of the final result. In this case I’m sending the message “howtomakealmostanything”. That is unlikely to save anyone from the Upside Down, but there are 2 additional messages stored on the board: “iamlost” and “helpme”. The speed at which a message is sent can be controlled with a simple setting in the menu.
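Conceptually, the playback is a simple loop: send one character, wait for the configured interval, repeat. My board code is Arduino C++, so the Python below is only a language-neutral sketch of that loop. The names play_message, send_char and delay_ms are hypothetical. The sleep function is injected so the timing can be checked without hardware, and a smaller delay_ms corresponds to a faster speed setting.

```python
import time
from typing import Callable

# The three messages stored on the board (from the text above).
MESSAGES = ["howtomakealmostanything", "iamlost", "helpme"]


def play_message(message: str,
                 send_char: Callable[[str], None],
                 delay_ms: int,
                 sleep: Callable[[float], None] = time.sleep) -> None:
    """Send `message` one character at a time, pausing `delay_ms` between characters."""
    for i, ch in enumerate(message):
        send_char(ch)
        if i < len(message) - 1:       # no pause needed after the last character
            sleep(delay_ms / 1000)


sent, pauses = [], []
play_message(MESSAGES[2], sent.append, delay_ms=500, sleep=pauses.append)
print("".join(sent), pauses)  # helpme [0.5, 0.5, 0.5, 0.5, 0.5]
```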

To test the final result interactively, you can visit the Message Wall page. You can use your own board to send these messages, but to make things easier for you, I left in the code that switches lights on with mouse clicks.

Update

Quentin was able to give me an ESP32-S3 board so I could test a Bluetooth connection to my web page. Adding the respective JS code was easy enough. I’m using the Web Bluetooth API to connect to the board, with a simple event listener that receives characters and passes them on to my function that handles the light bulbs. I have written JS code for these sorts of applications before, so I used ChatGPT to produce the code and save me some time.

Prompt

Can you give me some basic JS code that allows for making a web bluetooth connection to receive some data from an esp32 board?

Result

```javascript
// Replace with your GATT UUIDs (example: Nordic UART Service)
const SERVICE_UUID = '6e400001-b5a3-f393-e0a9-e50e24dcca9e';
const RX_CHAR_UUID = '6e400003-b5a3-f393-e0a9-e50e24dcca9e'; // notifications from ESP32

let charRx;

async function connectESP32() {
  const device = await navigator.bluetooth.requestDevice({
    filters: [{ services: [SERVICE_UUID] }]
  });
  const server = await device.gatt.connect();
  const service = await server.getPrimaryService(SERVICE_UUID);
  charRx = await service.getCharacteristic(RX_CHAR_UUID);

  // receive notifications
  await charRx.startNotifications();
  const decoder = new TextDecoder(); // UTF-8 by default
  charRx.addEventListener('characteristicvaluechanged', (e) => {
    const v = e.target.value; // DataView
    // Decode the DataView itself: v.buffer may cover more bytes than the view.
    const text = decoder.decode(v);
    console.log('ESP32>', text);
  });
}

// call connectESP32() from a user gesture (e.g., button click)
```
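A single notification can, in principle, carry several characters, for example if the board ever sends a whole word at once. It therefore seems safer to decode the payload and hand the characters to the bulb logic one at a time. The Python below is a minimal, hypothetical sketch of that step (the real handler is the JavaScript event listener above). The names handle_notification and on_char are illustrative.

```python
from typing import Callable


def handle_notification(payload: bytes, on_char: Callable[[str], None]) -> int:
    """Decode a BLE notification as UTF-8 and forward each letter to on_char.

    Returns the number of characters forwarded.
    """
    text = payload.decode("utf-8", errors="replace")
    count = 0
    for ch in text:
        if ch.isalpha():               # skip line endings and other noise
            on_char(ch.lower())
            count += 1
    return count


received = []
handle_notification(b"a", received.append)
handle_notification(b"help\r\n", received.append)
print(received)  # ['a', 'h', 'e', 'l', 'p']
```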

I did the same for the MicroPython side of things. Again, I’ve done this sort of setup before, and since this was supposed to be just a quick test of the board, I enlisted ChatGPT for help.

Prompt

Ok, let’s also add some MicroPython code that can connect to that bluetooth receiver we just made and then send some characters like ‘a’ to it.

Result

```python
# MicroPython on ESP32: BLE UART-style peripheral that notifies the browser.
import bluetooth
import time
from micropython import const

_IRQ_CENTRAL_CONNECT = const(1)
_IRQ_CENTRAL_DISCONNECT = const(2)
_IRQ_GATTS_WRITE = const(3)

# Nordic UART Service UUIDs (same values as in the JS code)
UART_SERVICE_UUID = bluetooth.UUID('6e400001-b5a3-f393-e0a9-e50e24dcca9e')
UART_TX_UUID = bluetooth.UUID('6e400003-b5a3-f393-e0a9-e50e24dcca9e')  # notify: ESP32 -> browser
UART_RX_UUID = bluetooth.UUID('6e400002-b5a3-f393-e0a9-e50e24dcca9e')  # write: browser -> ESP32


# Simple advertising payload (name + 128-bit service UUIDs).
# Legacy advertising data is limited to 31 bytes, so keep the name short.
def adv_payload(name=None, services=None):
    p = bytearray()
    if name:
        nb = name.encode()
        p += bytes((len(nb) + 1, 0x09)) + nb  # Complete Local Name
    if services:
        for u in services:
            b = bytes(u)
            p += bytes((len(b) + 1, 0x07)) + b  # Complete List of 128-bit Service UUIDs
    return p


ble = bluetooth.BLE()
ble.active(True)

# GATT table: one service with TX (notify) and RX (write) characteristics
UART_SERVICE = (
    UART_SERVICE_UUID,
    (
        (UART_TX_UUID, bluetooth.FLAG_NOTIFY),
        (UART_RX_UUID, bluetooth.FLAG_WRITE),
    ),
)
(tx_handle, rx_handle) = ble.gatts_register_services((UART_SERVICE,))[0]

conn_handle = None


def on_ble(event, data):
    global conn_handle
    if event == _IRQ_CENTRAL_CONNECT:
        conn_handle, _, _ = data  # (conn_handle, addr_type, addr)
        print("Central connected:", conn_handle)
    elif event == _IRQ_CENTRAL_DISCONNECT:
        print("Central disconnected")
        conn_handle = None
        start_advertising()
    elif event == _IRQ_GATTS_WRITE:
        _, handle = data  # (conn_handle, attr_handle)
        if handle == rx_handle:
            msg = ble.gatts_read(rx_handle)
            print("RX from browser:", msg)


ble.irq(on_ble)


def start_advertising():
    name = "ESP32-UART"
    # Advertising interval is given in microseconds (100 ms here).
    ble.gap_advertise(100_000, adv_payload(name=name, services=[UART_SERVICE_UUID]))
    print("Advertising as", name)


start_advertising()

# Demo: once connected (and after the browser enables notifications),
# send 'a' every second.
while True:
    if conn_handle is not None:
        try:
            ble.gatts_notify(conn_handle, tx_handle, b'a')
        except Exception:
            # If notifications aren't enabled yet, this may no-op or raise; keep trying.
            pass
        time.sleep(1)
    else:
        time.sleep(0.2)
```
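The payload built by adv_payload has to fit within the 31-byte limit of legacy BLE advertising. That is worth checking whenever the device name changes. The CPython sketch below builds the same AD structures (length byte, type byte, data), using the standard uuid module instead of MicroPython’s bluetooth.UUID. I’m assuming that bytes(bluetooth.UUID(...)) yields the UUID in little-endian order, as BLE transmits it. That is why the sketch reverses the big-endian uuid.UUID.bytes.

```python
import uuid

ADV_TYPE_NAME = 0x09         # Complete Local Name
ADV_TYPE_UUID128 = 0x07      # Complete List of 128-bit Service UUIDs
MAX_LEGACY_ADV_LEN = 31      # legacy advertising data limit in bytes

UART_SERVICE_UUID = uuid.UUID("6e400001-b5a3-f393-e0a9-e50e24dcca9e")


def ad_field(ad_type: int, data: bytes) -> bytes:
    """One AD structure: length (type byte + data), type, data."""
    return bytes((len(data) + 1, ad_type)) + data


def adv_payload(name: str, service: uuid.UUID) -> bytes:
    # BLE sends 128-bit UUIDs little-endian; uuid.UUID.bytes is big-endian.
    return (ad_field(ADV_TYPE_NAME, name.encode())
            + ad_field(ADV_TYPE_UUID128, service.bytes[::-1]))


payload = adv_payload("ESP32-UART", UART_SERVICE_UUID)
print(len(payload), len(payload) <= MAX_LEGACY_ADV_LEN)  # 30 True
```

With this name, the payload uses 30 of the 31 bytes, so a name more than one character longer would no longer fit. It would then have to move into the scan response (the resp_data argument of gap_advertise).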

I manually extended the MicroPython code it produced to send the string “helpme” instead of just a single letter.
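One hypothetical way to make that change while keeping the loop above intact: keep an index into the message and send the next character on each pass while a central is connected. This version cycles through the message, the same way the demo repeats ‘a’. The MessageSender class is an illustrative helper in plain Python that should also run on MicroPython. In the loop, the notify call would then become ble.gatts_notify(conn_handle, tx_handle, sender.next_payload()).

```python
class MessageSender:
    """Returns one single-byte payload per call, cycling through the message."""

    def __init__(self, message):
        self.message = message.encode()
        self.index = 0

    def next_payload(self):
        payload = self.message[self.index:self.index + 1]   # one character as bytes
        self.index = (self.index + 1) % len(self.message)   # wrap around and repeat
        return payload

    def reset(self):
        self.index = 0   # e.g. on disconnect, so the next central starts at 'h'


sender = MessageSender("helpme")
print([sender.next_payload() for _ in range(8)])
# [b'h', b'e', b'l', b'p', b'm', b'e', b'h', b'e']
```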

Setting up the ESP32-S3 board with MicroPython was done using esptool. You can grab a MicroPython release from here and then run:

```powershell
python -m esptool --chip esp32s3 --port COM9 --baud 460800 write_flash -z 0x0 <Path To>\ESP32_GENERIC_S3-20250911-v1.26.1.bin
```

To then use the board in WSL, I ran this in PowerShell:

```powershell
usbipd bind --busid 2-2
usbipd attach --wsl --busid 2-2
```

The bus ID can be obtained via:

```powershell
usbipd list
```

In WSL, I connected to the board via mpremote and pushed the Bluetooth script onto it as main.py using:

```bash
mpremote connect auto fs cp ./bluetooth_sender.py :main.py
```

Now, when I connect a power bank to the board, it starts advertising and the web page can connect to it. Once connected, the board starts sending the “helpme” string character by character. It works as expected and is a much better way to venture out into the Upside Down!

Bonus: Notes on using MicroPython on ESP32 dev boards (on Windows, via WSL)

In a previous life I had already played with ESP32 boards attached to my Windows machine. At the time it took me a while to figure out how to make the boards show up as resources for mpremote in WSL. My notes from when I first figured out how to connect the board came in handy this week and I’m reproducing them here in case someone else runs into similar issues. I used the ESP32 dev board to connect to the Waveshare screen in my experiments.

Windows

In PowerShell, make sure the board is connected and shows up. Note its bus ID for the next command.

```powershell
usbipd list
```

Then, make sure that WSL has access to this board (as identified by its bus ID). As in the ESP32-S3 setup above, the device may first need to be shared with usbipd bind.

```powershell
usbipd attach --wsl --busid 2-1
```

WSL

Make sure that the board shows up now.

```bash
ls /dev/ttyUSB*
```

Load the CP210x USB serial driver (relevant for dev boards that use a CP210x USB-to-UART chip). Without it, you will probably not be able to access the board.

```bash
sudo modprobe cp210x
```

Confirm that the driver is loaded.

```bash
lsmod | grep cp210x
```

Temporarily fix permissions so non-root users can access the serial port.

```bash
sudo chmod 666 /dev/ttyUSB0
```

Connect the board.

```bash
mpremote connect /dev/ttyUSB0
```

You can also use

```bash
mpremote connect auto mount .
```

to establish the connection and automatically make the contents of the current directory available to the interpreter running on the board. I use this a lot for testing: I then just import whatever Python test file I want to play with.

Note on Web Development

I’m documenting the below in case anyone else uses Hugo and needs to host custom HTML/JS for their assignments.

I produced some custom HTML with fairly involved JavaScript this week (the wall with the light bulbs). Piping that through Hugo so that it works when hosted through Neil’s setup on the CBA server is a little tricky, because student pages are hosted under a fairly deeply nested path on the official CBA website. The general trick for making static files work there has two parts. First, use Hugo shortcodes, which can run Hugo functions like absURL to rewrite file paths. Second, use these shortcodes in .md files, so that the resulting HTML is actually built and included in /public/.
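Why the absolute path matters comes down to ordinary URL resolution. A relative path is resolved against the URL of the page it appears on, and a path with a leading slash is resolved against the root of the host. Python’s urllib.parse.urljoin applies the same resolution rules, so it makes a quick illustration. The base URL and the messagewall/ page path below are made-up stand-ins for the nested student path, not the real CBA addresses. I’m also treating Hugo’s absURL as roughly equivalent to the first case: a path without a leading slash joined onto the site’s base URL.

```python
from urllib.parse import urljoin

# Hypothetical nested site root, standing in for the real student path.
BASE_URL = "https://example.org/classes/2025/people/student/"
IMG = "week3/board_background_clean.jpg"

# Joined onto the site root: what an absURL-style helper aims for.
site_relative = urljoin(BASE_URL, IMG)

# Plain relative src on a page at .../messagewall/: resolves below that page.
page_relative = urljoin(BASE_URL + "messagewall/", IMG)

# Leading slash: resolves against the host root and drops the nested path.
root_relative = urljoin(BASE_URL, "/" + IMG)

for url in (site_relative, page_relative, root_relative):
    print(url)
```

Only the first result points at the image’s actual location. The other two are the kinds of broken paths that show up when a page is moved under a nested base path.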

For this week, I created a shortcode in a file called messagewall.html that contains the custom HTML and JS I developed. Putting it in the shortcode allows me to create a variable for the background image at the top of the file:

```go-html-template
{{ $img := "week3/board_background_clean.jpg" | absURL }}
```

and then include it in the HTML code like so:

```go-html-template
<img id="wall" src="{{ $img }}" alt="Alphabet wall">
```

All that’s left to do now is to create a .md file that includes nothing but a reference to this shortcode:

```markdown
---
title: "Message Wall"
---

{{< messagewall >}}
```

The final build will then include an HTML page that references the image by its absolute URL.