The Result

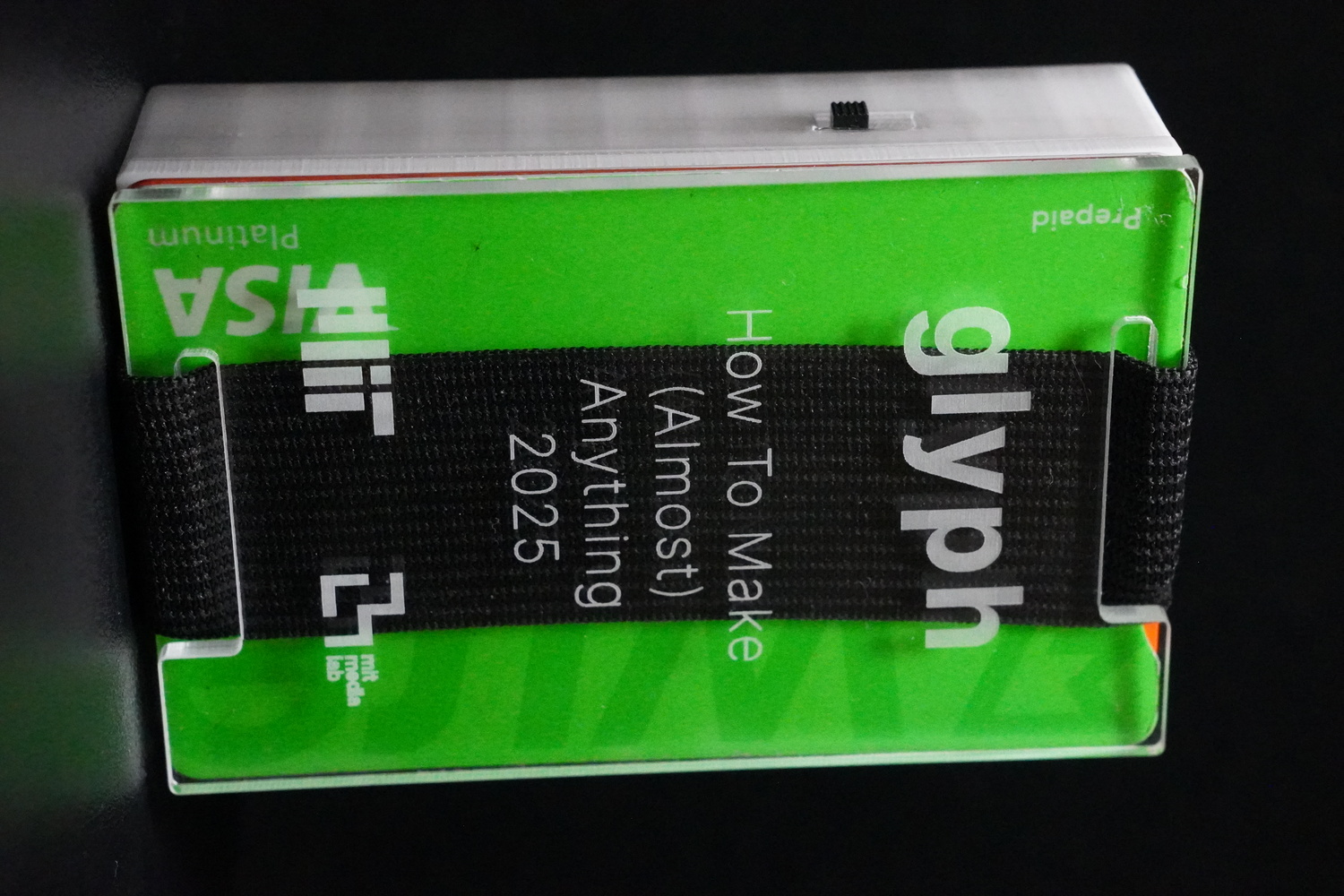

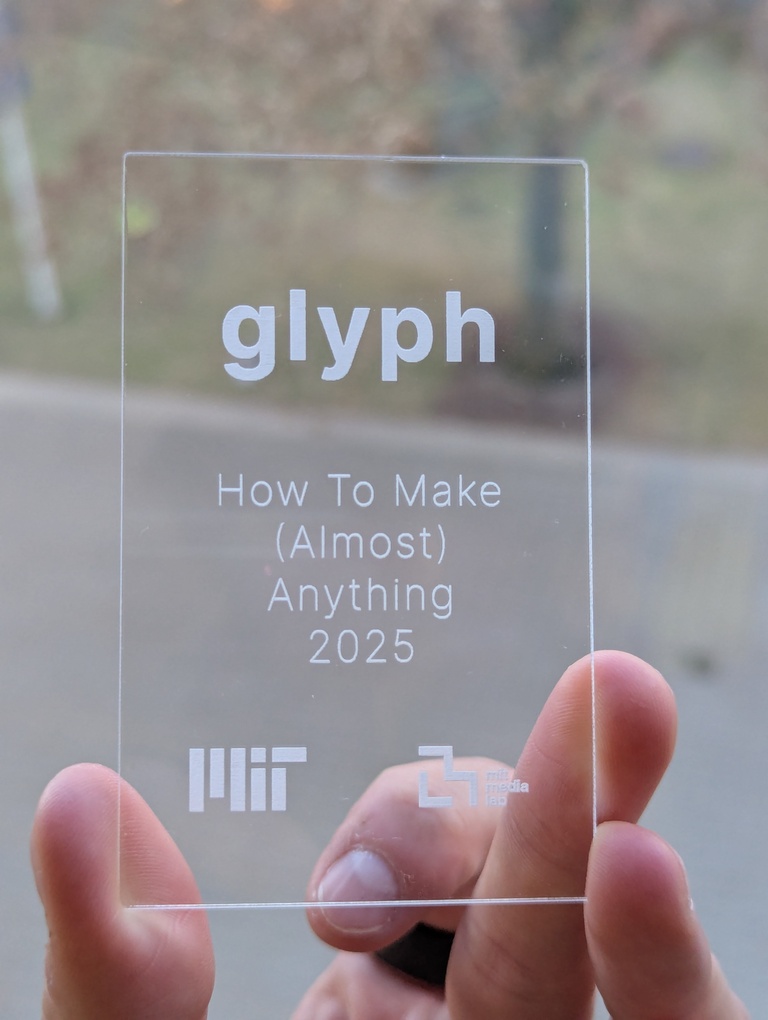

You can find the journey below, but here are some results. This was a fun project. I’m generally happy with how it turned out and I learned a lot. One of the key goals for me was to keep things polished and organized, and I think the final build quality of “glyph” is pretty decent considering it’s not professionally manufactured. It’s also fairly close to my initial designs.

The following description of my journey towards a first version of “glyph” should answer most of the questions below. To be explicit, I also answer each of them briefly right here.

- What does it do?

Glyph holds credit cards and can show information on a 200x200 px e-ink screen. It has a web API that allows other systems to send images to it (like XR glasses or AI assistants).

- Who’s done what beforehand?

I did not look much at other people’s work when building glyph, but the HTMAA website lists related projects. Multiple people have used e-ink screens before. Nathan Harris, Sam Laney and Sohail Ahmed Soomro are examples.

- What sources did you use?

I used AI (ChatGPT) as listed below for some of the code generation. I also used some YouTube tutorials to learn concepts and skills; they are listed where applicable, as well. I also relied on friends and TAs within the HTMAA cohort to teach me in areas that I didn’t know much about. I believe I give credit to all of them in the respective sections of this website.

- What did you design?

I designed the housing, the electronics, its logo, the software architecture, the method of attaching the two plates via an elastic band, the internals of the housing, the interaction patterns and use cases on n8n, the container setup for hosting the web app, and probably a few things I’m missing here.

- What materials and components were used?

- Where did they come from?

- How much did they cost?

| Material/Component | Source | Cost |

|---|---|---|

| White and transparent PLA (a small percentage of a spool) | Amazon | a small percentage of $13.99 |

| Acrylic Sheets | Transparent - Amazon White - Amazon | a percentage of $9.99 each |

| Some Wires | Amazon | a small percentage of $12.99 |

| small piece of copper stock for PCB milling | Electronics Lab | probably ~$1 |

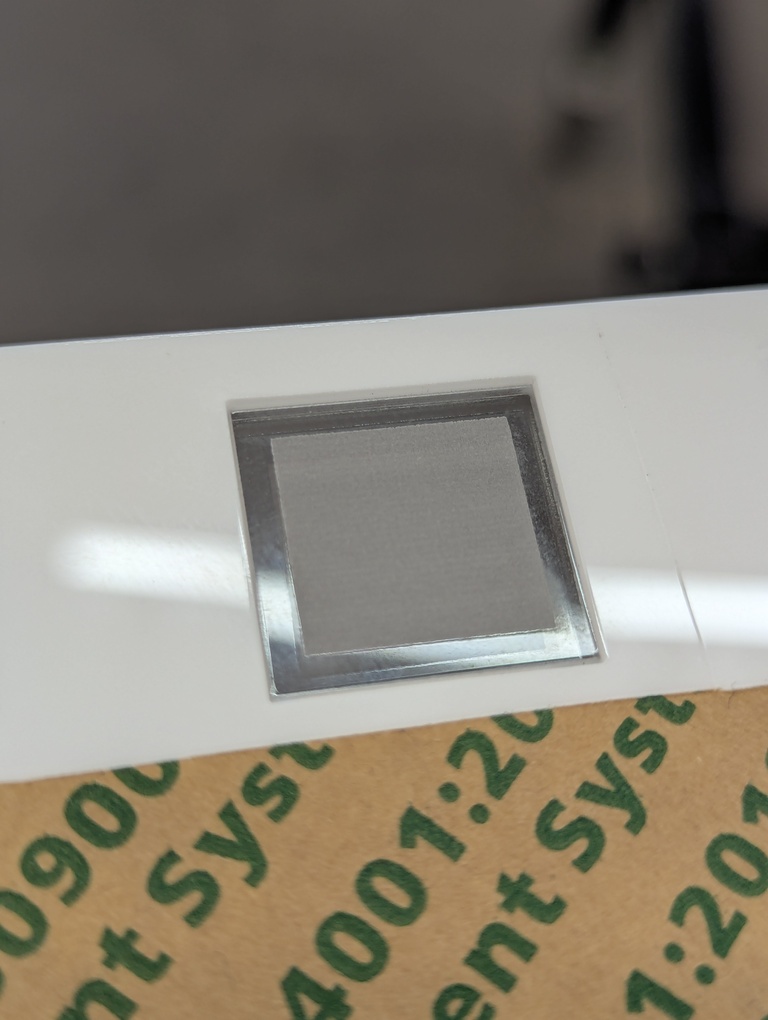

| E-Ink Screen | Amazon | $17.99 |

| ESP32C3 | DigiKey | $4.99 |

| WS2812B | Amazon | 1/100 * C$23.99 |

| Buttons | Amazon | small percentage of $8.49 |

| 2x 6mm Standoffs | Amazon | small percentage of $9.99 |

| Switch | Amazon | a small percentage of $9.99 |

| Elastic Band | Amazon | a small percentage of $3.99 |

| Screws | Had them already | probably just a few cents |

| 20cm or so of spool wire | CBA Lab | probably just a few cents |

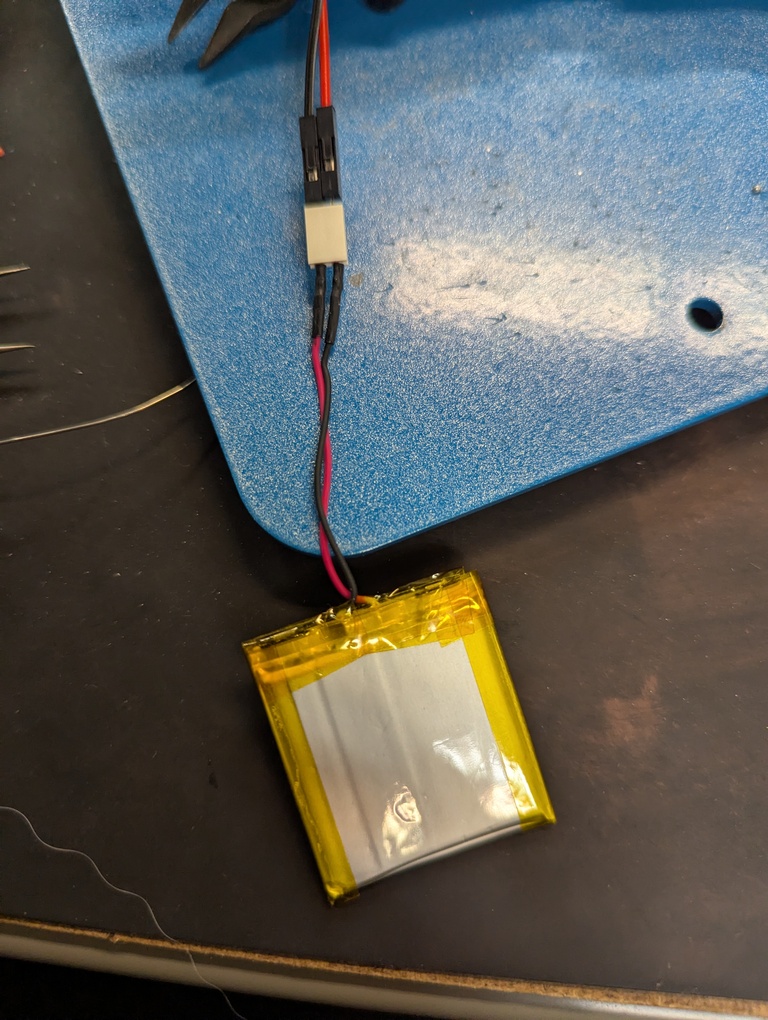

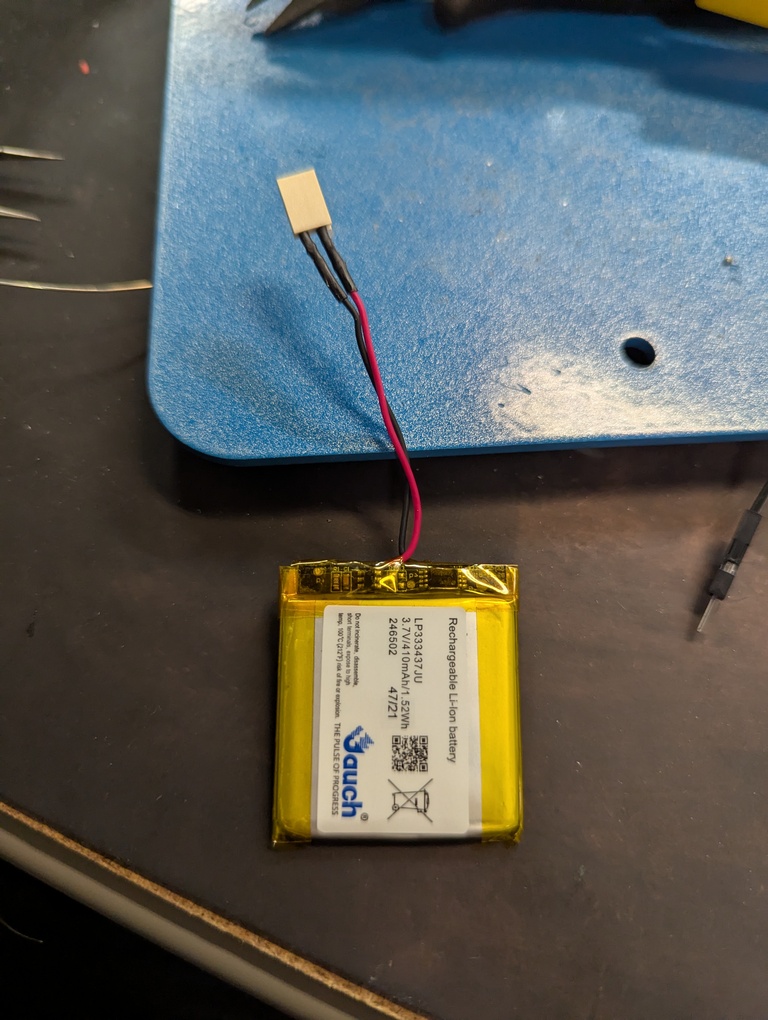

| Battery (410mAh) | Digikey | $6.91 |

| Heat Set Inserts | had these already from an aliexpress order | probably just a few cents |

I spent more money on a few things that didn’t make it into the final product (some specific capacitor and resistor values) or that are tools that I’ll use for a long time (a $12 logic analyzer for example). I also spent money on a PCB from JLCPCB ($6.20 + a lot of shipping) that didn’t make it into the final product.

- What parts and systems were made?

An enclosure made by 3D printing, laser cutting and engraving, plus an elastic band modified by some sewing. A battery-powered PCB. Software systems including:

- embedded software on the ESP32C3 to pull images from the cloud API

- a cloud system acting as a web api for the device

- a workflow in N8N as an example of how an AI assistant can send data to the device

- a UI on the Snap Spectacles to send QR codes from XR

The device also has some peripheral elements like buttons, a switch and a status LED.

- What tools and processes were used?

- 3D printing (Bambu)

- Laser cutting and engraving (Xtool P2)

- PCB milling (Carvera)

- Soldering (Pinecil)

- Embedded programming

- Web development / API development

- Sewing (Brother SE1900)

- What questions were answered?

- Can I make a device that looks fairly professional? -> Kinda!

- Can I find use cases for an e-ink screen in my wallet? -> Yeah, but it needs to be smaller!

- Is HTMAA a lot of fun? -> Hell yeah!

- What worked? What didn’t?

I couldn’t quite figure out how to replace the commercial driver board for the e-ink screen. I want to continue debugging, but had to go with my plan B for spiral development reasons. Everything else worked pretty well. The sizing of the device doesn’t work that well yet. Ideally it’d be half the size.

- How was it evaluated?

I aimed to make something that fits into my pocket, but I think it needs another iteration to get there. Otherwise, I think I hit my goals of making something that is well integrated, works well and provides some commentary on the idea that the future is phone-less.

- What are the implications?

The implications are that a) I feel very empowered to keep building things and b) I still believe that having small devices built for particular purposes is very nice. Instrumenting more devices to be smarter could be a fun goal for the future. I might explore that.

Final Project Tracker

This is where I document my progress on my final project.

Week 1

I did some brainstorming and made concepts around the two ideas I have for my final project. You can read more about the ideas and look at early visuals on the page documenting my work for Week 1.

I’m still deciding between the two options, but I am giving myself some time to decide. I know that I’ll need to figure out a few things that these ideas have in common, so I can work on those before committing to an idea. Namely:

- These are portable devices and need to be powered by battery. I need to get more familiar with LiPo batteries and need a charging solution (USB-C).

- Both ideas involve e-ink screens. I need to understand the form factor I can go for. Specifically, I need to understand if I need to use breakout boards or can manage to drive the display itself directly. I also need to learn how to actually show images and text on the displays.

Week 2

This week was all about laser cutting cardboard. I wasn’t able to make much progress on the final project, but I’ve ordered and received e-ink screens from Waveshare. I ordered both a display on a driver board and a raw display. I’m hoping that next week will give me some headspace to start playing with the displays.

Week 3

I know my own limitations in terms of my electronics knowledge, but I also understand what I will have to learn to make my project(s) happen. So, I spent some time today (Sept 18th) figuring out resources I can use to learn. I had a great meeting with Ceci, who’s in my cohort and knows MUCH more about electronics than I do. She answered a lot of my questions and gave me a sense of what’s feasible. Thank you so much, Ceci! My questions were predominantly about power management: when switching, the e-ink screen needs a higher voltage than what the microcontroller or USB provides by default.
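For context on why the driver board needs a boost circuit at all: the textbook relation for an ideal (lossless) inductor boost converter in continuous conduction mode ties the output voltage to the switch duty cycle $D$. The 20 V target below is only an illustrative number, not a value taken from the panel's datasheet, and real converters need a somewhat higher duty cycle to make up for losses.

$$
V_\text{out} = \frac{V_\text{in}}{1 - D}
\quad\Longrightarrow\quad
D = 1 - \frac{V_\text{in}}{V_\text{out}} = 1 - \frac{3.3\,\text{V}}{20\,\text{V}} = 0.835
$$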

Driver Code

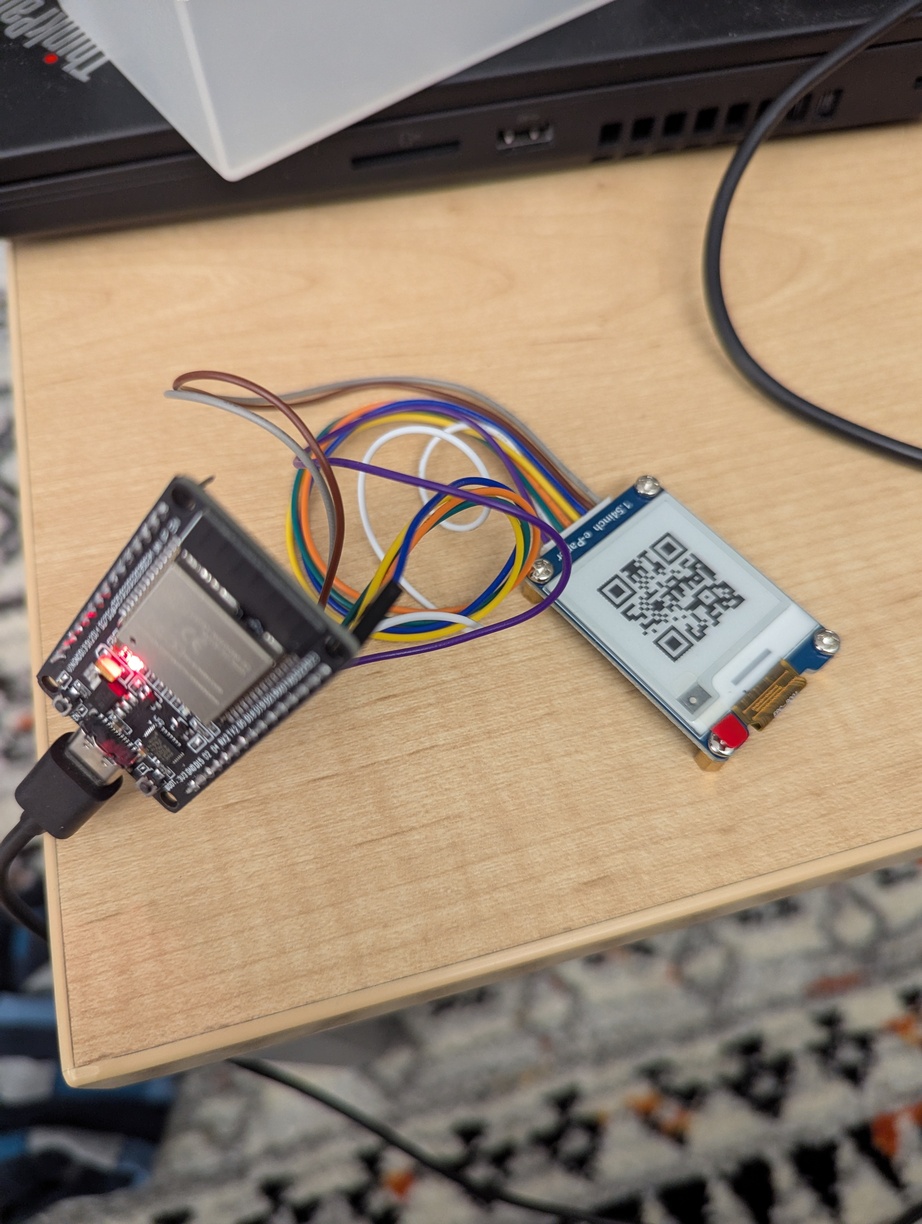

For my final project I need to send images (like QR codes) to an e-ink screen. I want to use MicroPython for the logic, so I was looking for a MicroPython driver for the board. I ran into some problems: the only MicroPython driver I could find targeted V1 of the Waveshare board and didn’t work on the V2 screen that I purchased. I couldn’t find a V2 driver written in MicroPython, but I ended up playing with a combination of C++ libraries (GxEPD2 and Adafruit GFX Library) that allowed me to verify that the screen itself actually worked fine. This video was very helpful when narrowing down what libraries to try.

Obviously, I still didn’t have a MicroPython driver, and the C++ libraries were abstracted enough (lots of inheritance from templated base classes to support a wide range of screen models) that deriving the byte sequence needed to initialize and operate the board was a little outside of what I could realistically achieve this week.

The capabilities of modern LLMs astound me on a weekly basis. To solve my “problem”, I opened the GxEPD2 library in Visual Studio Code and asked GPT-5-mini to help me figure out how the communication with the screen is handled for my particular screen model.

Prompt

#codebase I’d love for you to analyze the exact control sequqence needed to init a waveshare 1inch54 board. I know that the code here works on my display so let’s figure out how exactly that works. Afterwards I’d like to translate it to micropython, but let’s figure out how the communication with the display is done and what’s required to show pixels on the display please.

It came back giving me a few options for a few different display types. I clarified which screen I want to use with the following prompt.

Prompt

I know that the one we want to use is the D67 variant. I ran that on the display (via ESP32 dev board) and it worked well. Let’s use what we know about that variant and produce a micropython equivalent to how the c++ code works with that display, please.

It then produced MicroPython driver code that worked flawlessly for my test:

Driver

```python
"""
MicroPython driver for GDEH0154D67 (1.54" 200x200 SSD1681) based on GxEPD2 Arduino sequences.

Target: ESP32 (adjust SPI init pins for other boards)

Wiring (example):
- CS -> any GPIO (active low)
- DC -> any GPIO
- RST -> any GPIO
- BUSY-> any GPIO (input)
- SCK -> HSPI SCK
- MOSI-> HSPI MOSI
- MISO-> optional (not used)

Notes:
- Panel requires 3.3V for VCC and IO. Do NOT use 5V data lines.
- Busy polarity for this variant: HIGH when busy.

Usage:
    import machine, time
    from micropython_gdeh0154d67 import EPD
    epd = EPD(spi_id=1, sck=14, mosi=13, cs=15, dc=27, rst=26, busy=25)
    epd.init()
    buf = bytearray([0xFF]*(200*200//8))  # white
    epd.write_image(0, 0, 200, 200, buf)
    epd.update_full()
    epd.sleep()
"""

import time
from machine import Pin, SPI

# Display parameters
WIDTH = 200
HEIGHT = 200
BUSY_ACTIVE_LEVEL = 1  # busy HIGH for SSD1681


class EPD:
    def __init__(self, spi_id=1, sck=14, mosi=13, miso=-1, baudrate=4000000,
                 cs=15, dc=27, rst=26, busy=25):
        # pins
        self.cs = Pin(cs, Pin.OUT, value=1)
        self.dc = Pin(dc, Pin.OUT, value=1)
        self.rst = Pin(rst, Pin.OUT, value=1)
        self.busy = Pin(busy, Pin.IN)
        # SPI (mode 0)
        if miso >= 0:
            self.spi = SPI(spi_id, baudrate=baudrate, polarity=0, phase=0,
                           sck=Pin(sck), mosi=Pin(mosi), miso=Pin(miso))
        else:
            self.spi = SPI(spi_id, baudrate=baudrate, polarity=0, phase=0,
                           sck=Pin(sck), mosi=Pin(mosi))
        # internal
        self._inited = False

    # low-level helpers
    def _cs_low(self):
        self.cs.value(0)

    def _cs_high(self):
        self.cs.value(1)

    def _dc_command(self):
        self.dc.value(0)

    def _dc_data(self):
        self.dc.value(1)

    def send_command(self, cmd):
        self._dc_command()
        self._cs_low()
        self.spi.write(bytes([cmd]))
        self._cs_high()
        self._dc_data()

    def send_data(self, data):
        # data can be int or bytes/bytearray
        self._dc_data()
        self._cs_low()
        if isinstance(data, int):
            self.spi.write(bytes([data]))
        else:
            # assume bytes-like
            self.spi.write(data)
        self._cs_high()

    def _start_data(self):
        self._dc_data()
        self._cs_low()

    def _end_data(self):
        self._cs_high()

    def reset(self):
        # follow Waveshare style: drive RST high, then low, then high
        self.rst.value(1)
        time.sleep_ms(10)
        self.rst.value(0)
        time.sleep_ms(10)
        self.rst.value(1)
        time.sleep_ms(10)

    def wait_while_busy(self, timeout_ms=10000):
        start = time.ticks_ms()
        while self.busy.value() == BUSY_ACTIVE_LEVEL:
            time.sleep_ms(1)
            if time.ticks_diff(time.ticks_ms(), start) > timeout_ms:
                raise OSError('EPD busy timeout')

    # partial ram area like in Arduino driver
    def set_partial_ram_area(self, x, y, w, h):
        # _writeCommand(0x11); _writeData(0x03);  data entry mode: x and y increment
        self.send_command(0x11)
        self.send_data(0x03)
        # 0x44 x start/end in bytes
        self.send_command(0x44)
        self.send_data(x // 8)
        self.send_data((x + w - 1) // 8)
        # 0x45 y start/end (low byte, high byte)
        self.send_command(0x45)
        self.send_data(y & 0xFF)
        self.send_data((y >> 8) & 0xFF)
        yend = y + h - 1
        self.send_data(yend & 0xFF)
        self.send_data((yend >> 8) & 0xFF)
        # 0x4E ram x address
        self.send_command(0x4E)
        self.send_data(x // 8)
        # 0x4F ram y address
        self.send_command(0x4F)
        self.send_data(y & 0xFF)
        self.send_data((y >> 8) & 0xFF)

    # init sequence mirrored from _InitDisplay() in GxEPD2_154_D67.cpp
    def init(self):
        if self._inited:
            return
        self.reset()
        time.sleep_ms(10)
        # soft reset
        self.send_command(0x12)
        time.sleep_ms(10)
        # Driver output control
        self.send_command(0x01)
        self.send_data(0xC7)
        self.send_data(0x00)
        self.send_data(0x00)
        # Border Waveform
        self.send_command(0x3C)
        self.send_data(0x05)
        # Read built-in temp sensor
        self.send_command(0x18)
        self.send_data(0x80)
        # set full ram area
        self.set_partial_ram_area(0, 0, WIDTH, HEIGHT)
        self._inited = True

    # power on sequence (PowerOn in Arduino code)
    def power_on(self):
        # _writeCommand(0x22); _writeData(0xe0); _writeCommand(0x20); _waitWhileBusy
        self.send_command(0x22)
        self.send_data(0xE0)
        self.send_command(0x20)
        self.wait_while_busy(5000)

    def power_off(self):
        # _writeCommand(0x22); _writeData(0x83); _writeCommand(0x20); _waitWhileBusy
        self.send_command(0x22)
        self.send_data(0x83)
        self.send_command(0x20)
        self.wait_while_busy(2000)

    # write buffer (current) to RAM at the specified rectangle; does not refresh the panel
    def write_image(self, x, y, w, h, buf):
        if not self._inited:
            self.init()
        # initial write handling in Arduino ensures previous/full buffers are managed; here we just write current
        self.set_partial_ram_area(x, y, w, h)
        self.send_command(0x24)  # write RAM (current)
        # stream data; buf should be bytes/bytearray of length w*h/8
        self._start_data()
        self.spi.write(buf)
        self._end_data()

    # helpers similar to Arduino's _Update_Full/_Update_Part
    def update_full(self):
        # full update: 0x22 0xF7, 0x20 then wait
        self.send_command(0x22)
        self.send_data(0xF7)
        self.send_command(0x20)
        # full refresh time in Arduino was relatively long; wait until busy releases
        self.wait_while_busy(20000)
        # after full update the Arduino sets power_is_on false; we keep state open for simplicity

    def update_partial(self):
        # partial update: 0x22 0xFC, 0x20 then wait
        self.send_command(0x22)
        self.send_data(0xFC)
        self.send_command(0x20)
        self.wait_while_busy(5000)

    def sleep(self):
        # deep sleep: 0x10 0x01 per Arduino
        self.power_off()
        self.send_command(0x10)
        self.send_data(0x01)
        # the panel only wakes from deep sleep via a hardware reset,
        # so force init() (which resets) before the next write
        self._inited = False


# Simple test: draw a 40x40 black square in the top-left corner
def example_test():
    epd = EPD(spi_id=1, sck=14, mosi=13, cs=15, dc=27, rst=26, busy=25)
    epd.init()
    epd.power_on()
    # white buffer
    buf = bytearray([0xFF] * (WIDTH * HEIGHT // 8))
    # paint a small black rectangle in top-left 40x40 (black = 0x00)
    for row in range(40):
        for col_byte in range(40 // 8):
            idx = (row * (WIDTH // 8)) + col_byte
            buf[idx] = 0x00
    epd.write_image(0, 0, WIDTH, HEIGHT, buf)
    epd.update_full()
    epd.sleep()


if __name__ == '__main__':
    example_test()
```
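`example_test` only paints whole bytes, which works because the square's edges fall on byte boundaries. Drawing arbitrary pixels into the same buffer needs bit-level addressing. Below is a minimal sketch with hypothetical helper names, assuming 1 = white (consistent with the 0xFF white buffer in the driver) and that the most significant bit of each byte is the leftmost pixel (the default packing of the png2bin.py tool further down). It is plain Python, so it should also run under MicroPython.

```python
WIDTH = 200
HEIGHT = 200
BYTES_PER_ROW = WIDTH // 8  # 25 bytes per 200-pixel row


def new_buffer(white=True):
    """Return a full-screen 1-bit buffer (bit 1 = white, bit 0 = black)."""
    return bytearray([0xFF if white else 0x00] * (BYTES_PER_ROW * HEIGHT))


def set_pixel(buf, x, y, black=True):
    """Set one pixel, assuming the MSB of each byte is the leftmost pixel."""
    idx = y * BYTES_PER_ROW + x // 8
    mask = 0x80 >> (x % 8)
    if black:
        buf[idx] &= ~mask & 0xFF  # clear the bit -> black
    else:
        buf[idx] |= mask          # set the bit -> white


def fill_rect(buf, x, y, w, h, black=True):
    """Fill a w x h rectangle pixel by pixel (slow but simple)."""
    for yy in range(y, y + h):
        for xx in range(x, x + w):
            set_pixel(buf, xx, yy, black)
```

The resulting `buf` can be passed straight to `epd.write_image(0, 0, WIDTH, HEIGHT, buf)`.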

I then extended the Python code to test images. Again, I used ChatGPT to give me a quick tool that converts PNG files (I downloaded a QR code that I wanted to use) to a .bin file whose contents I can send directly to the Waveshare screen.

Prompt

If I want to get a bytearray in python of an image, what’s the best way for me to store that image so it’s easiest to read it as a bytearray? Can you give me the code to store a png as .bin?

```python
# png2bin.py
# pip install pillow
import argparse
import sys

from PIL import Image


def pack_1bpp(im, invert=False, msb_first=True):
    im = im.convert("1")  # 1-bit BW
    w, h = im.size
    px = im.getdata()
    # Pillow "1" gives 0 or 255. Map to 0/1 bits (white = 1, black = 0 unless inverted).
    bits = [(0 if p else 1) if invert else (1 if p else 0) for p in px]
    # Bits are packed as one continuous stream, so every row starts on a byte
    # boundary only when the width is a multiple of 8 (true for 200x200).
    out = bytearray()
    for i in range(0, len(bits), 8):
        chunk = bits[i:i + 8]
        if len(chunk) < 8:
            chunk += [0] * (8 - len(chunk))
        b = 0
        if msb_first:
            for bit in chunk:
                b = (b << 1) | bit
        else:
            for k, bit in enumerate(chunk):
                b |= (bit & 1) << k
        out.append(b)
    return out, w, h


def main():
    ap = argparse.ArgumentParser()
    ap.add_argument("input_png")
    ap.add_argument("output_bin")
    ap.add_argument("--size", type=str, help="WxH (e.g. 200x200)")
    ap.add_argument("--invert", action="store_true", help="flip black/white bits")
    ap.add_argument("--lsbfirst", action="store_true", help="pack LSB-first in each byte")
    args = ap.parse_args()

    im = Image.open(args.input_png)
    if args.size:
        w, h = map(int, args.size.lower().split("x"))
        im = im.resize((w, h))

    buf, w, h = pack_1bpp(im, invert=args.invert, msb_first=not args.lsbfirst)

    # sanity: bytes should be w*h/8
    expected = (w * h + 7) // 8
    if len(buf) != expected:
        print(f"Warning: got {len(buf)} bytes, expected {expected}", file=sys.stderr)

    with open(args.output_bin, "wb") as f:
        f.write(buf)
    print(f"Wrote {args.output_bin} ({len(buf)} bytes) for {w}x{h}")


if __name__ == "__main__":
    main()
```
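Before sending a .bin to the screen, it can help to check the conversion on the laptop. Here is a minimal sketch of a hypothetical preview helper that unpacks the file back into text rows. It assumes the width is a multiple of 8, since png2bin.py packs all bits as one continuous stream and rows only line up with bytes in that case.

```python
def bin_to_ascii(data, width=200, height=200, msb_first=True):
    """Unpack a 1-bit frame into text rows: '#' = black (bit 0), '.' = white (bit 1)."""
    if width % 8:
        raise ValueError("width must be a multiple of 8")
    row_bytes = width // 8
    if len(data) < row_bytes * height:
        raise ValueError("need %d bytes, got %d" % (row_bytes * height, len(data)))
    rows = []
    for y in range(height):
        row = data[y * row_bytes:(y + 1) * row_bytes]
        chars = []
        for x in range(width):
            shift = 7 - (x % 8) if msb_first else x % 8
            bit = (row[x // 8] >> shift) & 1
            chars.append("." if bit else "#")
        rows.append("".join(chars))
    return rows
```

For example, `print("\n".join(bin_to_ascii(open("qr.bin", "rb").read())))` (with a hypothetical file name) should show the QR code as ASCII art; if it looks scrambled, the bit order or the invert flag is probably off.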

In one of the next weeks, we’ll learn how to use a logic analyzer. I will then use it to look at the communication between microcontroller and screen to verify a) that I understand what’s going on and b) that the communication is equivalent to the established C++ library. Once I’ve gone through those steps, I’m planning on open-sourcing the above driver code on GitHub so others can easily drive their V2 screens, as well.

For reference, here are the schematic PDFs for the driver board and the screen itself:

Week 4

I focused on 3D printing and scanning this week. I had a chance to chat with Dan and Quentin about my final project, though. They had some good advice for me and I’m very excited for the next two weeks which are all about electronics design and production.

I have decided to build the smart wallet (not the fridge magnets) as my final project. My goal is to hook it up to XR glasses and use it to board a flight.

Week 5

In Week 5 I verified that I understand the communication to the e-ink display. I used a logic analyzer to look at the individual bytes that are sent over the SPI channels.

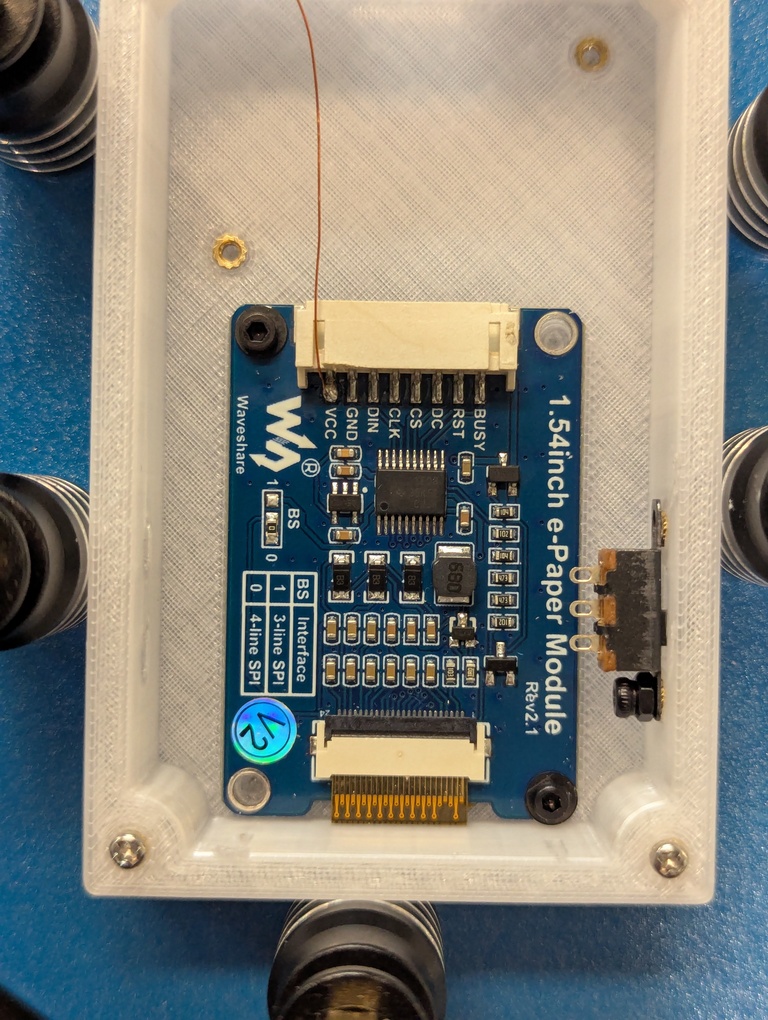

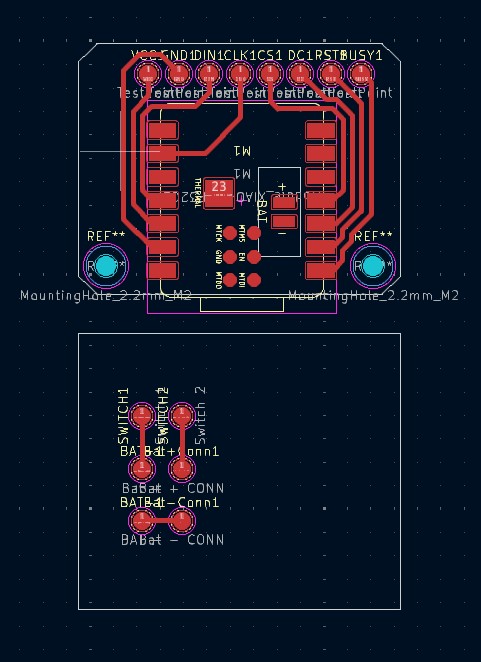
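The check boils down to splitting the byte stream at the D/C line: in the driver, `send_command` pulls DC low and `send_data` holds it high. The sketch below assumes the MOSI bytes were exported from the analyzer software together with the DC level at each byte; the `(dc, byte)` tuple format and the function names are hypothetical, since real export formats differ per tool. It groups the bytes so they can be compared against the init sequence in the driver code.

```python
def group_spi_bytes(samples):
    """Group (dc, byte) samples into (command, [data bytes]) tuples.

    dc == 0 marks a command byte and dc == 1 a data byte, matching how
    send_command/send_data drive the DC pin.
    """
    groups = []
    for dc, value in samples:
        if dc == 0:
            groups.append((value, []))
        elif groups:
            groups[-1][1].append(value)
        else:
            raise ValueError("data byte seen before any command")
    return groups


def format_groups(groups):
    """Render groups as 'CMD: DATA...' lines for eyeballing against the driver."""
    return [("0x%02X: %s" % (cmd, " ".join("%02X" % b for b in data))).rstrip()
            for cmd, data in groups]
```

The first groups of a capture taken during `init()` should read `0x12:` (soft reset), `0x01: C7 00 00` (driver output control) and `0x3C: 05` (border waveform).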

During this week’s assignment I tried to design and simulate a PCB that can replace the driver board that one of the e-ink screens came with. I re-made and adjusted the schematics published by Waveshare. Unfortunately, I couldn’t confirm yet that the design is correct and that the board will work.

Week 6

In Week 6 I tried to fabricate the board with the boost converter on it. It was a little bit of a mess overall because there are so many traces to keep track of. I wasn’t able to test if the boost converter actually works.

Week 7 - Input Devices

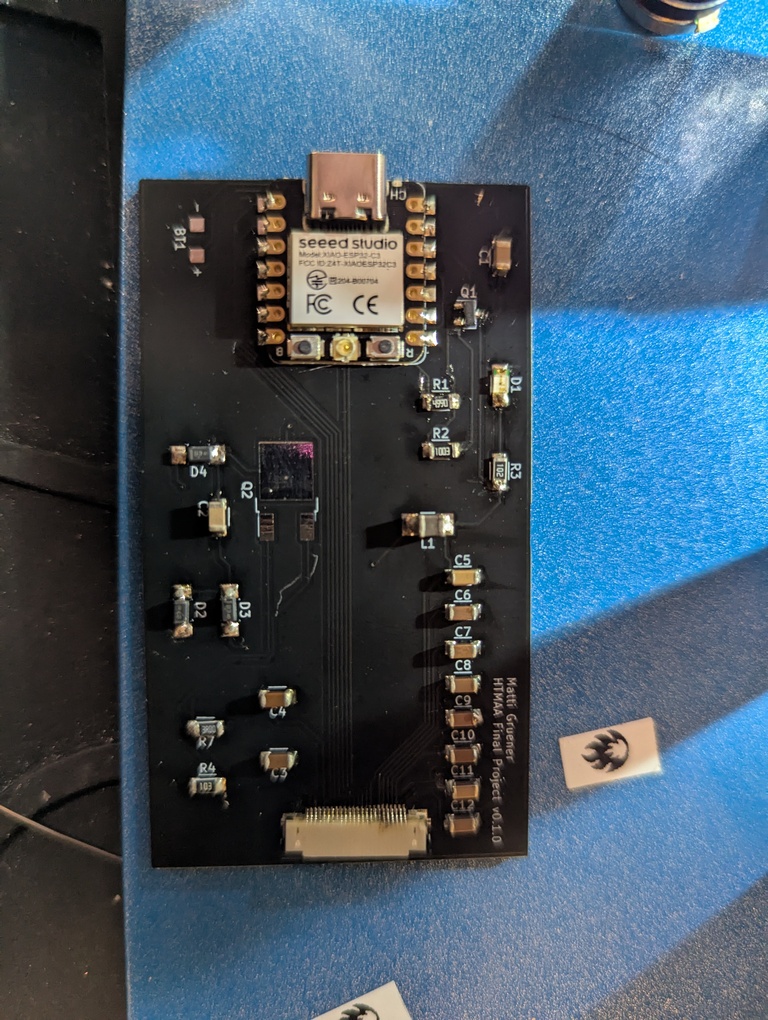

I re-designed the board and on October 26th sent it off to JLCPCB to get a cleaner 2-sided board that I can experiment with. I also ordered additional parts that the HTM inventory currently doesn’t have.

For this week’s assignment, I made a device that reads an NFC tag and then communicates through one of my servers with the Spotify API. I will most likely need a similar setup for the final project. The XR glasses will want to communicate to some server what image should be shown on the physical device (it needs a name!!). The server probably should provide some GET endpoint that I can then use to pull the image onto the e-ink screen. To trigger the ESP to make that GET request I could use an MQTT queue, but I’m not sure yet whether that’s actually the best approach. Will have to do some experimenting. I’m planning on doing that while doing the networking assignment in Week 12. Another option is BLE, but most XR glasses don’t really give you access to their bluetooth hardware.
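Whichever trigger wins (MQTT, BLE or plain polling), the device side of the pull approach stays small. Here is a minimal sketch of the polling variant with hypothetical names: `fetch` is whatever performs the GET request (on the ESP32, for example, `urequests.get(...).content`), and `show` is whatever pushes the bytes to the screen (for example `write_image` followed by `update_full` from the driver). Because a full e-ink refresh is slow, the sketch hashes each download and only redraws when the content actually changed.

```python
import hashlib


class ImagePoller:
    """Redraw the e-ink screen only when the server returns a new image."""

    def __init__(self, fetch, show):
        self.fetch = fetch          # callable returning image bytes, or None
        self.show = show            # callable that draws the bytes on the screen
        self.last_digest = None

    def poll_once(self):
        """Fetch once; return True if the screen was redrawn."""
        data = self.fetch()
        if not data:
            return False            # nothing available yet
        digest = hashlib.sha256(data).digest()
        if digest == self.last_digest:
            return False            # unchanged: skip the slow full refresh
        self.show(data)
        self.last_digest = digest
        return True
```

On the device this would run in a loop with a sleep between calls; an MQTT message could simply call `poll_once()` instead of a timer.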

Week 9 - Output Devices

After the board arrived in the mail, I soldered all the components onto it. There is one design flaw: one of the MOSFETs has the wrong footprint on the board. I was designing around one of the MOSFETs that we have in the HTM inventory, but then decided to order and use a different, much smaller one that’s better for fast switching.

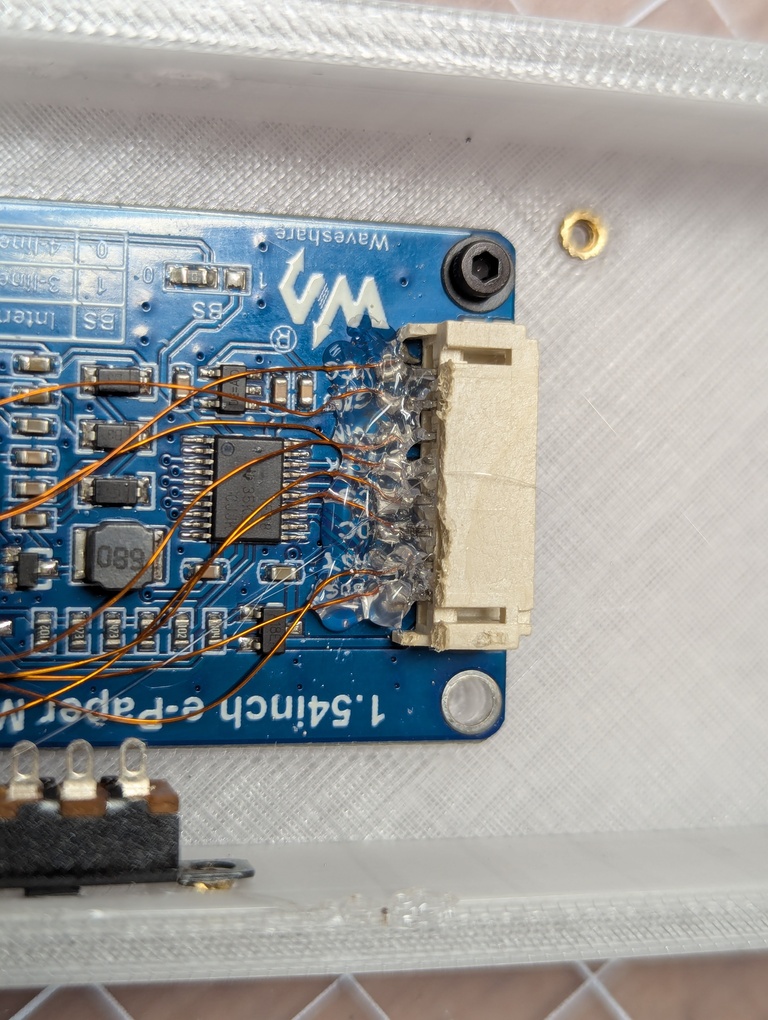

It took me a long time and 2 or 3 different boards to get the 24-pin connector soldered. The pins are very small and I struggled quite a bit. The microscope in the electronics lab in the basement was a great help. Quentin saw me struggling and showed me how to use solder wick effectively to clean up shorts between the pins. Thank you, Quentin!

The board still doesn’t work, and at this point I’m considering going with plan B, which is using the commercial driver board. For spiral development reasons, I think that would make a lot of sense, at least to get to a working end-to-end test before trying to improve the device.

Week 10 - Molding & Casting

This was molding and casting week and I couldn’t get anything done for my final project.

Week 11 - Machine Week

Ball week! Too busy! Can’t do anything else!!!

Midterm Review and Remaining Work

- Make the housing for the screen (Networking Week)

- Final test of the custom PCB with the boost converter, or maybe just a simpler custom PCB (Networking Week)

- Write software for XR glasses (Interfaces/Programming Week)

- Add cloud setup (Networking Week, similar to Input Devices Week)

Week 12 - Networking Week

I made some server infrastructure to expose an API for the device I want to make. More information can be found on the page for Week 12. The idea is to let external services send information to the device in my pocket. That’ll enable some AI use cases and most importantly XR devices to send data as long as they’re on some wifi. No bluetooth connection required.
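The actual infrastructure is documented on the Week 12 page. To illustrate the general shape of such an API, here is a minimal, hypothetical sketch using only Python's standard library: a single `/image` endpoint that accepts a packed 200x200 frame via PUT (from n8n, the Spectacles app or a script) and serves the latest frame via GET for the device to pull. The endpoint name and status codes are assumptions, and there is no authentication, which a server reachable from the internet would need.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

IMAGE_BYTES = 200 * 200 // 8  # one packed 1-bit frame for the 200x200 panel
_latest = {"data": None}      # most recent frame, kept in memory only
_lock = threading.Lock()


class ImageHandler(BaseHTTPRequestHandler):
    def do_PUT(self):
        if self.path != "/image":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        if len(body) != IMAGE_BYTES:
            self.send_error(400, "expected %d bytes" % IMAGE_BYTES)
            return
        with _lock:
            _latest["data"] = body
        self.send_response(204)  # stored, nothing to return
        self.end_headers()

    def do_GET(self):
        if self.path != "/image":
            self.send_error(404)
            return
        with _lock:
            data = _latest["data"]
        if data is None:
            self.send_error(404, "no image yet")
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/octet-stream")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args):
        pass  # keep the console quiet


def serve(host="0.0.0.0", port=8000):
    ThreadingHTTPServer((host, port), ImageHandler).serve_forever()
```

A frame produced by png2bin.py could then be uploaded with something like `curl -X PUT --data-binary @qr.bin http://<server>:8000/image`.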

Week 13 - Programming Week

I made an interface for selecting QR codes for the Snap Spectacles XR glasses. More information on the page for Week 13.

I also started designing the final PCB I want to use. I’m abandoning the idea of using a professionally manufactured board that includes everything needed to replace the driver board.

Work Done During The Last Two Weeks (Including Wildcard)

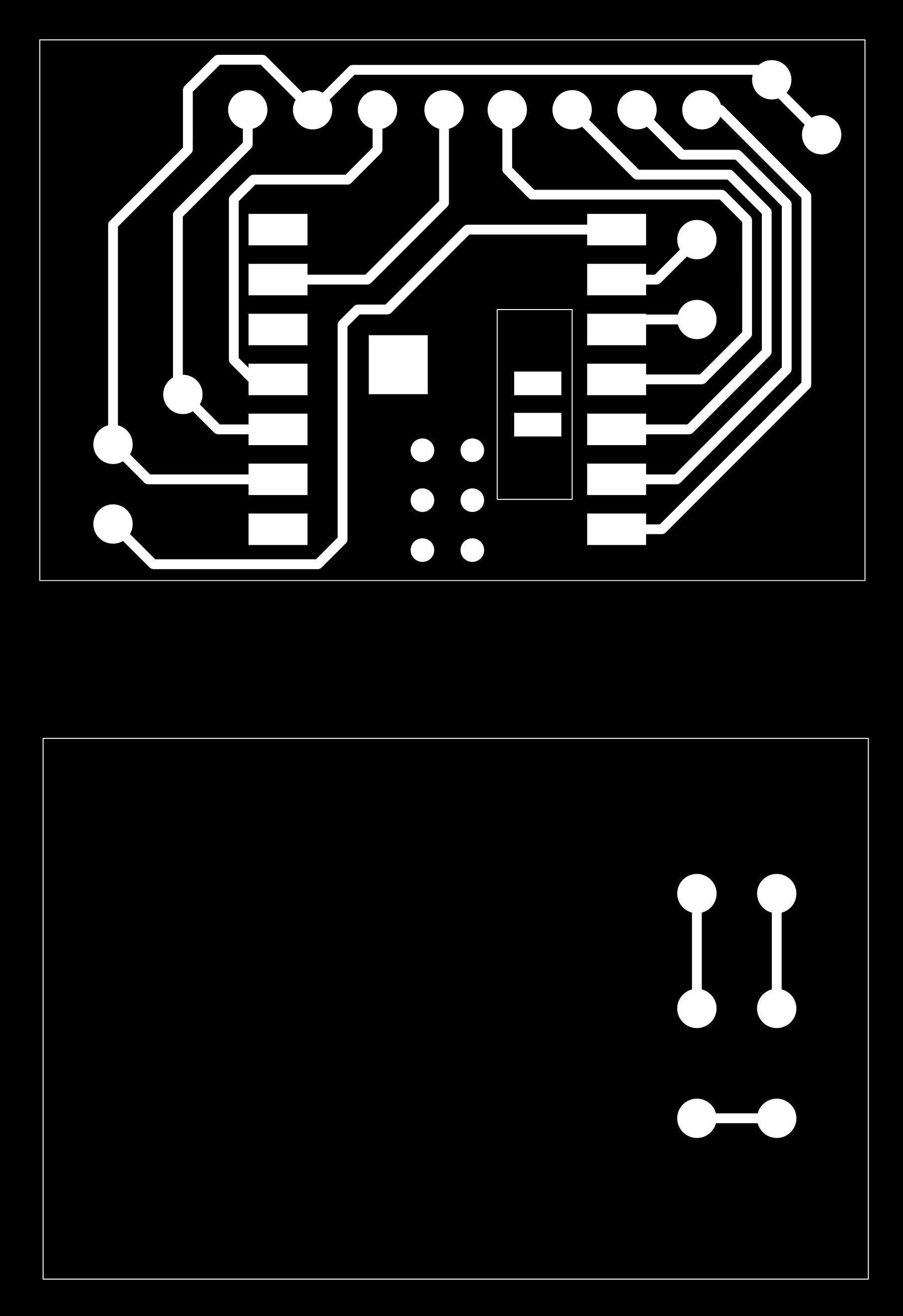

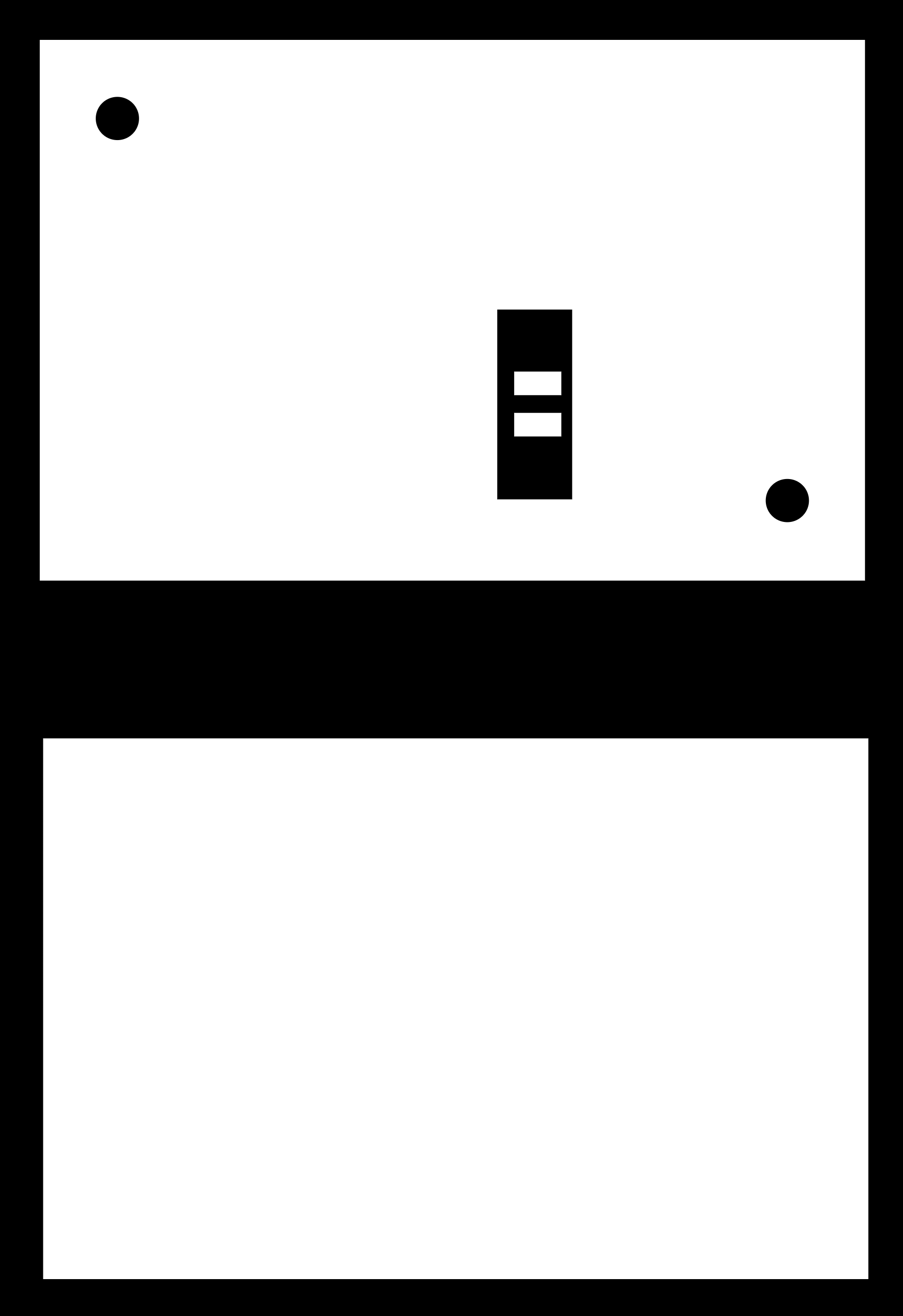

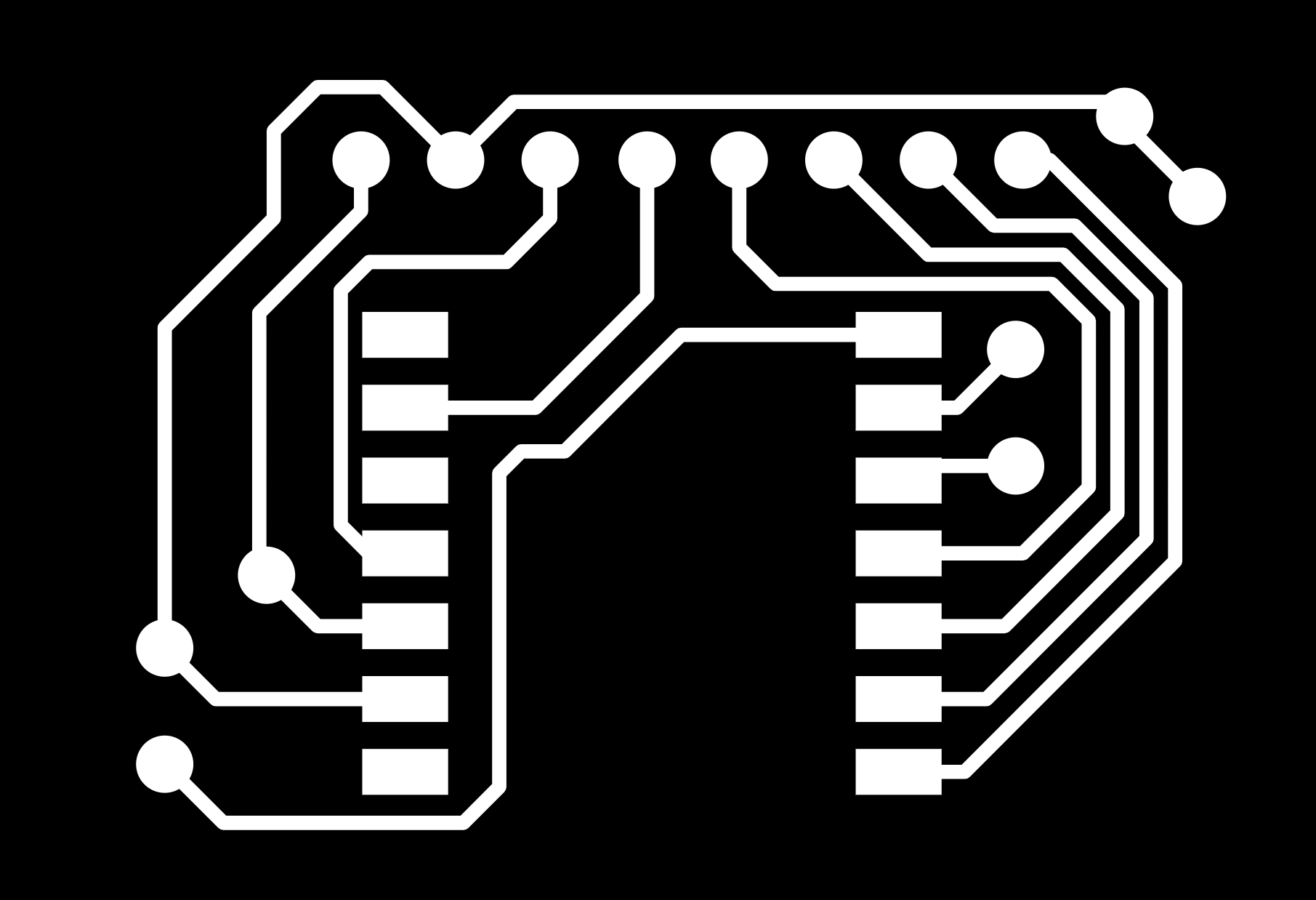

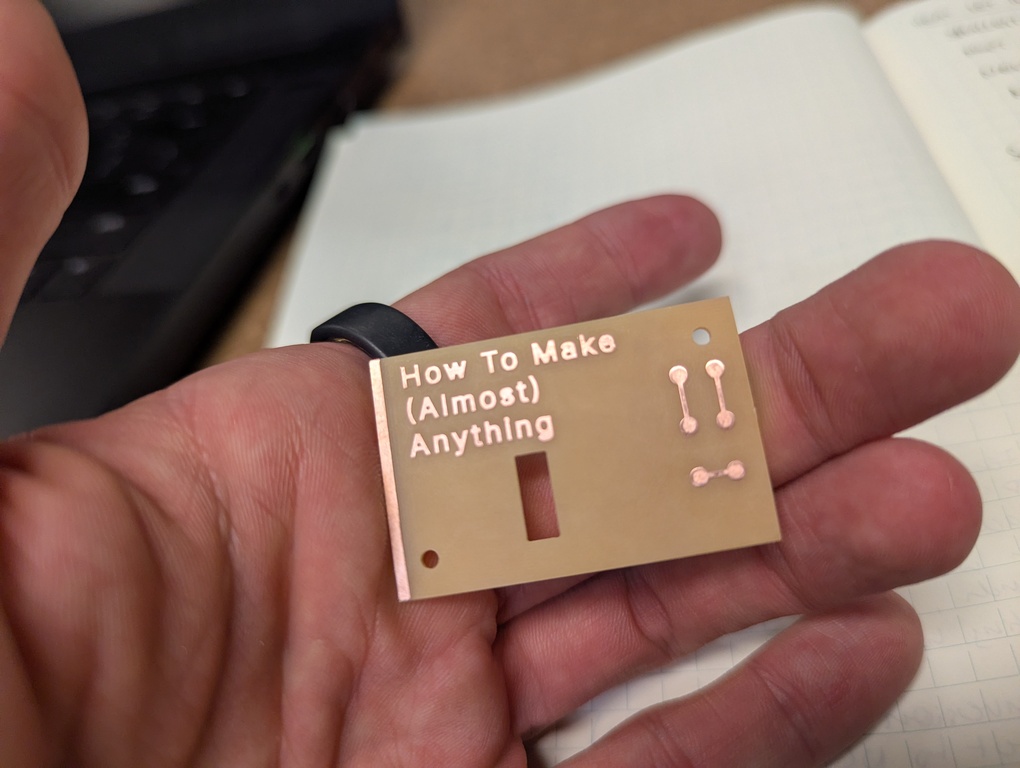

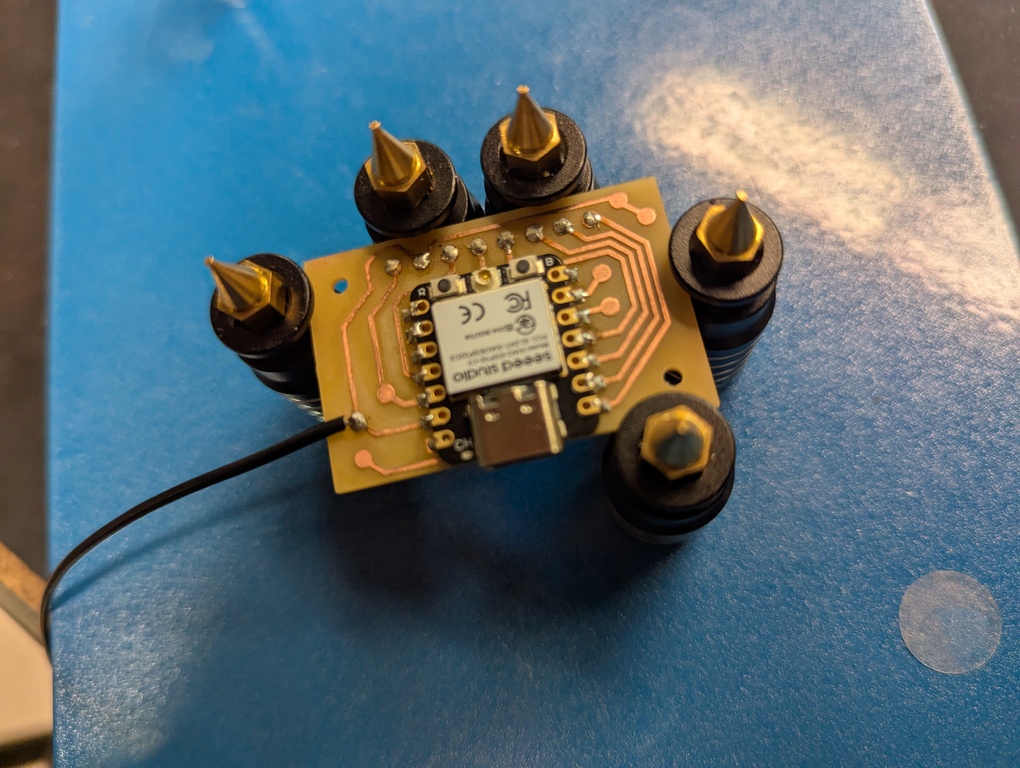

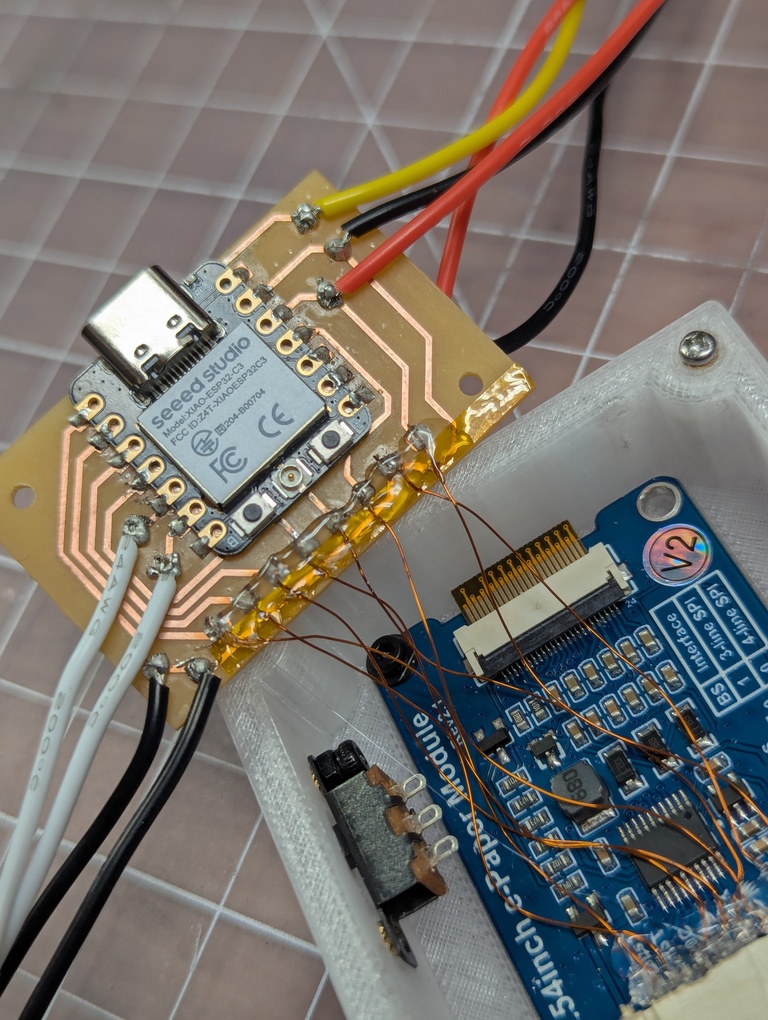

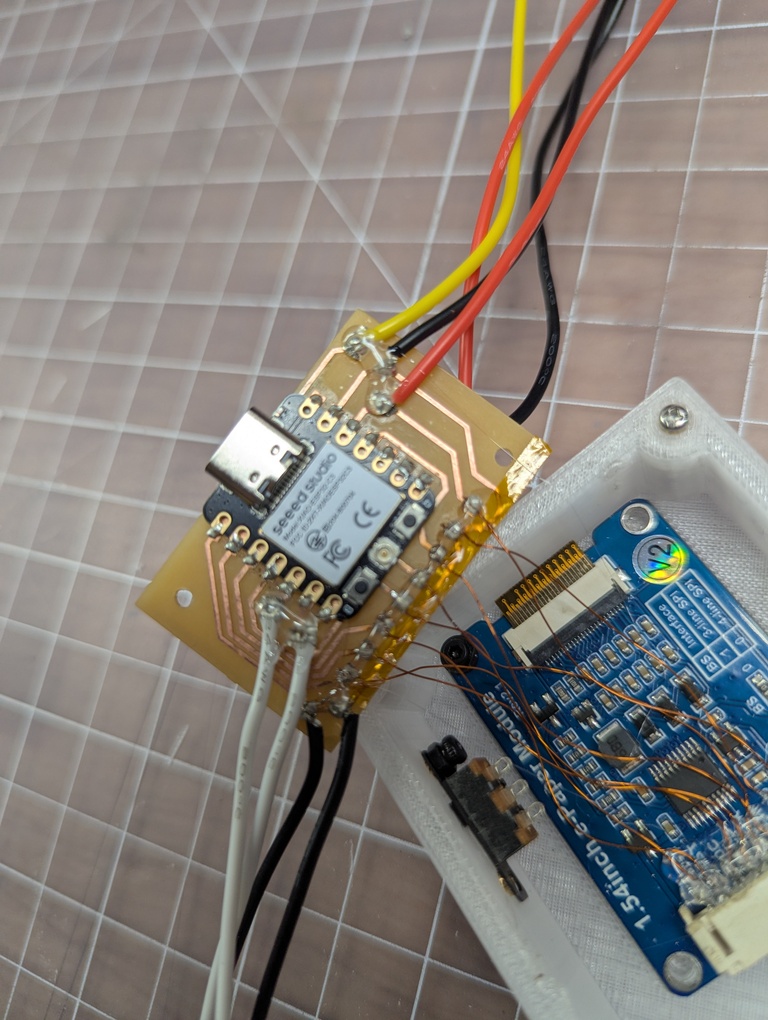

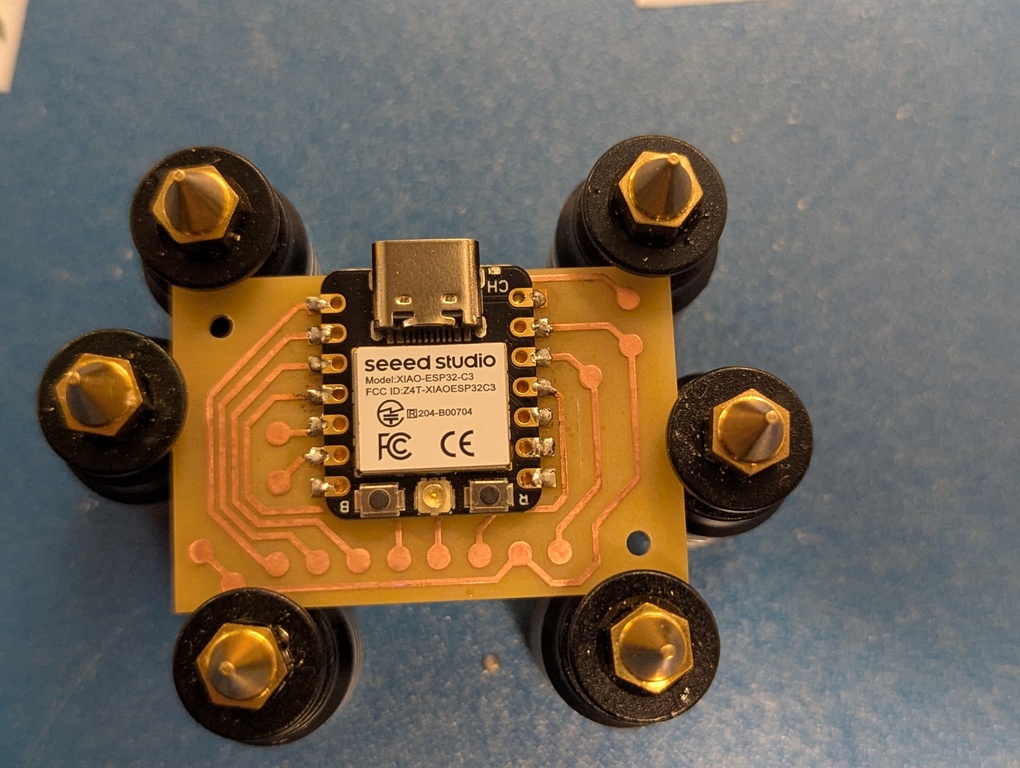

PCB

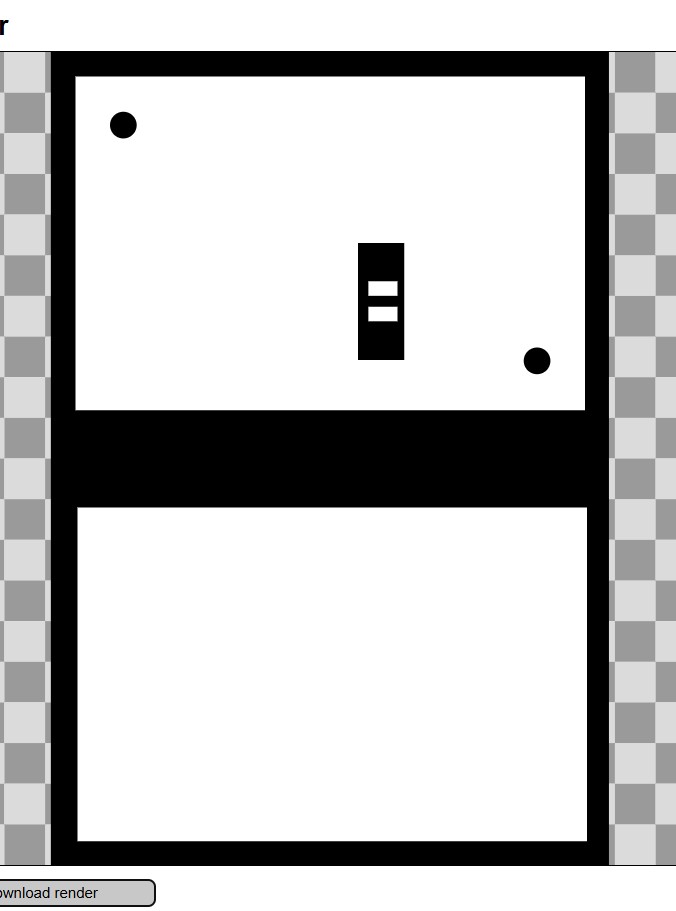

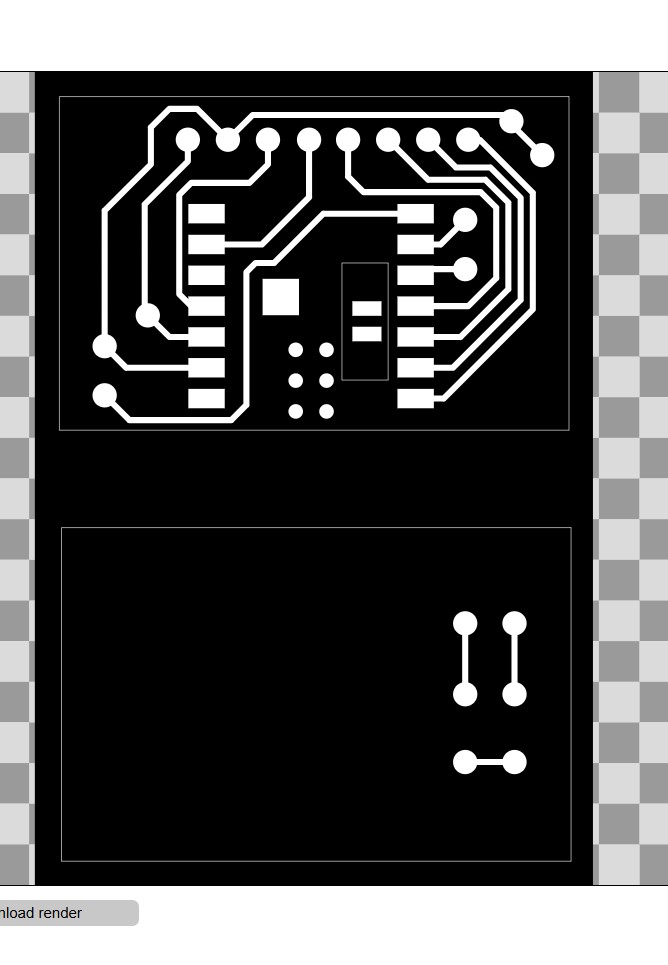

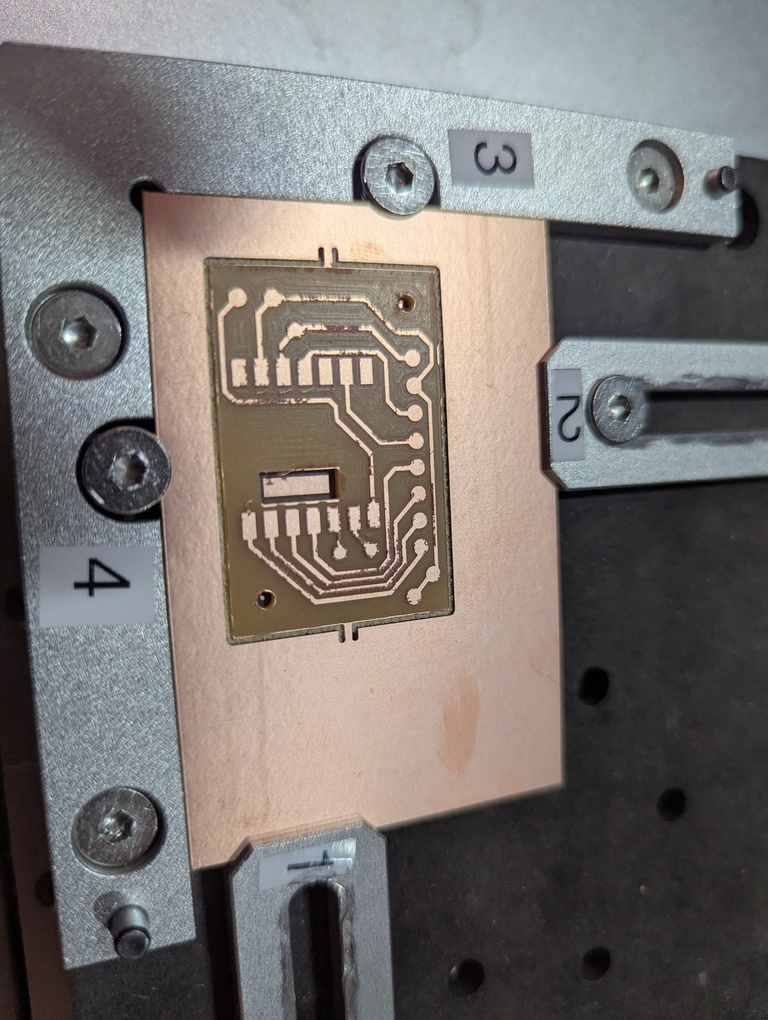

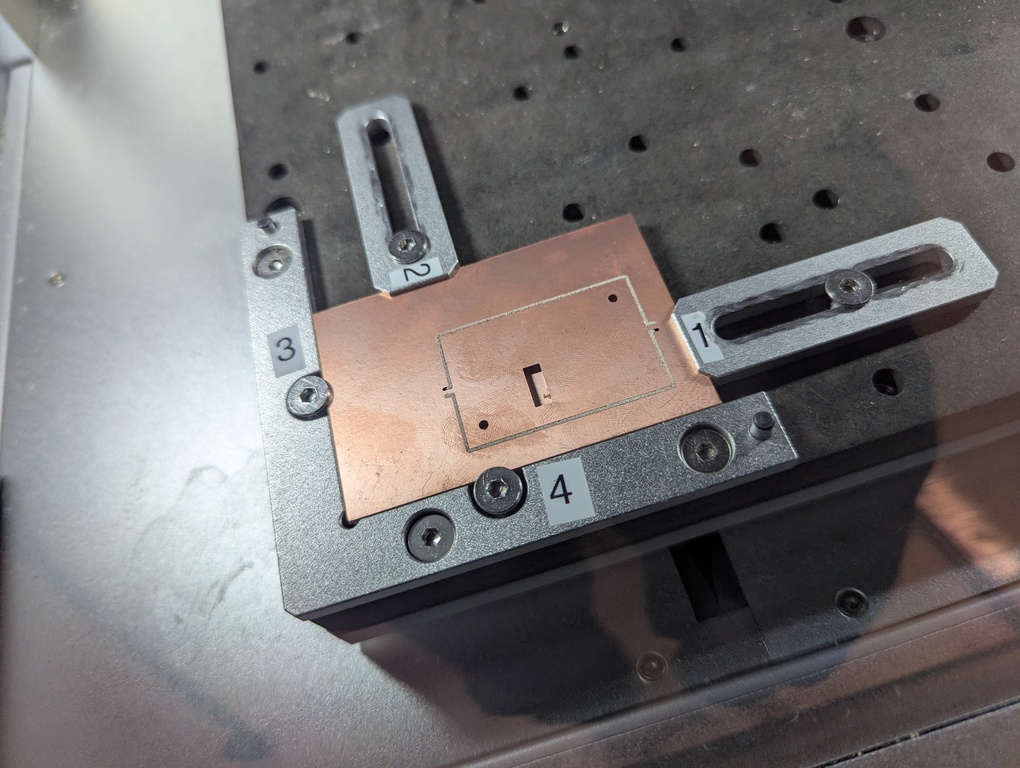

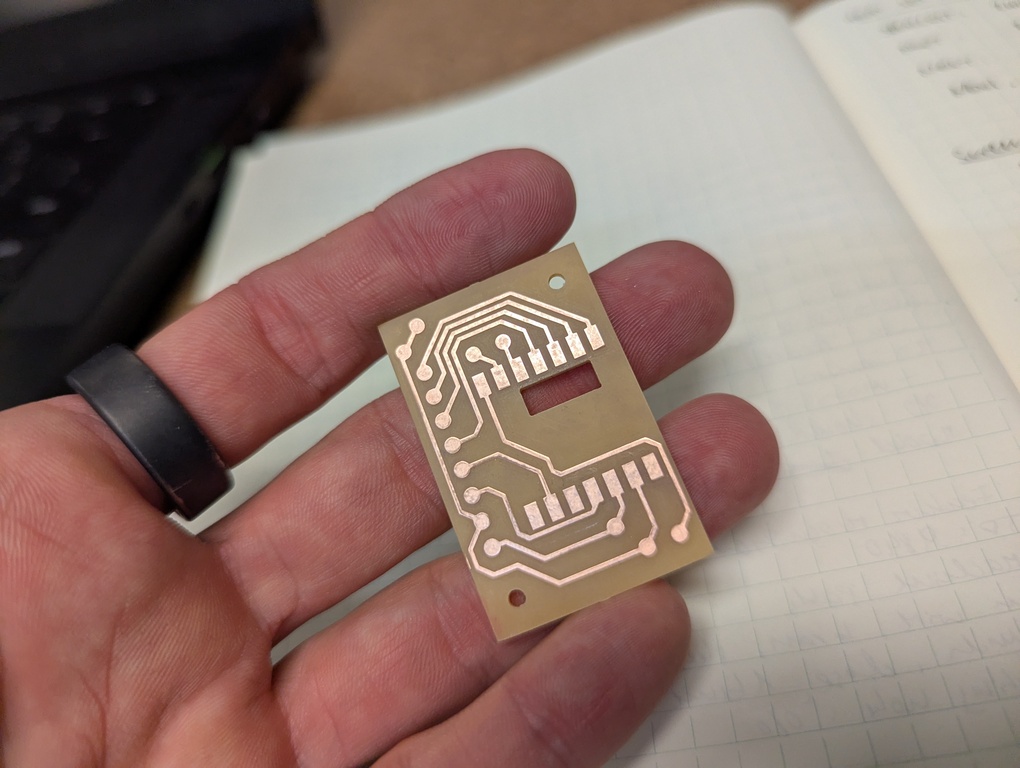

I added a few components to the PCB design (buttons, LED data line breakouts) and changed the layout accordingly. I then exported Gerber files. You can find those files on the right (FP-Board.zip).

I then upload the gerber files into Quentin’s tool that can convert gerber files into a PNG. I download the two images.

I modify them in GIMP and make the final files that I upload into Neil’s mods application to generate the toolpaths. Remember to flip/mirror the edges image with the drill holes when aligning the text.

I run the first job on the Carvera machine to create the front of the PCB. I then flip the PCB over and screw it down again. From the width of the stock, the offset I used for the front and the width of the PCB itself, I calculate the new offset that will make the back side line up with the front.

I admit that I used a bit of a hack to make the back. I didn’t know how to get mods to skip the toolpaths that cut out the board, and I didn’t want those for the back of the board so as not to make those cuts a second time. Since those cuts are made at the end, I closely observed what the machine was doing and stopped it right when it was about to make them. Clearly, there is a better way to do this. But it’s a Saturday night and I want to get this board done :)

There must be something slightly misaligned, or something that I don’t understand about the Carvera’s offset calculations. As you can see in the images below, I didn’t get the offset quite right (the math is simple enough and should be correct). There’s a little stripe of copper left on the left side of the board. I don’t mind it. It actually looks nice.
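For reference, the flip math can be written down explicitly. This is a minimal sketch, assuming the stock is flipped left-to-right, re-clamped against the same reference edge, and that all offsets are measured from that edge to the board's left edge; the function name and the numbers in the example are hypothetical. Anything that breaks these assumptions (stock edges that aren't square, a slightly different clamping position, a work origin that isn't exactly at the stock edge) could show up as a leftover stripe like the one above.

```python
def back_side_offset(stock_width, front_offset, board_width):
    """X offset (same units as the inputs) for the back-side job after a flip.

    Flipping about the vertical axis maps a position x (measured from the
    reference edge) to stock_width - x. A board spanning
    [front_offset, front_offset + board_width] therefore lands at
    [stock_width - front_offset - board_width, stock_width - front_offset].
    """
    return stock_width - front_offset - board_width


# Example with made-up numbers (mm): 100 mm stock, board 50 mm wide cut 10 mm in.
print(back_side_offset(100.0, 10.0, 50.0))  # 40.0
```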

I then sand the board and manually scratch off a few very thin leftover copper pieces that I don’t want on the board. I really like how the text came out.

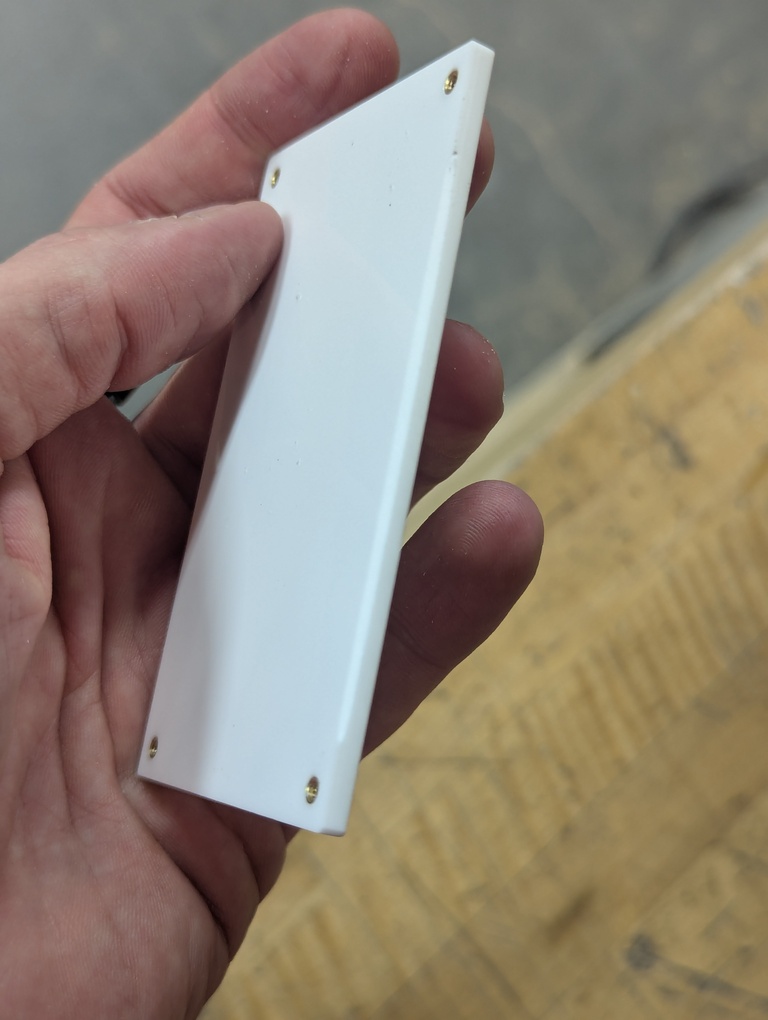

Acrylics

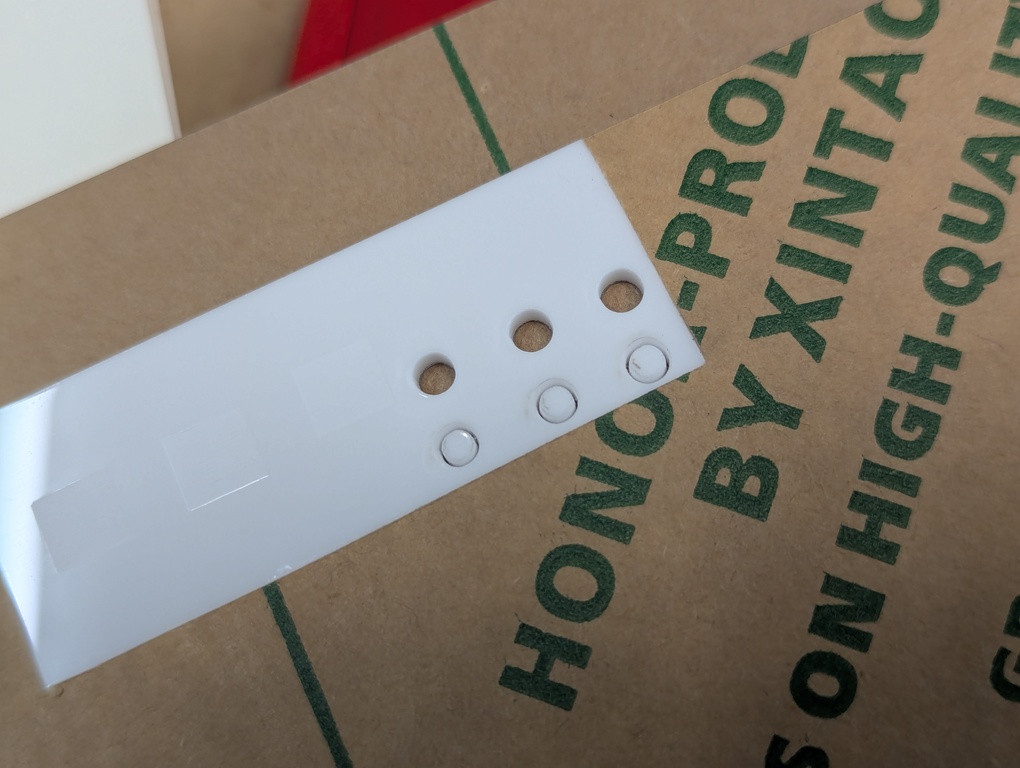

I’m running tests on some acrylic sheets that I ordered from Amazon. I want to understand the parameters required for the laser cutter to cut through the sheets. Also, I want to play with engraving and see what looks best and what sort of looks I can achieve. The acrylic is 2.7mm thick.

Cutting

First, I use the white acrylic and figure out cutting power and speeds to cut through the material.

| Power | Speed | Notes |
|---|---|---|
| 30 | 10 | Does not quite cut through the sheet |
| 35 | 5 | Successfully cuts through the sheet |

That was quick. These feel like reasonable cutting values. I later confirm that they also work on the clear acrylic.

Engraving

I’m using the white acrylic to explore what engraving might look like.

| Power | Speed | Lines/cm | Notes |
|---|---|---|---|
| 5 | 80 | 100 | Looks ok, some lines are visible. |
| 5 | 120 | 160 | Looks ok, some lines still visible. |
| 5 | 160 | 220 | Definitely the best, looks nice. |

The results are pretty good! The video doesn’t quite show the lines that were visible in the first 2 tests. All parameter sets were pretty decent, but I’ll go with the higher number of Lines/cm if I use this for my project.

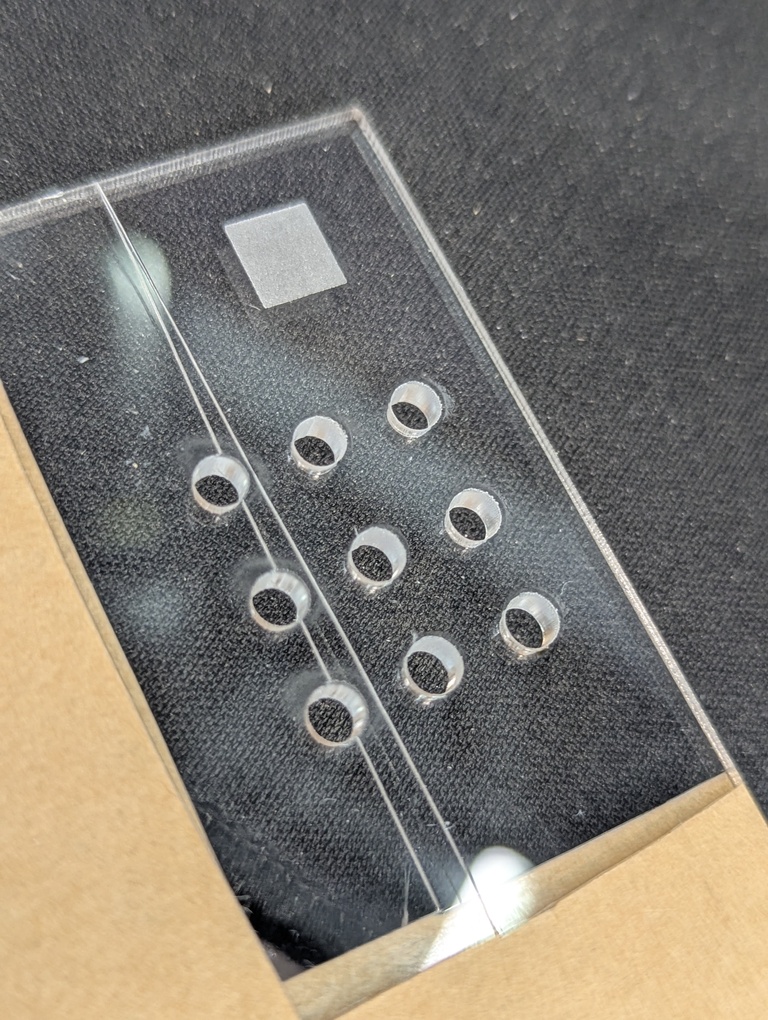

Next, I’m interested in what sizes the holes would have to have if I want to add heat set inserts. I try these diameters for a 3.2mm diameter threaded insert: 2.9mm, 3mm and 3.1mm.

Then I use a soldering iron to put the threaded inserts into the plastic. The 2.9mm hole is the only one that really makes sense. The others are too big. When I use this process in the assembly of the project, I’ll even go down to 2.8mm, I think.

I am a little concerned about alignment and precision. I want to use screws to keep some pieces together. For that to work the alignment of the threaded inserts needs to be pretty spot on. I’m thinking about how to guarantee good alignment. I’m not sure how to do that just yet.

I then also try to cut out a piece of engraved clear acrylic to embed it into the white acrylic. I think that might be a nice option for having some status LED light come through the housing of the project. I try different sizes of the “hole” to make up for the kerf of the laser.
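The kerf hunting can be framed as a small calculation that gives a starting point for test cuts. This is a minimal sketch, assuming the beam is centred on the drawn line and the kerf is the same for both pieces; the function name and the 0.2 mm kerf in the example are hypothetical, not measurements from these tests.

```python
def inlay_drawn_sizes(target, kerf):
    """Return (hole_drawn, piece_drawn) for an inlay whose cut size should be `target`.

    Cutting removes about kerf / 2 on each side of the drawn line, so a drawn
    hole comes out roughly `kerf` larger and a drawn piece roughly `kerf`
    smaller. Drawing the hole smaller and the piece larger compensates.
    """
    hole_drawn = target - kerf   # grows by ~kerf when cut
    piece_drawn = target + kerf  # shrinks by ~kerf when cut
    return hole_drawn, piece_drawn


# Example: a 10 mm inlay with an assumed 0.2 mm kerf -> draw ~9.8 mm hole, ~10.2 mm piece.
print(inlay_drawn_sizes(10.0, 0.2))
```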

I then also try to hot glue the pieces together. I apply too much pressure and the white acrylic cracks. That’s ok for these tests but something to be aware of and careful with for the final assembly.

Enclosure

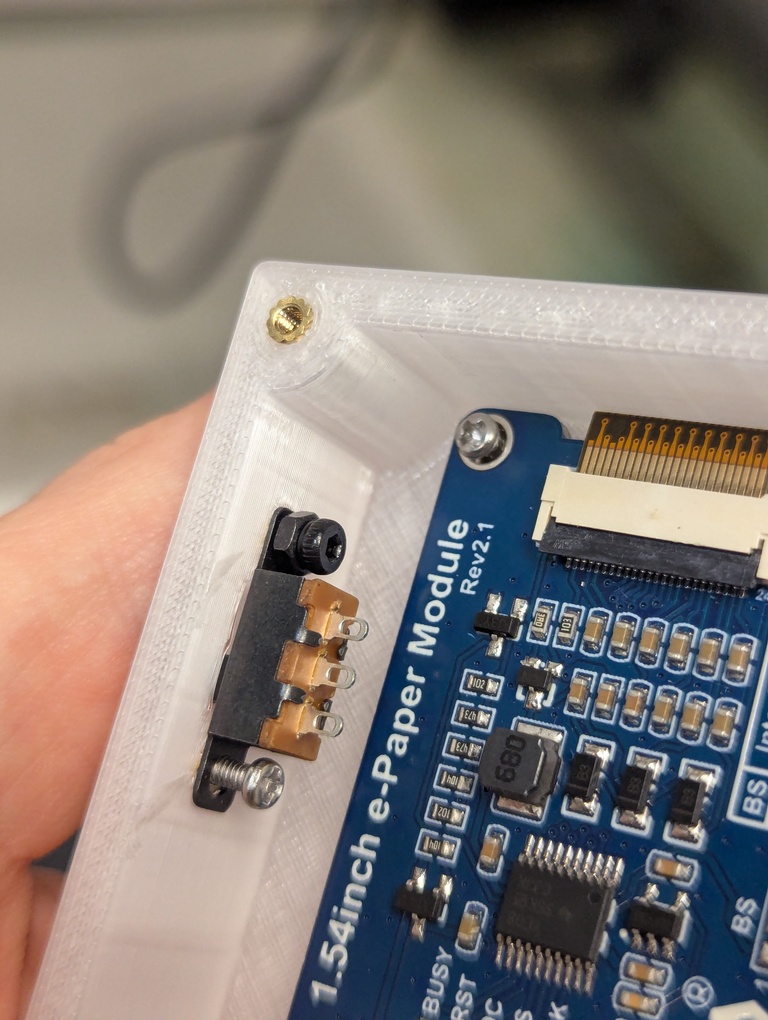

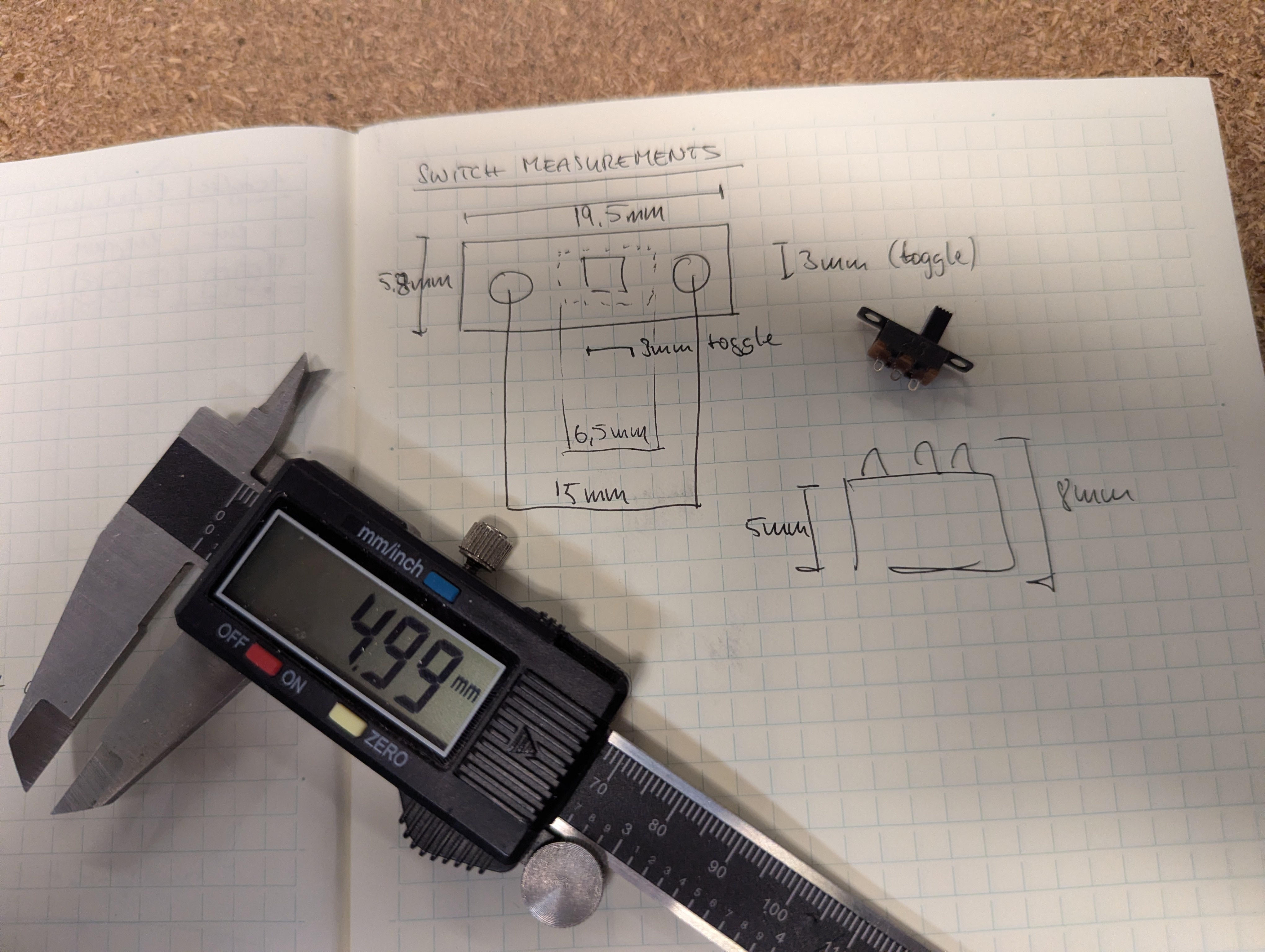

I continued some design work that I started a week or two ago. Some of the work today was around finding the correct measurements for the parts that I want to use. For my switch, for example, I used calipers to figure out the dimensions.

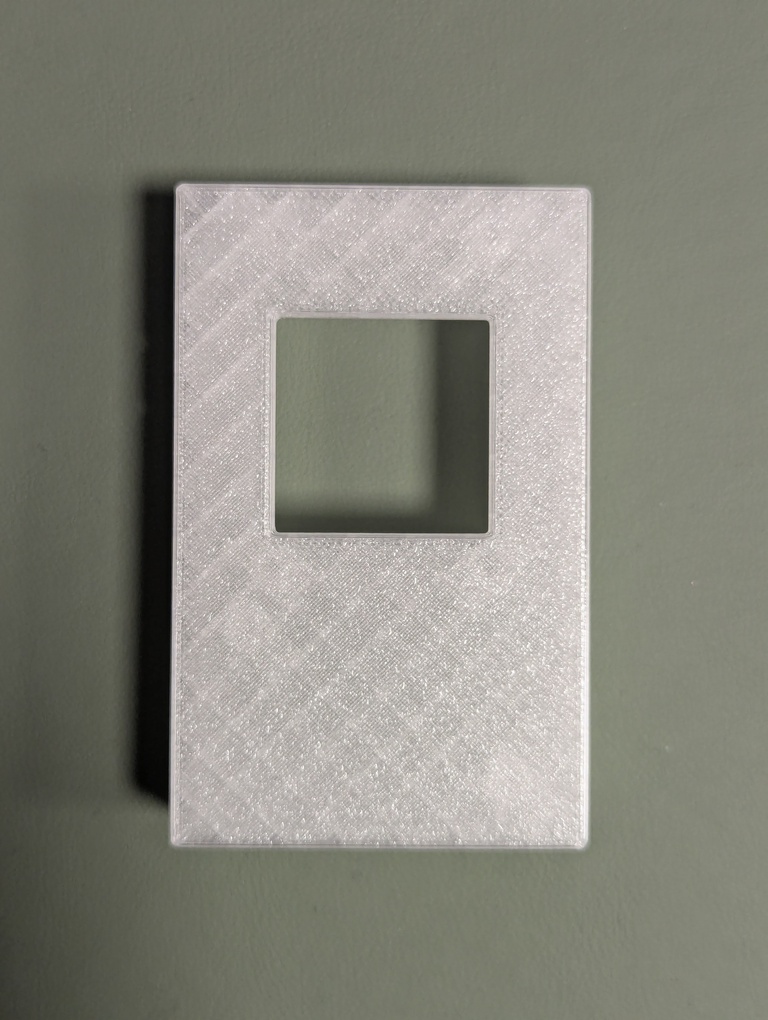

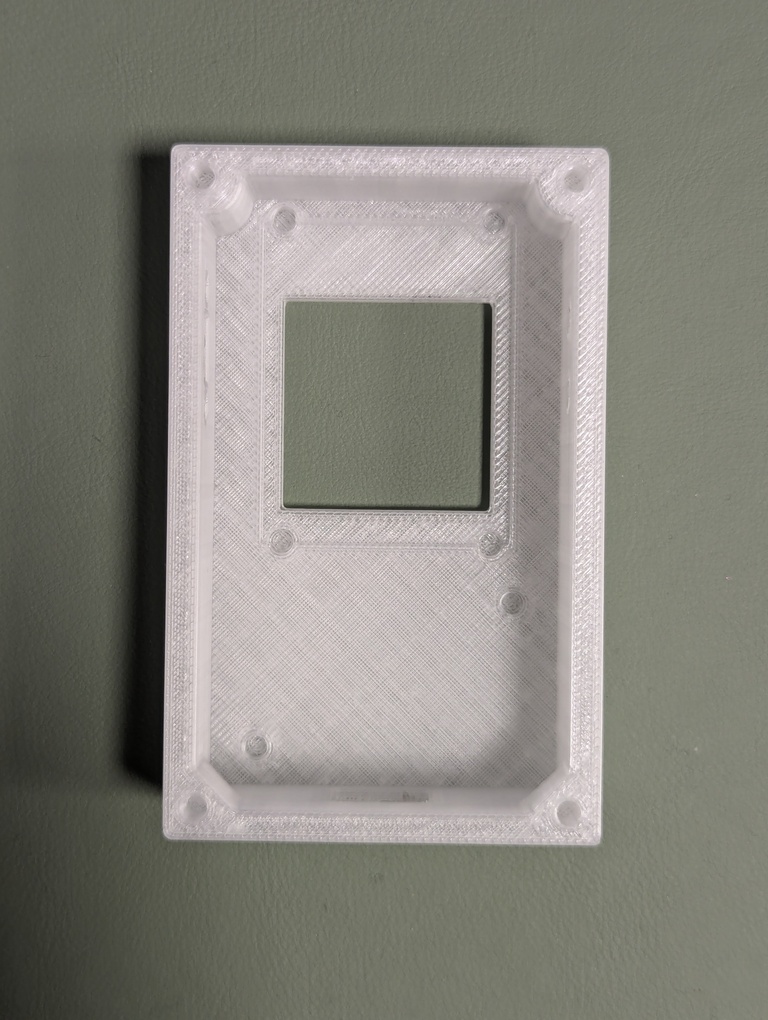

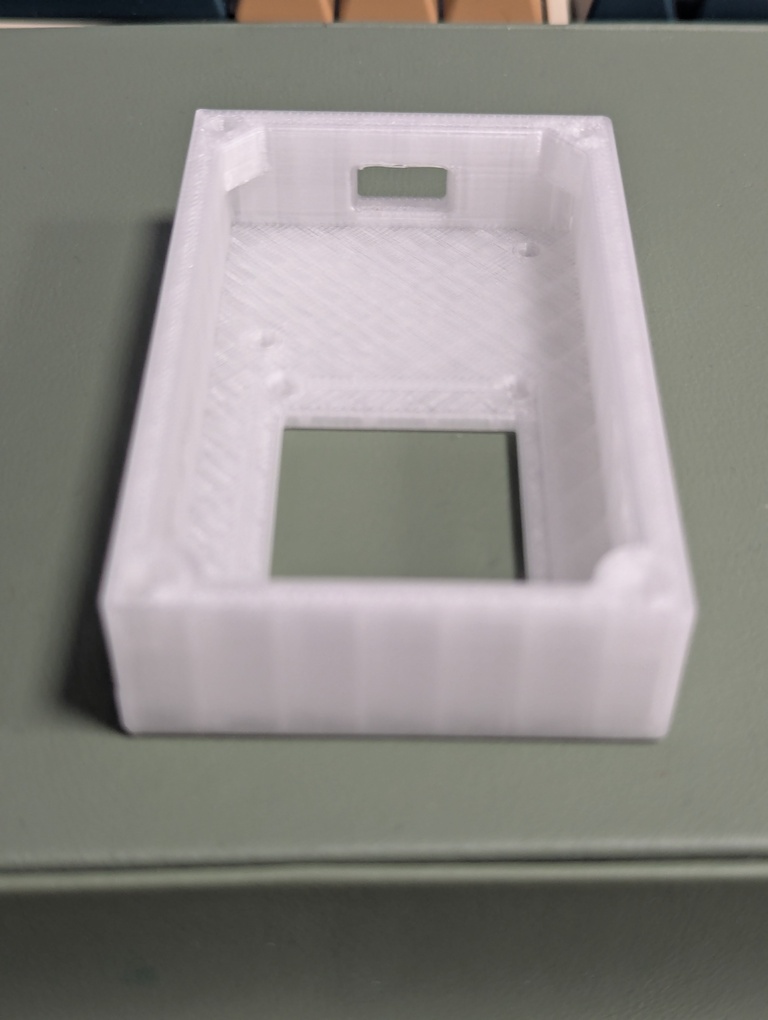

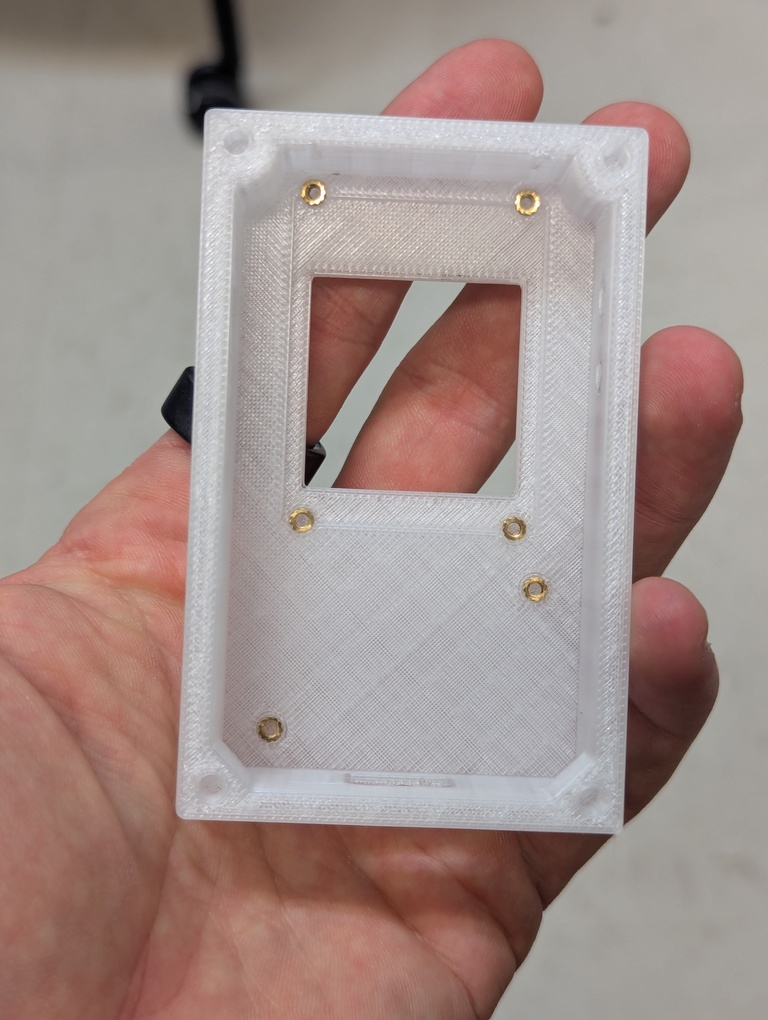

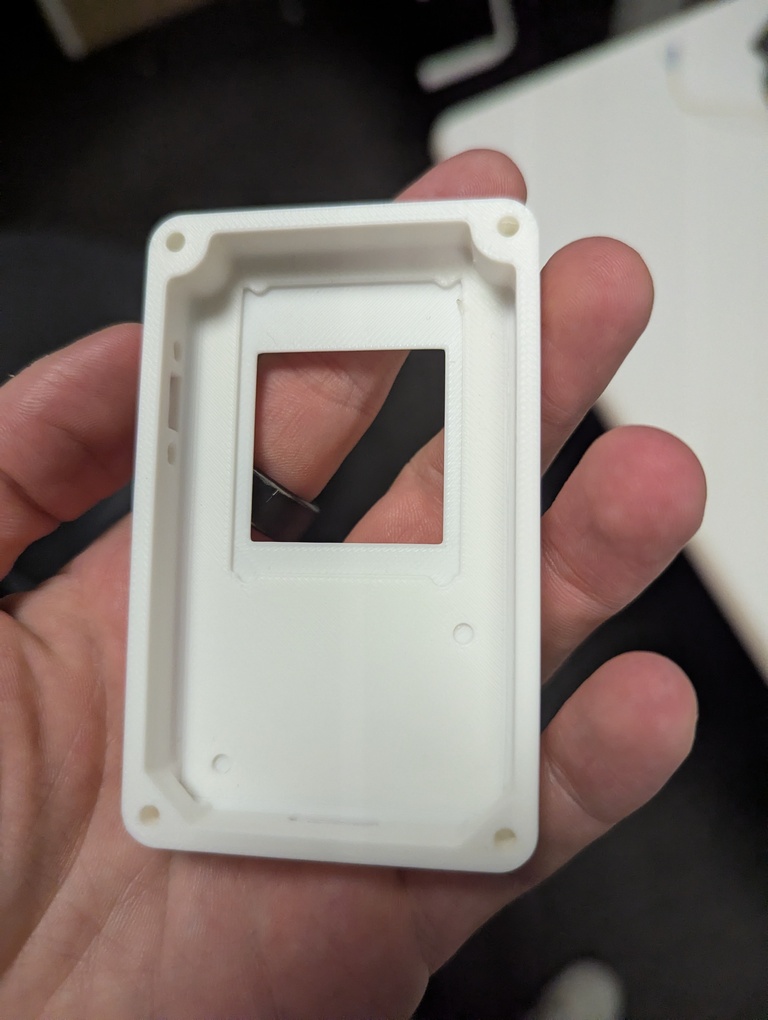

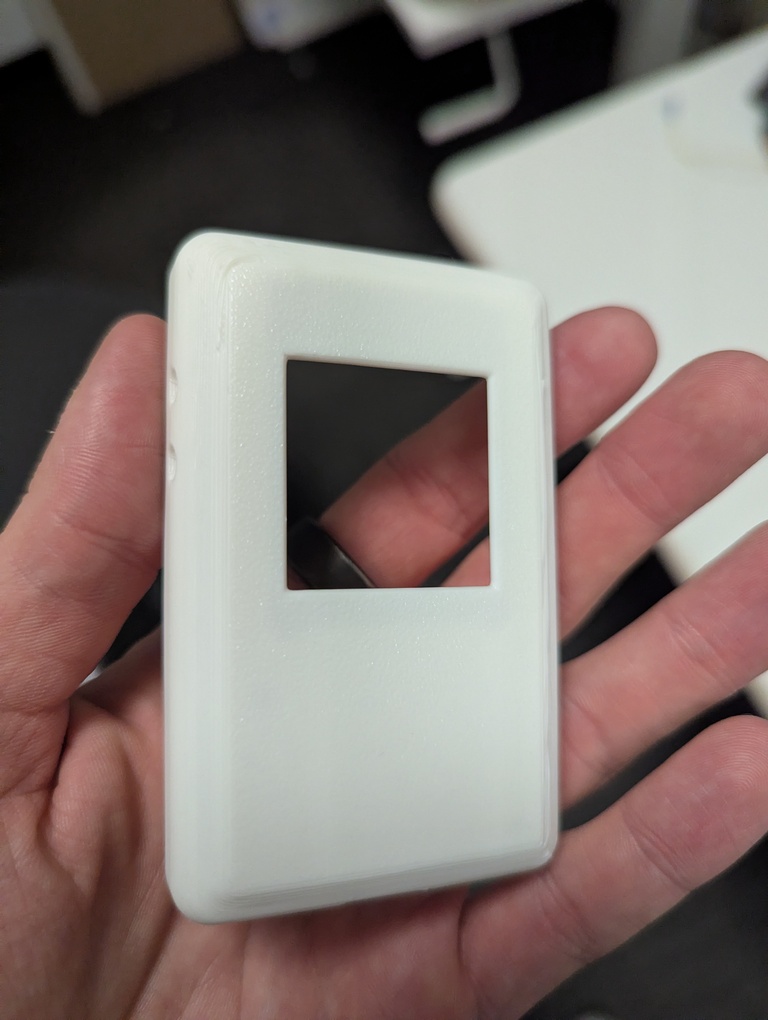

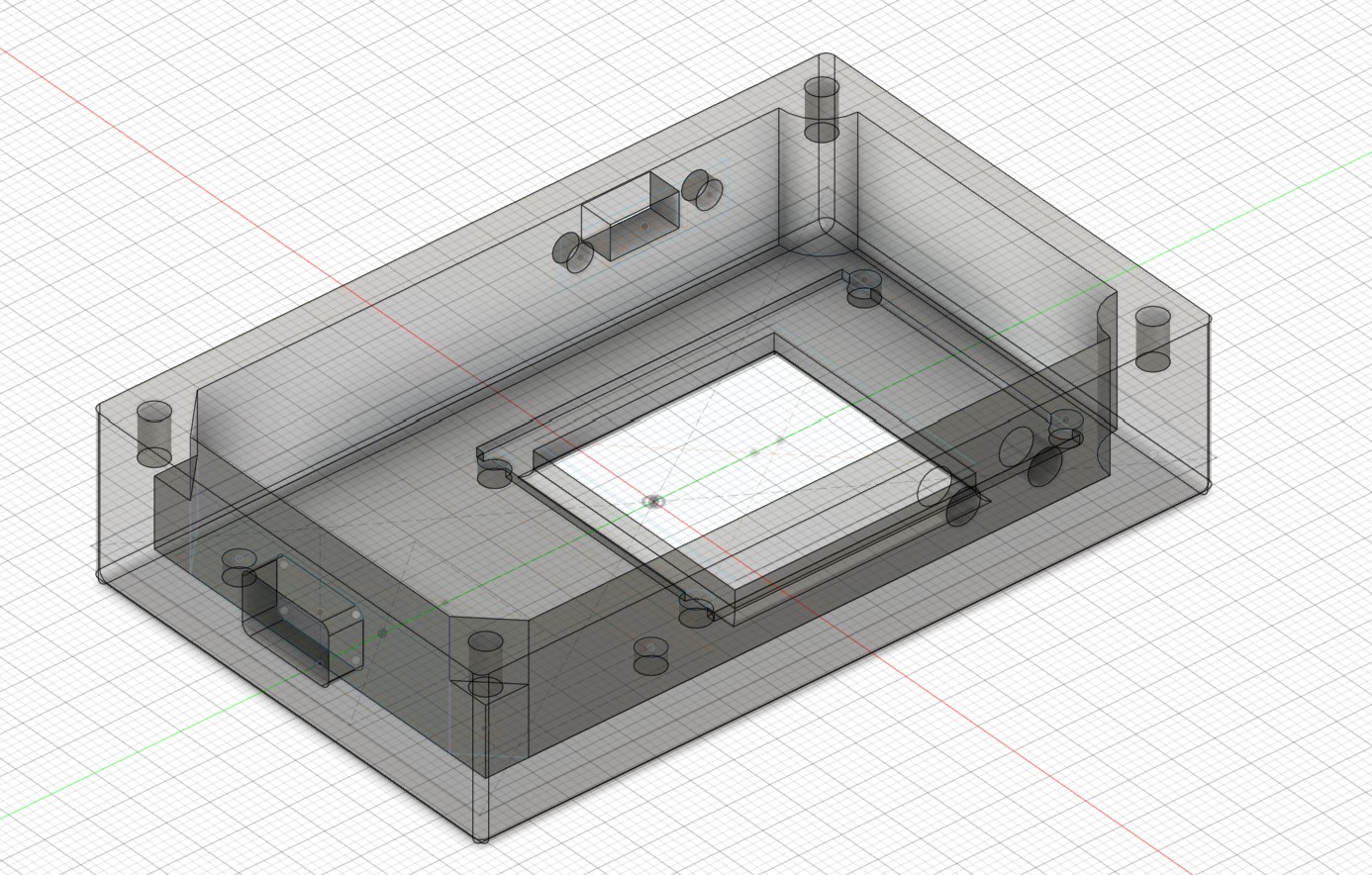

This is my v1 that’ll be printed:

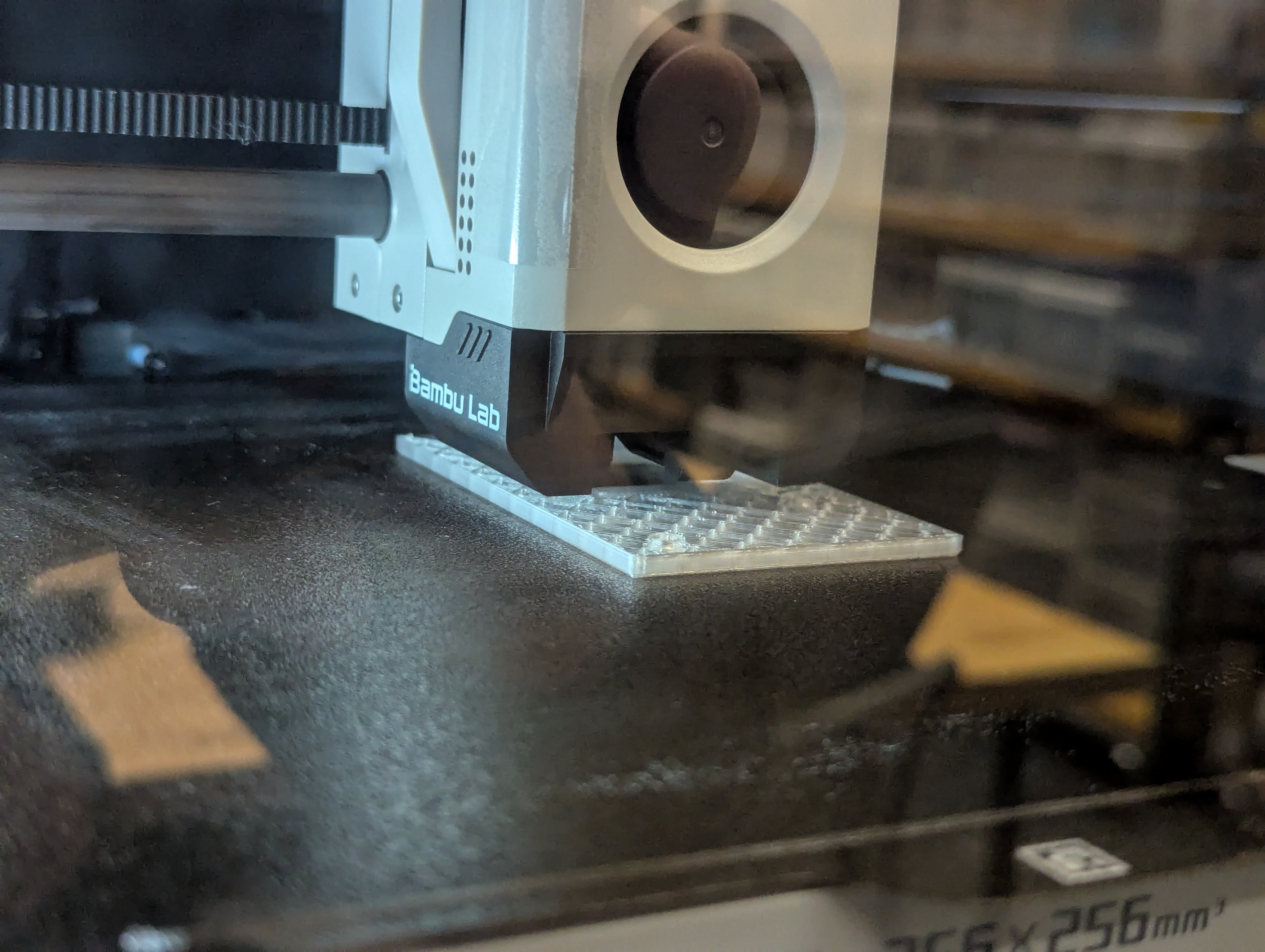

I print it on a Bambu printer. SaetB was kind enough to work with me to get it printed on a printer in her lab. I would like a nice print for this and the Prusas in the Mars Lab seem to mostly produce prints that are somewhat lacking. So, the Bambu it is (P1S). Here’s the printer in action:

The print came out pretty nicely. I’m happy with the result!

First Assembly Tests

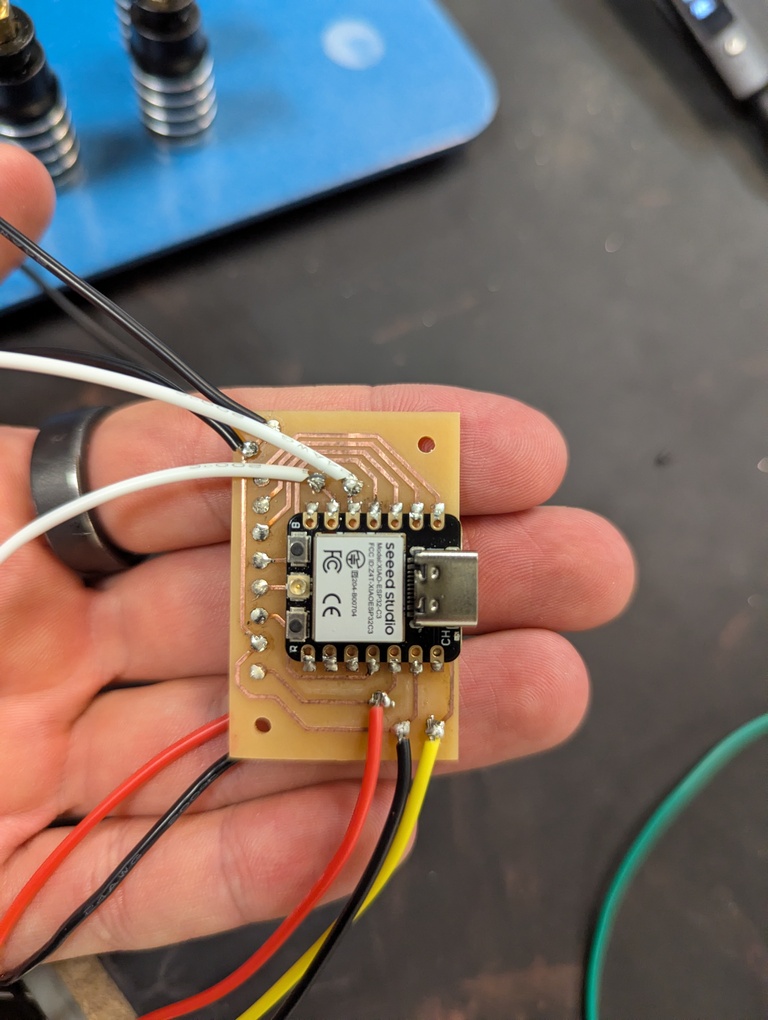

I start soldering components onto the PCB. There are a few things still in the mail (should arrive tomorrow), but I can get started on the ESP32 C3.

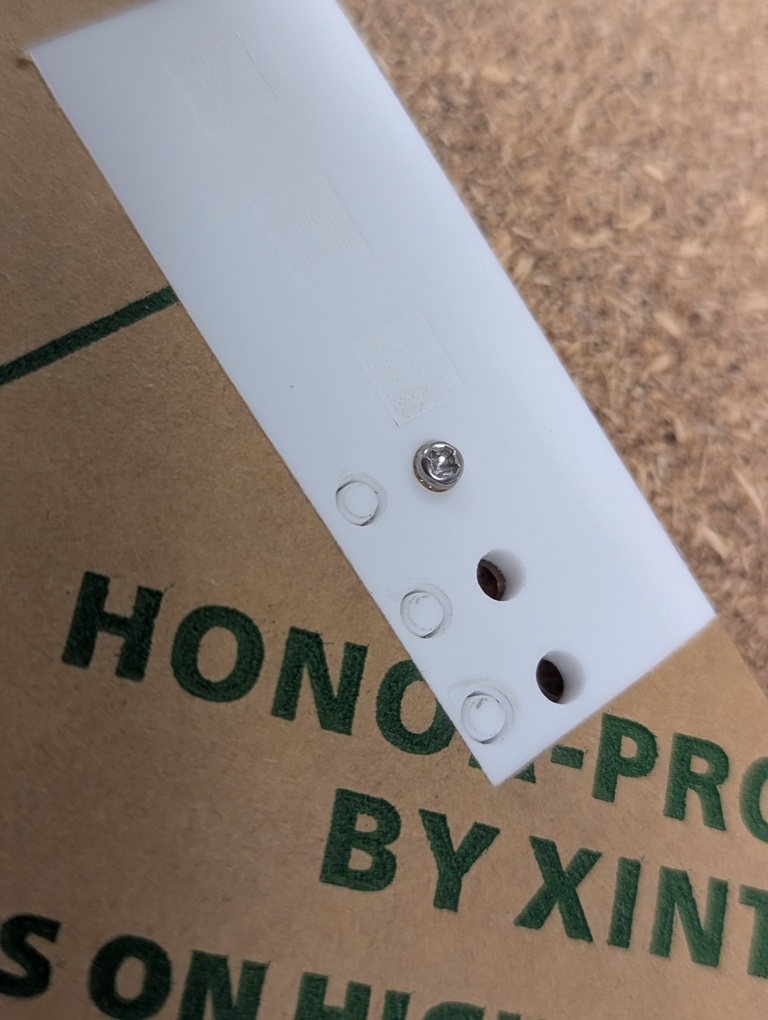

Time to add a first pass of heat set inserts into the 3D print.

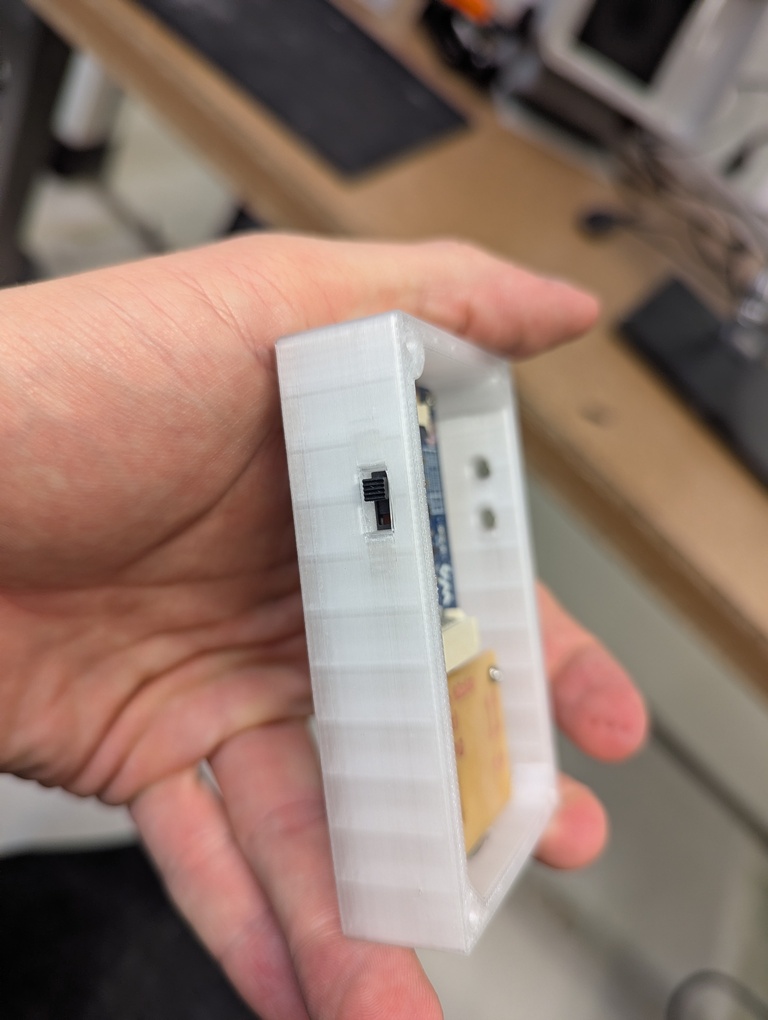

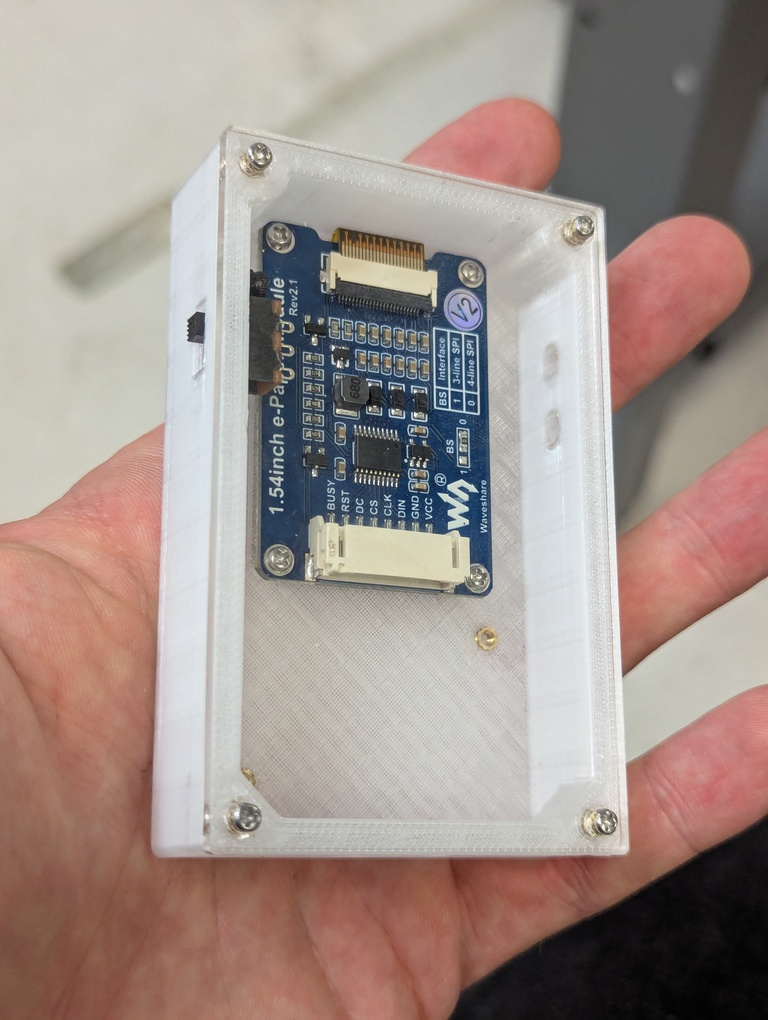

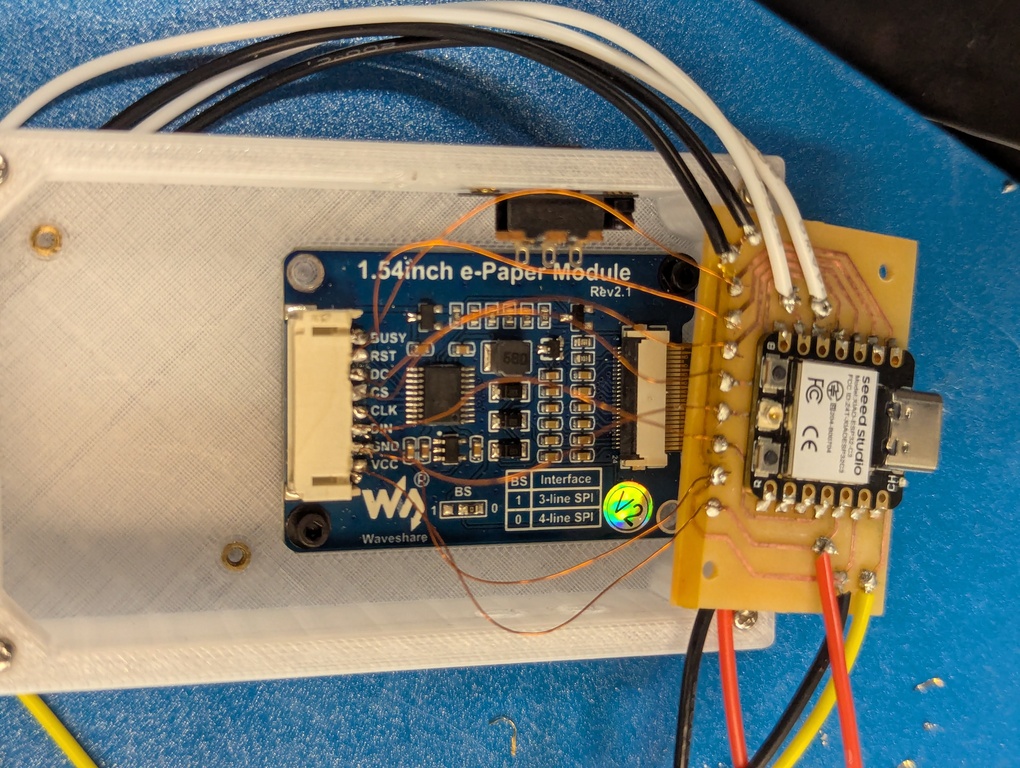

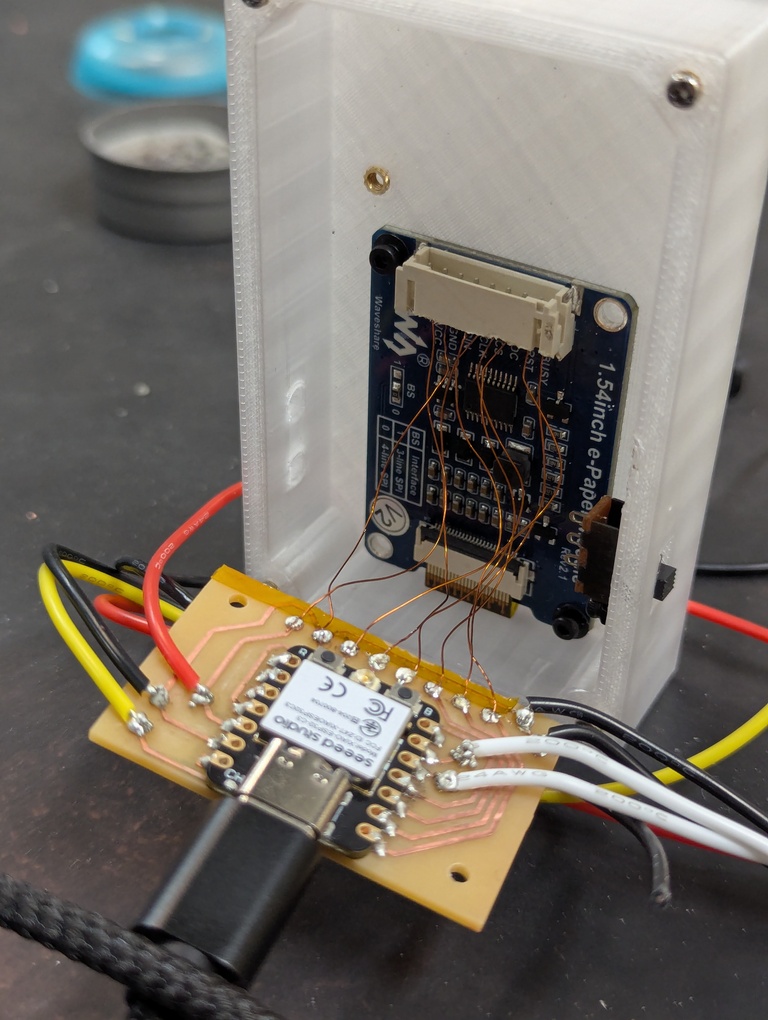

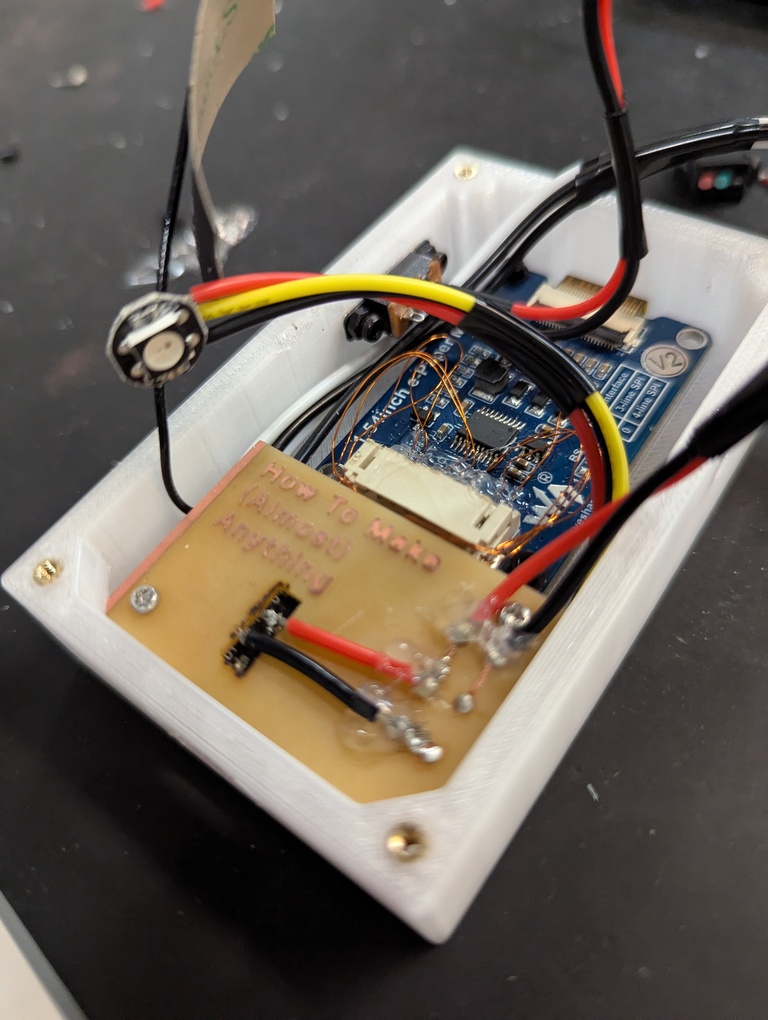

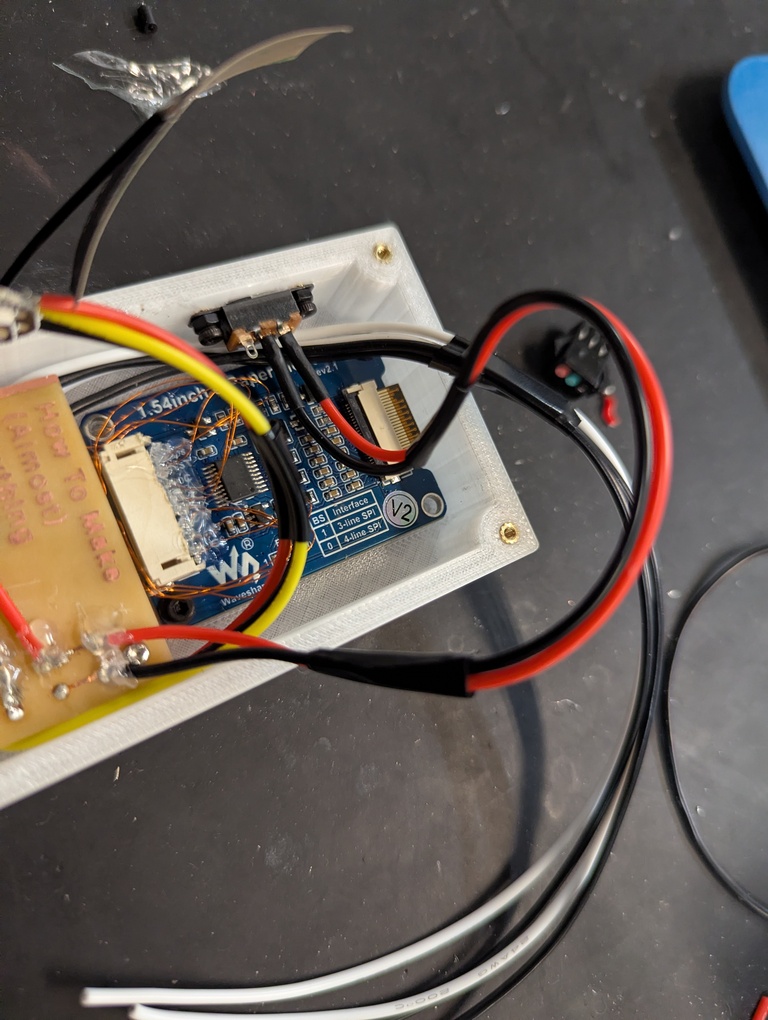

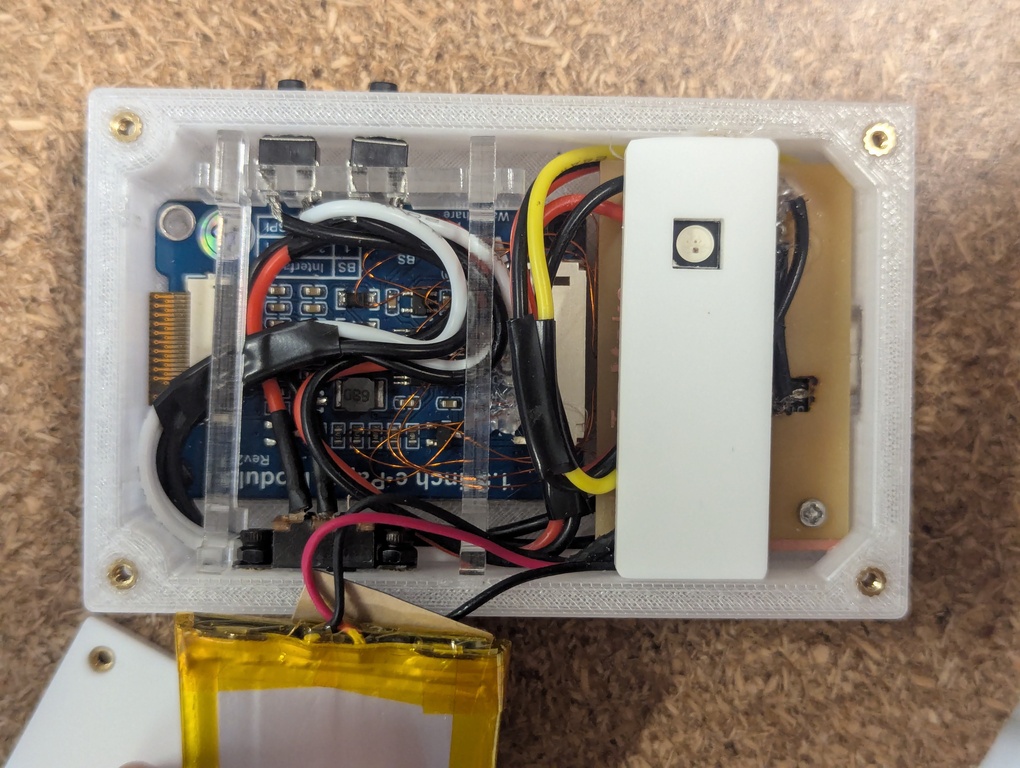

It’s time to see if the two boards (the e-ink driver board and my custom PCB) fit into the enclosure as intended.

Let’s see if the switch fits in as planned. I’m also adding the inserts for the lid. Those are a little deeper than the ones I used earlier. The smaller ones are 2mm, the larger ones are 5mm.

I’m a little concerned that the connector might stick out beyond the driver board dimensions a little too much. I’ll see if I have to make any changes later.

I’m also testing lids for the enclosure. Again, I’m using heat set inserts for assembly (these have a height of 3mm, the same as the thickness of the acrylic). The actual piece is cut from acrylic. I was concerned earlier about possible alignment issues. It seems that it works well as long as you’re careful and diligent about sinking the insert exactly vertically into the plastic. Quentin will look at this later and tell me to not do this again. Apparently it’s not a good idea to connect 2 pieces by putting these inserts into both. Only one side should have the inserts.

“It’s fine, just don’t do it again”

- Quentin

This felt pretty successful. I took some notes on what can be improved about the enclosure design. I had designed it somewhat spacious (spiral development! optimize later!) and I think I can learn from this first version if I get to make a second version. Notes:

- The opening for the USB receptacle needs to sit lower (I will actually have to modify this V1 with a file to make it fit)

- The opening for the switch can be smaller (0.5mm on each side?)

- The buttons might need to sit farther apart (will see once they come in the mail)

- The holes for the heat set inserts should be a little smaller (the ones that are only semi-surrounded are a little loose, too)

- The screen should sit flush with the opening, need to investigate why it doesn’t right now.

- The switch can probably sit a bit lower

- That allows the enclosure to be smaller (less height) overall. Can probably shave off 3-5mm.

- The 3D printer is great and the enclosure walls can probably be thinner (from 4mm down to 3 or 2mm, at least where no threaded inserts are needed)

- Might have to compensate for wire connector dimensions

Good day overall. Next steps coming soon!!

More Assembly tests

Today was pretty frustrating. I tried to connect the two boards in the little housing and the space limitations are pretty severe. Using a connector takes up too much space, especially with a group of stiff wires coming out. I was struggling a lot. To be continued.

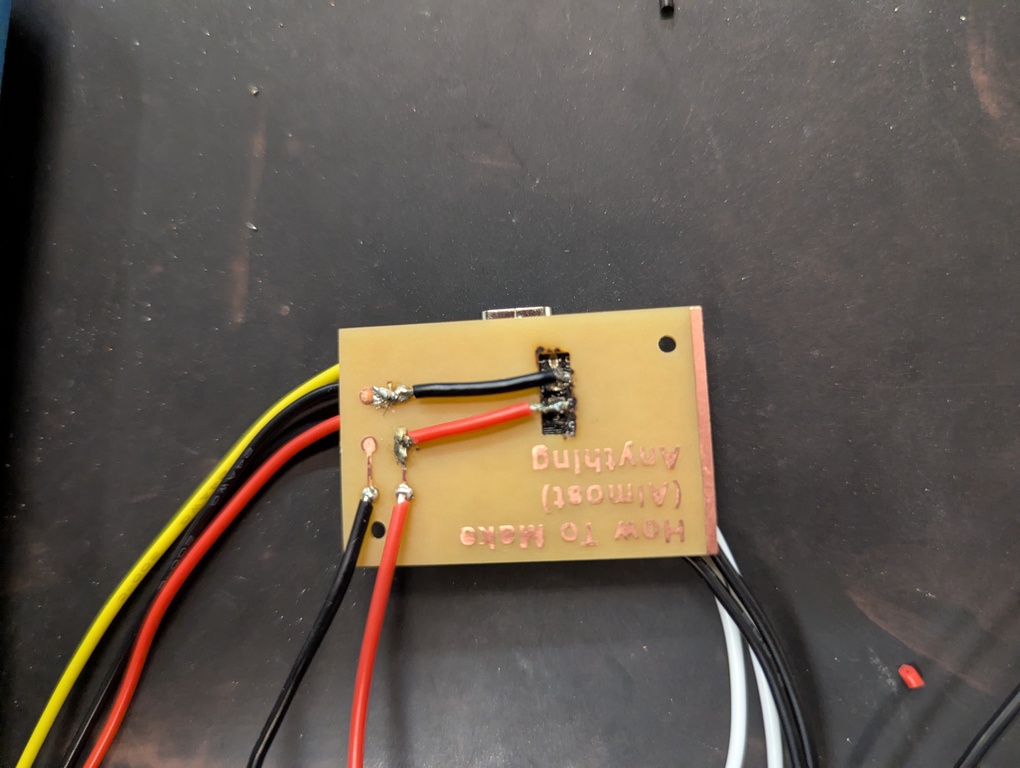

I started to solder wires to the PCB, though. I’m leaving them relatively long for now so I can cut them to the right length later.

I color-code the wires as follows:

| Color | Purpose |
|---|---|
| black | GND |
| red | POW (both the 3v3 from the ESP and BAT+) |
| yellow | data line for neopixel |
| white | GPIO connections for buttons |

More Assembly

I initially thought I could connect some silicone wires to the connector I had, but I didn’t find the right crimping tools in the lab (the connector is pretty small). So, changing directions, I soldered small hookup wires to the exposed leads of the connector on the e-ink driver board. Connecting those was fiddly, but worked. Using tip tinner on the soldering iron helped with getting good heat transfer. As shown in the image above, I’m adding some tape to a GND trace on the board to protect it from shorts created by the hookup wires.

I then connected the wires to my PCB and made sure that it all works as expected.

It works great and I can send images to the e-ink screen in the small housing now. The images are upside-down, but that’s easy enough to fix in code. Images are represented as PIL images (im in code below) and can be rotated like this:

```python
if rotate_180:
    im = im.transpose(Image.ROTATE_180)
```
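Rotating with PIL works wherever the image is rendered. If a packed frame ever needs to be flipped on the ESP32C3 itself, where PIL isn't available, the buffer can also be rotated directly. A minimal sketch with hypothetical helper names, assuming the width is a multiple of 8 and the MSB-first packing that png2bin.py uses by default: a 180° rotation reverses the order of all pixels, which for packed bytes means reversing the byte order and the bit order inside each byte.

```python
def reverse_bits(b):
    """Reverse the bit order of one byte (0b10000000 -> 0b00000001)."""
    r = 0
    for _ in range(8):
        r = (r << 1) | (b & 1)
        b >>= 1
    return r


# Lookup table so each byte is reversed with a single index operation.
_REVERSED = bytes(reverse_bits(i) for i in range(256))


def rotate_180_packed(buf):
    """Rotate a packed 1-bit frame (width a multiple of 8) by 180 degrees."""
    return bytearray(_REVERSED[b] for b in reversed(buf))
```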

For strain relief, I added a row of hot glue across all solder joints and connection points so that the small hookup wires are held in place by the glue and not by the solder joints. This connection clearly isn’t ideal, but it’s a solution that I’m ok with for this version of the project.

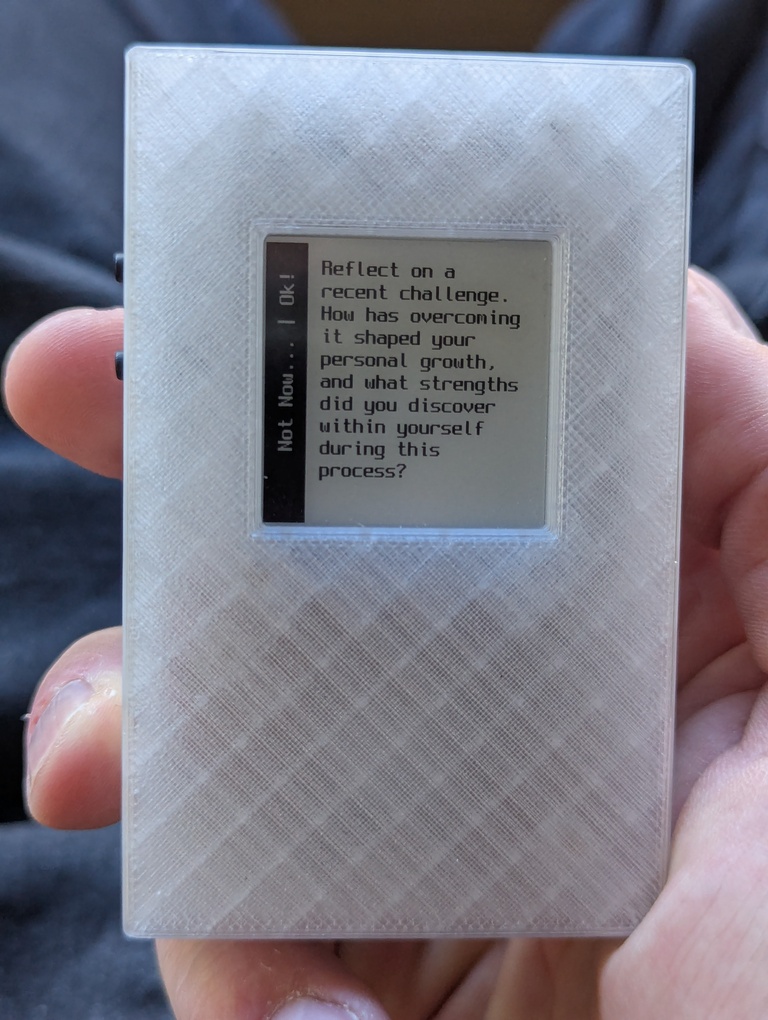

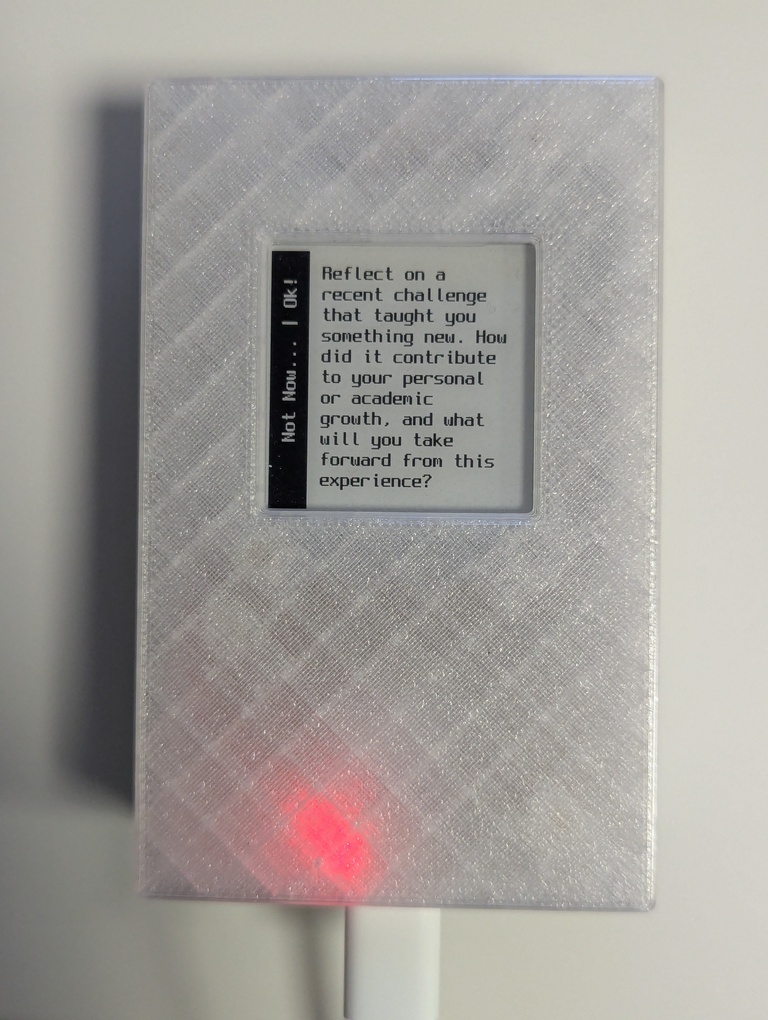

Text rendering

I started to look into how to render nicer-looking text on the e-ink display. Specifically, I explored bitmap fonts, which I expected to render more crisply than outline fonts on the small, resolution-limited screen (200x200 px). After looking at a few fonts, I settled on Spleen.

Initially, I had thought of the screen simply as a way to show QR codes to the world when instructed by XR hardware. Now I've started to think of it more as an ambient alternative to the noisy phone. For example, I might have an AI agent running on n8n send journaling prompts to it. That way, I could do my journaling before picking up the phone in the morning, so that I'm not immediately bombarded with notifications. The images below show a journaling prompt rendered on the screen with the new font.

You can install Spleen like so:

```shell
sudo apt update
sudo apt install fonts-spleen
```

To find it on your system afterwards, do this:

```console
root@docker-ce-ubuntu-2gb-ash-1:~# fc-list | grep -i spleen
/usr/share/fonts/opentype/spleen/spleen-6x12.otf: Spleen 6x12:style=Medium
/usr/share/fonts/opentype/spleen/spleen-12x24.otf: Spleen 12x24:style=Medium
/usr/share/fonts/opentype/spleen/spleen-16x32.otf: Spleen 16x32:style=Medium
/usr/share/fonts/opentype/spleen/spleen-32x64.otf: Spleen 32x64:style=Medium
/usr/share/fonts/opentype/spleen/spleen-8x16.otf: Spleen 8x16:style=Medium
```

This is what it looks like on the little screen. Much cleaner than the TrueType font I was using before!
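Because Spleen is a monospaced bitmap font, how much text fits on the 200x200 px screen follows directly from the cell size in each file name listed by `fc-list`. The sketch below uses only Python's standard library; `text_grid`, `layout_prompt` and the `margin` parameter are illustrative names, not glyph's actual code. It computes the character grid for each Spleen size and word-wraps a prompt to fit.

```python
import textwrap

SCREEN_W, SCREEN_H = 200, 200
# Cell sizes (width, height in px) taken from the Spleen file names
SPLEEN_SIZES = [(6, 12), (8, 16), (12, 24), (16, 32), (32, 64)]


def text_grid(cell_w: int, cell_h: int, margin: int = 0) -> tuple[int, int]:
    """Columns and rows of fixed-size character cells that fit on screen."""
    return (SCREEN_W - 2 * margin) // cell_w, (SCREEN_H - 2 * margin) // cell_h


def layout_prompt(prompt: str, cell_w: int, cell_h: int, margin: int = 0) -> list[str]:
    """Word-wrap a prompt to the grid; raise if it needs more than one screen."""
    cols, rows = text_grid(cell_w, cell_h, margin)
    lines = textwrap.wrap(prompt, width=cols)
    if len(lines) > rows:
        raise ValueError(f"prompt needs {len(lines)} lines, only {rows} fit")
    return lines


for w, h in SPLEEN_SIZES:
    cols, rows = text_grid(w, h)
    print(f"spleen {w}x{h}: {cols} columns x {rows} rows")
```

For example, the 8x16 size gives a grid of 25 columns by 12 rows, which is plenty for a short journaling prompt; any margin or side bar reduces the usable columns accordingly.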

More Assembly

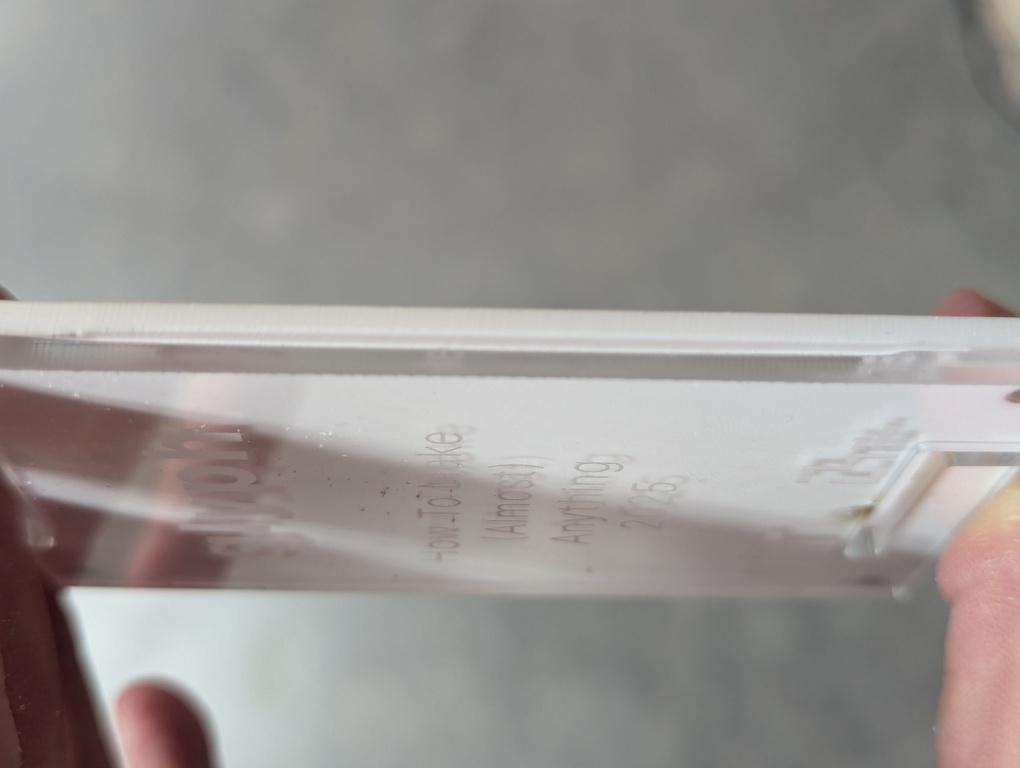

To make the outer plate that holds the cards in place nicer, I designed a pattern to engrave into some clear acrylic.

I also noticed that the heat-set inserts still stuck out a bit too much, so I sank them a bit deeper into the plastic using a soldering iron.

I also made a white lid with an insert of clear acrylic. The idea is to have an LED in the housing shine into this insert and illuminate its outer face, which I roughened with a file. I'm hoping it produces a nice little dot of light.

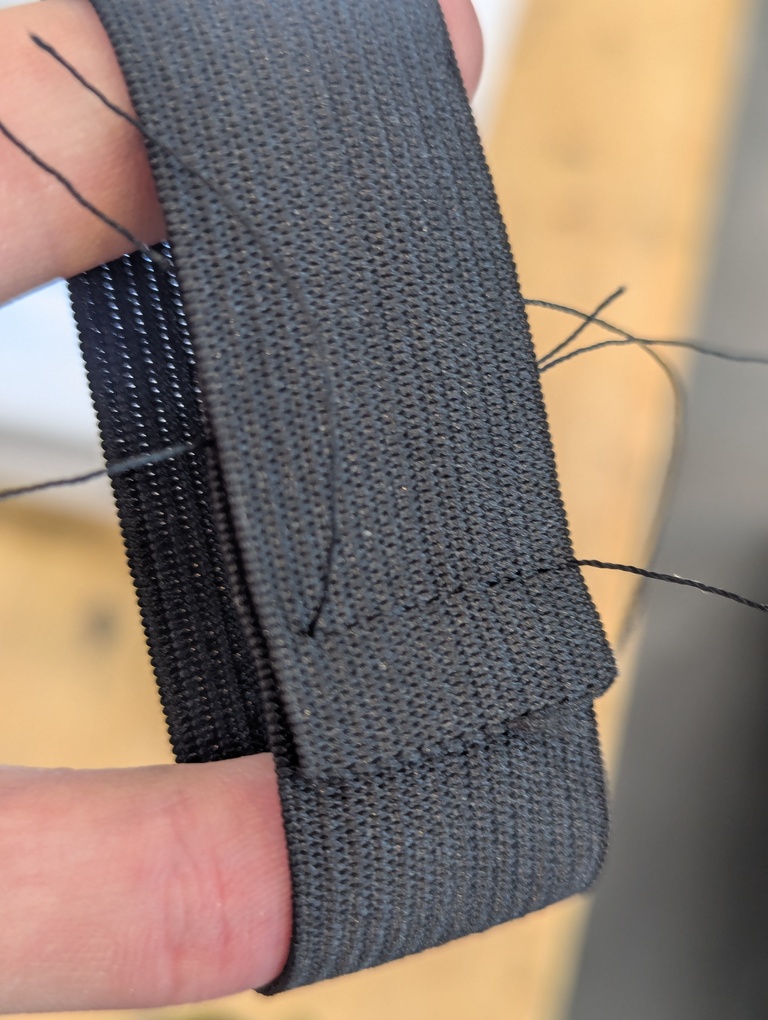

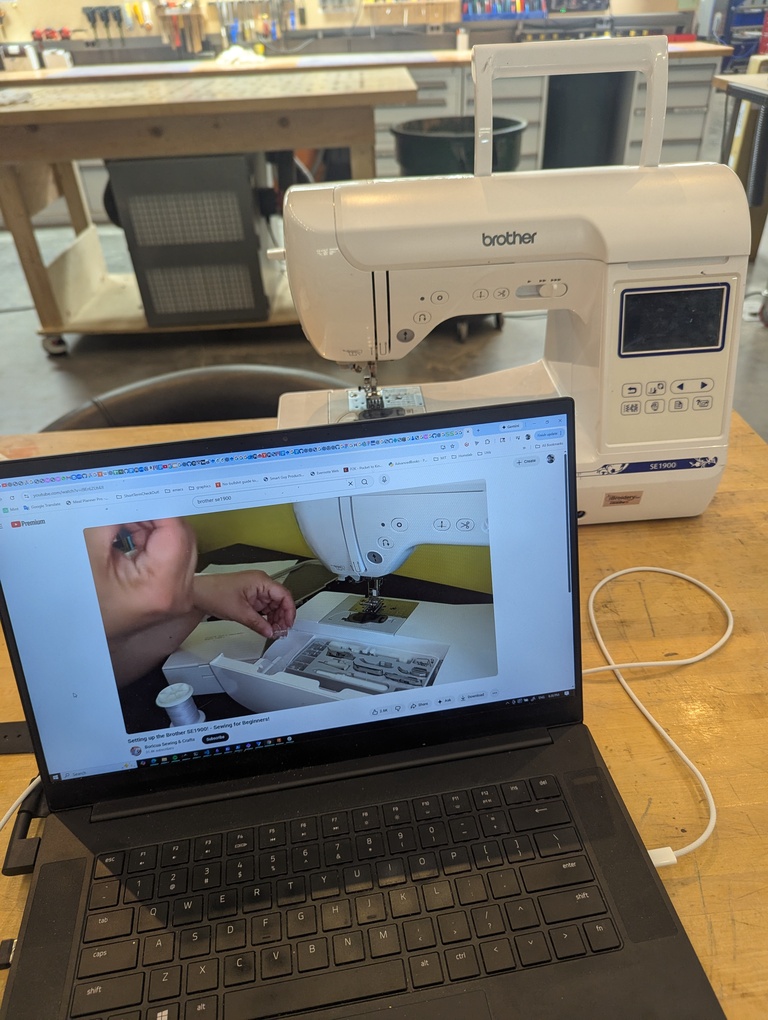

Sewing

I needed to sew a loop from an elastic band I had bought on Amazon. Dan told me where the sewing machine in the workshop is. Thanks, Dan! I watched a tutorial on setting up and using that particular model, since I had never used it (and the last time I used any sewing machine was a looooong time ago).

I did some testing to get used to the machine and the material, and quickly felt pretty comfortable with it. I won't claim that I did a great job, but I was able to do what I wanted without the loop coming apart.

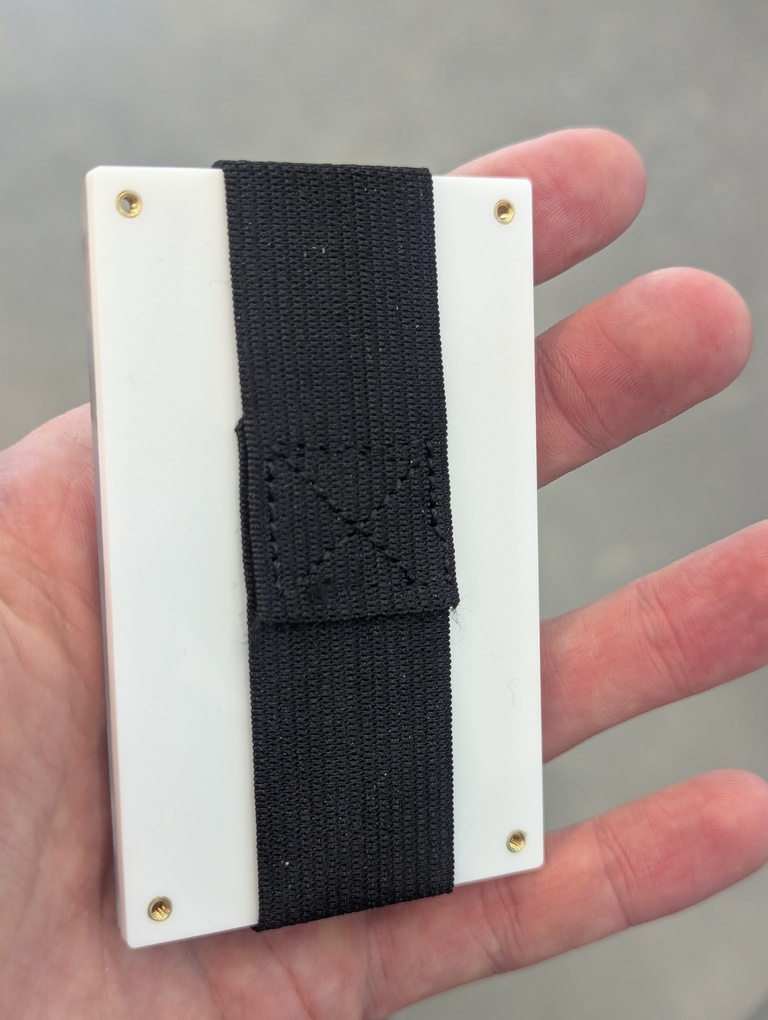

I moved on to the final version of the loop. I affixed the two ends of the loop with pins and then sewed a square of stitches between the pins. Result below.

I made 2 loops because I wasn't sure yet whether I'd make a second version of the project before the final presentation. I then tried to cut the leftover band with the tool shown below. It didn't work very well, so I used a box cutter instead. Finally, I tested whether the loop length works well with the acrylic pieces I had already made.

Here is a video of how the elastic is used in the project.

Chamfer Testing

I tested chamfering the edges of the acrylic piece with a file. Ideally, this makes inserting the credit cards easier. Works OK!

More Assembly/Integration

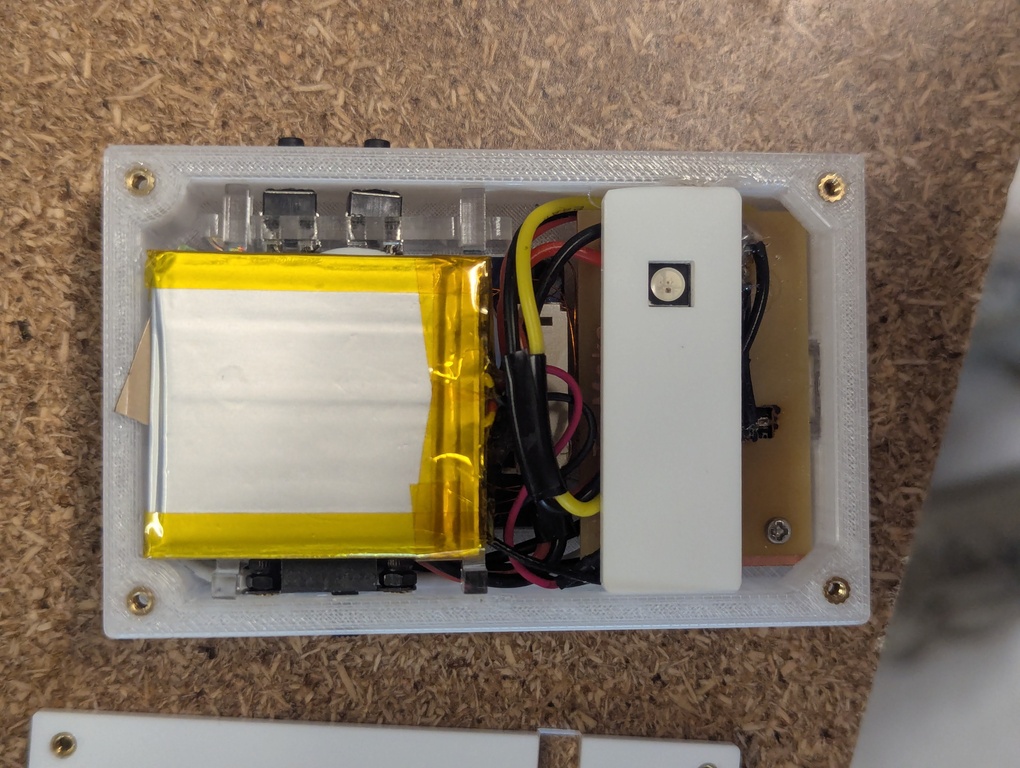

I solder a 2-pin connector to the battery wires. I'm scared of batteries, so I make sure they can be disconnected and that the connector is female (no exposed leads that could short accidentally).

I also connect the 3 wires reserved for the NeoPixel LED (soldered, with hot glue for strain relief).

Testing the fit:

Software Improvements

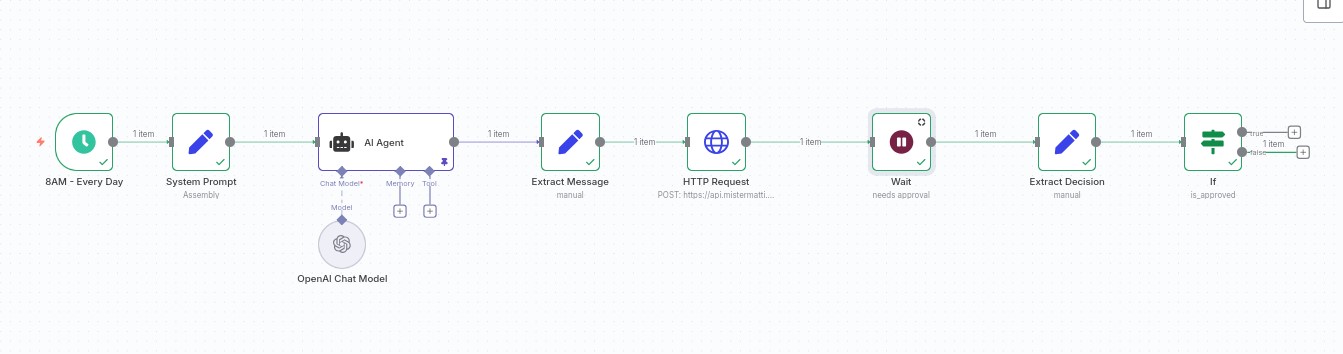

I mentioned earlier that I might connect n8n for a first use case: journaling prompts. Putting that together was pretty straightforward. Glyph's API now has another endpoint that accepts “prompts”. These aren't limited to journaling prompts; they can be any text-based prompt that requires input from the user, and glyph's buttons are used to respond. I'm imagining building human-in-the-loop use cases with this in the future: an AI system could prepare something (say, an email) and then get confirmation from me, the human in the loop, before actually acting.

The video below shows:

- manually triggering an n8n workflow to send a prompt to glyph (via its separately hosted API)

- the prompt appearing on glyph (note the new side bar that labels the buttons)

- me pressing one of the buttons

- the workflow completing in n8n (after waiting for the press)
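Glyph's API code isn't shown here, so the following is only a sketch, assuming a single device and an in-memory store, of how a prompt endpoint could hold the caller (for example, the HTTP request from the n8n workflow) until one of the buttons is pressed. `Prompt`, `PromptBoard` and their methods are hypothetical names; in a web framework, `submit`/`wait` would sit behind the prompt endpoint, `current` behind a route the device polls, and `press` behind a route the device calls when a button is pressed.

```python
from __future__ import annotations

import threading
import uuid
from dataclasses import dataclass, field


@dataclass
class Prompt:
    text: str
    options: list[str]  # labels shown in the side bar next to the buttons
    prompt_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    answer: str | None = None
    done: threading.Event = field(default_factory=threading.Event)


class PromptBoard:
    """Hypothetical in-memory bridge between the web API and the device."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._current: Prompt | None = None

    def submit(self, text: str, options: list[str]) -> Prompt:
        # Called when n8n posts a new prompt; it replaces any pending one
        prompt = Prompt(text, options)
        with self._lock:
            self._current = prompt
        return prompt

    def current(self) -> Prompt | None:
        # Polled by the device to find out what to draw
        with self._lock:
            return self._current

    def press(self, button: int) -> bool:
        # Called when the device reports a button press (0-based index)
        with self._lock:
            prompt = self._current
            if prompt is None or not 0 <= button < len(prompt.options):
                return False
            prompt.answer = prompt.options[button]
            self._current = None
        prompt.done.set()  # wake up whoever is waiting on this prompt
        return True

    def wait(self, prompt: Prompt, timeout: float) -> str | None:
        # Blocks the caller until a press arrives; None means it timed out
        if prompt.done.wait(timeout):
            return prompt.answer
        return None
```

Blocking with a timeout keeps the sketch short. For long waits, a callback avoids holding an HTTP request open; n8n's Wait node, for example, can resume on a webhook call.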

Wrapping it all up

Battery charging seems to work well. Glyph runs fine from a USB cable regardless of the switch position, but it needs to be switched on for the battery path to be active, which is what allows charging. The picture below shows the XIAO's charging indicator shining through the housing.

I cut a small piece of acrylic to hold the LED in place. I also design a 3-piece rig from clear acrylic that holds the 2 buttons in place and transfers the force of pressing them to the inside of the opposite wall. The rig also has space for the battery. All of it can be seen below.

The buttons are connected and work well. I first tested them by simply changing the LED's color, before hooking them up to the web API as described above. The LED looks nice! I'm quite happy with how that part worked out. It's a bit too bright in the video below; I later dropped the brightness to about 10% of this.
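Dimming a NeoPixel-style LED amounts to scaling each 8-bit color channel before it is sent to the LED. The firmware isn't shown in this writeup, so this is only a language-neutral illustration in Python; `scale_brightness`, `BUTTON_COLORS` and the two colors are assumptions made for the sketch, and the 0.10 factor mirrors the roughly 10% mentioned above.

```python
def scale_brightness(color: tuple[int, int, int], brightness: float) -> tuple[int, int, int]:
    """Scale an 8-bit RGB color by a brightness factor between 0 and 1."""
    if not 0.0 <= brightness <= 1.0:
        raise ValueError("brightness must be between 0 and 1")
    r, g, b = (round(c * brightness) for c in color)
    return r, g, b


# Hypothetical button-to-color mapping, like the first button test
BUTTON_COLORS = {0: (255, 0, 0), 1: (0, 0, 255)}
LED_BRIGHTNESS = 0.10  # roughly the 10% level settled on above


def color_for_button(button: int) -> tuple[int, int, int]:
    """Color to show on the LED after a given button (0 or 1) is pressed."""
    return scale_brightness(BUTTON_COLORS[button], LED_BRIGHTNESS)
```

Perceived brightness is not linear in the channel value, so a factor that looks right is best found by eye on the actual device.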

I then open up the box and insert the elastic band. It’s held in place by the lid being screwed on top of it.

Preparation For The Final Presentation

To create some nice images and videos, I built a makeshift photography studio in my office. A scarf serves as the backdrop. I have a small electric turntable and a very small light. Photos and videos are shot on a Sony A6400 and edited in DaVinci Resolve.

All final files needed to run glyph and its API are in the list on the right. I’d love to hear if you ever use any of this for your own projects! Let me know, please! :)

Reflection

Future Steps

To improve the project, I have the following plans.

Housing

The footprint of “glyph” isn’t great. It needs to be smaller. I designed a new enclosure that would be much nicer. It’s shown below and can be downloaded through the “Enclosure V3 STL” link on the right.

For the acrylic plates holding the credit cards, I should be able to find acrylic stock that is thinner but still robust enough. The current stock is ~3 mm; half of that should be fine and would shave a good chunk off the device's size.

Internals

There are a few things I would change in terms of the internal setup of the device:

- use thinner wires and cut them to length a bit better

- use thinner acrylic stock to build the rig for the buttons and the little frame for the LED. That’ll claw back some of the volume currently wasted on the inside.

- the connection between my custom PCB and the screen can be done differently. Ideally, I still want to replace the driver board with a custom board. That would allow for much more design flexibility.

Overall, I’m quite happy with this version of glyph. I might still improve it. Stay tuned. If you want to follow future work, you could do so here.