Insight: OpenSource Gaze Tracking

What did you do?: I developed a low-cost, head-mounted gaze-tracking system.

How did you select the approach?: I researched the topic and had previous experience with IR cameras. I wanted to remain flexible in choosing my calibration routine, which is why I chose SVD fitting: it does not depend on how the data was obtained, as long as the points correspond.

How does it relate to what's been done before?: Most gaze-tracking systems are stationary, and most head-mounted systems are very expensive. This prototype was built for less than 60 USD.

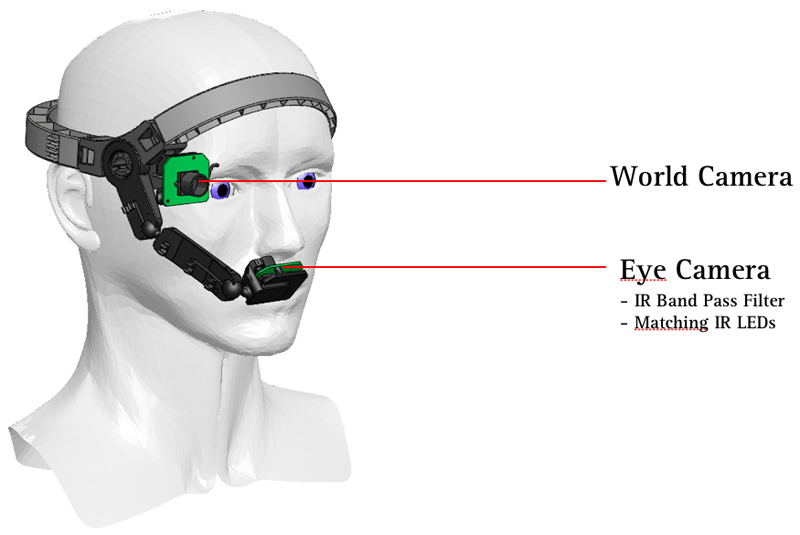

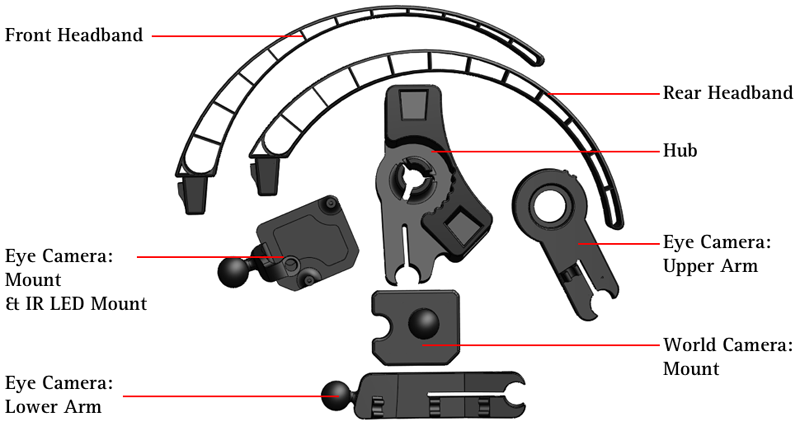

Hardware

SolidWorks Model of the Headset

4 cubic inch print - 25 USD

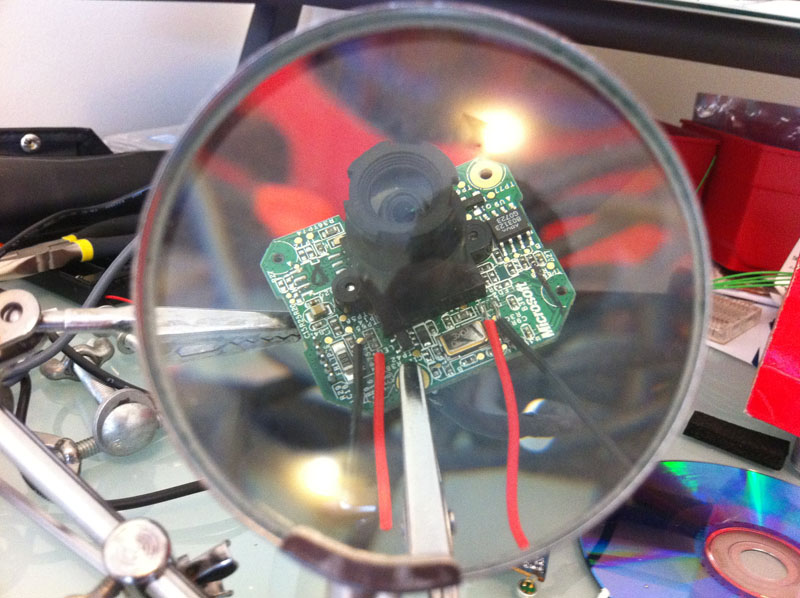

Replacing the green SMD LEDs with 5 mm IR LEDs

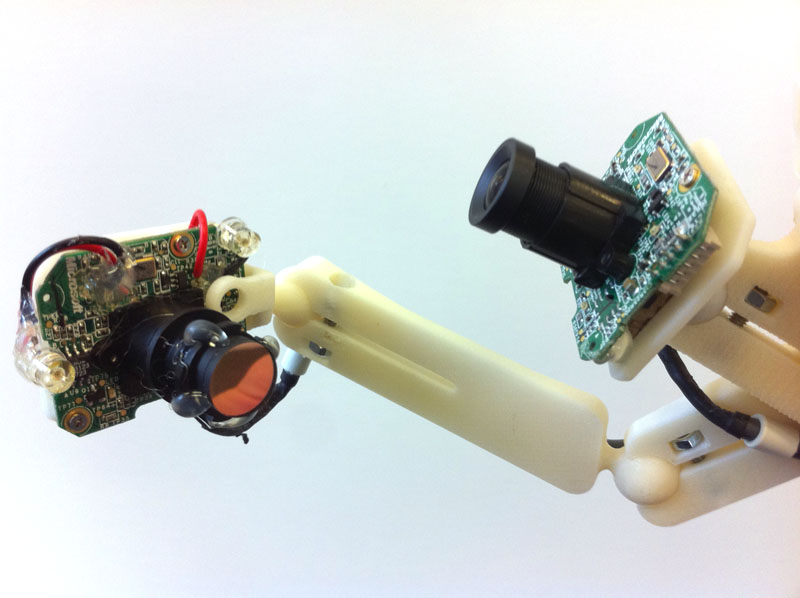

The two cameras, one with an IR bandpass filter and matching LEDs. The cameras are 12 USD each.

Hardware Test

Software

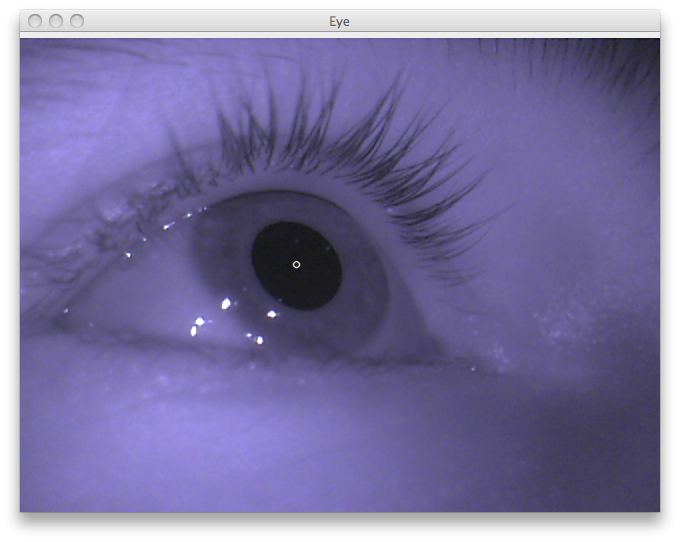

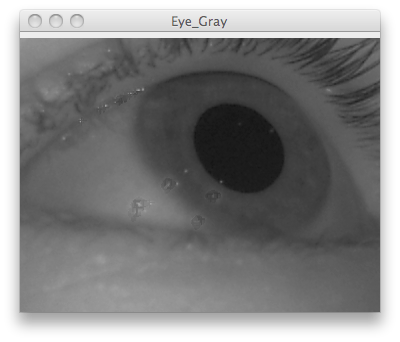

Task: find pupil center

Remove Specular Reflections

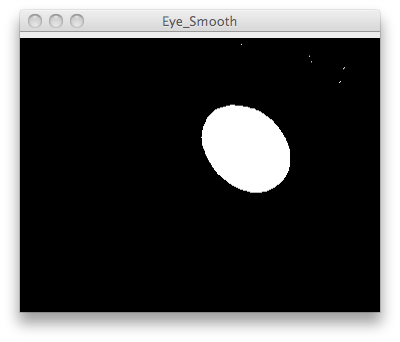

Binary Thresholding

The absolute derivative of the pixel value in X and Y gives the contours.

The points are grouped based on connectivity

Ellipses are fitted to the groups of points

The biggest ellipse is assumed to be the pupil and its center is the pupil center
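The steps above can be sketched in a few lines. This is a NumPy-only illustration, not the project's code (which used OpenCV): the function names, the threshold of 60, the 8-connectivity, and the algebraic conic fit used as the ellipse fit are all assumptions made for the example, and reflection removal is left out. The fit is not robust against degenerate point groups.

```python
from collections import deque

import numpy as np


def edge_mask(gray, threshold):
    """Binary-threshold the image, then mark pixels where the mask changes in X or Y."""
    dark = (gray < threshold).astype(np.int8)  # assumes the pupil is the dark region
    edges = np.zeros(dark.shape, dtype=bool)
    edges[:, 1:] |= np.abs(np.diff(dark, axis=1)) > 0  # absolute derivative in X
    edges[1:, :] |= np.abs(np.diff(dark, axis=0)) > 0  # absolute derivative in Y
    return edges


def group_points(edges):
    """Group edge pixels into 8-connected sets; returns a list of (N, 2) arrays of (x, y)."""
    seen = np.zeros(edges.shape, dtype=bool)
    height, width = edges.shape
    groups = []
    for y0, x0 in zip(*np.nonzero(edges)):
        if seen[y0, x0]:
            continue
        seen[y0, x0] = True
        queue, points = deque([(y0, x0)]), []
        while queue:  # breadth-first flood fill over neighboring edge pixels
            y, x = queue.popleft()
            points.append((x, y))
            for ny in range(max(y - 1, 0), min(y + 2, height)):
                for nx in range(max(x - 1, 0), min(x + 2, width)):
                    if edges[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
        groups.append(np.array(points, dtype=float))
    return groups


def fit_ellipse(points):
    """Algebraic conic fit a*x^2 + b*x*y + c*y^2 + d*x + e*y + f = 0.

    Returns (center_x, center_y, area), or None if the fit is not an ellipse.
    """
    if len(points) < 6:
        return None
    mean = points.mean(axis=0)
    x, y = (points - mean).T  # center the data for better conditioning
    design = np.column_stack([x * x, x * y, y * y, x, y, np.ones_like(x)])
    # The right singular vector of the smallest singular value minimizes |design @ p|.
    a, b, c, d, e, f = np.linalg.svd(design, full_matrices=False)[2][-1]
    det = 4 * a * c - b * b
    if det <= 0:  # parabola or hyperbola
        return None
    cx = (b * e - 2 * c * d) / det  # point where the conic's gradient vanishes
    cy = (b * d - 2 * a * e) / det
    f0 = a * cx * cx + b * cx * cy + c * cy * cy + d * cx + e * cy + f
    if f0 * a >= 0:  # no real points satisfy the equation
        return None
    area = 2 * np.pi * abs(f0) / np.sqrt(det)
    return cx + mean[0], cy + mean[1], area


def find_pupil_center(gray, threshold=60):
    """Return (x, y) of the largest fitted ellipse, or None if nothing was found."""
    fits = [fit_ellipse(g) for g in group_points(edge_mask(gray, threshold))]
    fits = [fit for fit in fits if fit is not None]
    if not fits:
        return None
    cx, cy, _ = max(fits, key=lambda fit: fit[2])
    return cx, cy
```

Because each edge is assigned to the pixel to the right of or below the intensity change, the estimated center can be biased by up to about half a pixel.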

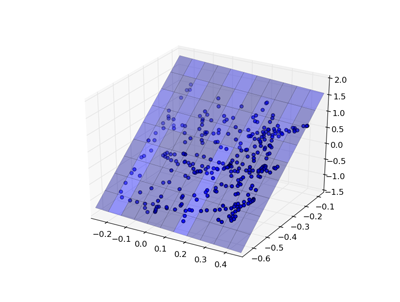

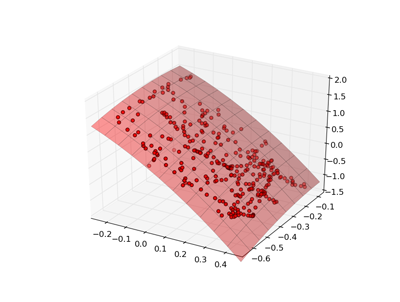

Fitting a plane using singular value decomposition results in a poor fit

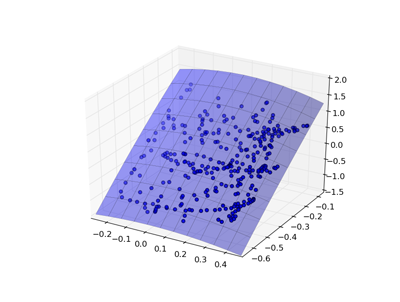

Fitting a higher-order polynomial using singular value decomposition gives a better estimate of the transform
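A minimal sketch of such a fit, assuming calibration data in the form of matching pupil positions and world positions (two columns each). The function names, the normalization to zero mean and unit spread, and the cutoff for small singular values are assumptions for illustration, not the project's actual code. With `order=1` the mapping is a plane; higher orders add the polynomial terms.

```python
import numpy as np


def design_matrix(xy, order):
    """Columns x**i * y**j for all i + j <= order (order 1 is a plane)."""
    x, y = xy[:, 0], xy[:, 1]
    return np.column_stack([x**i * y**j
                            for i in range(order + 1)
                            for j in range(order + 1 - i)])


def fit_mapping(pupil_xy, world_xy, order):
    """Least-squares polynomial map pupil -> world via the SVD pseudo-inverse."""
    # Normalize first: raw pixel values raised to higher powers make the
    # design matrix badly conditioned. Assumes the points are not all identical.
    mean = pupil_xy.mean(axis=0)
    scale = pupil_xy.std(axis=0)
    A = design_matrix((pupil_xy - mean) / scale, order)

    # A = U diag(s) Vt, so the least-squares solution is V diag(1/s) U^T b.
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    keep = s > 1e-10 * s[0]  # drop directions the data does not constrain
    coeffs = Vt[keep].T @ ((U[:, keep].T @ world_xy) / s[keep, None])

    def mapping(xy):
        return design_matrix((xy - mean) / scale, order) @ coeffs

    return mapping
```

The fit only needs corresponding point pairs, so it works the same way regardless of which calibration routine produced them.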

Kalman Filter

The approach taken so far directly translates the position of the pupil to world coordinates. It does not take into account possible noise in the measurement and does not exploit constraints of the observed system, such as the fact that the pupil cannot jump from one corner to the other without being seen traveling in between. I made a crude model of the dynamics of the pupil in the eye, taking position and velocity into account. I then implemented a Kalman filter that exploits this model and has a noise model for the measurement as well as for the internal model of the system dynamics.

The system noise model is very important because at 30 fps the pupil's movements cannot be described very well with a linear model. At higher measurement frequencies the dynamics of the eye could become more evident and a linear model more appropriate.
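A sketch of a filter of this kind, assuming a constant-velocity model with state (x, y, vx, vy) and a measurement of the pupil position only. The class name, the white-noise-acceleration form of the process noise, and the default variances are placeholder assumptions, not the values used in the project. A large `process_var` tells the filter not to trust the linear model too much, which matters at 30 fps.

```python
import numpy as np


class PupilKalman:
    """Constant-velocity Kalman filter for a 2D pupil position (illustrative)."""

    def __init__(self, dt=1 / 30, process_var=500.0, measurement_var=4.0):
        # State transition: position advances by velocity * dt.
        self.F = np.eye(4)
        self.F[0, 2] = self.F[1, 3] = dt
        # Measurement picks the position out of the state.
        self.H = np.eye(2, 4)
        # Process noise from an unknown random acceleration (system noise model).
        q = process_var * np.array([[dt**4 / 4, dt**3 / 2],
                                    [dt**3 / 2, dt**2]])
        self.Q = np.zeros((4, 4))
        self.Q[np.ix_([0, 2], [0, 2])] = q  # x and vx
        self.Q[np.ix_([1, 3], [1, 3])] = q  # y and vy
        # Measurement noise model.
        self.R = np.eye(2) * measurement_var
        self.x = np.zeros(4)
        self.P = np.eye(4) * 1e3  # large initial uncertainty

    def step(self, z):
        """Predict one frame ahead, then correct with measurement z = (x, y)."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q

        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2].copy()
```

Calling `step` once per frame with the detected pupil center returns the filtered position for that frame.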

Conclusion

How did you validate the model? / How did you evaluate the results?: When using the tracker, comparing the marker with the actual gaze point while moving the head reveals inconsistencies in the pupil center detection and in the transformation to world-view coordinates. Visualizing the calibration data and the functions used to fit the data shows conceptual flaws. Calculating the fitting errors gives an estimate of the quality of the calibration run as well as the appropriateness of the transformation function.
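One simple way to put a number on the fitting error, sketched under the assumption that the mapped calibration points and their known targets are available as two arrays of (x, y) rows. The function name and the choice of RMS and maximum distance as summary values are illustrative.

```python
import numpy as np


def calibration_error(predicted_xy, target_xy):
    """RMS and maximum Euclidean distance between mapped points and their targets."""
    diff = np.asarray(predicted_xy, dtype=float) - np.asarray(target_xy, dtype=float)
    distances = np.linalg.norm(diff, axis=1)
    return {"rms": float(np.sqrt(np.mean(distances**2))),
            "max": float(distances.max())}
```

Comparing these values across calibration runs, or across transformation functions on the same run, gives a rough measure of which one fits better.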

What did you learn?: I learned how to write and use a Kalman filter, how to do multidimensional SVD fitting of higher-order polynomials (and why you want to normalize the data), OpenCV image processing, and more about data fitting, and I gained (some) insight into how we see the world.

What were the problems?: A Kalman filter is a very useful addition to most sensor applications if one has multiple sensors or an appropriate model of the dynamics of the observed process.

If you want to catch all eye movements, you need a time resolution of at least 60 fps. At these frame rates I believe a Kalman filter can become useful.

Future hardware work: make the headset less obtrusive.