Find the first five diagonal Padé approximants [1/1], …, [5/5] to around the origin. Remember that the numerator and denominator can be multiplied by a constant to make the numbers as convenient as possible. Evaluate the approximations at and compare with the correct value of . How is the error improving with the order? How does that compare to the polynomial error?

For a fixed function and integers and , the Padé approximant is the function

that matches as many terms as possible of the Taylor series of . (We can define the approximant about any point, but without loss of generality we’ll only consider the origin.) There are parameters in the formula above (, , , and , , ), so in general this means we can match up to order . (Though by coincidence, or otherwise, we may end up matching some higher order terms as well.)

We can write the resulting system of equations explicitly by expanding the Taylor series of the approximant to order (since this gives us terms). To make the equations simpler to write I will introduce a constant .

Then we multiply by the denominator.

By setting the different powers of equal, this gives us equations.

We know what , , are, since they must match the Taylor series of . (In particular, .) So by solving this system of equations we can determine all of the unknown parameters. In particular, the last equations (i.e. for ) can be solved to determine , , . Then the first equations immediately give values for , , .

The first step can be written as a system of equations.

Note that if , then some upper diagonal chunk of the matrix will be zero, corresponding to those entries where the subscript of would be negative.

Finally, there’s no guarantee that this matrix will be nonsingular. (For example, consider the case where and all derivatives of up to are zero. Then all rows of the matrix except for the first will be zero.) In such a situation, one can try to throw out degenerate equations, and pull new ones from higher order terms (i.e. consider ). However it’s possible that all additional rows will be degenerate; in this case, the approximant is legitimately underdetermined. This means it can match the function exactly, and you can reduce until your system is nonsingular.

Ok, so now to actually answer the question. I wrote a SymPy script that uses the strategy we just derived to build approximants for me. For , I get the following approximations:

These give the corresponding approximations for :

Polynomial approximations, on the other hand (to equivalent orders), give

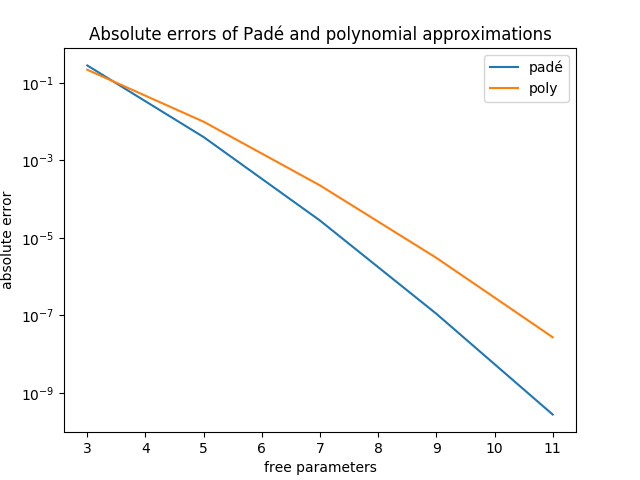

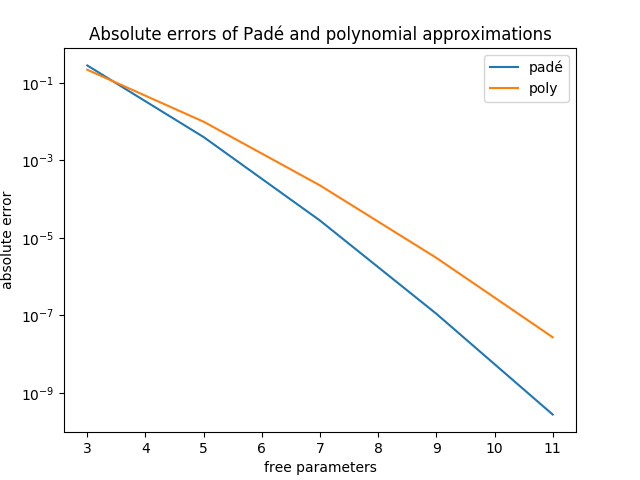

Here are the different errors.

Train a neural network on the output from an order 4 maximal LFSR and learn to reproduce it. How do the results depend on the network depth and architecture?

I have previously implemented backpropagation from scratch, to train my own VAEs. So rather than write this again, I wrote up a better derivation of backpropagation which I can use a reference for future modifications.