Week 12

Rhythmic objects: sonification and meaningful interpretation of gestural interactions with everyday objects

Kayla Briët | Keunwook Kim | Lucy Li | Rio Liu | Diana Mykhaylychenko | Yutong Wang

This project was developed by our Tangible Interfaces group.

Everyday objects hold profound significance, shaping our identities through the meanings, relationships, and memories we associate with them. Our project focuses on sonifying, visualizing, and interpreting gestural interactions with these objects. We explore two applications: sonification and visualization of serial sensor data through audiovisual mapping, and interpretation of that serial data to streamline the use of large language models (LLMs) such as ChatGPT. This page outlines our design strategy, with an emphasis on sonifying gestural interactions with objects and leveraging the objects' inherent affordances for human-computer interaction.

Our exploration also delves into the playful use of gestural-object recognition for creative problem-solving and alleviating social tensions in communal contexts.

Essentially, we wanted to make a tool that could turn any object into a tangible user interface: a creative tool for collocated play and collaboration. We saw potential applications in defusing tensions, breaking down barriers across cultural differences, and building deeper relationships within groups.

Keywords: interaction design, human-computer interaction, tangible user interfaces, audiovisual performance, computer-generated sound, gesture analysis, large language models, playfulness, social connectivity, emotional connection

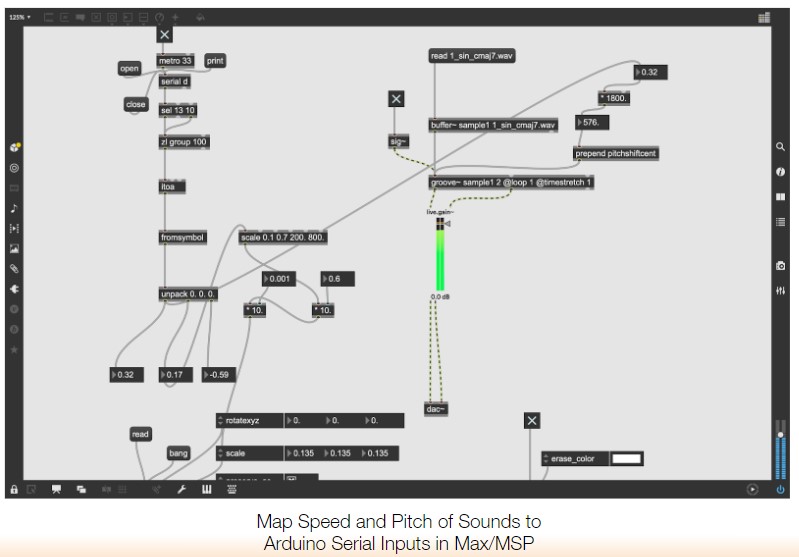

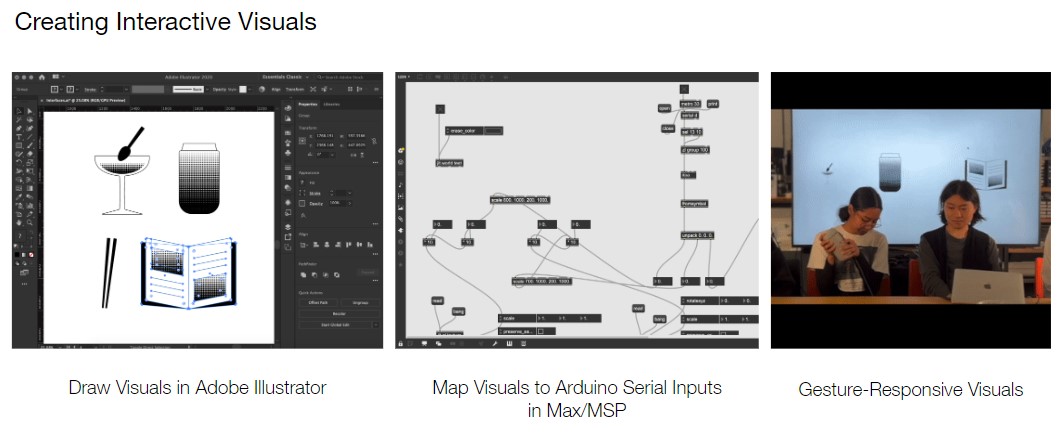

The methodology is straightforward. Take a common object, such as a water bottle, and attach an IMU to capture sensor data from it. Send that data as serial data to any audiovisual or computational processing. Our project had two applications. The first is sonification and visualization of the serial data aggregated from gestural interactions, which works by mapping the data to audiovisual software. The second is interpretation of the same serial data to streamline the use of large language models such as ChatGPT.
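Both applications start by turning raw serial bytes back into numbers. The sketch below is minimal and assumes a format the project does not specify: each line carries six comma-separated values (accelerometer `ax, ay, az`, then gyroscope `gx, gy, gz`) and ends with a newline. The names `ImuSample` and `parse_serial_line` are hypothetical.

```python
from typing import NamedTuple, Optional


class ImuSample(NamedTuple):
    """One IMU reading: accelerometer (ax, ay, az) and gyroscope (gx, gy, gz)."""
    ax: float
    ay: float
    az: float
    gx: float
    gy: float
    gz: float


def parse_serial_line(raw: bytes) -> Optional[ImuSample]:
    """Decode one line of six comma-separated numbers into an ImuSample.

    The six-field CSV format is an assumption for illustration. Partial or
    malformed lines (common right after a port is opened) return None.
    """
    try:
        fields = raw.decode("ascii").strip().split(",")
    except UnicodeDecodeError:
        return None
    if len(fields) != 6:
        return None
    try:
        return ImuSample(*(float(f) for f in fields))
    except ValueError:
        return None


# A line as it might arrive from the microcontroller.
print(parse_serial_line(b"0.01,-0.02,0.98,1.5,-0.3,0.0\r\n"))
```

On a computer, such lines could be read with pyserial, for example `serial.Serial(port, baudrate=115200, timeout=1).readline()`. The port name and baud rate depend on the board and are assumptions here.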

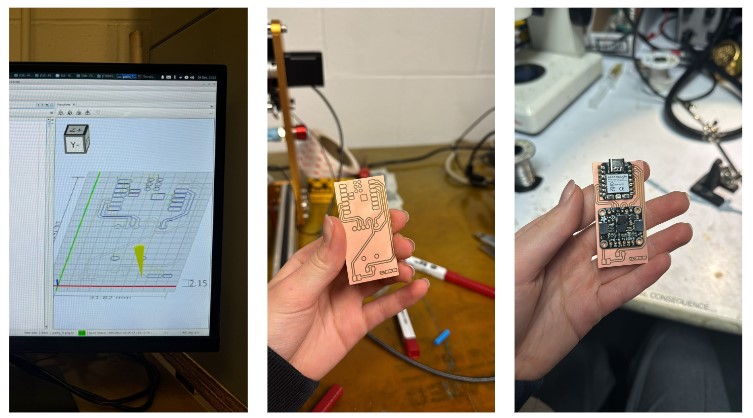

In the audiovisual realm, the challenge is to create meaningful, justified sounds that cue the affordances of these objects, rather than mapping gestures to arbitrary sound textures. One example is augmenting the sound of a water bottle so that it sounds like a rainstick, which creates an object metaphor. Another is designing a string quartet of four everyday objects that play the parts of two violins, a viola, and a cello. In both cases, the sound and its context stay consistent with the corresponding interaction and feel intuitive for it.
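One way to keep the string-quartet metaphor consistent is to map each object's gesture value into the playing range of the instrument it stands in for. The sketch below assumes a gesture value already normalized to [0, 1]. It uses the standard lowest open strings (violin G3, viola C3, cello C2) as MIDI note numbers. The two-octave span and the object-to-instrument assignment are hypothetical choices, not the project's.

```python
# Lowest open-string pitch of each instrument as a MIDI note number
# (violin G3 = 55, viola C3 = 48, cello C2 = 36).
LOWEST_NOTE = {"violin": 55, "viola": 48, "cello": 36}
SPAN_SEMITONES = 24  # assumption: use two octaves above the lowest string

# Hypothetical assignment of everyday objects to quartet parts.
QUARTET = {
    "water bottle": "violin",
    "book": "violin",
    "chopsticks": "viola",
    "bowl": "cello",
}


def midi_to_hz(note: float) -> float:
    """Equal-tempered conversion with A4 (MIDI 69) = 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12)


def gesture_to_pitch(obj: str, value: float) -> float:
    """Map a gesture value in [0, 1] to a frequency within the object's instrument range."""
    value = min(max(value, 0.0), 1.0)  # clamp noisy sensor values
    low = LOWEST_NOTE[QUARTET[obj]]
    note = round(low + value * SPAN_SEMITONES)  # snap to the nearest semitone
    return midi_to_hz(note)


for name in QUARTET:
    print(f"{name:12s} {gesture_to_pitch(name, 0.0):7.1f} Hz .. {gesture_to_pitch(name, 1.0):7.1f} Hz")
```

Because each object stays in its instrument's register, a gesture on the bowl always sounds like a low "cello" line and a gesture on the water bottle like a "violin" line. This is one way to make the mapping feel justified rather than arbitrary.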

In the realm of gestural interaction, the challenge is to interpret gestural data meaningfully and accurately enough to infer commands that streamline interaction with LLMs such as ChatGPT.
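The page does not specify how gestures become commands. As a purely hypothetical illustration, a simple rule-based classifier could label a short window of accelerometer samples and look up a prompt string. The thresholds, the gesture labels, the command texts, and the sign convention for left and right tilt (which depends on how the IMU is mounted) are all assumptions.

```python
import math
from statistics import mean, pstdev
from typing import Optional, Sequence, Tuple

Accel = Tuple[float, float, float]  # (ax, ay, az) in units of g

# Hypothetical gesture -> prompt-command table; not from the project.
COMMANDS = {
    "shake": "Regenerate the last response.",
    "tilt_left": "Make the last response shorter.",
    "tilt_right": "Expand on the last response.",
}

SHAKE_STD_G = 0.5  # assumed: spread of acceleration magnitude (g) that counts as shaking
TILT_MEAN_G = 0.5  # assumed: mean sideways gravity component (g) that counts as a tilt


def classify(window: Sequence[Accel]) -> str:
    """Label a short, non-empty window of accelerometer samples with a coarse gesture."""
    magnitudes = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in window]
    if pstdev(magnitudes) > SHAKE_STD_G:
        return "shake"
    side = mean(ay for _, ay, _ in window)
    if side < -TILT_MEAN_G:
        return "tilt_left"
    if side > TILT_MEAN_G:
        return "tilt_right"
    return "idle"


def gesture_to_command(window: Sequence[Accel]) -> Optional[str]:
    """Return a prompt string to send to an LLM, or None when no gesture is recognized."""
    return COMMANDS.get(classify(window))


print(gesture_to_command([(0.0, 0.7, 0.7)] * 10))
```

A rule-based approach is easy to inspect and tune by hand. A learned classifier trained on recorded gestures would be the usual next step if the rules proved too brittle.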

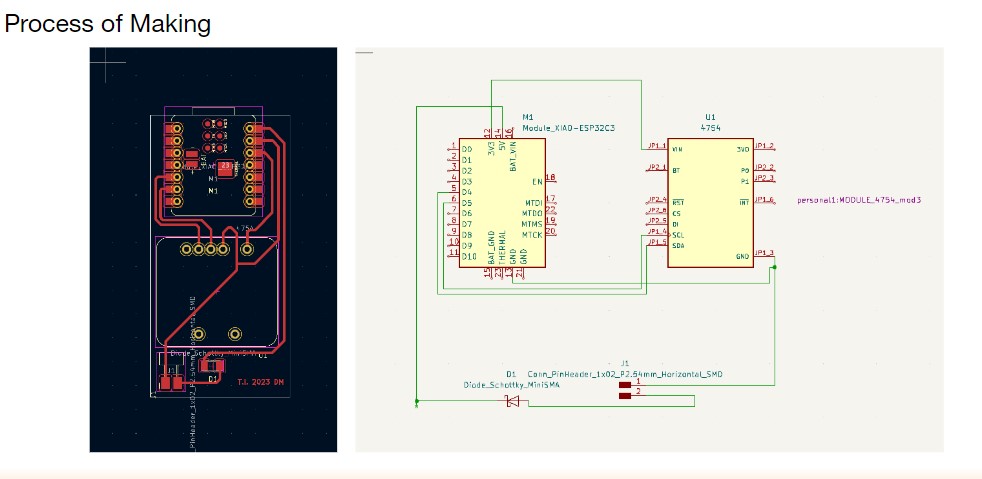

The approach is simple: integrate an Inertial Measurement Unit (IMU) into an everyday object, such as a water bottle, and transmit its readings as serial data to audiovisual processing software such as Max/MSP and TouchDesigner.
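Tilt is not measured directly by an IMU. A common estimate derives it from the direction of gravity in the accelerometer reading while the object is roughly still. The sketch below uses the standard roll/pitch formulas and then normalizes each angle to [0, 1], a convenient range for mapping inside audiovisual software. The axis orientation, the ±90° range, and the space-separated output line are assumptions, not the project's actual protocol.

```python
import math
from typing import Tuple


def tilt_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Estimate (roll, pitch) in degrees from a static accelerometer reading.

    Valid only while the object is roughly still, so that the accelerometer
    mostly measures gravity. Axis directions depend on how the IMU is mounted.
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


def to_unit(angle_deg: float, limit: float = 90.0) -> float:
    """Map an angle in [-limit, limit] to [0, 1], clamping outside that range."""
    return min(max((angle_deg + limit) / (2 * limit), 0.0), 1.0)


def encode_line(roll: float, pitch: float) -> bytes:
    """Format normalized tilt as one space-separated, newline-terminated line."""
    return f"{to_unit(roll):.3f} {to_unit(pitch):.3f}\n".encode("ascii")


# Sensor level, then tipped about 45 degrees around its x axis.
print(encode_line(*tilt_from_accel(0.0, 0.0, 1.0)))
print(encode_line(*tilt_from_accel(0.0, 0.707, 0.707)))
```

Because this estimate relies on gravity alone, it becomes unreliable during fast motion. Fusing in the gyroscope (for example with a complementary filter) is a common refinement.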

In the realm of sound, the serial data can control the frequency of an oscillator, a basic building block of sound synthesis. Changing the object's tilt or rotation, or touching it, can then change the pitch of the oscillator synth. Similarly, changing the playback speed of a looped sample changes its pitch accordingly.
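Two relationships make these mappings feel natural. First, pitch is perceived logarithmically, so an exponential map from a control value to frequency gives even-sounding steps. Second, playing a sample at `rate` times its normal speed shifts its pitch by 12·log2(rate) semitones. The sketch below shows both, plus a phase-accumulating sine oscillator that changes frequency without clicks. The 110–880 Hz range and the 64-sample control block are assumptions.

```python
import math
from typing import List

F_MIN, F_MAX = 110.0, 880.0  # assumed oscillator range: A2 to A5 (three octaves)


def control_to_freq(x: float) -> float:
    """Exponential map from a control value in [0, 1] to Hz.

    Equal steps in x give equal musical intervals.
    """
    x = min(max(x, 0.0), 1.0)
    return F_MIN * (F_MAX / F_MIN) ** x


def playback_rate_to_semitones(rate: float) -> float:
    """Pitch shift, in semitones, of a sample played at `rate` times normal speed."""
    return 12.0 * math.log2(rate)


def render_sine(controls: List[float], sr: int = 48_000, block: int = 64) -> List[float]:
    """Render a sine oscillator whose frequency follows one control value per block.

    The phase is accumulated sample by sample, so frequency changes do not
    cause discontinuities (clicks) at block boundaries.
    """
    out: List[float] = []
    phase = 0.0
    for x in controls:
        step = 2.0 * math.pi * control_to_freq(x) / sr
        for _ in range(block):
            out.append(math.sin(phase))
            phase = (phase + step) % (2.0 * math.pi)
    return out


# Sweep the control from 0 to 1, e.g. as the object is slowly tilted.
audio = render_sine([t / 100 for t in range(101)])
print(len(audio), playback_rate_to_semitones(2.0))
```

In Max/MSP or TouchDesigner the same mapping would be done with built-in objects or operators. The Python version only makes the arithmetic explicit.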

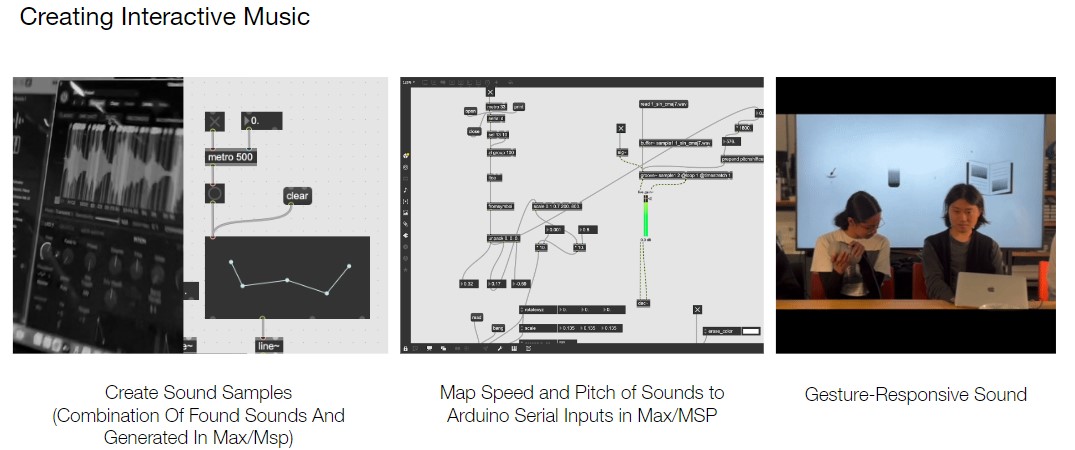

We selected four objects for sonification: a bowl with water (played with a metal spoon), two chopsticks, a book, and a water bottle.

For the bowl, water, and spoon ensemble, it was crucial to trigger a signal when the spoon touched the water, which led us to use a conductive material. For chopsticks, by contrast, we wanted the signal to fire when the two chopsticks touched each other, rather than when a single chopstick touched something. In each case, the design was shaped by how we naturally interact with these objects and how we expect to interact with them.
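In both cases the sound should fire once, at the moment contact begins, even if the reading jitters while the spoon sits at the water's surface. A common way to do this is hysteresis (separate "on" and "off" levels) combined with rising-edge detection. The sketch below assumes the contact circuit yields a numeric reading per sample. The threshold values and the sample readings are hypothetical.

```python
from typing import Iterable, List


class ContactTrigger:
    """Turn a noisy contact reading into discrete touch events.

    Two thresholds (hysteresis) keep jitter around a single level from
    firing repeated triggers; only the rising edge, when contact begins,
    is reported.
    """

    def __init__(self, on_level: float, off_level: float) -> None:
        if off_level >= on_level:
            raise ValueError("off_level must be below on_level")
        self.on_level = on_level
        self.off_level = off_level
        self.touching = False

    def update(self, reading: float) -> bool:
        """Feed one reading; return True only when a new contact starts."""
        if not self.touching and reading >= self.on_level:
            self.touching = True
            return True
        if self.touching and reading <= self.off_level:
            self.touching = False
        return False


def touch_onsets(readings: Iterable[float], on_level: float, off_level: float) -> List[int]:
    """Indices of the readings at which a contact begins."""
    trigger = ContactTrigger(on_level, off_level)
    return [i for i, r in enumerate(readings) if trigger.update(r)]


# Spoon dips into the water, wobbles at the surface, lifts out, dips again.
readings = [0.1, 0.2, 0.9, 0.6, 0.8, 0.7, 0.1, 0.05, 0.95, 0.9]
print(touch_onsets(readings, on_level=0.75, off_level=0.3))  # [2, 8]
```

For the chopsticks, where the circuit is simply open or closed, a digital 0/1 reading works with `on_level=1` and `off_level=0`.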

Connections were established using wire and conductive tape, linked to an Adafruit Seeed.