Technologies Used

Python websockets were used to interface with our web input app.

Knowledge of the Gestalt framework and 3-axis delta machines was used to generate the appropriate motor commands.

Personal Project Goals

Since this was a team project, my goal wasn't to challenge myself in some way that I hadn't before; rather, I wanted to contribute where I knew I would be most effective for the team. Beyond that, though, this happened to be a good opportunity for me to get my hands dirty with Gestalt, which seems like a great framework for rapidly prototyping machines. I'm not sold on the cardboard frame designs (mostly because I'm experienced enough to make something sturdier from scratch), but the easy software-to-electronics networking is a huge win. Since I want to work on modifying 3D printer software sometime during my PhD, working on the software for a similar machine seemed like a great place to get started.

As for the licking robot idea... don't ask. That was all Ani's idea. I wanted to work on controlling a machine, but I didn't care too much what the application was.

Methodology

At first, I took the lead on software, but we soon flattened to a less hierarchical structure. At the beginning of this project, we thought that maybe we could modify some open-source CAM software for our purposes, and we tentatively decided on PyCAM. It provided an interface through which we could draw paths on a screen and have them converted to GCode. The initial reasoning was that we could pretty up the interface to be cake-licking specific, convert the GCode to Gestalt language, and have a very professional interface from the get-go, without having to do the polygon-to-path conversion ourselves. In fact, I quickly wrote the GCode-to-Gestalt converter myself here. Unfortunately, this fell apart when upper management said they wanted this to be usable interactively through licking a cellphone. We decided to write our own application, which would send information from a device to a web server. The web server would then pass that information to a host computer, which would interpret it and interface with Gestalt.
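To give a rough idea of what a GCode-to-waypoint conversion involves, here is a minimal sketch. It is an illustration written for this page, not the converter linked above. It assumes absolute positioning, handles only G0/G1 linear moves that start the line, and the function name `gcode_to_waypoints` is hypothetical. The resulting coordinate list is what would then be handed to the machine's move calls.

```python
import re

# An axis letter followed by a signed decimal number, e.g. "X10.5" or "Z-2".
_WORD = re.compile(r"([XYZ])\s*([-+]?(?:\d+\.?\d*|\.\d+))")


def gcode_to_waypoints(gcode_text, start=(0.0, 0.0, 0.0)):
    """Convert G0/G1 linear moves into a list of absolute (x, y, z) tuples.

    Assumes absolute positioning. Axes omitted from a line keep their
    previous value. Lines that are not G0/G1 moves are skipped.
    """
    position = dict(zip("XYZ", start))
    waypoints = []
    for raw_line in gcode_text.splitlines():
        # Drop ';' comments and parenthesised comments, then normalise case.
        line = raw_line.split(";", 1)[0]
        line = re.sub(r"\(.*?\)", "", line).strip().upper()
        # Accept G0, G1, G00, G01 but not G10, G21, and so on.
        if not re.match(r"G0*[01](?!\d)", line):
            continue
        words = _WORD.findall(line)
        if not words:
            continue
        for axis, value in words:
            position[axis] = float(value)
        waypoints.append((position["X"], position["Y"], position["Z"]))
    return waypoints


if __name__ == "__main__":
    program = "G21 ; millimetres\nG0 X0 Y0 Z5\nG1 X10 Y0\nG01 Z-1.5 (plunge)\nM2"
    for point in gcode_to_waypoints(program):
        print(point)
```

Arcs (G2/G3), relative moves, and feed rates are left out on purpose to keep the sketch short.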

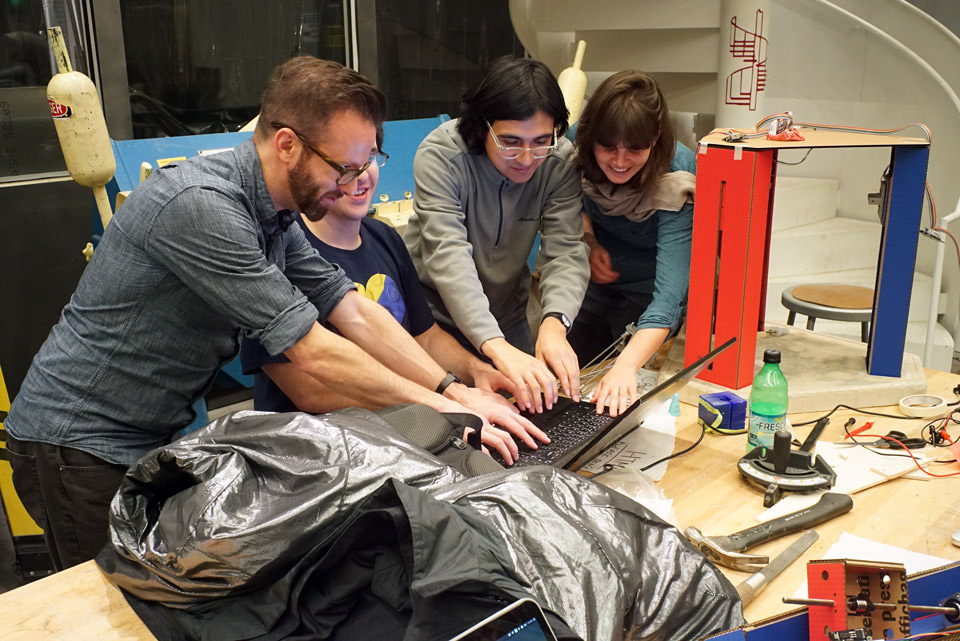

I took the lead on receiving commands from the web server, which was handled using websockets in Python. Python was the obvious choice, because then the receiving node could communicate directly with Gestalt without the need for a middle layer. I also worked with Jasmin to calculate the inverse kinematics, which we derived from this article here and some machine measurements, and worked hard on the integration and tweaking. The result is a streaming application that continually sends licked waypoints (interpreted as touch events) to my client code, which converts them to the appropriate machine motor coordinates. My code lives in the group repository, but my contribution can be found locally here or on the github repository here.
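As a rough illustration of the waypoint-to-motor step (not our actual code), here is a minimal sketch. It assumes a linear delta with three vertical towers spaced 120 degrees apart, which may not match our machine's geometry exactly. The JSON message layout, the function names, and the dimensions in the example are all made up for this sketch. In a real client, `parse_touch_message` would be called on each message received over the websocket.

```python
import json
import math


def parse_touch_message(message):
    """Decode one JSON message into a list of (x, y) waypoints.

    The layout {"points": [{"x": ..., "y": ...}, ...]} is an assumption
    for this sketch, not the format our web app actually sends.
    """
    payload = json.loads(message)
    return [(float(p["x"]), float(p["y"])) for p in payload["points"]]


def tower_positions(radius):
    """Place three towers 120 degrees apart on a circle of the given radius."""
    return [
        (radius * math.cos(math.radians(a)), radius * math.sin(math.radians(a)))
        for a in (90, 210, 330)
    ]


def linear_delta_ik(x, y, z, rod_length, towers):
    """Return the carriage height on each tower for effector position (x, y, z).

    Each rod has a fixed length, so a carriage sits above the effector by
    sqrt(rod_length^2 - horizontal_distance^2).
    """
    heights = []
    for tower_x, tower_y in towers:
        horizontal_sq = (x - tower_x) ** 2 + (y - tower_y) ** 2
        vertical_sq = rod_length ** 2 - horizontal_sq
        if vertical_sq < 0:
            raise ValueError("target (%.1f, %.1f) is out of reach" % (x, y))
        heights.append(z + math.sqrt(vertical_sq))
    return heights


if __name__ == "__main__":
    towers = tower_positions(radius=100.0)  # hypothetical dimensions
    message = '{"points": [{"x": 0, "y": 0}, {"x": 0, "y": 10}]}'
    for x, y in parse_touch_message(message):
        print(linear_delta_ik(x, y, 0.0, rod_length=200.0, towers=towers))
```

The out-of-reach check is also a cheap way to see how rod length limits the reachable area of the cake.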

Current Issues

The only issue we have is that the workspace is pretty small, since we didn't account for the tongue height when designing the machine. We can't cover the desired cake diameter. This could be fixed with taller servo rods. Beyond that, lick away! See the full project (with pics!) here.