Final Project

As a vocalist, I have always been drawn to enhancing the live performativity of music by expanding what a sound can become through manipulation, looping, and analog-digital experimentation, especially, in my case, with my own vocals.

One idea for the final project is to make my own musical instrument that uses my voice interactively as its main input. Later on, I would also like it to work in multi-user and public environments.

Process of Making, Step by Step

1. Deciding on the Microcontroller

My initial idea was to work with the ESP32 and make the gloves talk to each other wirelessly through WebSockets. After I spoke to Neil about it and he advised me to use radios instead, I immediately purchased some and started researching. Even though it is a great and probably much simpler idea, I basically have SIX (6) days to go. I found that pivoting back to the SAMD21E18 and designing a completely new schematic would take at least 2 days of sweet labor, and I unfortunately do not have the time for that. I already had an ESP32 schematic from Input Devices Week, and all I had to do was make it as small and wearable as I could. That meant taking the TFT screen out, keeping the IMU part, and maybe even removing one of the buttons as well (hopefully not the reset one, of course). Let's start.

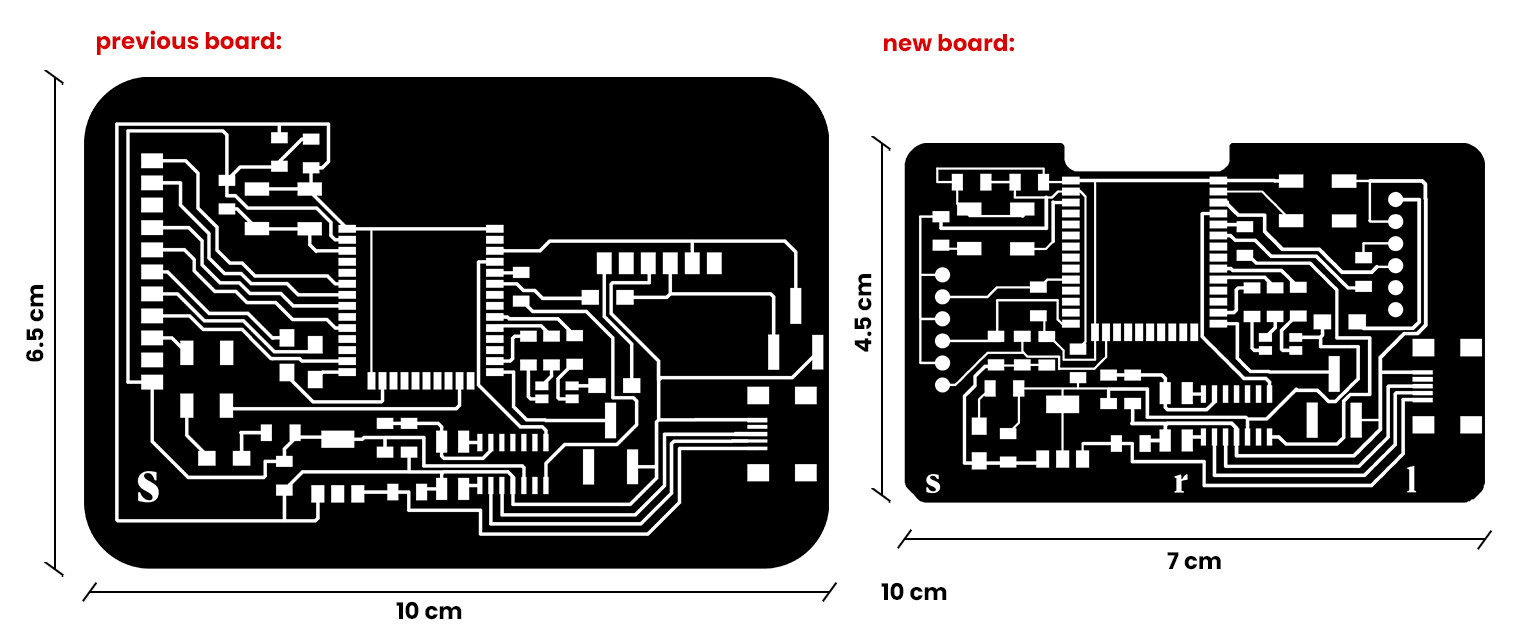

After taking the TFT pinouts and the buttobn out as well as making the traces as short and squeezed possible; my board looked like this in comparison to the old one:

2. Deciding on the Software

I had two options in mind, and I spent a LONG time gathering all the tools and information I needed to make an ESP32 synthesizer.

2.1. MIDI

According to 480837 websites and some of the TAs, this was the easiest option. To make a MIDI synthesizer, I first had to connect the serial port (or, ideally, a wireless link) to the music software. Hairless MIDI and loopMIDI were the best options I could find, and with them I was able to generate a sound from a simple piece of code. loopMIDI creates 'virtual loopback MIDI-ports to interconnect applications on Windows that want to open hardware-MIDI-ports for communication.' Hairless MIDI is a serial bridge: it forwards the MIDI messages arriving on the serial port to a MIDI port, so that music software such as Ableton or Logic can receive them.
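
Since Hairless MIDI simply forwards the MIDI bytes that arrive on the serial port, the board only has to write standard three-byte MIDI messages. Below is a minimal, hardware-independent sketch of how those bytes are put together. The helper names (`noteOn`, `noteOff`, `controlChange`) are my own hypothetical ones, not from any MIDI library, and the sketch assumes channels numbered 0 to 15 and data values in the 7-bit range 0 to 127.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// A MIDI channel-voice message: one status byte followed by two data bytes.
using MidiMessage = std::array<std::uint8_t, 3>;

// Data bytes are 7-bit (0-127). Clamping keeps the top bit clear, so a data
// byte can never be mistaken for a status byte by the receiver.
constexpr std::uint8_t dataByte(int value) {
    return static_cast<std::uint8_t>(value < 0 ? 0 : (value > 127 ? 127 : value));
}

// Status byte = message type in the high nibble, channel (0-15) in the low nibble.
std::uint8_t statusByte(std::uint8_t type, int channel) {
    assert(channel >= 0 && channel < 16);
    return static_cast<std::uint8_t>(type | channel);
}

// Note On (0x9n): note number and velocity.
MidiMessage noteOn(int channel, int note, int velocity) {
    return {statusByte(0x90, channel), dataByte(note), dataByte(velocity)};
}

// Note Off (0x8n): note number and release velocity.
MidiMessage noteOff(int channel, int note, int velocity = 0) {
    return {statusByte(0x80, channel), dataByte(note), dataByte(velocity)};
}

// Control Change (0xBn): controller number and value, e.g. a sensor reading.
MidiMessage controlChange(int channel, int controller, int value) {
    return {statusByte(0xB0, channel), dataByte(controller), dataByte(value)};
}
```

On the ESP32, a message built this way could be sent with `Serial.write(msg.data(), msg.size())`, with the serial port opened at the same baud rate selected in Hairless MIDI (115200 by default). For example, `noteOn(0, 60, 100)` starts middle C on the first channel, and `controlChange(0, 1, value)` would stream a sensor value to the modulation wheel controller.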

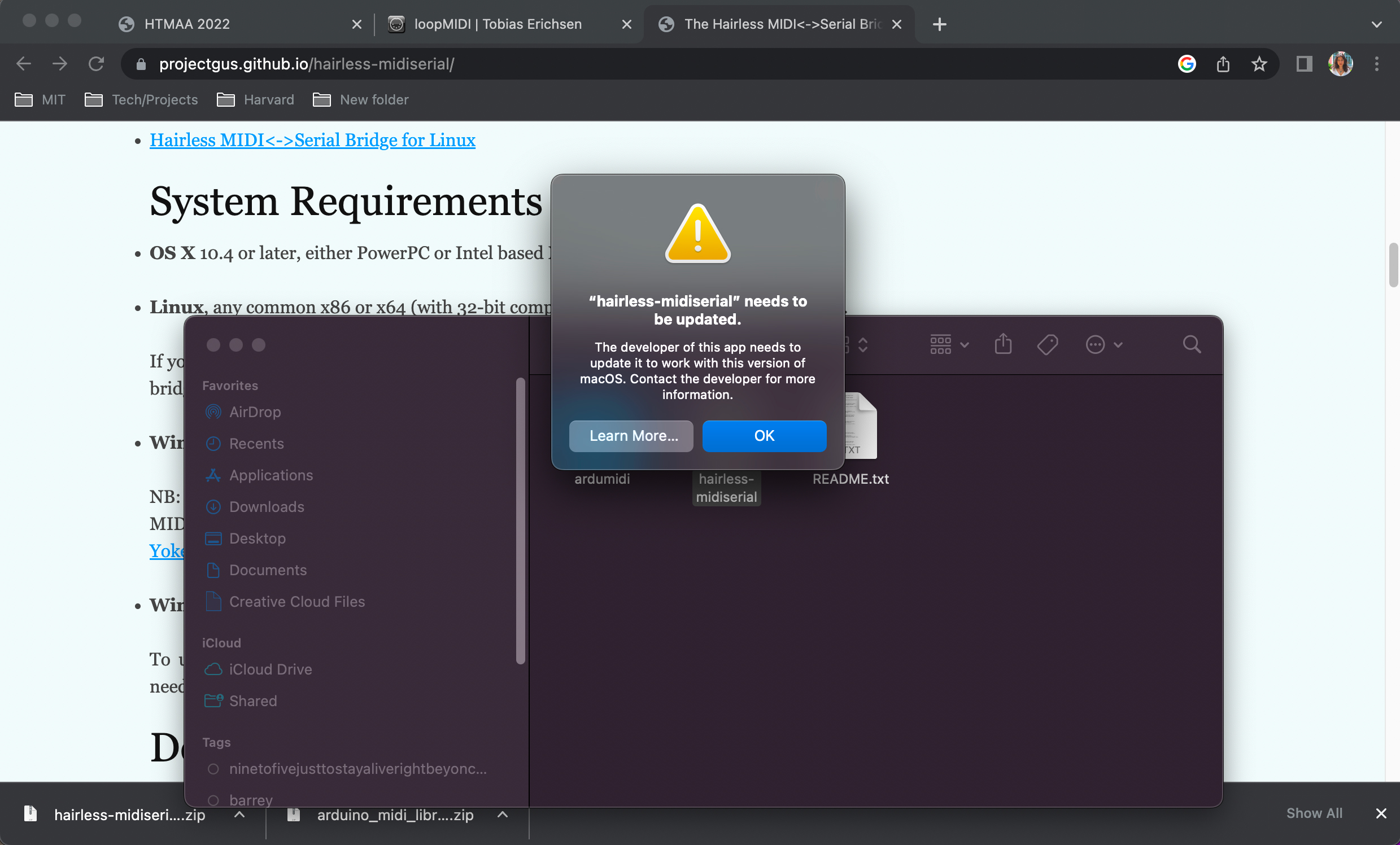

When I downloaded HairlessMIDI to my Macbook, it gave me this error:

So I decided to use my Windows computer with Ableton Live instead. Using the ArduMIDI library, I was able to upload the code (Thank you Iulian!):

However, things unfortunately got more complicated with the MPU6050. I ran into a lot of library issues, which drove me crazy: the MPU library would not compile my sketch, no matter what I did. I had very limited time, and instead of spending another day debugging, I wanted to see how the other option would turn out. As stressful but sweet as it sounds, I decided to switch to Max MSP.
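
Whichever library ends up reading the MPU6050, turning its accelerometer output into something musical is mostly arithmetic. This sketch assumes I already have the three raw 16-bit accelerometer readings. It estimates how far the board is tilted, using the direction of gravity, and maps -90 to 90 degrees onto a 0 to 127 MIDI value. The function names and the choice of axis are hypothetical, and the sign of the angle depends on how the board sits on the hand.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

constexpr double kPi = 3.14159265358979323846;

// Angle (degrees) between the sensor's X axis and the horizontal plane,
// estimated from gravity as seen by the accelerometer. Raw counts can be used
// directly: atan2 only depends on the ratio between axes, so the sensor's
// full-scale setting cancels out. Valid only while the hand moves slowly,
// because any extra acceleration is added to gravity.
double tiltDegrees(std::int16_t ax, std::int16_t ay, std::int16_t az) {
    const double x = ax, y = ay, z = az;
    return std::atan2(x, std::sqrt(y * y + z * z)) * 180.0 / kPi;
}

// Map an angle in [-90, 90] degrees linearly onto a 7-bit MIDI value [0, 127].
int angleToMidi(double degrees) {
    if (degrees < -90.0) degrees = -90.0;
    if (degrees > 90.0) degrees = 90.0;
    return static_cast<int>(std::lround((degrees + 90.0) / 180.0 * 127.0));
}
```

With the board lying flat, the tilt is 0 degrees and the output sits in the middle of the range. Tilting the X axis straight up or down pushes the value to 127 or 0, which could then be sent as a Control Change.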

2.2. Max MSP

By the time I switched to Max MSP, I had only a couple of days left, and I had to decide what my priorities were. Connecting to the serial port through Max MSP was a lot of fun, and I realized how much I wanted to learn the software. Even though the time crunch was obvious, I followed the route that felt most right and decided not to sleep for a couple of days. This also meant that I would spend most of my time figuring out the software and give up on wireless communication for now.
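
Getting sensor values into Max over the serial port is easiest if each reading is sent as one line of plain ASCII text, with the values separated by spaces and the line ending in a newline. Below is a minimal sketch of that framing, assuming integer values. `formatLine` is a hypothetical helper, not part of Max or the Arduino core. On the Max side, the patch can collect incoming bytes from the [serial] object until it sees a newline (ASCII 10) and then turn them back into a list of numbers, for example with [zl group], [itoa] and [fromsymbol].

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Format one reading as a single ASCII line, e.g. {64, 12, 127} -> "64 12 127\n".
// Spaces separate the values and '\n' (ASCII 10) marks the end of the message,
// so the Max patch knows when a complete list has arrived.
std::string formatLine(const std::vector<int>& values) {
    std::string line;
    for (std::size_t i = 0; i < values.size(); ++i) {
        if (i > 0) line += ' ';
        line += std::to_string(values[i]);
    }
    line += '\n';
    return line;
}
```

On the ESP32 this would become something like `Serial.print(formatLine({ax, ay, az}).c_str());`. Arduino's `Serial.println` ends lines with `\r\n` instead, so if that is used, the patch should also ignore ASCII 13.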

Right now, I am imagining a fabric that can be stepped on (performed on the ground) and wrapped around the body.

I like the idea of space holding the memory of the sound; in other words, looping my voice, but in a spatially specific way. It would be great to have a speaker in each rug-like fabric module, so that when I step on a module, the sound plays from that fabric.
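
To make the "memory of the sound" idea more concrete, each floor module could keep its own loop and advance it like a loop pedal every time someone steps on it. This is a hypothetical sketch of that logic only: the states and the `LoopFloor` class are my own assumptions. The actual recording and playback of audio would happen in Max or Ableton, with the module index selecting the channel and the speaker in that fabric.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical per-module loop state, modelled on a typical loop pedal.
enum class LoopState { Empty, Recording, Playing, Stopped };

// Advance one module's loop when it is stepped on:
// Empty -> Recording (capture the voice), Recording -> Playing (close the loop),
// Playing <-> Stopped (toggle playback of the stored loop).
LoopState onStep(LoopState s) {
    switch (s) {
        case LoopState::Empty:     return LoopState::Recording;
        case LoopState::Recording: return LoopState::Playing;
        case LoopState::Playing:   return LoopState::Stopped;
        case LoopState::Stopped:   return LoopState::Playing;
    }
    return s;  // unreachable; keeps compilers from warning about a missing return
}

// One independent loop per floor module, so each rug "remembers" its own sound.
template <std::size_t N>
class LoopFloor {
public:
    void step(std::size_t module) {
        assert(module < N);
        states_[module] = onStep(states_[module]);
    }

    LoopState state(std::size_t module) const {
        assert(module < N);
        return states_[module];
    }

private:
    std::array<LoopState, N> states_{};  // value-initialised: every module starts Empty
};
```

Keeping the state per module, rather than one global loop, is what lets each fabric hold a different layer of the voice.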

I want to start by experimenting with conductive fabrics (machine knitting and electronic textiles). Using Arduino and Arduino Keypad, I will create spatial floor modules that act like a loop station, with each module on its own channel. Then I will use the same fabric with accelerometers and other sound-modifying tools to change the texture of the sound, add intervals as layers so that the sound becomes a chord, and so on. I have experimented with Ableton Live and Logic Pro, but I need to learn Max MSP Live.
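
Layering intervals into a chord comes down to frequency ratios. In 12-tone equal temperament, shifting a pitch by n semitones multiplies its frequency by 2^(n/12). The sketch below computes those ratios. As a purely hypothetical mapping, it also lets a 0 to 127 control value (for example hand tilt from the accelerometer) choose how many layers sit on top of the voice. The interval sets and zone boundaries are placeholders, not a design decision.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// In 12-tone equal temperament, moving up n semitones multiplies the
// frequency by 2^(n/12): 12 semitones (an octave) doubles it.
double intervalRatio(int semitones) {
    return std::pow(2.0, semitones / 12.0);
}

// Stack intervals (in semitones) on top of a base pitch to form a chord.
std::vector<double> chordFrequencies(double baseHz, const std::vector<int>& intervals) {
    std::vector<double> freqs;
    freqs.reserve(intervals.size());
    for (int s : intervals) {
        freqs.push_back(baseHz * intervalRatio(s));
    }
    return freqs;
}

// Hypothetical mapping: split a 0-127 control value (e.g. hand tilt) into four
// zones, each adding one more layer on top of the voice.
std::vector<int> intervalsForValue(int value) {
    assert(value >= 0 && value <= 127);
    static const std::vector<std::vector<int>> kLayers = {
        {0},            // 0-31: dry voice only
        {0, 7},         // 32-63: + perfect fifth
        {0, 4, 7},      // 64-95: + major third (major triad)
        {0, 4, 7, 12},  // 96-127: + octave
    };
    return kLayers[static_cast<std::size_t>(value) / 32];
}
```

For example, singing A3 at 220 Hz with the {0, 4, 7} layer would ask the pitch shifters for roughly 277.2 Hz and 329.6 Hz on top of the original note. In practice the ratios themselves would likely be passed to a pitch shifter in Max or Ableton rather than computed on the board.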

Questions

1. What does it do?

It adds sound effects (intervals, reverb, and loops) depending on the position of my hand and the flex of my fingers.

2. Who's done what beforehand?

Imogen Heap: MiMU Gloves! There are many other glove synthesizers, but MiMU Gloves are a product I could actually use for my music production and stage performance. MiMU Gloves have been out of stock, so I thought this could be a great opportunity to make my own.

3. What will you design?

I will design a hand-wearable synthesizer that will allow me to alter my voice or any MIDI tune through the position of my hand and fingers.

4. What materials and components are you planning on using?

1. Board: I have already designed an ESP32 board with an integrated IMU; however, I need to design a smaller board and add a battery to it.
2. Wearable: I already have compression gloves, but I want to sew or resin print a wearable as soon as I can!
3. PCB Compartment: I want to 3D or resin print a casing for the PCB as well.
4. Microphone (Shure58A), Audio Interface, Speakers: Since I unfortunately left all my musical equipment in Istanbul, I borrowed the set from FAS Education Support Services at Harvard.
5. Wireless Connection through WebSocket
6. Max MSP or Ableton Live

5. Where did they come from?

6. How much will it cost?

7. What processes were used?

8. What questions were answered?