HTMA Week 1: Final Project Ideas

Published: 2023-09-16

Intro

Heyo, welcome to week 1 of my progress blog for HTMA Fall 2023. Totally not writing this during the second week.

Lord Gershenfeld’s assignment for week 1 was to write about a final project idea. After procrastinating for a week (I mean, thinking long and hard about it), I landed on this:

The Mask

Once upon a time, a dumb and edgy middle/high school Bowen always thought: wouldn’t it be cool if you could have a mask/helmet that displays your emotions as pixel-art emotes?

Now that I’ve grown into an educated and mature college kid at MIT, I still think it’s cool AF.

I have two main sources of inspiration for this:

The character Wrench from the Ubisoft video game Watch Dogs 2 wears such a mask.

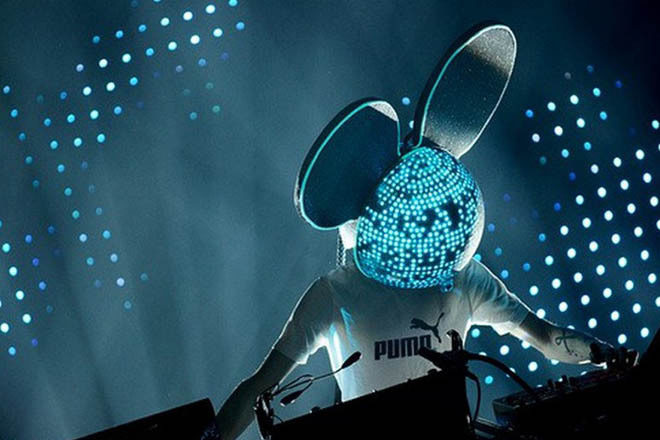

The popular musician Deadmau5 once wore a helmet littered with LEDs for a concert, on which he’d display various things as the concert went on.

My final project would be more of a helmet than a mask. Of course, for extra cool factor, I want the helmet to look more sci-fi: perhaps something cyberpunk, or something like Daft Punk.

Milestones

Basic Milestones

- Support displaying at least these basic emotions/states of thought (for the basic milestone, these can be toggled manually):
  - Neutral
  - Happiness
  - Sadness
  - Anger
  - Surprise
  - Confusion
  - Thinking

- Convert an existing helmet or 3D print a helmet design and attach an LED array underneath the face shield.

- Make sure the user can actually see out of the helmet.
  - Deadmau5 has screens inside his helmet that show a camera feed; we don’t want this.
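
For the basic milestone, the emotes only need to be stored somewhere and switched by hand. Here is a minimal Python sketch, assuming an 8x8 single-color LED matrix described one byte per row (bit 7 is the leftmost LED). The emotion names come from the list above, but the pixel art, the `EmoteToggle` class, and the one-button-cycles-through-everything control scheme are hypothetical placeholders; pushing a frame to real LEDs depends on the driver hardware and is left out.

```python
from enum import Enum


class Emotion(Enum):
    NEUTRAL = 0
    HAPPINESS = 1
    SADNESS = 2
    ANGER = 3
    SURPRISE = 4
    CONFUSION = 5
    THINKING = 6


# Placeholder 8x8 emotes, one byte per row; bit 7 is the leftmost LED.
# These shapes are hypothetical stand-ins, not the final pixel art.
EMOTES = {
    Emotion.NEUTRAL:   [0x00, 0x66, 0x66, 0x00, 0x00, 0x7E, 0x00, 0x00],
    Emotion.HAPPINESS: [0x00, 0x66, 0x66, 0x00, 0x81, 0x42, 0x3C, 0x00],
    Emotion.SADNESS:   [0x00, 0x66, 0x66, 0x00, 0x3C, 0x42, 0x81, 0x00],
    Emotion.ANGER:     [0x81, 0x42, 0x66, 0x00, 0x3C, 0x42, 0x00, 0x00],
    Emotion.SURPRISE:  [0x66, 0x66, 0x00, 0x18, 0x24, 0x24, 0x18, 0x00],
    Emotion.CONFUSION: [0x06, 0x66, 0x60, 0x00, 0x00, 0x32, 0x4C, 0x00],
    Emotion.THINKING:  [0x00, 0x66, 0x00, 0x00, 0x1E, 0x00, 0x00, 0x00],
}


def to_pixels(rows):
    """Expand 8 row bytes into an 8x8 grid of booleans (True = LED on)."""
    return [[bool((row >> (7 - col)) & 1) for col in range(8)] for row in rows]


def render(emotion):
    """Draw an emote as text ('#' = on, '.' = off) for testing without hardware."""
    return "\n".join(
        "".join("#" if on else "." for on in row) for row in to_pixels(EMOTES[emotion])
    )


class EmoteToggle:
    """Manual control: each button press advances to the next emotion, wrapping around."""

    def __init__(self):
        self.order = list(Emotion)
        self.index = 0

    @property
    def current(self):
        return self.order[self.index]

    def press(self):
        self.index = (self.index + 1) % len(self.order)
        return self.current


toggle = EmoteToggle()
toggle.press()  # NEUTRAL -> HAPPINESS
print(render(toggle.current))
```

Storing each emote as eight bytes keeps all seven frames tiny, and a byte-per-row layout should map fairly directly onto common LED matrix driver chips; a bigger panel would just need more bits per row.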

Extended Milestone

- Use ML to infer emotions from speech or gestures to determine what gets displayed on the helmet.
  - If possible, we want to infer emotions locally, without using cloud infrastructure.
    - That means running an ML model on a small computer like a Raspberry Pi.
    - If that isn’t feasible or time runs out, a cloud-based solution can work for a prototype, but we want to avoid this.

Snap Back to Reality

The idea is cool and all, but how feasible is it actually?

Let’s start with what is probably the most difficult thing for both machines and humans: handling emotions.

Emotional Intelligence

AI emotion perception largely falls into three categories, depending on the input:

- Text
  - Unfortunately, this is not an option, since we’re not dealing with direct text input.

- Audio
  - This is definitely feasible. It would be trivial to put a microphone inside the helmet.

- Video
  - This would be extremely difficult to pull off. In the tight, dark space of a helmet, it would be nigh impossible to capture good enough footage of the face to feed into an emotion perception model.

So it seems that inferring emotion from audio would be the best option. There are already many existing models readily available to us. The issue would be running it.
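Whatever model we end up with, it will most likely output one label per short chunk of audio, and those raw labels will probably jump around from chunk to chunk. A minimal sketch of one way to keep the helmet from flickering between emotes, assuming a hypothetical classifier that returns one string label per audio window: only switch emotes once a label wins a strict majority of the last few windows. `EmotionSmoother` and the window size of 5 are illustrative placeholders, not tuned values.

```python
from collections import Counter, deque


class EmotionSmoother:
    """Majority vote over the last `window` per-window emotion labels.

    The displayed emote only changes when one label holds a strict majority
    of the full window size, so one misclassified chunk can't flip the display.
    """

    def __init__(self, window=5, initial="neutral"):
        self.history = deque(maxlen=window)  # oldest labels fall off automatically
        self.current = initial

    def update(self, label):
        self.history.append(label)
        winner, count = Counter(self.history).most_common(1)[0]
        if count > self.history.maxlen // 2:  # strict majority of the window
            self.current = winner
        return self.current


smoother = EmotionSmoother(window=5)
frames = ["happiness", "happiness", "anger", "happiness", "happiness"]
print([smoother.update(f) for f in frames])
```

The trade-off is delay: a genuine change of mood only shows up after a few windows, so the window size trades responsiveness for stability.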

Cloud 9

Most of the time, ML models are run in the cloud because of their compute and power requirements. Our helmet has neither power nor compute to spare, and running things in the cloud comes with latency that would disrupt the experience. Therefore, we want to run the model locally if possible; the difficult part is finding a model lightweight enough to run on a Raspberry Pi. If we can’t, we may need to train our own model, but that would be a challenge of its own.

Alternatives

For those reasons, we shouldn’t rely completely on an ML-based solution, and we should be prepared to fall back on alternatives such as:

- Emotes being remote controlled (dedicated remote or phone)

- Gesture-based controls?
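
For the remote-control fallback, the phone or remote only has to tell the helmet which emote to show. A minimal sketch, assuming a made-up plain-text protocol with one command per line (for example `EMOTE happiness`); how that line actually arrives (Bluetooth serial, Wi-Fi, a wired button box) is left open, and `parse_command` and `VALID_EMOTES` are hypothetical names.

```python
# Emote names the helmet knows how to draw (from the basic milestone list).
VALID_EMOTES = {
    "neutral", "happiness", "sadness", "anger", "surprise", "confusion", "thinking",
}


def parse_command(line):
    """Parse one line like 'EMOTE happiness' into an emote name.

    Matching is case-insensitive. Returns None for anything malformed or
    unknown, so a garbled message never crashes the helmet.
    """
    parts = line.strip().split()
    if len(parts) != 2 or parts[0].upper() != "EMOTE":
        return None
    emote = parts[1].lower()
    return emote if emote in VALID_EMOTES else None


for msg in ["EMOTE happiness", "emote Anger\n", "EMOTE hungry", "hello"]:
    print(repr(msg), "->", parse_command(msg))
```

Returning None instead of raising means a bad message simply leaves the current emote in place rather than taking down the helmet’s main loop.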

Vision

With a bunch of LED arrays in the face shield, we’d need a way to preserve the user’s vision. Luckily, we can take inspiration from (read: copy) an existing product: the LED glasses that you can buy right off of Amazon.

These glasses allow partial vision by cutting out the material between the LEDs, much like stereotypical party glasses. We can do the same at eye level by cutting away the backing material between the LEDs.
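How much the wearer can actually see depends on how much material is left after cutting. As a rough back-of-the-envelope model (my own assumption, not a measurement of those glasses): LEDs sit on a square grid with pitch p, each LED blocks a w × w square, and each LED keeps one b-wide bridge of material linking it to its neighbour in the same row. One grid cell then blocks w² + b(p − w) out of p², so the see-through fraction is 1 − (w² + b(p − w)) / p².

```python
def open_fraction(pitch_mm, led_mm, bridge_mm):
    """Rough see-through fraction of a cut-out LED grid.

    Assumed model: each pitch_mm x pitch_mm cell holds one led_mm x led_mm LED
    plus one bridge_mm-wide strip linking it to the next LED in its row;
    everything else is cut away.
    """
    if not (0 < bridge_mm <= led_mm < pitch_mm):
        raise ValueError("need 0 < bridge_mm <= led_mm < pitch_mm")
    blocked = led_mm ** 2 + bridge_mm * (pitch_mm - led_mm)
    return 1 - blocked / pitch_mm ** 2


# Hypothetical numbers: 5 mm LEDs (the footprint of a common 5050 LED), 2 mm bridges.
for pitch in (10, 7):
    print(f"pitch {pitch} mm: {open_fraction(pitch, 5, 2):.0%} see-through")
```

With a 10 mm pitch, about 65% of the panel stays open; tightening the pitch to 7 mm drops that to roughly 41%. So the pitch at eye level is a direct trade-off between emote resolution and how well the wearer can see.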

Helmet Construction

As for the physical helmet itself, I think I can take one of two directions.

- Take an existing helmet, such as a motorcycle helmet, and build my prototype into it, cutting out parts and 3D printing additional parts as needed.

- Design and build a helmet from scratch, using the fabrication methods available to me at the lab.

I think the first approach would be the easiest: it would save a lot of work and design that would otherwise cost me at least a week or two.