Week 14: Wildcard

Assignment

Interfacing with another Robot:

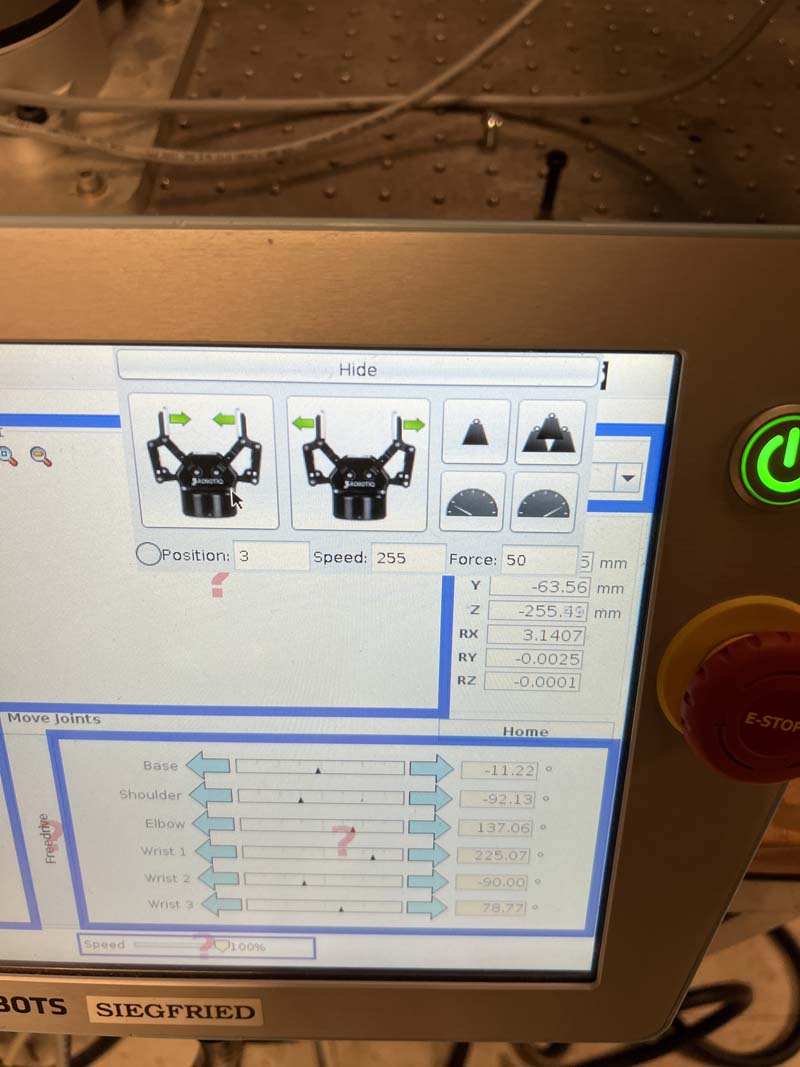

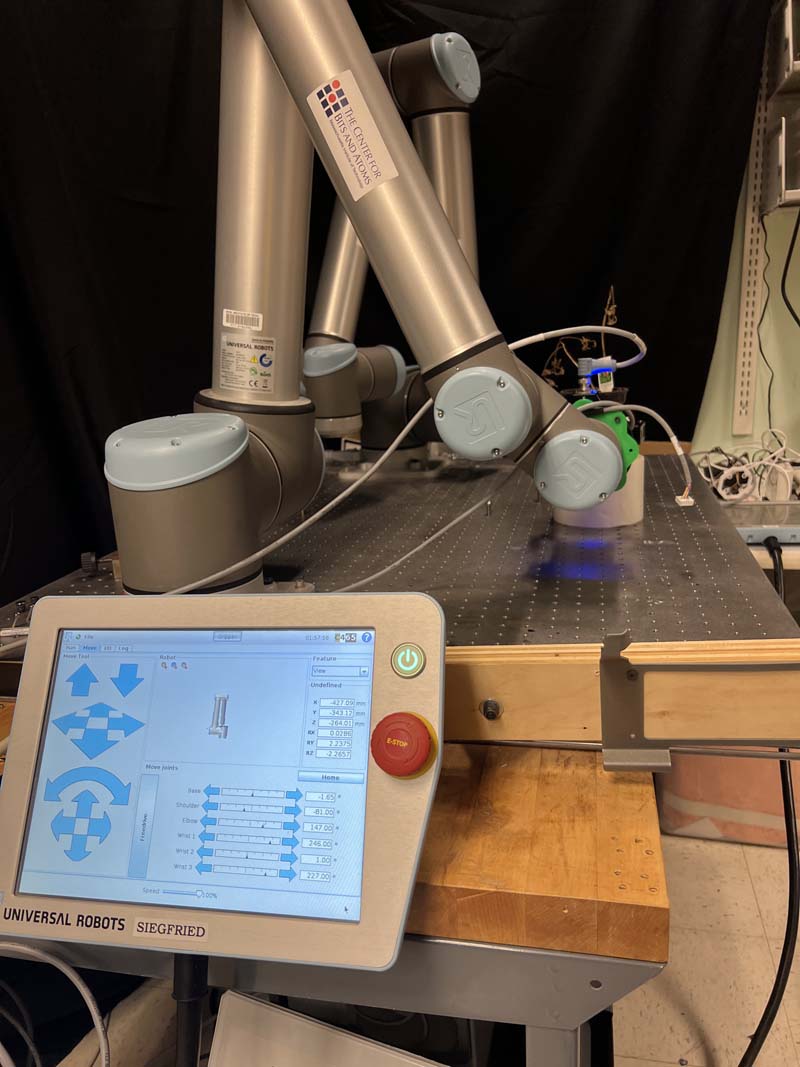

Previously, I had worked with the MyCobot280 as well as another larger Universal robots robotic arm in the Critical Matter group. The CBA lab has a pair of UR10 robotic arms (Siegfried and Roy). There was also a Robotiq End Effector (essentially a grabber) that I thought could be fun to play with to try and figure out how to program the robot to pick up a plant before the dance

Picking up a plant to dance

After some brief training with Alex to understand the interface for the UR10, I started trying to figure out how to program this robot arm in Python and then also how to work with the gripper

Moving the arm was relatively straightforward due to the work I'd done with the MyCobot280.

First we set things up, including the relevant libraries, hard coded values for certain parameters, code to connect to the robot arm on the CBA wi-fi(!) and setting movement accceleration and velocity:

import urx

import time

import numpy as np

import socket

from urx.robotiq_two_finger_gripper import Robotiq_Two_Finger_Gripper

# Fixed parameters

PI = 3.1415926535

movement_plane_height = -0.162

source_coordinate = [0.09, 0.16, -0.192]

lift_coordinate = [0.08, 0.15, 0.042]

close = 200

open = 3

# connect to robot

try:

robot = urx.Robot("192.168.1.53")

print("Initial connection attempt timed out, trying again with a longer timeout...")

except socket.timeout:

print("Initial connection attempt timed out, trying again with a longer timeout...")

# Retry with a longer timeout

#SIEGFRIED

#ROY

robotiqgrip = Robotiq_Two_Finger_Gripper(robot)

robot.set_tcp((0, 0, 0.1, 0, 0, 0))

robot.set_payload(2, (0, 0, 0.1))

acc_cmd = 0.2 # acc. and vel. for all movements

vel_cmd = 0.1 # meters per second

time.sleep(0.2) # leave some time to robot to process the setup commands

# Helper functions for convenience

def deg2rad(deg):

rad = []

for i in range(len(deg)):

rad.append(deg[i] * PI / 180)

return rad

Next we create a function for the end effector which will help us later. If we're not planning to use RX,RY and RZ (the additional 3 DOF) we can comment out the line that starts p_cmd[i]...

def translateEndEffectorGlobal(robot, p_des, p_root):

p_cmd = robot.getl()

for i in range(3): # Only update X, Y, Z (ignore rotation Rx, Ry, Rz)

p_cmd[i] = p_des[i] + p_root[i]

print("Moving to global position: ", p_cmd)

robot.movel(p_cmd, acc=acc_cmd, vel=vel_cmd)

And finally our main sequence to run the operation. In the below example, I initialize the robot gripper, ensure the robot arm is at pre-defined home coordinates (set manually for the specific task of picking up a plant that is placed a specific point), test opening and closing the gripper to ensure it is OPEN when moving, move the robot arm through a series of steps (intentionally a bit of a complicated path for drama), pick up the plant and position it in space ready to dance with satellites:

if __name__ == "__main__":

try:

#initialise

# robotiqgrip = Robotiq_Two_Finger_Gripper(robot)

# robot.set_tcp((0, 0, 0.1, 0, 0, 0))

# robot.set_payload(2, (0, 0, 0.1))

# time.sleep(0.2)

# Step 1: Start at home with gripper closed

print("Step 1: Moving to origin...")

#OG ORIGIN j_origin = deg2rad([0, -90, 135, 225, -90, 90]) # Joint angles for origin

j_origin = deg2rad([-25, -90, 135, 225, -90, 160])

robot.movej(j_origin, acc=acc_cmd, vel=vel_cmd)

time.sleep(3)

p_root = robot.getl() # Record the global origin position

print(f"Origin position: {p_root}")

time.sleep(2)

print("test CLOSE gripper")

robotiqgrip.gripper_action(200) # Fully close gripper

time.sleep(5)

print("test OPEN gripper")

robotiqgrip.gripper_action(10) # Fully close gripper

time.sleep(3)

print("SAFETY OPEN gripper")

robotiqgrip.gripper_action(10) # Fully close gripper

print("Step 1a: move to safe pos.")

j_safe = deg2rad([-25, -90, 125, 225, -90, 160])

robot.movej(j_safe, acc=acc_cmd, vel=vel_cmd)

time.sleep(3)

# Step 2: move to grab plant

print("Step 2: move to grab plant pos...")

j_grip = deg2rad([-1.65, -81, 147, 246, 1, 227]) # Joint angles for origin

robot.movej(j_grip, acc=acc_cmd, vel=vel_cmd)

time.sleep(3)

# Step 3: grab plant

print("grab plant")

p_grab = robot.getl() #record claw position

translateEndEffectorGlobal(robot, [0, -0.03, 0], p_grab)

time.sleep(3)

robotiqgrip.gripper_action(90) # close slightly

#step 4: dance

print("let's dance")

p_fly = robot.getl() #record claw position

translateEndEffectorGlobal(robot, [0, 0, 0.25], p_fly)

time.sleep(2)

finally:

robot.close()

print("Robot disconnected.")

To get these positions took a lot of trial and error. Setting relative positions didn't always work as expected in the main sequence, simply because of the limits to the robot's movements which were initially unclear to me. Understanding the positional coordinates was also quite unintuitive compared to working with the smaller robot arm as the X and Y coordinates for lateral movement were inversed both in terms of their directions and the logical / typical way that positive and negative work! Drawing that out on some paper helps.

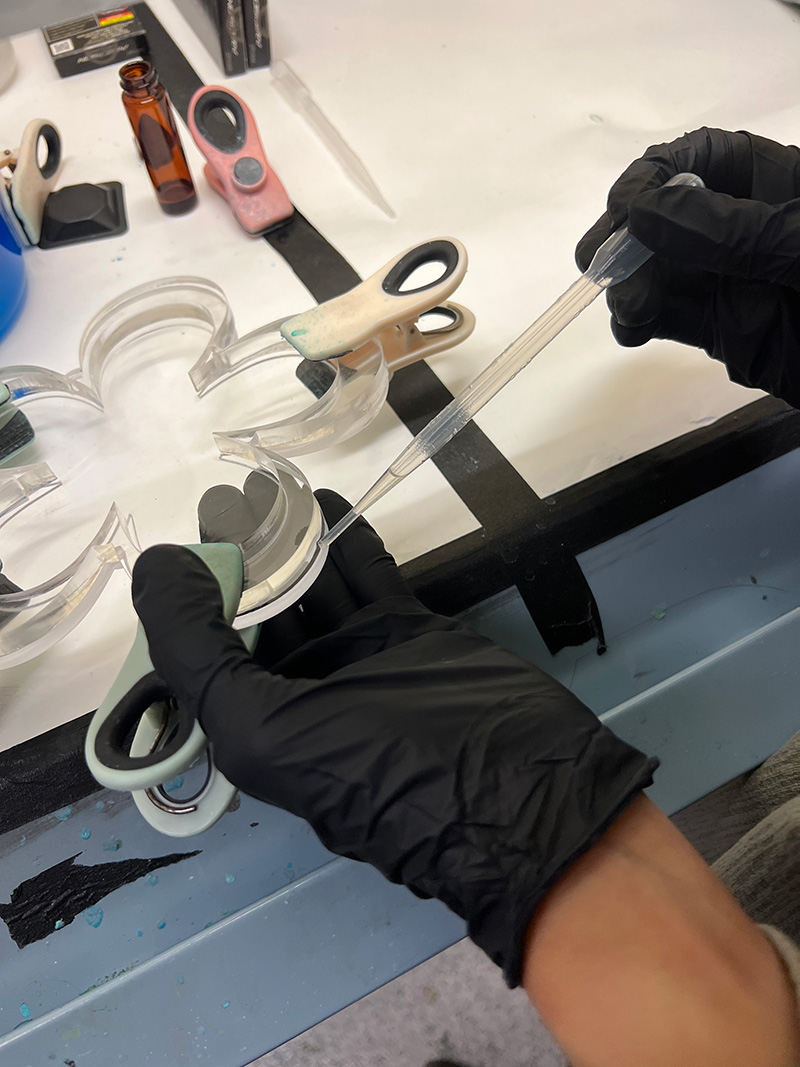

Due to the way the end effector works, I also had to raise the plant on a platform. Ultimately, the end effector design was also unsuitable to grasp a plant pot. This is easily solved by creating a new design. However, it was difficult to access the CBA lab in the last week, so instead I went back to the Cobot280. I also felt that the plant pot being carried while slightly comical was not very aesthtic. The work would look much more appealing and poetic if it was simply a singly flower. So I designed an end effector for the MyCobot280 that was a slight parody of the the Robotiq gripper. While capable of grasping, it also featured a small circular slot designed for the stem of flowers, that could be adjusted to a range of 4mm to 10mm which from my testing with various flowers seemed pretty good.

The design featured mounting holes for the effector to connect to the MyCobot280 which uses Lego connectors to fasten. Of course this meant the design had to be light and small.

I also 3D printed and adapted a fixing plate for the Robot arm. To finalise this stage of the project to be "Neil-ready" I designed and milled a PCB which was by now a relatively straightforward task. My workflow was to design the electronics layout which was simply a XIAO ESP32S3 connected to a GT-U7 GPS module via RX and RY pins. I then exported my gerber files, used Quentin's tool to get PNGs, brought those into Illustrator where I drew a custom Flower shape, then used Photoshop to remove negative space for the drill files and exported everything at 1000 DPI for mods. In Mods I set wider tabs than usual due to the shape of the board. I then soldered female connects to my board using flux to help speed things up, connected everything up and tested outside to see if I could get some GPS signals (I could).

I then setup the XIAO on Wi-Fi to be able to stream coordinates from the GPS module to the robot arm (via my Macbook for now). I created a suitably flowery case for the PCB and presented GND_CTRL for the first time at demo day as a quasi secondary final project.