Assignment Overview

This week covers:

• Introduction to the course and fab lab

• Computer-aided design (CAD) principles and practices

• Parametric design concepts

Learning Objectives

• Understand the course structure and expectations

• Learn basic CAD software and parametric design

• Create and document a simple 2D/3D design

• Set up version control and documentation workflow using Git

Work Completed

Introduction

• Attended first class session

• Reviewed course syllabus and expectations

• Set up development environment

Computer-Aided Design

• Explored CAD software options

• Created initial parametric design

• Documented design process

Documentation

• Coded my personal website using Cursor

• Created this assignment page

• Established version control workflow via GitLab

Detailed Documentation

Project Overview

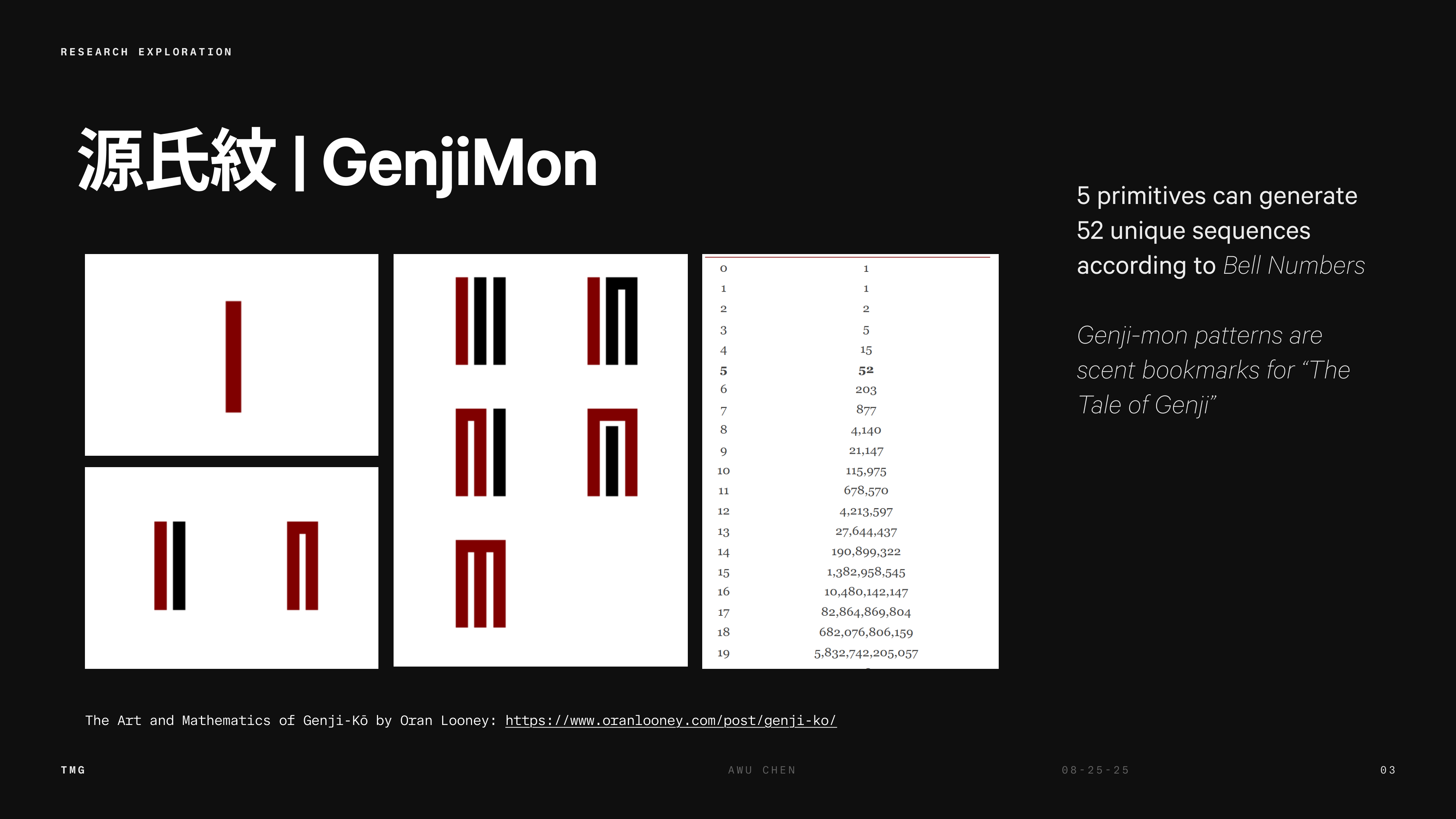

This week's assignment focused on learning computer-aided design (CAD) principles and creating a parametric 3D model. The project involved designing a 3D Genji Ko representation of 6 different incense sequences using 3D modeling software and documenting the entire design process.

Historical Context: Genji Mon Pattern

The Genji Mon (源氏紋) is a traditional Japanese family crest pattern with deep historical significance. The pattern represents the Genji clan, one of the most influential families in Japanese history, particularly during the Heian period (794-1185).

Historical Significance:

• Origins: The Genji Mon pattern dates back to the Heian period and is associated with the Minamoto clan (源氏)

• Cultural Impact: Closely tied to "The Tale of Genji" (源氏物語), often described as the world's first novel

• Design Elements: The pattern typically features geometric arrangements representing different aspects of court life

• Modern Usage: Still used in traditional Japanese arts, textiles, and ceremonial contexts

Pattern Characteristics:

• Geometric Structure: Based on traditional Japanese geometric principles

• Symmetry: Highly symmetrical design reflecting balance and harmony

• Cultural Symbolism: Each element carries specific cultural meaning

• Artistic Tradition: Part of Japan's rich textile and design heritage

Historical Images

Historical Genji Mon pattern showing traditional geometric design elements and cultural significance

Variations of Genji Mon patterns demonstrating the geometric complexity and artistic tradition

Mathematical analysis of Genji Mon pattern showing the underlying geometric principles and bell number relationships
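The Bell number relationship mentioned in the caption above comes from the incense game Genji-kō: five incense samples are smelled in turn, and each pattern records which of them were judged to be the same scent. Every possible grouping of five items is one pattern, so the number of patterns is the Bell number B5 = 52. As a quick check of that arithmetic (not part of the CAD workflow), the Python sketch below enumerates the groupings directly and also computes Bell numbers with the Bell triangle.

```python
from typing import Iterator, List


def set_partitions(items: List[int]) -> Iterator[List[List[int]]]:
    """Yield every way to split `items` into non-empty groups."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # Put `first` into each existing group in turn...
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        # ...or give it a group of its own.
        yield [[first]] + partition


def bell_numbers(n: int) -> List[int]:
    """Return [B0, B1, ..., Bn] using the Bell triangle."""
    row = [1]
    bells = [1]
    for _ in range(n):
        # Each new row starts with the last entry of the previous row.
        new_row = [row[-1]]
        for value in row:
            new_row.append(new_row[-1] + value)
        row = new_row
        bells.append(row[0])
    return bells


incense = [1, 2, 3, 4, 5]
print(len(list(set_partitions(incense))))  # 52
print(bell_numbers(5))                     # [1, 1, 2, 5, 15, 52]
```

The enumeration is useful when you want the actual patterns (for example, to pick the 6 sequences to model); the triangle is the cheaper route when only the count matters.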

Design Files

• geniji-mon-3D.step - 3D model of the Genji Mon incense holder, in STEP format for broad CAD compatibility and manufacturing readiness

Design Process:

1. Research Phase: Studied traditional Genji Ko designs and geometric principles

2. Sketching: Created initial 2D sketches to establish proportions and layout based on traditional design

3. 3D Modeling: Used parametric design methodology

4. Refinement: Iteratively refined the design based on geometric principles

5. Export: Saved in STEP format for maximum compatibility
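To illustrate what a parametric approach can mean for this motif, here is a minimal sketch, assuming a simplified drawing rule, that turns one grouping of five incense samples into 2D rectangles driven by a few dimensions. The parameter names and defaults (`bar_width`, `spacing`, `height`, `bridge_thickness`, `gap`), the left-to-right bar numbering, and the rule that a nested bridge steps down one level are assumptions for illustration. This is not the feature tree of the actual Fusion 360 model, and interleaved groupings are rejected rather than drawn.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Rect:
    x: float  # left edge
    y: float  # bottom edge
    w: float  # width
    h: float  # height


def genji_glyph(partition: Sequence[Sequence[int]], n_bars: int = 5,
                bar_width: float = 2.0, spacing: float = 6.0, height: float = 20.0,
                bridge_thickness: float = 2.0, gap: float = 1.0) -> List[Rect]:
    """2D rectangles (bridges first, then bars) for a simplified Genji Ko glyph.

    Bars are numbered 0..n_bars-1 from left to right (an assumption). Bars in the
    same group are joined by a horizontal bridge; each level of nesting drops a
    bridge by one `bridge_thickness + gap` step.
    """
    if sorted(i for group in partition for i in group) != list(range(n_bars)):
        raise ValueError("partition must use each bar index exactly once")
    bridges = [sorted(group) for group in partition if len(group) > 1]
    owner = {i: b for b, members in enumerate(bridges) for i in members}

    def contains(outer: List[int], inner: List[int]) -> bool:
        return outer[0] < inner[0] and inner[-1] < outer[-1]

    # Nesting depth of each bridge: 0 = top row, 1 = one step lower, ...
    level = [sum(contains(other, mine) for other in bridges) for mine in bridges]
    step = bridge_thickness + gap

    def bridge_top(b: int) -> float:
        return height - level[b] * step

    rects = []
    for b, members in enumerate(bridges):
        x0 = members[0] * spacing
        x1 = members[-1] * spacing + bar_width
        rects.append(Rect(x0, bridge_top(b) - bridge_thickness, x1 - x0, bridge_thickness))

    for i in range(n_bars):
        # Bridges (other than the bar's own) that pass over this bar.
        over = [b for b, m in enumerate(bridges) if m[0] < i < m[-1] and owner.get(i) != b]
        if i in owner:
            if any(not contains(bridges[b], bridges[owner[i]]) for b in over):
                raise ValueError(f"bar {i}: interleaved groups are not handled in this sketch")
            top = bridge_top(owner[i])  # bar rises to the top of its own bridge
        else:
            # Unpaired bar: full height, or just below the lowest bridge passing over it.
            top = min((bridge_top(b) - bridge_thickness - gap for b in over), default=height)
        rects.append(Rect(i * spacing, 0.0, bar_width, top))
    return rects


for r in genji_glyph([[0, 3], [1, 2], [4]]):
    print(r)
```

Changing `spacing` or `height` regenerates every rectangle consistently, which is the point of a parametric setup. In CAD the same relationships would live in sketch dimensions and constraints, with each profile then extruded.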

Images

High-resolution rendering of the 3D model - Shows geometric accuracy and design intent of the parametric model

Learning Outcomes

CAD Skills Developed:

• Parametric Modeling: Learned to use constraints and relationships in 3D design

• Geometric Construction: Applied traditional design principles to modern CAD

• File Formats: Understood the importance of STEP format for interoperability

• Design Documentation: Practiced documenting the design process

Challenges and Solutions:

1. Challenge: Maintaining symmetry in complex geometric patterns

Solution: Used parametric constraints and mirroring operations

2. Challenge: Translating 2D traditional design to 3D model

Solution: Extruded 2D profiles and used boolean operations

Software and Tools Used

• Primary CAD Software: Fusion 360

• File Conversion: STEP export functionality

• Documentation: Markdown for process documentation

• Image Compression: An online JPEG compression tool

Future Applications

This 3D model serves as a foundation for:

• Digital Fabrication: Can be 3D printed or laser cut

Final Project Ideations

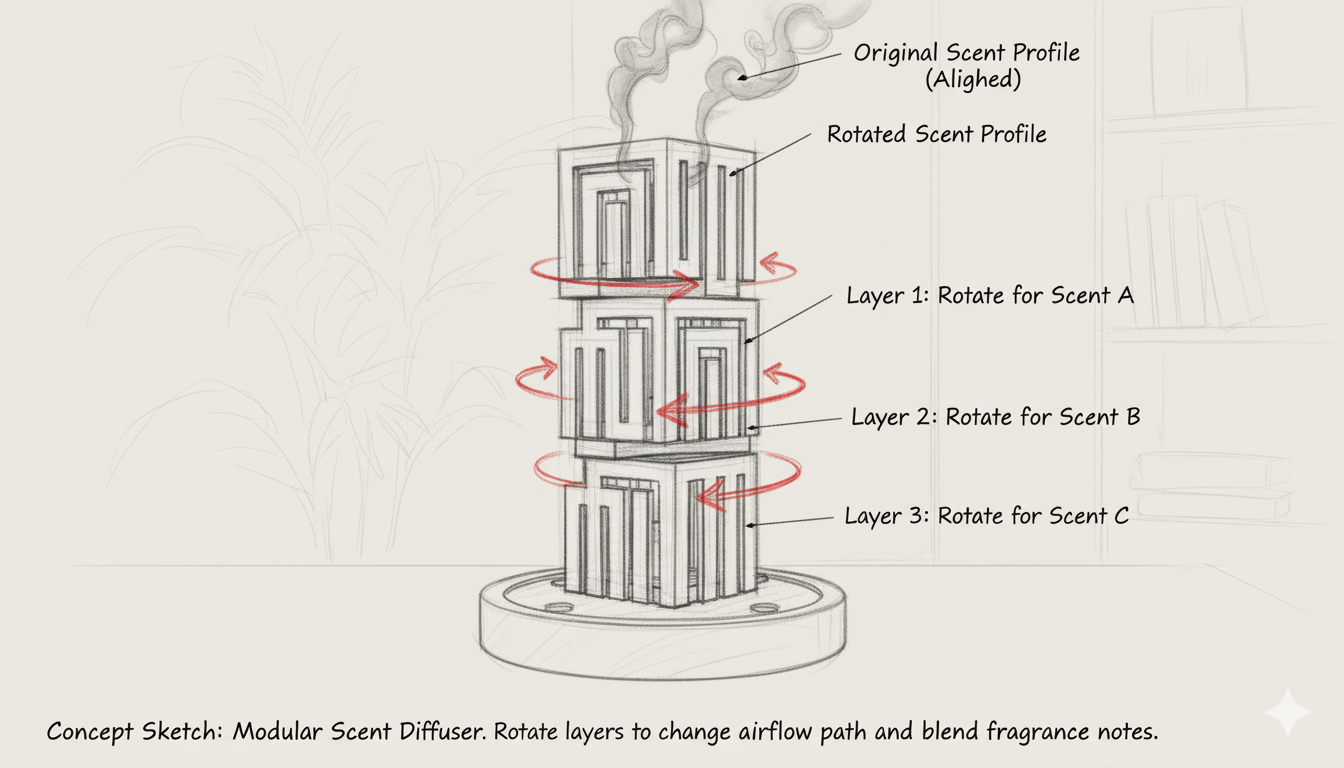

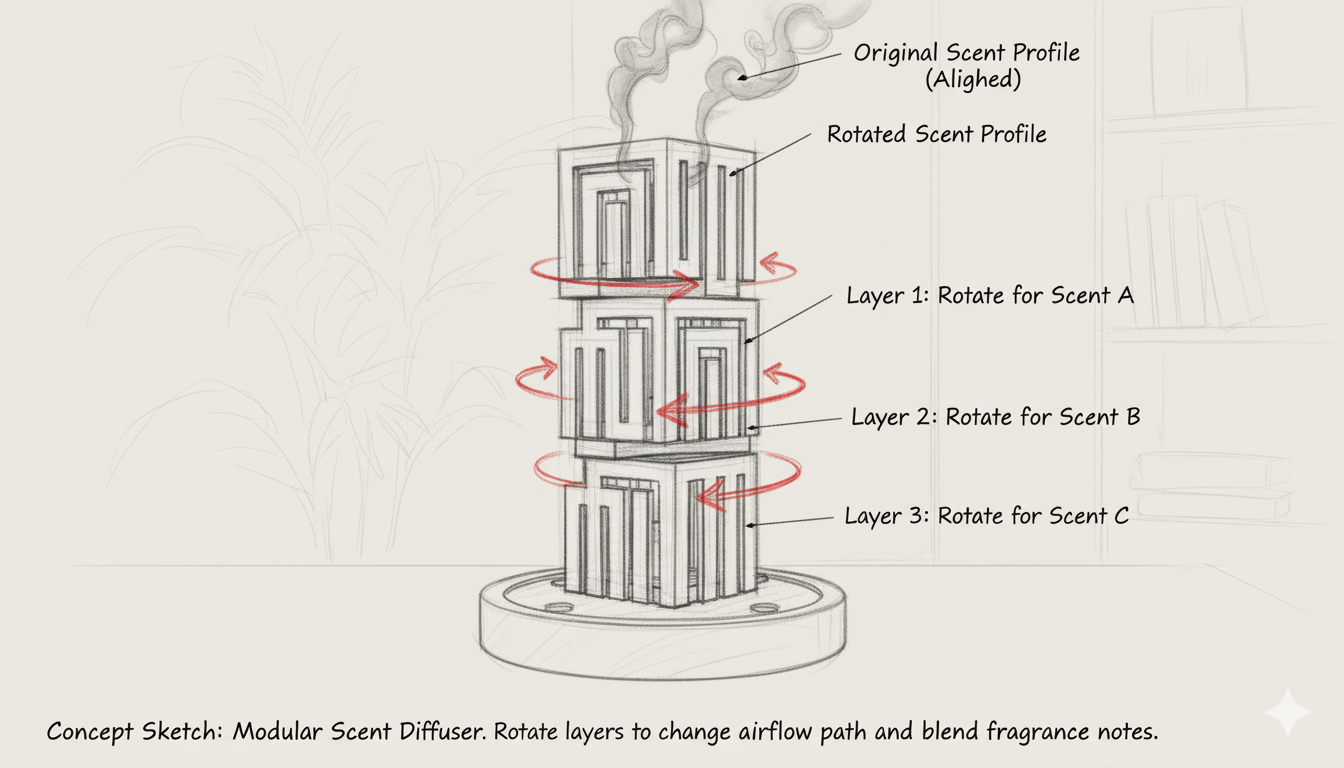

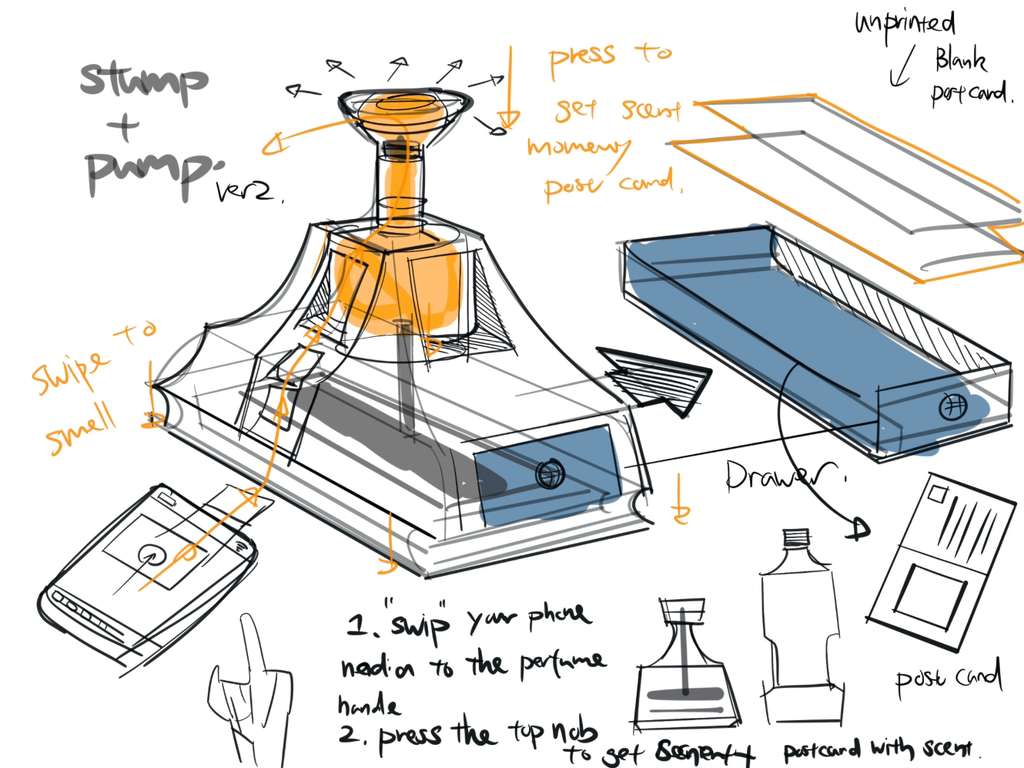

Ideation 1: Incense Diffuser with Genji Mon Pattern

Building on the Genji Mon pattern design, this ideation explores a digitally actuated incense diffuser that incorporates the traditional geometric patterns.

Project Vision:

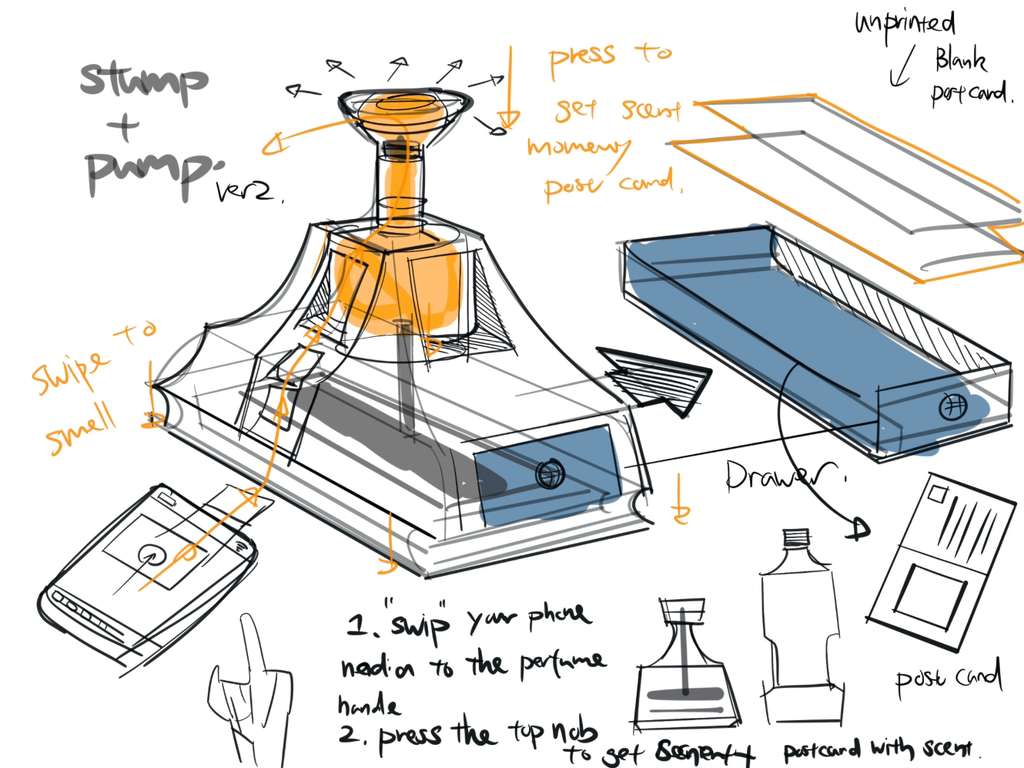

Detailed sketch for Genji 3D incense holder showing design development and functional considerations

3D rendering of incense diffuser concept incorporating Genji Mon patterns

• Cultural Integration: Combining traditional Japanese design with modern 3D printing technology

• Functional Art: Creating a beautiful object that serves a practical purpose

• Pattern Application: Using the Genji Mon geometry to create ventilation and aesthetic features

• Material Exploration: Testing different materials (resin, ABS) for optimal functionality

Design Considerations:

• Ventilation: Genji Mon pattern provides natural airflow for incense diffusion

• Heat Management: Material selection for heat resistance and safety

• Aesthetic Appeal: Traditional patterns create visual interest and cultural connection

• Manufacturability: 3D printing allows for complex geometries impossible with traditional methods

Final Project Concept

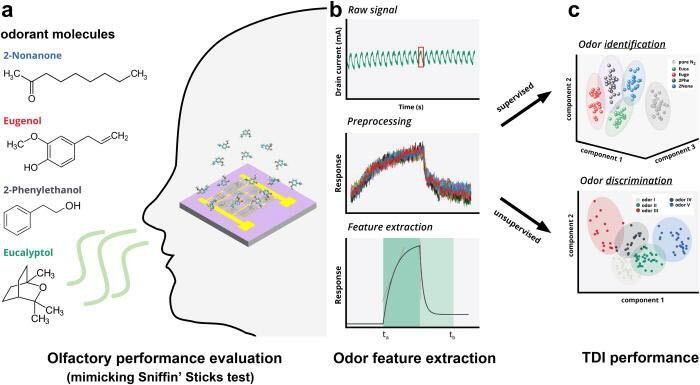

Ideation 2: LIG Electronic Nose

Concept Overview

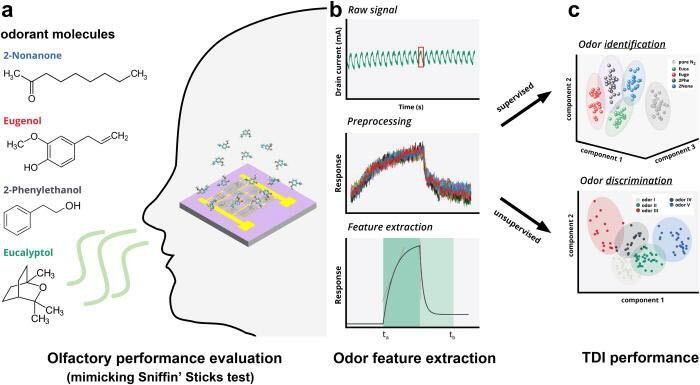

Exfoliated graphene structure used in machine-learning-enabled electronic nose systems. Image from: Machine-learning-enabled graphene-based electronic nose for microplastic detection

Laser-induced graphene sensor pattern test showing the porous graphene structure created by laser processing. This pattern forms the foundation of the sensor array that will detect scent molecules.

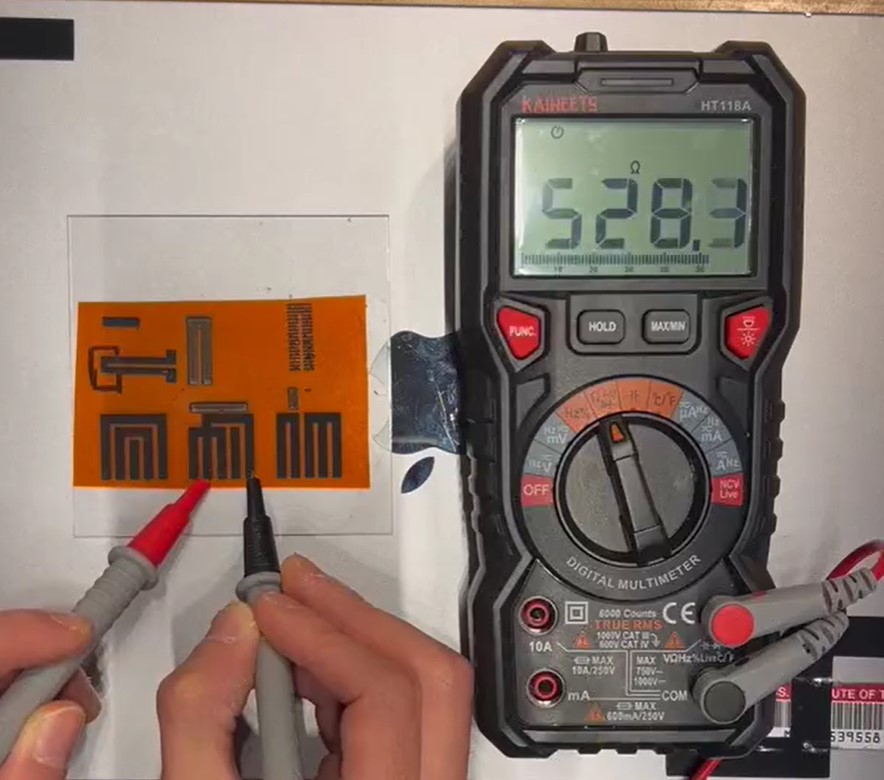

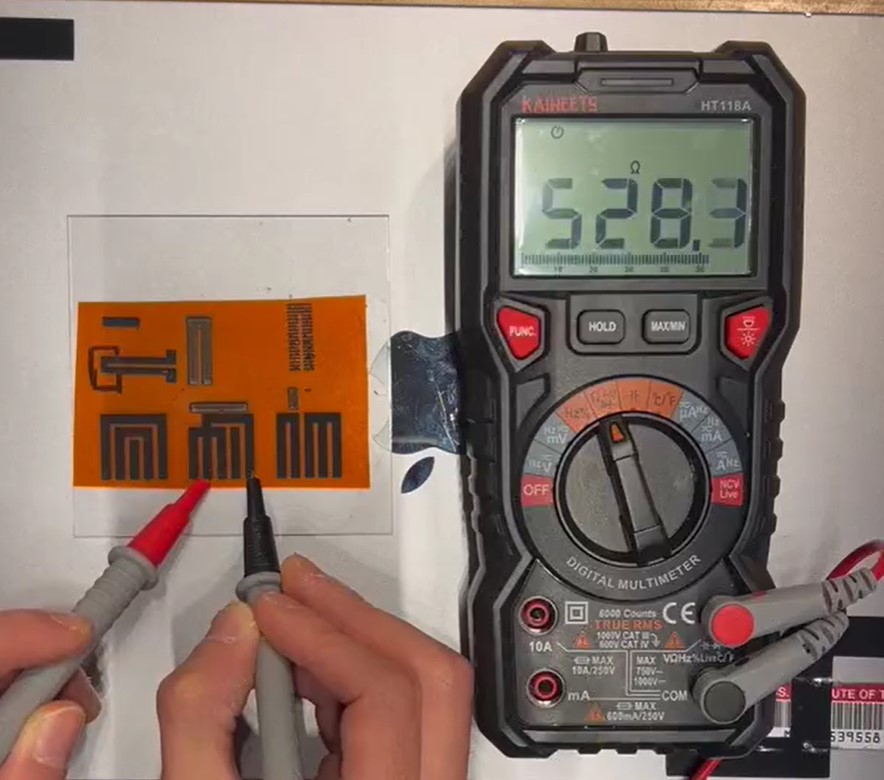

LIG sensor resistor testing demonstrating the electrical response characteristics of the graphene sensor array. The sensor array generates distinct electrical signal patterns when exposed to different scent molecules, which will be analyzed by AI to identify and classify scents.
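Chemiresistive sensors such as LIG traces are usually characterized by their relative resistance change, ΔR/R0 = (R - R0)/R0, where R0 is the baseline resistance in clean air. A minimal sketch of turning raw readings from a multi-channel array into one response pattern follows; the channel count, the window averaging and the readings are hypothetical, not measurements from the test shown above.

```python
from statistics import mean
from typing import List, Sequence


def relative_response(baseline: Sequence[Sequence[float]],
                      exposure: Sequence[Sequence[float]]) -> List[float]:
    """Per-channel relative resistance change (R - R0) / R0.

    `baseline` and `exposure` are lists of samples; each sample holds one
    resistance reading (ohms) per sensor channel. R0 and R are the per-channel
    averages over the clean-air and exposure windows.
    """
    n_channels = len(baseline[0])
    r0 = [mean(sample[c] for sample in baseline) for c in range(n_channels)]
    r = [mean(sample[c] for sample in exposure) for c in range(n_channels)]
    if any(value == 0 for value in r0):
        raise ValueError("baseline resistance must be non-zero")
    return [(r[c] - r0[c]) / r0[c] for c in range(n_channels)]


# Hypothetical three-channel readings in ohms: clean air, then during exposure.
baseline = [[100.0, 200.0, 50.0], [100.0, 200.0, 50.0]]
exposure = [[110.0, 190.0, 50.0], [110.0, 190.0, 50.0]]
print(relative_response(baseline, exposure))  # [0.1, -0.05, 0.0]
```

The resulting vector (one signed value per channel) is the "electrical signal pattern" a classifier would work on.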

LIG Fabrication Methodology

This ideation explores the development of a next-generation AI electronic nose using laser-induced graphene (LIG) sensor arrays. The system draws on research from Korea's Daegu Gyeongbuk Institute of Science and Technology (DGIST), which developed a porous LIG sensor array that functions as a "next-generation AI electronic nose." This technology enables a system to distinguish scents much as the human olfactory system does and to analyze them using artificial intelligence.

How LIG Sensors Work

The LIG sensor array converts scent molecules into electrical signals, and AI models are trained on the unique patterns those signals form. This technology holds great promise for applications in personalized health care, the cosmetics industry, and environmental monitoring. While conventional electronic noses (e-noses) have already been developed and used in areas such as food safety and gas detection in industrial settings, they struggle to distinguish subtle differences between similar smells or analyze complex scent compositions. For instance, distinguishing among floral perfumes with similar notes or detecting the faint odor of fruit approaching spoilage remains challenging for current systems. This gap has driven demand for next-generation e-nose technologies with greater precision, sensitivity, and adaptability.

Biological Inspiration: Combinatorial Coding

The research team was inspired by the biological mechanism known as combinatorial coding, in which a single odorant molecule activates multiple olfactory receptors to create a unique pattern of neural signals. By mimicking this principle, the sensors respond to scent molecules by generating distinct combinations of electrical signals. The AI system learns these complex signal patterns to recognize and classify a wide variety of scents; the researchers describe the result as a high-performance artificial olfaction platform that surpasses existing technologies.
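The combinatorial-coding idea can be illustrated without deep learning: each scent produces a characteristic response vector across the array, and an unknown sample is labeled by the training pattern it most resembles. The sketch below uses a nearest-centroid classifier with cosine similarity. It is a deliberately simple stand-in, not the model used in the DGIST work, and the scent names and response values are made up.

```python
import math
from typing import Dict, List, Sequence


def unit(v: Sequence[float]) -> List[float]:
    """Scale a response vector to length 1 so only its shape (the pattern) remains."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("response vector is all zeros")
    return [x / norm for x in v]


def train_centroids(samples: Dict[str, List[Sequence[float]]]) -> Dict[str, List[float]]:
    """Average the unit-length training patterns of each scent into one centroid."""
    centroids = {}
    for label, vectors in samples.items():
        patterns = [unit(v) for v in vectors]
        n = len(patterns[0])
        centroids[label] = unit([sum(p[c] for p in patterns) / len(patterns) for c in range(n)])
    return centroids


def classify(response: Sequence[float], centroids: Dict[str, List[float]]) -> str:
    """Return the scent whose centroid has the highest cosine similarity to `response`."""
    u = unit(response)
    return max(centroids, key=lambda label: sum(a * b for a, b in zip(u, centroids[label])))


# Made-up ΔR/R0 patterns for a three-channel array.
training = {
    "rose": [[0.30, 0.10, 0.02], [0.60, 0.21, 0.03]],
    "sandalwood": [[0.05, 0.25, 0.20], [0.04, 0.50, 0.38]],
}
centroids = train_centroids(training)
print(classify([0.9, 0.3, 0.05], centroids))  # rose
```

Scaling every vector to unit length lets a weak and a strong exposure to the same scent map to the same pattern, under the assumption that concentration mostly scales the response rather than changing its shape.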

Fabrication and Performance

The DGIST electronic nose uses a laser to form graphene and incorporates a cerium oxide nanocatalyst to create a sensitive sensor array. This single-step laser fabrication method eliminates the need for complex manufacturing equipment and enables high-efficiency production of integrated sensor arrays. In the researchers' performance tests, the device identified nine fragrances commonly used in perfumes and cosmetics with over 95% accuracy. It could also estimate the concentration of each scent, making it suitable for fine-grained olfactory analysis.
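For concentration, one common approach with chemiresistive gas sensors is an empirical power-law calibration, response ≈ a · C^b, which becomes a straight line after taking logarithms. The sketch fits that line by ordinary least squares and then inverts it. The power-law form, the units and the calibration points are assumptions for illustration, not the method reported for the cerium-oxide array.

```python
import math
from typing import Sequence, Tuple


def fit_power_law(conc: Sequence[float], response: Sequence[float]) -> Tuple[float, float]:
    """Fit response = a * conc**b by least squares on log(response) vs log(conc)."""
    if any(c <= 0 for c in conc) or any(r <= 0 for r in response):
        raise ValueError("concentrations and response magnitudes must be positive")
    xs = [math.log(c) for c in conc]
    ys = [math.log(r) for r in response]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx               # slope in log-log space is the exponent
    a = math.exp(my - b * mx)   # intercept in log-log space is log(a)
    return a, b


def estimate_concentration(response: float, a: float, b: float) -> float:
    """Invert the calibration curve: conc = (response / a) ** (1 / b)."""
    return (response / a) ** (1.0 / b)


# Synthetic calibration data (hypothetical ppm) that follows response = 0.02 * conc**0.5 exactly.
conc = [10.0, 20.0, 50.0, 100.0]
response = [0.02 * c ** 0.5 for c in conc]
a, b = fit_power_law(conc, response)
print(round(a, 4), round(b, 4))                                     # 0.02 0.5
print(round(estimate_concentration(0.02 * 30.0 ** 0.5, a, b), 2))  # 30.0
```

Because ΔR/R0 can be negative for some channels, the fit would use its magnitude; each scent would typically get its own calibration curve.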

LIG Sensor Development for This Project

The LIG sensor fabrication process for this project builds upon the laser-induced graphene research and documentation by Wedyan Babatain from the HTMAA 2022 class. Her comprehensive work on LIG fabrication using CO2 lasers at MIT CBA provides the foundational methodology for creating the porous graphene sensor arrays used in this project.

The fabrication process involves laser irradiation of polyimide (Kapton) sheets to create the conductive graphene structures that form the basis of the sensor array. The single-step laser fabrication method eliminates the need for complex manufacturing equipment and enables high-efficiency production of integrated sensor arrays.

For detailed information on LIG fabrication techniques, parameters, and applications, see: Wedyan Babatain's LIG Documentation

Integration with Incense Diffuser

The LIG sensor array will be integrated into the incense diffuser to detect and analyze the scents being released. As different incense types are burned, the sensor array will:

• Detect Scent Molecules: Convert volatile organic compounds into electrical signals

• Generate Signal Patterns: Create unique electrical signatures for each scent type

• AI Classification: Use machine learning to identify and classify scents with high accuracy

• Concentration Estimation: Measure the intensity of scents being released

• Real-time Monitoring: Provide continuous feedback about the incense diffusion process
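For the real-time monitoring step, a raw resistance stream will likely be noisy. A minimal sketch, assuming a single channel with a known clean-air baseline `r0`, smooths the response with an exponential moving average and flags a detection once the smoothed value crosses a threshold. The `alpha` and `threshold` values are hypothetical tuning choices, not calibrated ones.

```python
from typing import Iterable, Iterator, Tuple


def monitor(readings: Iterable[float], r0: float, alpha: float = 0.2,
            threshold: float = 0.05) -> Iterator[Tuple[float, bool]]:
    """Yield (smoothed ΔR/R0, scent_detected) for each new resistance reading."""
    smoothed = None
    for r in readings:
        response = (r - r0) / r0
        # Exponential moving average: the new value is weighted by alpha, history by 1 - alpha.
        smoothed = response if smoothed is None else alpha * response + (1 - alpha) * smoothed
        yield smoothed, abs(smoothed) >= threshold


readings = [100.0, 100.0, 112.0, 112.0, 112.0, 112.0]  # hypothetical ohms
print([detected for _, detected in monitor(readings, r0=100.0)])
# [False, False, False, False, True, True]
```

A smaller `alpha` rejects more noise but reacts more slowly, which matters when incense is lit or extinguished.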

This integration transforms the incense diffuser from a passive device into an intelligent system that can recognize, analyze, and provide feedback about the scents it produces, creating a bridge between traditional incense ceremony practices and modern sensor technology.

Ideation 3: Tangible Scent Interface with MultiModal AI

Concept Overview

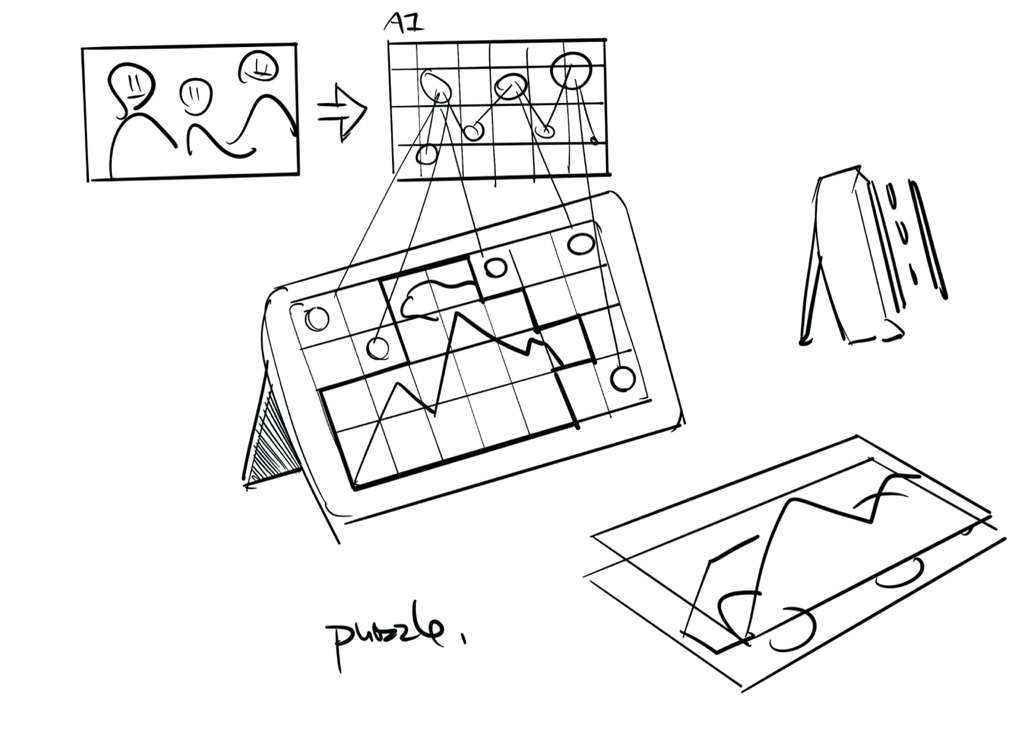

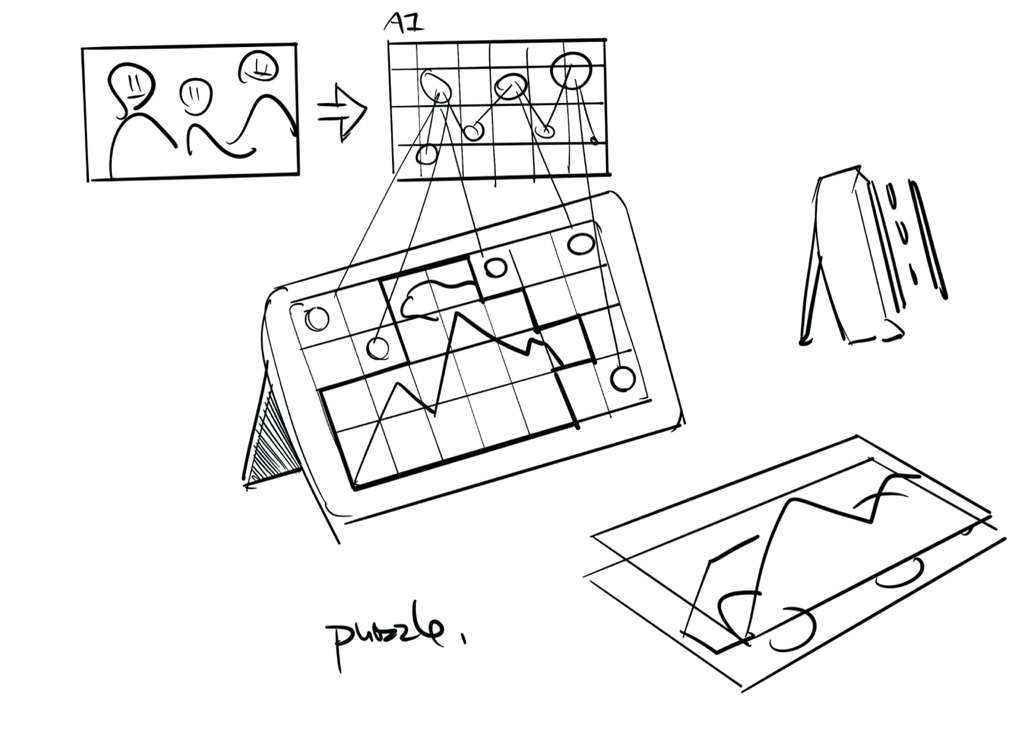

Concept sketch for the Tangible Scent Interface showing the integration of visual images with scent mapping.

Exploration of the visual-scent mapping concept, demonstrating how gaze tracking and object isolation work together to create synchronized sensory experiences

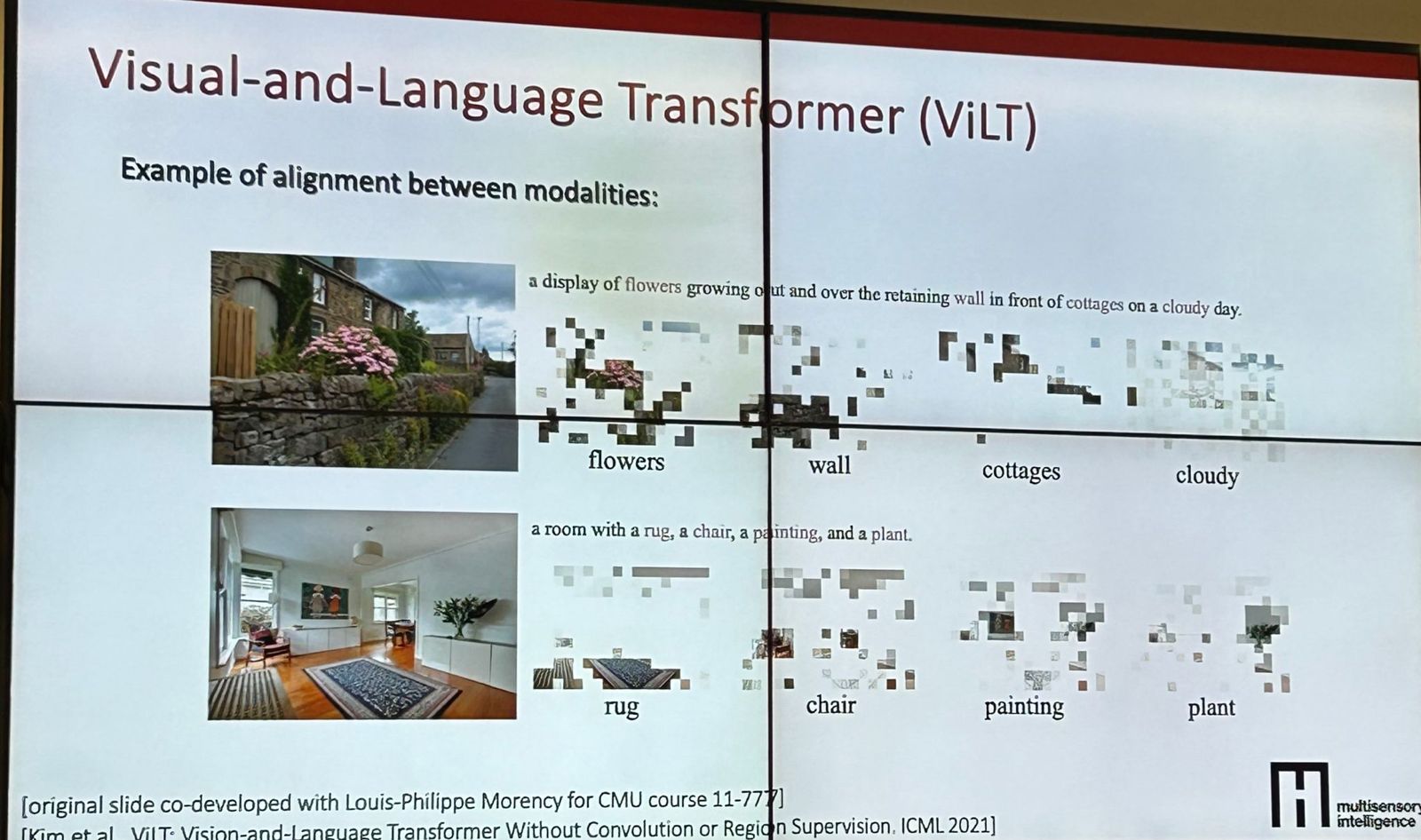

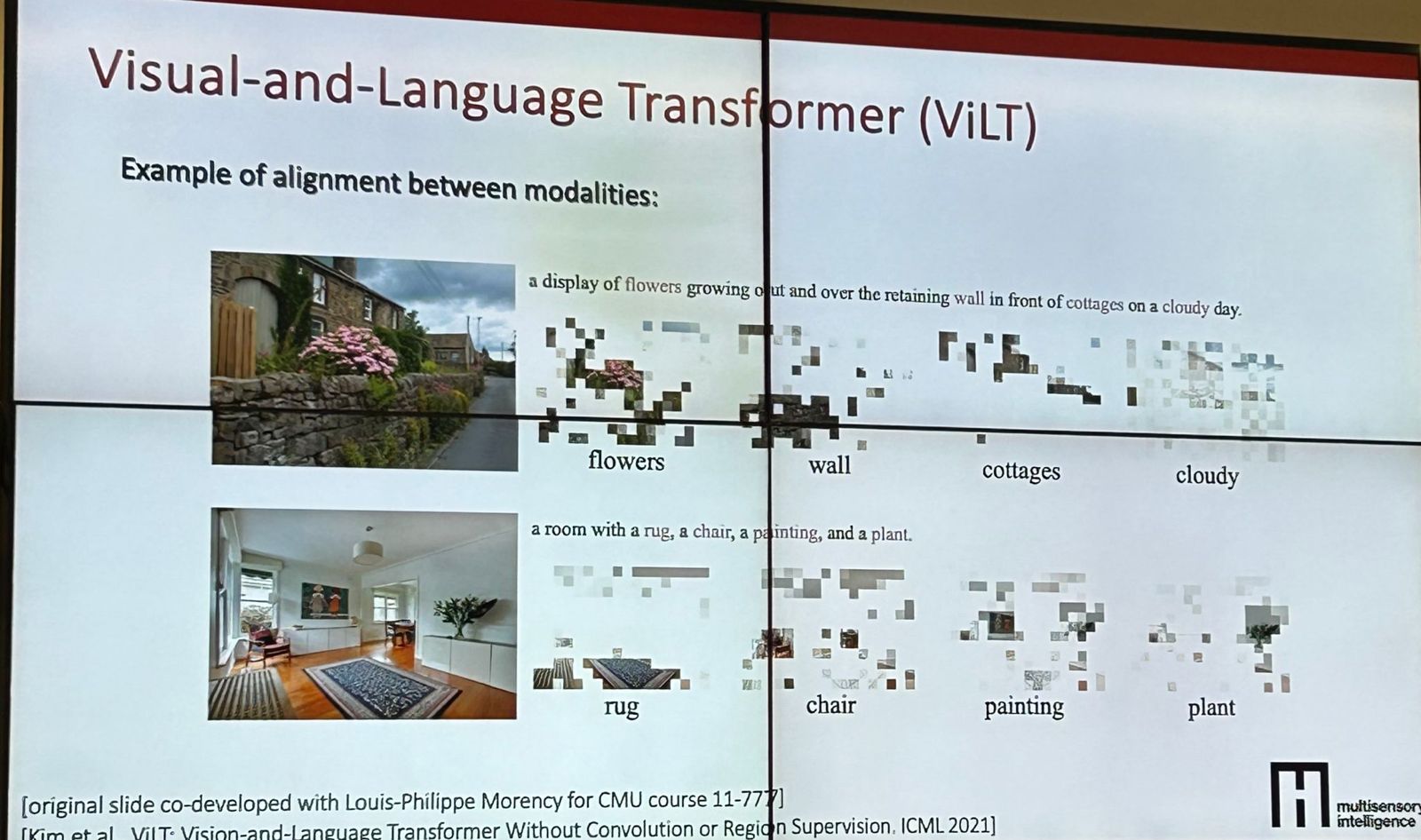

AI model architecture for the Tangible Scent Interface, showing how Vision-Language Models integrate with scent emission systems to create pixel-to-pixel visual-scent mapping

Technical Implementation

Ideation 3 emerged from a collaborative group project ideation session in the Tangible Interface class.

Core Technology: Vision-Language Model Integration

The system uses a vision-language model (VLM) to isolate objects from an image that have strong scent associations. Each pixel of the image is mapped to a corresponding pixel of scent, creating a direct spatial relationship between what the user sees and what they smell. When the user's gaze locks onto a specific pixel or object, the visuals transform to focus on the corresponding pixels (e.g., all the flowers in the image), while everything else fades away.

Gaze-Locked Visual Transformation

The interaction model works as follows:

• Initial State: The system displays an image with multiple scent-associated elements (flowers, fruits, spices, etc.)

• Gaze Detection: Eye-tracking technology monitors where the user is looking

• Object Isolation: The VLM identifies all similar objects in the image based on the gazed pixel

• Visual Transformation: The image transforms - all matching objects (e.g., all flowers) become highlighted and focused, while other elements fade away

• Scent Emission: The corresponding scent pixel activates, releasing the appropriate aroma for the identified object

• Audio Fading: Optionally, audio elements may also fade away to enhance the focus on the visual-scent connection
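The interaction depends on deciding when the gaze has "locked onto" a pixel. One widely used definition in gaze interaction is dwell time: the gaze stays within a small radius for a minimum duration. The sketch below assumes gaze samples arrive as `(timestamp_s, x, y)` tuples; `radius_px` and `dwell_s` are hypothetical values, and the eye tracker itself is not modeled.

```python
import math
from typing import Iterable, Optional, Tuple

Sample = Tuple[float, float, float]  # (timestamp in seconds, x in px, y in px)


def detect_gaze_lock(samples: Iterable[Sample], radius_px: float = 30.0,
                     dwell_s: float = 0.5) -> Optional[Tuple[int, int]]:
    """Return the pixel where gaze first dwells for `dwell_s`, or None if it never does.

    A fixation candidate starts at the first sample of a run; any sample farther than
    `radius_px` from that starting point restarts the timer at the new position.
    """
    anchor: Optional[Sample] = None
    for t, x, y in samples:
        if anchor is None or math.hypot(x - anchor[1], y - anchor[2]) > radius_px:
            anchor = (t, x, y)
        elif t - anchor[0] >= dwell_s:
            return round(anchor[1]), round(anchor[2])
    return None


samples = [(0.0, 100, 100), (0.2, 400, 300), (0.3, 405, 298), (0.6, 402, 301), (0.85, 398, 303)]
print(detect_gaze_lock(samples))  # (400, 300)
```

The returned pixel is what the object-isolation step would receive; the radius absorbs the small jitter that eye trackers report even during a steady fixation.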

Pixel-to-Pixel Scent Mapping

Each pixel in the visual image is mapped to a corresponding pixel in the scent output array. When the user's gaze focuses on a pixel showing a flower, for example:

1. The VLM identifies that pixel as belonging to a "flower" object class

2. All other pixels in the image that belong to the same class are found

3. These pixels are visually enhanced while others fade

4. The corresponding scent pixels are activated to emit the flower's aroma

5. The user experiences a synchronized visual and olfactory experience

This creates a tangible, spatial relationship between vision and smell, making scent a visible, interactive medium.

Idea 3 Exploration Sketch by Richard Zhang

AI Model Architecture Inspired by Paul's MultiModal AI lecture

The system integrates multiple technologies:

• Vision-Language Model: Processes images to identify scent-associated objects

• Eye Tracking: Monitors user gaze position in real-time

• Spatial Mapping: Creates pixel-to-pixel correspondence between visual and scent arrays

• LIG Sensor Array: Detects and confirms emitted scents

• Display Output: Renders transformed visuals with enhanced focus on selected objects

• Scent Emitter Array: Releases appropriate aromas corresponding to visual pixels
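One way to keep these components swappable while prototyping is to give each one a small interface and wire them together in a single update step. The sketch below uses Python's structural `typing.Protocol`; the interface names and method signatures are hypothetical, and real eye-tracking, VLM, display, emitter and LIG-sensing code would sit behind them.

```python
from typing import List, Optional, Protocol, Tuple

Mask = List[List[bool]]


class EyeTracker(Protocol):
    def gaze_pixel(self) -> Optional[Tuple[int, int]]: ...


class Segmenter(Protocol):
    """Vision-language model step: label under the gaze plus all matching pixels."""
    def segment_at(self, row: int, col: int) -> Tuple[str, Mask]: ...


class Display(Protocol):
    def highlight(self, mask: Mask) -> None: ...


class ScentEmitter(Protocol):
    def emit(self, label: str, mask: Mask) -> None: ...


class ScentSensor(Protocol):
    """LIG array plus classifier: which scent is actually in the air, if any."""
    def detected_label(self) -> Optional[str]: ...


def update(tracker: EyeTracker, segmenter: Segmenter, display: Display,
           emitter: ScentEmitter, sensor: ScentSensor) -> Optional[bool]:
    """Run one cycle. Return None if there is no gaze lock, otherwise whether
    the sensor confirmed the scent that was meant to be emitted."""
    gaze = tracker.gaze_pixel()
    if gaze is None:
        return None
    label, mask = segmenter.segment_at(*gaze)
    display.highlight(mask)
    emitter.emit(label, mask)
    return sensor.detected_label() == label
```

Because `Protocol` checks structure rather than inheritance, simple fake classes with the same method names can stand in for hardware while the rest of the loop is being developed.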

Applications and Implications

This ideation opens up new possibilities for:

• Interactive Art: Creating artworks where viewing and smelling are intrinsically linked

• Educational Tools: Teaching scent identification through visual reinforcement

• Therapeutic Applications: Using gaze-controlled scent therapy for relaxation or focus

• Cultural Experiences: Recreating historical or cultural scent-visual associations

• Accessibility: Making scent experiences more tangible and controllable for users

Connection to Digital Nose Project

Ideation 3 extends the digital nose concept by adding bidirectional interaction: not only does the system detect scents (input), but it also creates scents based on visual attention (output). This creates a complete sensory feedback loop where the user's visual attention directly controls olfactory output, and the system confirms scent emission through its detection capabilities. The LIG sensor array can verify that the correct scent is being emitted, creating a closed-loop system that learns and improves over time.
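The closed loop described here can be illustrated with a simple integral-style controller: each cycle, the emitter intensity is nudged by a gain times the gap between the target concentration and the concentration the LIG array reports. The `sense` function standing in for emitter-plus-sensor physics, the gain and the clamp range are all assumptions; a real diffuser would add delays and nonlinearity that this ignores.

```python
from typing import Callable, List


def run_closed_loop(target: float, sense: Callable[[float], float], gain: float = 0.25,
                    steps: int = 20, max_intensity: float = 1.0) -> List[float]:
    """Drive emitter intensity toward `target` concentration; return the measured values.

    Update rule: intensity += gain * (target - measured), clamped to [0, max_intensity].
    """
    intensity = 0.0
    history = []
    for _ in range(steps):
        measured = sense(intensity)  # what the sensor array reports this cycle
        history.append(measured)
        error = target - measured
        intensity = min(max(intensity + gain * error, 0.0), max_intensity)
    return history


# Hypothetical plant: measured concentration is proportional to intensity.
history = run_closed_loop(target=1.0, sense=lambda intensity: 2.0 * intensity)
print(round(history[-1], 3))  # 1.0
```

With a linear plant of slope s, this update converges only while gain × s stays between 0 and 2; the clamp keeps the emitter within its physical range when the target cannot be reached.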

References and Inspirations

This ideation draws inspiration from Hsin-Chien Huang's "The Moment We Meet" interactive installation, which uses split-flap displays to form a 10x10 matrix of faces that can be controlled independently, creating endless combinations. The work explores how emotions and expressions spread through visual transformation, similar to how Ideation 3 maps visual attention to scent emission. Huang's use of pixel-like displays that independently transform based on interaction provides a foundation for understanding how individual sensory pixels can be mapped and controlled in a spatial array.

"The Moment We Meet" interactive installation showing the 10x10 matrix of split-flap displays that can be controlled independently. Each display acts like a pixel that can transform based on interaction, providing inspiration for the pixel-to-pixel mapping concept in Ideation 3.

Detail view of "The Moment We Meet" showing how individual displays within the matrix create different facial expressions. This demonstrates how independent pixel-like elements can create complex patterns through coordinated transformation.

Another view of "The Moment We Meet" installation showing the spatial array of independently controllable displays. This spatial array concept directly informs Ideation 3's approach to mapping visual pixels to scent pixels in a coordinated spatial system.

Reference: "The Moment We Meet" by Hsin-Chien Huang

Reflection

This week was an introduction to the course structure and the beginning of learning computer-aided design. I'm excited to explore the possibilities of digital fabrication and parametric design.

Links