Wildcard Week

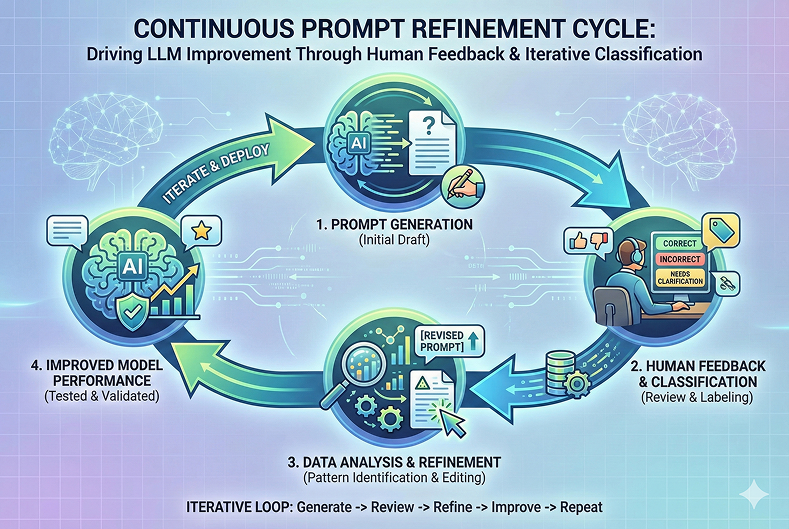

Developing a human feedback learning system for continuous improvement of LLM prompts through iterative refinement based on message classification and priority corrections.

During wildcard week, the class explored 10 different themes and projects. However, I had already left for India and was unable to participate in the in-person activities. Instead, I took up an extra subproject within my final project: implementing a human feedback learning system for the SmartPi Agentic Assistant.

01 · Project Overview

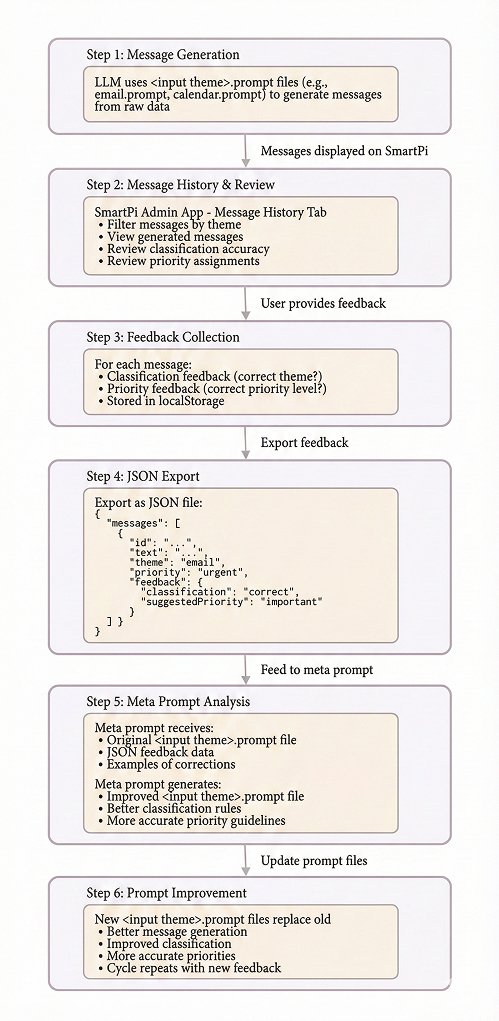

The human feedback learning system enables continuous improvement of LLM prompt quality by collecting user feedback on message classifications and priority assignments. This feedback is then used to refine the prompt templates that guide the LLM in generating messages for different themes (email, calendar, weather, slack).

Problem Statement

The SmartPi system uses LLM prompts (stored in <input theme>.prompt files) to generate concise, formatted messages from raw data. However, these prompts may not always produce optimal results:

- Messages may be misclassified (wrong theme assignment)

- Priority levels may be incorrect (urgent vs. important vs. normal)

- Message formatting may not match user preferences

- LLM output quality may degrade over time without feedback

Solution: Human Feedback Loop

The human feedback learning system creates a closed loop where:

- Users review messages in the Message History tab

- Users provide feedback on classification and priority

- Feedback is exported as structured JSON files

- JSON files feed into a meta prompt system

- Meta prompt analyzes feedback and generates improved prompt templates

- Improved prompts replace the original <input theme>.prompt files

02 · System Architecture

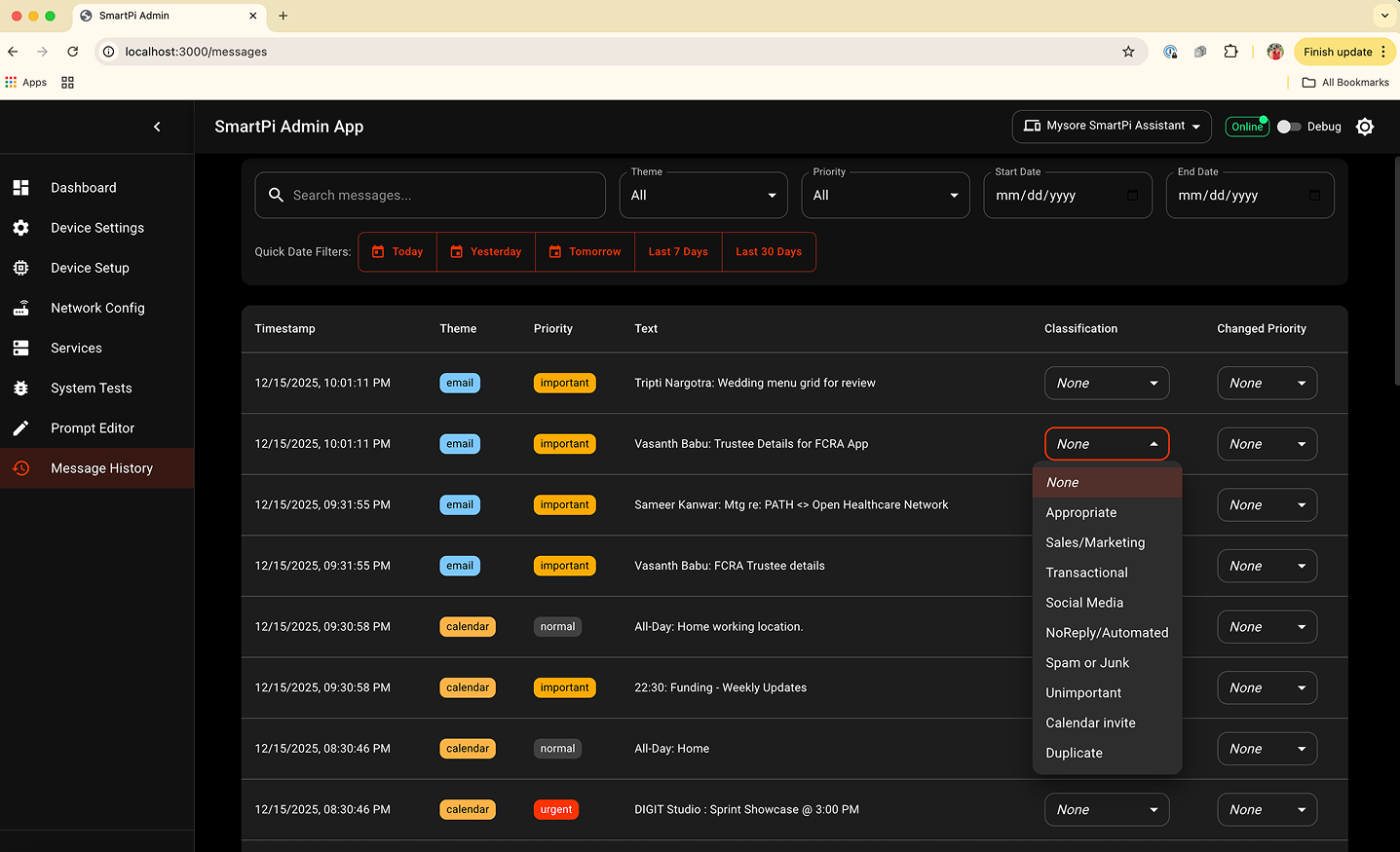

03 · Message History Interface

Filtering by Input Theme

The Message History tab in the SmartPi Admin App allows users to filter messages by the input theme that generated them. This enables focused review of messages from specific sources:

- Email theme: Messages generated from Gmail API data

- Calendar theme: Messages generated from Google Calendar events

- Weather theme: Messages generated from OpenWeather API data

- Slack theme: Messages generated from Slack notifications

Feedback Collection Interface

For each message in the history, users can provide two types of feedback:

| Feedback Type | Purpose | Options |
|---|---|---|
| Classification Feedback | Indicate if the message was assigned to the correct theme | Correct / Incorrect / Needs Review |
| Priority Feedback | Suggest the correct priority level for the message | urgent / important / normal |

- Feedback is stored in browser localStorage for persistence

- Feedback is associated with message IDs for tracking

- Feedback survives page refreshes and browser sessions

- Feedback can be exported as JSON for analysis
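The persistence behaviour listed above can be sketched as a small load/save pair. This is a minimal sketch, not the component's actual code: the `StorageLike` interface, `createMemoryStorage`, and the `needs_review` spelling are assumptions made for illustration, while the storage key matches the one used in the Technical Implementation section. In a browser, `window.localStorage` could be passed wherever a `StorageLike` is expected.

```typescript
// Values mirror the options in the table above; "needs_review" is an assumed spelling.
type Classification = "correct" | "incorrect" | "needs_review";
type Priority = "urgent" | "important" | "normal";

interface MessageFeedback {
  classification?: Classification;
  suggestedPriority?: Priority;
}

// Feedback keyed by message ID.
type FeedbackMap = Record<string, MessageFeedback>;

// The subset of the Web Storage API used here (hypothetical helper type).
interface StorageLike {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

const FEEDBACK_KEY = "smartpi-message-feedback";

function saveFeedback(storage: StorageLike, feedback: FeedbackMap): void {
  storage.setItem(FEEDBACK_KEY, JSON.stringify(feedback));
}

function loadFeedback(storage: StorageLike): FeedbackMap {
  const raw = storage.getItem(FEEDBACK_KEY);
  if (raw === null) return {};
  try {
    const parsed: unknown = JSON.parse(raw);
    // Accept only a plain object; anything else is treated as "no feedback yet".
    if (typeof parsed === "object" && parsed !== null && !Array.isArray(parsed)) {
      return parsed as FeedbackMap;
    }
    return {};
  } catch {
    // Corrupt JSON should not break the history view.
    return {};
  }
}

// In-memory stand-in so the sketch also runs outside a browser.
function createMemoryStorage(): StorageLike {
  const data: Record<string, string> = {};
  return {
    getItem: (key) => {
      const value = data[key];
      return value === undefined ? null : value;
    },
    setItem: (key, value) => {
      data[key] = value;
    },
  };
}
```

Falling back to an empty map on unreadable data is one possible design choice; it favours keeping the page usable over surfacing a storage error.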

04 · JSON Export Format

The feedback system exports messages and their associated feedback in a structured JSON format that can be processed by the meta prompt system:

{

"search_params": {

"query": "",

"theme": "email",

"priority": "all",

"start_date": "2025-01-01",

"end_date": "2025-01-31"

},

"messages": [

{

"id": "msg_12345",

"text": "Meeting at 2PM with John",

"theme": "calendar",

"priority": "urgent",

"timestamp": "2025-01-15T14:30:00Z",

"feedback": {

"classification": "correct",

"suggestedPriority": "important"

}

},

{

"id": "msg_12346",

"text": "Budget approval needed",

"theme": "email",

"priority": "normal",

"timestamp": "2025-01-15T15:00:00Z",

"feedback": {

"classification": "incorrect",

"suggestedPriority": "urgent"

}

}

],

"statistics": {

"total_messages": 150,

"by_theme": {

"email": 45,

"calendar": 60,

"weather": 30,

"slack": 15

}

},

"feedback_summary": {

"total_messages": 150,

"messages_with_classification": 120,

"messages_with_suggested_priority": 95

},

"exported_at": "2025-01-15T16:00:00Z"

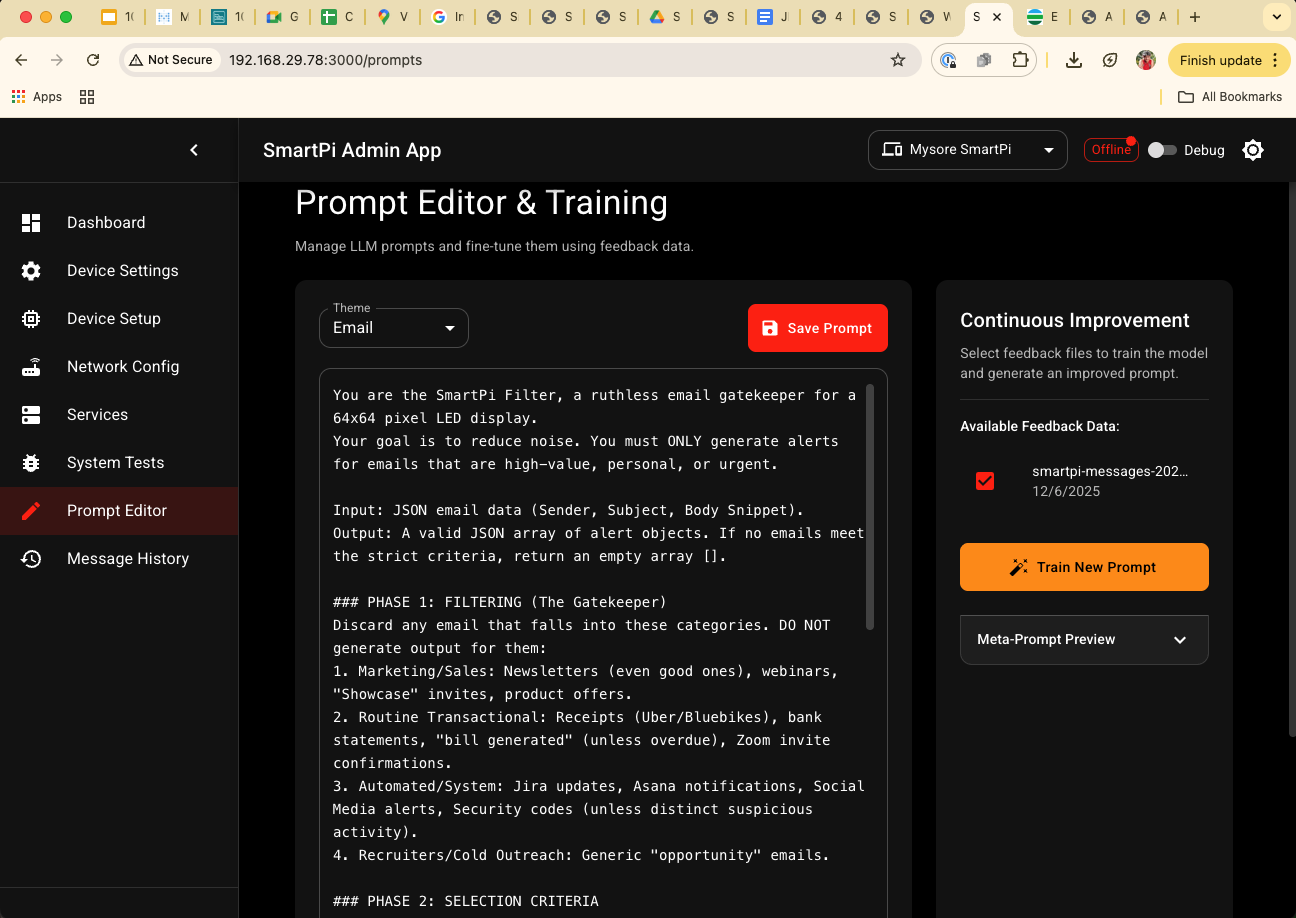

}05 · Meta Prompt System

Meta Prompt Architecture

The meta prompt system is a higher-level LLM prompt that analyzes human feedback and generates improved prompt templates. It takes as input:

- Original prompt file: The current <input theme>.prompt file being improved

- Feedback JSON: Exported feedback data with corrections and suggestions

- Context: Examples of messages that were incorrectly classified or prioritized

- Improvement goals: Specific areas to focus on (classification accuracy, priority accuracy, formatting)
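Combining these inputs into a single meta prompt is essentially template filling. The sketch below assumes the `{THEME}`, `{ORIGINAL_PROMPT}`, and `{FEEDBACK_JSON}` placeholders from the template in section 08; the function name `fillMetaPrompt` and the `MetaPromptInputs` type are hypothetical and not part of the SmartPi code.

```typescript
interface MetaPromptInputs {
  theme: string;          // e.g. "email"
  originalPrompt: string; // contents of the current prompt file
  feedbackJson: string;   // exported feedback, already serialized
}

function fillMetaPrompt(template: string, inputs: MetaPromptInputs): string {
  const values: Record<string, string> = {
    THEME: inputs.theme,
    ORIGINAL_PROMPT: inputs.originalPrompt,
    FEEDBACK_JSON: inputs.feedbackJson,
  };
  // Single pass over the template: known {PLACEHOLDER} tokens are replaced,
  // unknown ones are left untouched, and inserted text is never re-scanned.
  return template.replace(/\{([A-Z_]+)\}/g, (match: string, name: string) => {
    const value = values[name];
    return value === undefined ? match : value;
  });
}
```

A replacer function is used rather than chained string replacements so that braces or `$` sequences inside the original prompt or the feedback JSON are inserted literally.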

Meta Prompt Processing

The meta prompt analyzes patterns in the feedback data:

- Classification errors: Identifies common misclassification patterns (e.g., calendar events being classified as email)

- Priority mismatches: Finds systematic priority assignment issues (e.g., urgent messages being marked as normal)

- Formatting issues: Detects problems with message length, structure, or clarity

- Edge cases: Identifies scenarios where the current prompt fails
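In the system described here, this pattern analysis is performed by the LLM itself. As an illustration of what the first two patterns look like in the exported data, the sketch below counts them deterministically; such a pre-aggregation step is an assumption and not something the project is stated to include. The field names follow the JSON export format, while `summarizePatterns` and `FeedbackPatterns` are hypothetical names.

```typescript
// Shape of one entry in the exported "messages" array.
interface ExportedMessage {
  id: string;
  text: string;
  theme: string;
  priority: string;
  timestamp: string;
  feedback: { classification?: string; suggestedPriority?: string };
}

interface FeedbackPatterns {
  // Number of messages marked "incorrect", grouped by assigned theme.
  misclassifiedByTheme: Record<string, number>;
  // Number of priority corrections, keyed as "assigned -> suggested".
  priorityShifts: Record<string, number>;
}

function summarizePatterns(messages: ExportedMessage[]): FeedbackPatterns {
  const misclassifiedByTheme: Record<string, number> = {};
  const priorityShifts: Record<string, number> = {};

  for (const msg of messages) {
    if (msg.feedback.classification === "incorrect") {
      misclassifiedByTheme[msg.theme] = (misclassifiedByTheme[msg.theme] || 0) + 1;
    }
    const suggested = msg.feedback.suggestedPriority;
    // A suggestion equal to the assigned priority confirms it and is not a mismatch.
    if (suggested !== undefined && suggested !== msg.priority) {
      const key = msg.priority + " -> " + suggested;
      priorityShifts[key] = (priorityShifts[key] || 0) + 1;
    }
  }
  return { misclassifiedByTheme, priorityShifts };
}
```

Applied to the two sample messages from the export format, this would report one misclassified email message and two priority corrections ("urgent -> important" and "normal -> urgent").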

Improved Prompt Generation

Based on the analysis, the meta prompt generates an improved version of the <input theme>.prompt file that:

- Addresses identified classification errors

- Improves priority assignment guidelines

- Clarifies formatting requirements

- Adds examples of correct behavior

- Includes edge case handling

06 · Learning Loop Implementation

Iterative Improvement Process

The learning system operates as a continuous improvement loop:

Iterative Learning Cycle

Cycle 1: Original Prompt → Generate Messages → Collect Feedback → Meta Prompt Analysis → Improved Prompt v1

Cycle 2: Improved Prompt v1 → Generate Messages → Collect Feedback → Meta Prompt Analysis → Improved Prompt v2

Cycle N: Improved Prompt v(N-1) → Generate Messages → Collect Feedback → Meta Prompt Analysis → Improved Prompt vN

Each cycle:

- Reduces classification errors

- Improves priority accuracy

- Refines message formatting

- Handles more edge cases
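The cycle can be expressed as a loop in which each iteration's improved prompt becomes the next iteration's input. The sketch below is only a structural illustration: the three step functions are hypothetical stand-ins for message generation, the Message History export, and the meta prompt call, and they are synchronous here for simplicity, whereas real LLM calls and human review would be asynchronous.

```typescript
// Hypothetical stand-ins for the three stages of one cycle.
interface CycleSteps {
  generateMessages(prompt: string): string[];
  collectFeedback(messages: string[]): string; // returns the exported feedback JSON
  improvePrompt(prompt: string, feedbackJson: string): string;
}

// Runs the given number of cycles and returns every prompt version,
// starting with the original prompt at index 0.
function runLearningCycles(originalPrompt: string, cycles: number, steps: CycleSteps): string[] {
  const versions: string[] = [originalPrompt];
  let current = originalPrompt;
  for (let i = 0; i < cycles; i++) {
    const messages = steps.generateMessages(current);
    const feedbackJson = steps.collectFeedback(messages);
    current = steps.improvePrompt(current, feedbackJson);
    versions.push(current);
  }
  return versions;
}
```

Keeping every version in the returned array, rather than only the latest, is a design choice that would make it possible to compare or roll back prompts later.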

Feedback Quality Metrics

The system tracks improvement through several metrics:

| Metric | Description | Target |
|---|---|---|
| Classification Accuracy | Percentage of messages with correct theme assignment | >95% |
| Priority Accuracy | Percentage of messages with correct priority level | >90% |
| Feedback Coverage | Percentage of messages with user feedback | >80% |
| Improvement Rate | Reduction in errors per learning cycle | 10-20% per cycle |
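One way these metrics could be computed from exported feedback is sketched below. The exact definitions are assumptions, since the table does not fix them: accuracy is measured only over messages that received the relevant feedback, a suggested priority equal to the assigned one counts as correct, and the improvement rate is the relative drop in error count between two cycles. All function and type names are hypothetical.

```typescript
interface ReviewedMessage {
  priority: string;
  feedback: { classification?: string; suggestedPriority?: string };
}

interface QualityMetrics {
  classificationAccuracy: number; // % of classification-reviewed messages marked "correct"
  priorityAccuracy: number;       // % of priority-reviewed messages whose suggestion matches
  feedbackCoverage: number;       // % of all messages with any feedback
}

// Percentage helper; returns 0 when there is nothing to measure.
function percent(part: number, whole: number): number {
  return whole === 0 ? 0 : (part * 100) / whole;
}

function computeMetrics(messages: ReviewedMessage[]): QualityMetrics {
  const withClass = messages.filter((m) => m.feedback.classification !== undefined);
  const withPriority = messages.filter((m) => m.feedback.suggestedPriority !== undefined);
  const withAny = messages.filter(
    (m) => m.feedback.classification !== undefined || m.feedback.suggestedPriority !== undefined
  );
  return {
    classificationAccuracy: percent(
      withClass.filter((m) => m.feedback.classification === "correct").length,
      withClass.length
    ),
    priorityAccuracy: percent(
      withPriority.filter((m) => m.feedback.suggestedPriority === m.priority).length,
      withPriority.length
    ),
    feedbackCoverage: percent(withAny.length, messages.length),
  };
}

// Relative reduction in errors from one cycle to the next, as a percentage.
function improvementRate(previousErrors: number, currentErrors: number): number {
  return percent(previousErrors - currentErrors, previousErrors);
}
```

For example, going from 20 errors in one cycle to 16 in the next gives an improvement rate of 20%, the upper end of the target range in the table.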

07 · Technical Implementation

Message History Component

The Message History tab is implemented in MessageHistory.tsx with the following key features:

Feedback State Management

// Feedback state: messageId -> { classification, suggestedPriority }

const [messageFeedback, setMessageFeedback] = useState<Record<string, { classification?: string; suggestedPriority?: string }>>({});

// Load feedback from localStorage on mount

useEffect(() => {

loadFeedbackFromStorage();

}, []);

// Save feedback to localStorage

const saveFeedbackToStorage = (feedback) => {

localStorage.setItem('smartpi-message-feedback', JSON.stringify(feedback));

};

Feedback Collection Handlers

// Handle classification feedback

const handleClassificationChange = (messageId: string, classification: string) => {

setMessageFeedback(prev => {

const updated = {

...prev,

[messageId]: {

...prev[messageId],

classification: classification === '' ? undefined : classification

}

};

saveFeedbackToStorage(updated);

return updated;

});

};

// Handle priority feedback

const handleSuggestedPriorityChange = (messageId: string, suggestedPriority: string) => {

setMessageFeedback(prev => {

const updated = {

...prev,

[messageId]: {

...prev[messageId],

suggestedPriority: suggestedPriority === '' ? undefined : suggestedPriority

}

};

saveFeedbackToStorage(updated);

return updated;

});

};

JSON Export Function

const handleExportMessages = () => {

// Combine messages with their feedback data

const messagesWithFeedback = messages.map(msg => ({

...msg,

feedback: messageFeedback[msg.id] || {}

}));

const exportData = {

search_params: {

query: searchQuery,

theme: selectedTheme,

priority: selectedPriority,

start_date: startDate,

end_date: endDate

},

messages: messagesWithFeedback,

statistics,

feedback_summary: {

total_messages: messages.length,

// Count only the exported messages so these totals stay consistent with total_messages

messages_with_classification: messages

.filter(msg => messageFeedback[msg.id]?.classification).length,

messages_with_suggested_priority: messages

.filter(msg => messageFeedback[msg.id]?.suggestedPriority).length

},

exported_at: new Date().toISOString()

};

const filename = `smartpi-messages-${new Date().toISOString().split('T')[0]}.json`;

downloadJSON(exportData, filename);

};

08 · Meta Prompt Template

The meta prompt template guides the LLM in analyzing feedback and generating improved prompts. Here's an example structure:

You are a prompt engineering expert tasked with improving LLM prompts based on

human feedback.

CONTEXT:

You are analyzing feedback for the {THEME} message generation prompt. The

current prompt file is:

{ORIGINAL_PROMPT}

FEEDBACK DATA:

The following messages were generated using the current prompt, along with

human feedback on their accuracy:

{FEEDBACK_JSON}

ANALYSIS TASK:

1. Identify patterns in classification errors

2. Identify patterns in priority mismatches

3. Identify formatting or clarity issues

4. Find edge cases where the prompt fails

IMPROVEMENT TASK:

Generate an improved version of the {THEME}.prompt file that:

- Addresses all identified classification errors

- Improves priority assignment accuracy

- Clarifies formatting requirements

- Handles identified edge cases

- Maintains the prompt's core structure and style

OUTPUT:

Provide the complete improved prompt file content, ready to replace the

original {THEME}.prompt file.

09 · Benefits & Applications

Continuous Improvement

The human feedback learning system enables continuous improvement of message generation quality with far less manual prompt engineering:

- Adaptive: System learns from real-world usage patterns

- Scalable: Can handle feedback from multiple users

- Efficient: Reduces need for manual prompt iteration

- Data-driven: Improvements based on actual user feedback

Quality Assurance

The feedback loop acts as a quality assurance mechanism:

- Catches classification errors before they propagate

- Identifies priority assignment issues

- Surfaces edge cases that need handling

- Provides metrics for prompt quality

User-Centric Design

By incorporating user feedback, the system becomes more aligned with user preferences:

- Messages match user expectations for priority levels

- Classification aligns with user mental models

- Formatting improves based on user feedback

- System adapts to user's communication style

10 · Future Enhancements

- Automated meta prompt execution: Automatically run meta prompt analysis when sufficient feedback is collected

- Prompt versioning: Track prompt versions and rollback if quality degrades

- A/B testing: Test multiple prompt variants and compare performance

- Multi-user feedback aggregation: Combine feedback from multiple users for consensus

- Real-time learning: Update prompts in real-time as feedback is collected

- Feedback weighting: Weight feedback based on user expertise or historical accuracy

11 · Conclusion

The human feedback learning system is an approach to improving LLM prompt quality through iterative refinement based on real-world usage. By creating a closed feedback loop between message generation, user review, and prompt improvement, the system enables continuous learning and adaptation.

This subproject demonstrates how human feedback can be systematically collected, analyzed, and applied to improve AI system performance. The integration with the SmartPi Admin App's Message History tab provides a user-friendly interface for feedback collection, while the meta prompt system enables automated prompt refinement.

Because feedback is exported as self-contained JSON files, the architecture leaves room to aggregate feedback from multiple users and use it to improve prompts across all themes, although that aggregation is still listed as a future enhancement. The goal is a self-improving system that gets better over time through human guidance and LLM-powered analysis.