But before I got into all of that, I was interested in developing my final project a bit further by coding the calibration routine that the wall plotter would have to follow. I did this in C# in Grasshopper, and will post more details on my final page. The code as it stands is pasted below. The only thing omitted at this point is the accelerometer data, which I'll add in once I have the accelerometer working correctly.

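// Switch fail-safe: latch a flag whenever a limit switch is pressed
// (toggle1 and toggle2 are set here but not read anywhere else yet)
if (limit1) {
    //rst = true;
    toggle1 = true;
}
if (limit2) {
    //rst = true;
    toggle2 = true;
}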

// Reset: restart the sequence and return all tracked points to the origin pt
if (rst) {
    time = 0;
    seq = 0;
    pt1 = pt;
    pt2 = pt;
    pt1a = pt;
    pt2a = pt;
}

// Make lists for output
List<Curve> out_crvs = new List<Curve>();
List<Point3d> out_pts = new List<Point3d>();
List<Circle> out_circ = new List<Circle>();

// Seq 0: Initialize pt1 and pt2 as instances of pt
if (seq == 0) {
    pt1 = pt;
    pt2 = pt;
    seq++;
}

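// Seq 1: Move pt1 until it hits switch1
if (seq == 1) {
    pt1 -= step1;
    // Preview circle centered on pt; its radius is the distance traveled so far
    circle1 = new Circle(Plane.WorldZX, pt, pt1.DistanceTo(pt));
    if (limit1) {
        pt1a = pt1;
        dist1 = pt1.DistanceTo(pt); // measured travel to switch1
        seq++;
    }
}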

// Seq 2: Move pt1a (the copy of pt1 taken at switch1) back to origin
if (seq == 2) {
    if (pt1a.DistanceTo(pt) > 0) {
        pt1a += step1;
    }
    else {
        seq++;
    }
}

// Seq 3: Move pt2 until it hits switch2
if (seq == 3) {
    pt2 += step1;
    circle2 = new Circle(Plane.WorldZX, pt, pt2.DistanceTo(pt));
    if (limit2) {
        pt2a = pt2;
        dist2 = pt2.DistanceTo(pt); // measured travel to switch2
        // Place both points on the -Z side of pt at their measured distances
        pt1a.X = pt.X;
        pt1a.Y = pt.Y;
        pt1a.Z = pt.Z - dist1;
        pt2a.X = pt.X;
        pt2a.Y = pt.Y;
        pt2a.Z = pt.Z - dist2;
        seq++;
    }
}

// Seq 4: Swing both lines about pt, 1 degree per tick in opposite directions, until switch1 triggers
if (seq == 4) {
    // Draw Line 1 (pt to pt1a)
    List<Point3d> pts1 = new List<Point3d>();
    pts1.Add(pt);
    pts1.Add(pt1a);
    ln1 = Curve.CreateInterpolatedCurve(pts1, 3);

    // Draw Line 2 (pt to pt2a)
    List<Point3d> pts2 = new List<Point3d>();
    pts2.Add(pt);
    pts2.Add(pt2a);
    ln2 = Curve.CreateInterpolatedCurve(pts2, 3);

    // Rotate the lines about the Y axis through pt and keep their new end points
    ln1.Rotate((Math.PI / 180) * 1, Vector3d.YAxis, pt);
    ln2.Rotate((Math.PI / 180) * -1, Vector3d.YAxis, pt);
    pt1a = ln1.PointAtEnd;
    pt2a = ln2.PointAtEnd;

    // Draw Line 3 (between the two end points)
    List<Point3d> pts3 = new List<Point3d>();
    pts3.Add(pt1a);
    pts3.Add(pt2a);
    ln3 = Curve.CreateInterpolatedCurve(pts3, 3);

    if (limit1) {
        seq++;
    }
}

time++;

// Collect the geometry and the current sequence number for the component outputs
//out_pts.Add(pt);
out_pts.Add(pt1);
out_pts.Add(pt2);
out_pts.Add(pt1a);
out_pts.Add(pt2a);
out_crvs.Add(ln1);
out_crvs.Add(ln2);
out_crvs.Add(ln3);
out_circ.Add(circle1);
out_circ.Add(circle2);

A = out_pts;
B = out_crvs;
C = out_circ;
//D = circle2;
E = seq;

}

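// Fields declared outside the main script body so their values carry over from one solution to the next
double time;
Vector3d step1 = new Vector3d(100, 0, 0);
Vector3d step2 = new Vector3d(0, 0, 100); // not used yet
bool toggle1 = false;
bool toggle2 = false;
int seq;
Circle circle1;
Circle circle2;
double dist1;
double dist2;
double dist3; // not used yet
Point3d pt1;
Point3d pt2;
Point3d pt1a;
Point3d pt2a;
Point3d pt1b; // not used yet
Point3d pt2b; // not used yet
Curve ln1;
Curve ln2;
Curve ln3;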

}
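
The jog-until-switch idea in Seq 1 and Seq 2 can also be tried outside Grasshopper. The following is only a rough Python sketch of that logic, not the C# component itself: the limit switch is simulated by a made-up threshold (`switch_position`), and the function name and the one-dimensional setup are assumptions for illustration.

```python
def calibrate_axis(switch_position, step=100.0):
    """Simulate one calibration pass along a single axis.

    switch_position is a hypothetical distance at which a simulated limit
    switch trips; step is the jog distance per tick (100 mirrors step1).
    Returns (measured_distance, final_position).
    """
    position = 0.0   # start at the origin, like pt
    distance = None  # filled in once the simulated switch trips
    seq = 1

    while seq < 3:
        if seq == 1:
            # Jog away from the origin until the simulated switch trips.
            position -= step
            if abs(position) >= switch_position:
                distance = abs(position)
                seq = 2
        elif seq == 2:
            # Jog back toward the origin one step at a time.
            if abs(position) > 0:
                position += step
            else:
                seq = 3

    return distance, position


measured, final = calibrate_axis(switch_position=350.0, step=100.0)
print(measured, final)
```

One thing this makes visible: because the jog happens in fixed steps, the measured distance is always a whole number of steps, so a smaller step gives a finer measurement at the cost of more ticks.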

Writing the C# routine turned out to be more time-consuming than I had expected, and it didn't exactly fulfill the week's assignment: it's intended to push step commands to the motor drivers, but that part isn't working yet. So I started looking into writing an application for haptic input to control my wall plotter. There are of course many ways of doing this, and while I would prefer to write an app from scratch, that wasn't realistic within my time constraints. I found a viable solution in the OSC features of the Firefly library for Grasshopper. This is ideal because I'm already using Grasshopper for realtime control of the plotter. Below is a description of OSC taken from the Firefly documentation:

Open Sound Control (OSC) messages are essentially specially formatted User Datagram Protocols (UDP) transmissions. This OSC listener works by creating a data tree structure for every unique message it receives. Each OSC message contains a name and the value (can be floats, integers, strings, etc.) Use a List Item or Tree Item to retrieve the latest value for each message. A reset toggle can be used to clear the data tree values.
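
To make the "name and value" structure concrete, here is a minimal Python sketch of decoding a single OSC message by hand. It follows the OSC 1.0 layout as I understand it (a null-padded address string, a null-padded type tag string starting with a comma, then big-endian arguments) and only handles float, integer and string arguments. The function names and the `/1/xy1` address in the example are assumptions for illustration; this is not how Firefly implements its listener.

```python
import struct


def _read_padded_string(data, offset):
    """Read a null-terminated ASCII string padded to a 4-byte boundary."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator and padding so the next field starts on a multiple of 4.
    next_offset = (end + 4) & ~3
    return text, next_offset


def decode_osc_message(packet):
    """Return (address, values) for one OSC message with f/i/s arguments."""
    address, offset = _read_padded_string(packet, 0)
    type_tags, offset = _read_padded_string(packet, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")

    values = []
    for tag in type_tags[1:]:
        if tag == "f":    # 32-bit big-endian float
            values.append(struct.unpack_from(">f", packet, offset)[0])
            offset += 4
        elif tag == "i":  # 32-bit big-endian integer
            values.append(struct.unpack_from(">i", packet, offset)[0])
            offset += 4
        elif tag == "s":  # padded string
            text, offset = _read_padded_string(packet, offset)
            values.append(text)
        else:
            raise ValueError("unsupported OSC type tag: " + tag)
    return address, values


# Hypothetical XY-pad message carrying two floats.
example = b"/1/xy1\x00\x00" + b",ff\x00" + struct.pack(">ff", 0.25, 0.75)
print(decode_osc_message(example))
```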

Setting up the interface was remarkably easy. I simply followed (most of) the steps below.

Touch OSC

------------------------

Download Touch OSC app

Make sure phone and computer are on same network

Open OSC Connections setting (in app)

Set the Host IP address on phone to be the same as your computer IP address

Set an outgoing Port address (e.g. 6000)

Under App Options, turn on Accelerometer messages

Set Layout to Mix2 (doesn't really matter actually)

------------------------

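
For testing the listener side without a phone, the steps above can be imitated from the computer itself. The sketch below builds an OSC message of floats and sends it over UDP to a host and port, which is roughly what the app does once the Host IP and outgoing port are set. The helper names are hypothetical, port 6000 is just the example value from the steps, and `/accxyz` is my assumption for the address the accelerometer messages use.

```python
import socket
import struct


def _pad(raw):
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    return raw + b"\x00" * (4 - len(raw) % 4)


def encode_osc_floats(address, *values):
    """Build one OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = struct.pack(">%df" % len(values), *values)
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def send_osc(host, port, address, *values):
    """Send a single OSC message as one UDP datagram."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.sendto(encode_osc_floats(address, *values), (host, port))
    finally:
        sock.close()


# Example (assumed address and port; replace the host with the computer's IP):
# send_osc("127.0.0.1", 6000, "/accxyz", 0.0, 0.5, -1.0)
```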

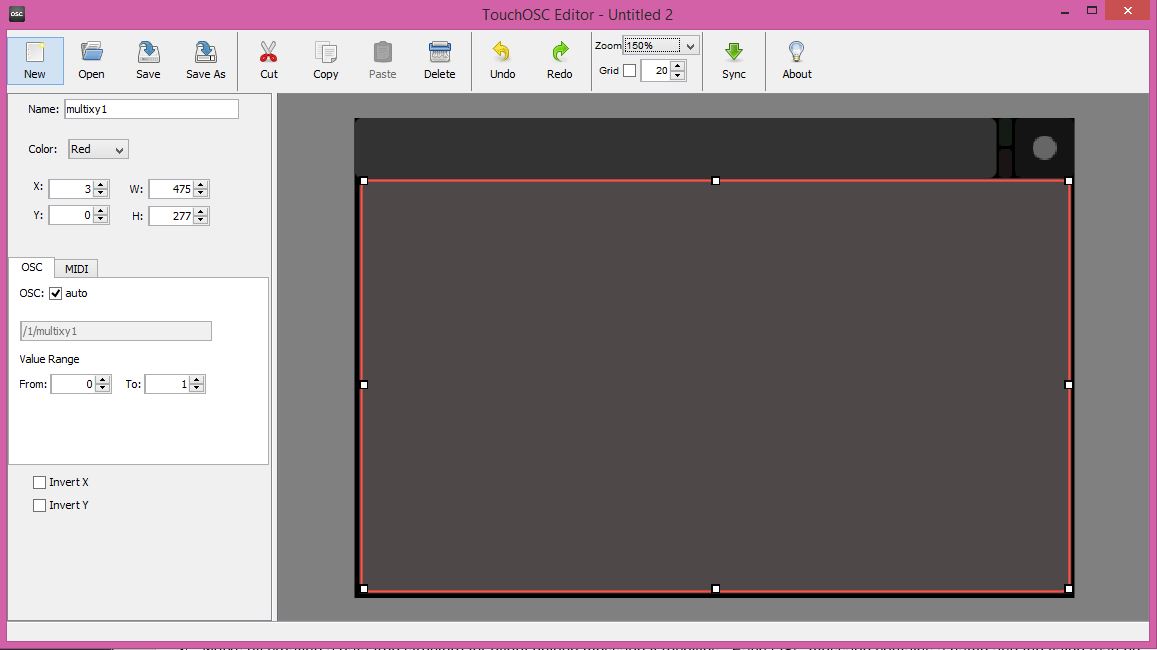

I downloaded ($5) and installed TouchOSC since it was recommended by the Firefly documentation. I didn't even think to check whether there were other apps available that might be free and/or open source (there are; I'll get to that later). The first step was to install the OSC editor, a simple program that lets you design an interface using the TouchOSC modular library and then sync that interface to your smartphone. In my case, I just wanted an X,Y coordinate input that took up as much of the screen as possible.

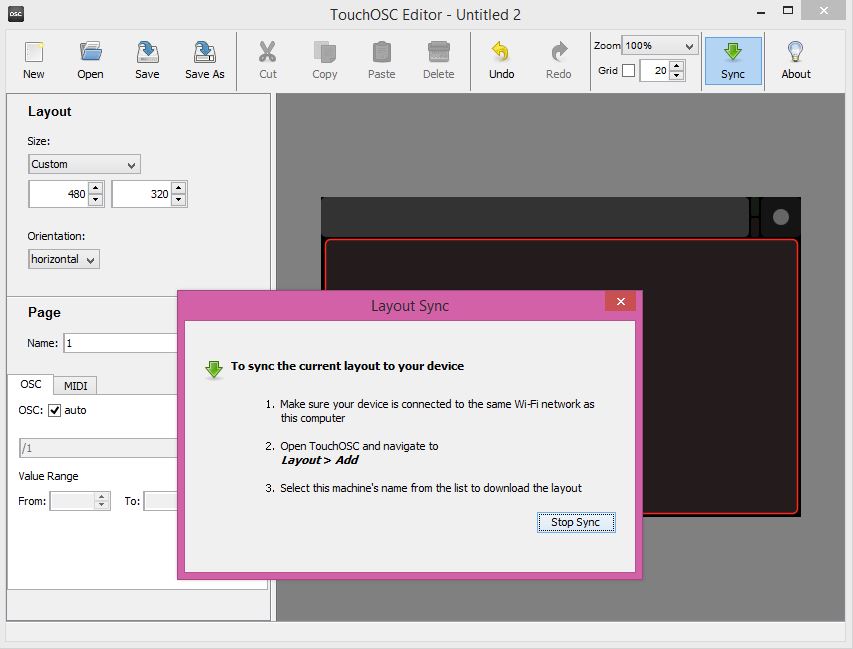

Syncing to my phone was quite straightforward, and I quickly found and selected my custom interface in the OSC app on my phone.

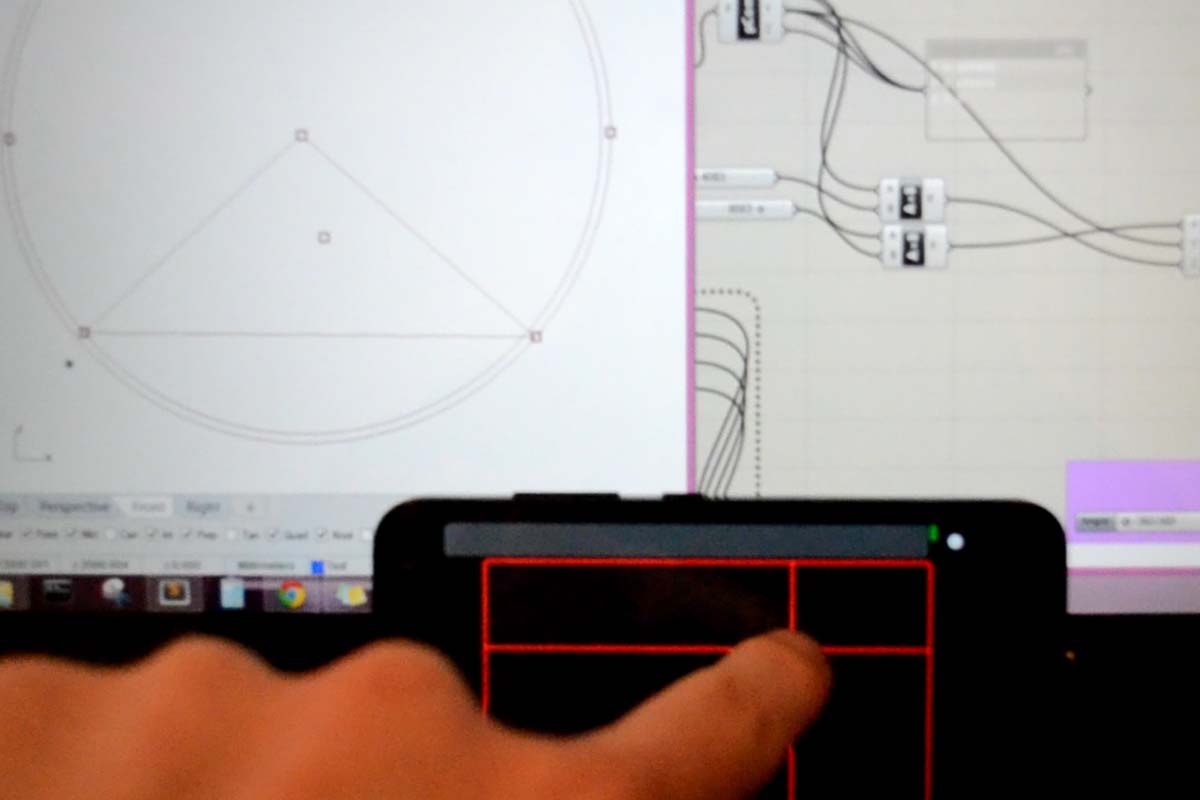

The final step was formatting the data in Grasshopper coming in from TouchOSC so that the coordinates would be mapped into the workspace defined by the calibration routine. It all went pretty smoothly. Note: I do plan to upload the Grasshopper definitions shown here; they're just a bit messy and unfinished at the moment. I'll put everything on my final page when it's closer to being finished.
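
The mapping itself is a linear remap. Here is a small Python sketch of the idea, assuming the XY pad sends values between 0 and 1 and that the workspace can be described as a rectangle of (min, max) ranges; the function names and the workspace numbers are made up for illustration, and the actual Grasshopper definition may differ.

```python
def remap(value, src_min, src_max, dst_min, dst_max):
    """Linearly map value from the source range to the target range, clamped."""
    t = (value - src_min) / (src_max - src_min)
    t = min(1.0, max(0.0, t))  # keep out-of-range input inside the workspace
    return dst_min + t * (dst_max - dst_min)


def pad_to_workspace(x, y, workspace):
    """Map a normalized (x, y) pad reading into workspace coordinates.

    workspace is ((x_min, x_max), (y_min, y_max)) in plotter units.
    """
    (x_min, x_max), (y_min, y_max) = workspace
    return (remap(x, 0.0, 1.0, x_min, x_max),
            remap(y, 0.0, 1.0, y_min, y_max))


# Hypothetical 1000 x 800 workspace.
workspace = ((0.0, 1000.0), (0.0, 800.0))
print(pad_to_workspace(0.5, 0.25, workspace))
```

Clamping is a design choice here: it keeps a stray reading from sending the plotter outside the calibrated area.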

Here is a video of the result. I was pretty impressed with how little lag time there was. I definitely intend to use this towards my final project. It seems like a viable option for realtime path generation. Ideally, I'd write my own app interface, but that was a bit much to handle this week, and realistically I'm not sure that's feasible within the remaining time.