How to Make [Almost] Anything | Life + Times Of v.2019

Maharshi Bhattacharya | Masters in Design Studies

Harvard University | Graduate School of Design

The final week was the culmination of everything learnt throughout the term, applied to building something.

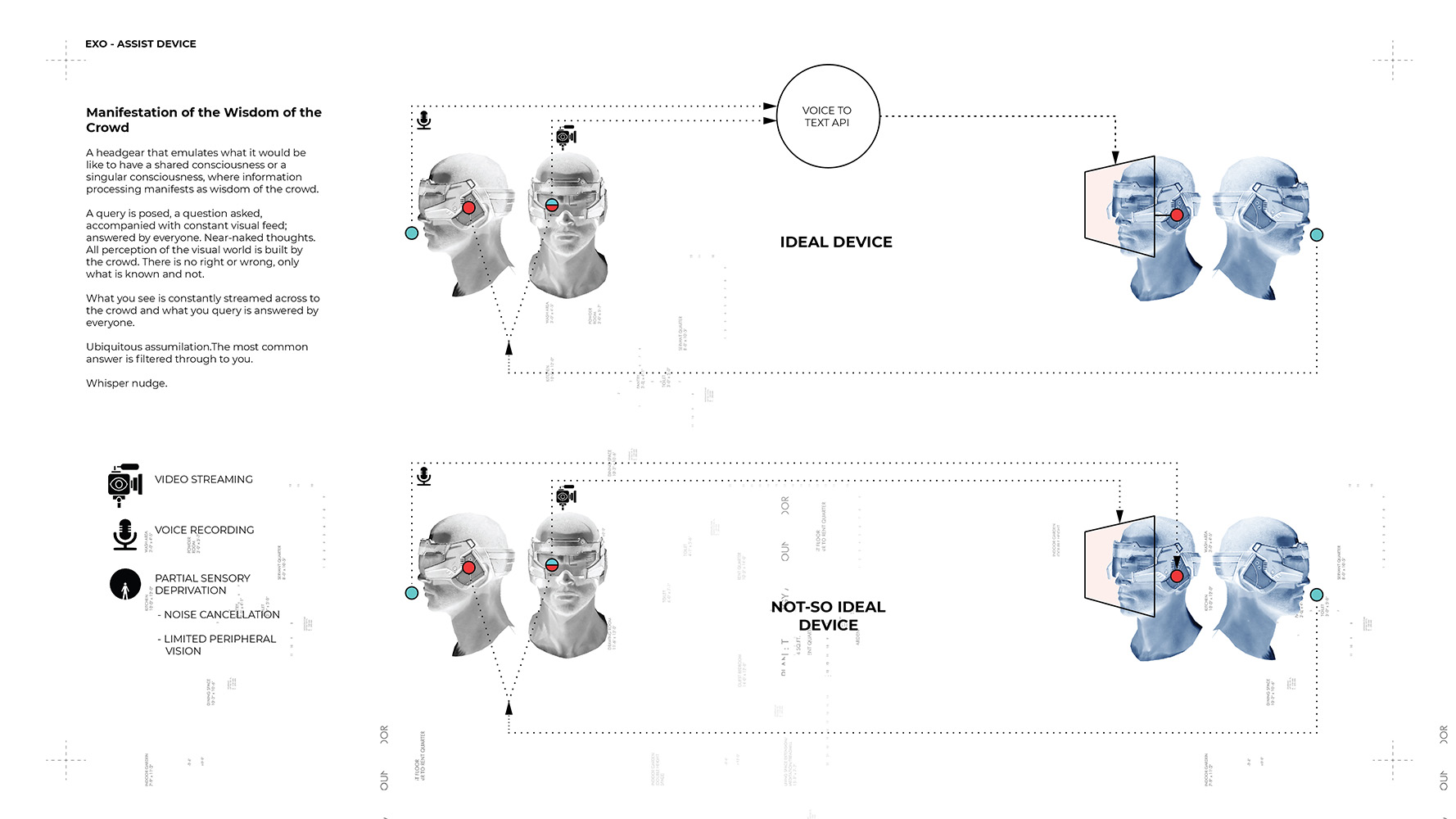

Consider two users, user1 and user2. Each wears a device (a headset) like this one, and the devices talk to each other, so the users communicate through them. The device provides partial sensory deprivation: noise cancellation and selective visual input.

A camera attached to user1's device provides visual input to whomever user1 is communicating with. The device also has a screen, on which user1 sees what user2 wants to show them through their own camera. Audio is cross-linked in the same way: user1 hears what user2 says or asks, and user2 hears user1’s response. This would also work between multiple users.

One scenario: a user looks at something and asks a question, which is relayed (video and audio) to the entire “crowd” or network. The crowd responds, and the response is fed back (whispered back) to the initial user. In another iteration the speech is converted to text, so users in the crowd only get visual input.

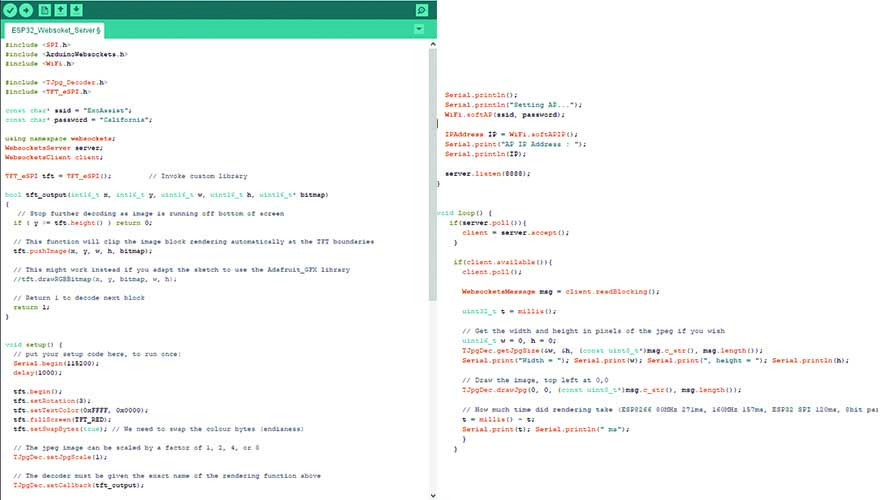

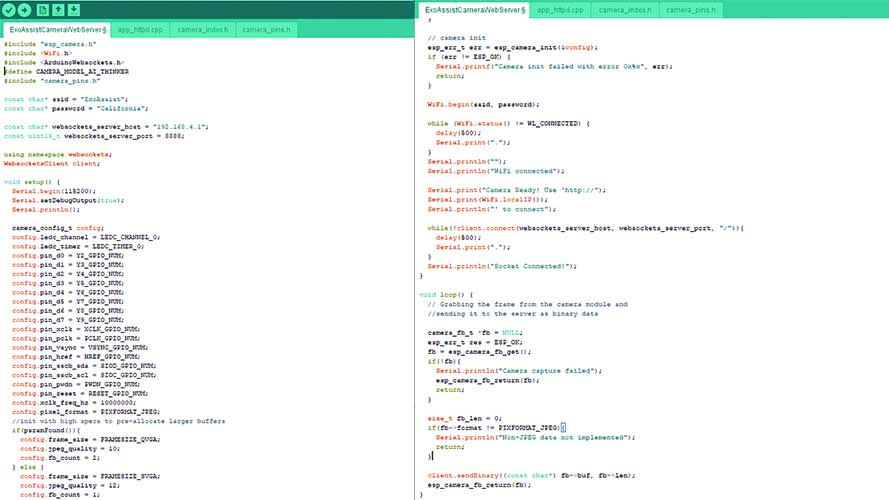

I was able to set up a visual feedback system in which a remote camera, powered locally, sends images (at a rate of 30 per second, enough to give the illusion of fluid motion) to a server that has a display attached. Some parts are described below, in the videos and in images of the code used.

Device initial setup. The Serial Monitor shows the data the server is receiving over WiFi from the cam client.

This code is for the ESP32 connected to the LCD (the server setup). It initialises the WiFi connection across which the JPEGs will be sent. I documented two longer versions of this, which I found online, in the Networking and Interfacing weeks. This version has been tweaked based on snippets I found online and with help from online forums.
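Because JPEGs vary in size, the receiving side needs some way to tell where one image ends and the next begins. The code shown in the images may handle this differently; the following is only a minimal host-side sketch in Python (not the ESP32 firmware), assuming a simple scheme in which each image is prefixed with its length as a 4-byte big-endian integer. The names `pack_frame`, `read_exact`, and `read_frame` are hypothetical and chosen for illustration.

```python
import io
import struct

# Assumed framing (not from the original code): a 4-byte big-endian
# unsigned length, followed by that many bytes of JPEG data.
HEADER = struct.Struct(">I")


def pack_frame(jpeg_bytes: bytes) -> bytes:
    """Client side: prefix one JPEG with its length."""
    return HEADER.pack(len(jpeg_bytes)) + jpeg_bytes


def read_exact(stream, n: int) -> bytes:
    """Read exactly n bytes, looping because a read may return fewer."""
    buf = bytearray()
    while len(buf) < n:
        chunk = stream.read(n - len(buf))
        if not chunk:
            raise EOFError("stream closed before a full frame arrived")
        buf.extend(chunk)
    return bytes(buf)


def read_frame(stream) -> bytes:
    """Server side: read the length header, then the JPEG payload."""
    (length,) = HEADER.unpack(read_exact(stream, HEADER.size))
    return read_exact(stream, length)


# Stand-in for a network stream: two fake "images" sent back to back.
# Real JPEGs start with FF D8 and end with FF D9; the payload here is dummy.
first = b"\xff\xd8" + b"first image" + b"\xff\xd9"
second = b"\xff\xd8" + b"second, longer image" + b"\xff\xd9"
wire = io.BytesIO(pack_frame(first) + pack_frame(second))

received = [read_frame(wire), read_frame(wire)]
```

With a real connection, `stream` could be the file-like object returned by `socket.makefile("rb")`. The loop in `read_exact` matters there, since a single network read often returns only part of an image.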

This code is for the ESP32-CAM (programmed via FTDI). The camera, running remotely and attached to a power bank, sends JPEGs to the server, which are then displayed on the LCD. Each image takes about 90 ms to 95 ms to send.
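The send time per image can be turned into a rough frame rate. The sketch below is plain arithmetic, assuming images are sent strictly one after another with no overlap between capture and transmission (an assumption, since the write-up does not say how the loop is structured). The helper names are hypothetical.

```python
def frames_per_second(ms_per_frame: float) -> float:
    """Frames per second if each frame occupies the link for ms_per_frame."""
    return 1000.0 / ms_per_frame


def ms_budget(target_fps: float) -> float:
    """Time available per frame, in milliseconds, to sustain target_fps."""
    return 1000.0 / target_fps


for ms in (90, 95):
    print(f"{ms} ms per image -> {frames_per_second(ms):.1f} fps")
# prints:
# 90 ms per image -> 11.1 fps
# 95 ms per image -> 10.5 fps

print(f"30 fps needs each image sent within {ms_budget(30):.1f} ms")
# prints: 30 fps needs each image sent within 33.3 ms
```

Under that assumption, the transfer time rather than the camera would be the limiting factor, so smaller JPEGs (lower resolution or quality) would be the obvious lever for a smoother display.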

I made a video with my cousin’s pet to see if I could run the LCD server and the CAM client independently on power banks.

The next step would be to get the voice element working, preferably with the Google Speech-to-Text API, and to actually fit the whole setup into a headset.