Programming

Machine code

Instructions encoded in binary, as the processor will read and execute from memory. Stored byte-literally in flash.

On a host computer, typically found in:

- *.elf files: Executable and Linkable Format, a structured file format describing sections of memory (size, location, RWX permissions) and their contents. Linux executables are ELFs.

- *.hex files: Intel HEX format. A textual format in which each line is a record holding a byte count, an address offset, a record type, the contents of the memory that should be loaded at that address, and a checksum.

- *.bin files: a literal, linear binary blob without load location information (you typically supply this, or it’s inferred by your tooling based on the MCU you’re targeting).
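As an illustration of the Intel HEX layout, here is a minimal sketch of a record decoder. The function name `parse_hex_record` and the returned dictionary keys are hypothetical choices for this example, and only the per-record structure is handled (extended address records are decoded but not interpreted).

```python
def parse_hex_record(line: str) -> dict:
    """Decode one Intel HEX record, e.g. ':0300300002337A1E'."""
    line = line.strip()
    if not line.startswith(":"):
        raise ValueError("an Intel HEX record must start with ':'")

    raw = bytes.fromhex(line[1:])
    if len(raw) < 5:
        raise ValueError("record too short")

    count = raw[0]                          # number of data bytes
    address = int.from_bytes(raw[1:3], "big")  # 16-bit load offset
    record_type = raw[3]                    # 0 = data, 1 = end of file, ...
    if len(raw) != count + 5:
        raise ValueError("byte count does not match record length")

    # All bytes of the record, checksum included, must sum to 0 modulo 256.
    if sum(raw) & 0xFF != 0:
        raise ValueError("bad checksum")

    return {"address": address, "type": record_type, "data": raw[4:4 + count]}


if __name__ == "__main__":
    print(parse_hex_record(":0300300002337A1E"))
    print(parse_hex_record(":00000001FF"))
```

A full loader would walk every record in the file and copy each data payload to its address; a .bin file, by contrast, has nothing to parse at all.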

Assembly

A textual language representing instructions, designed for humans to read and write. Typically a near bijection onto machine code.

Programming Languages

Compiled, systems

The most popular option for embedded programming. The code (C, C++, Rust) is compiled on the host PC using a cross-compiling toolchain. Appropriate abstractions (e.g. HAL/PAL), written as libraries or generated, frequently by vendors, and glued together using Arduino cores, allow code to be portable across MCUs.

Interpreted

Interpreted languages (e.g. JavaScript, Python) are written in text files, which are interpreted at runtime by a program (called the interpreter, e.g. your python executable) in order to run the program. Machine code isn’t generated in the general case; the interpreter just runs through your text file line-by-line. Compared to compiled programs, interpreted programs are typically very slow, though JIT techniques can improve speed dramatically. In Python, it is common to use native (i.e. compiled) modules with Python interfaces to improve execution time (e.g. numpy, see also pyo3).
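To make the idea of running through the text line-by-line concrete, here is a toy interpreter. The three-instruction language (`set`, `add`, `print`) and the function name `run` are invented for this sketch; real interpreters such as CPython first translate the source into an internal bytecode, so this is a simplification rather than a description of how they work.

```python
def run(source: str) -> list:
    """Interpret a made-up language with 'set', 'add' and 'print' statements."""
    variables = {}
    output = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        op, args = parts[0], parts[1:]
        if op == "set":        # set NAME VALUE
            variables[args[0]] = int(args[1])
        elif op == "add":      # add NAME VALUE
            variables[args[0]] += int(args[1])
        elif op == "print":    # print NAME
            output.append(variables[args[0]])
        else:
            raise SyntaxError(f"line {lineno}: unknown operation {op!r}")
    return output


if __name__ == "__main__":
    program = "set x 3\nadd x 4\nprint x"
    print(run(program))
```

Every statement is re-parsed and dispatched at runtime, which hints at where the overhead relative to compiled code comes from.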

Interpreter overhead and code size typically make these languages unsuitable for use in microcontrollers. However, several languages have been ported to embedded platforms, and they can provide good starting points for embedded projects and vastly improve iteration speed due to language ergonomics.

See e.g. MicroPython, CircuitPython, Espruino (JavaScript), AtomVM (Erlang).

Compiled, managed

Systems languages (e.g. C, C++, Rust, Zig, D) characteristically do not require a runtime (code that actively executes beyond what you actually wrote — a garbage-collector, for instance, is a runtime component, as it has its own agenda and runs when it decides it needs to, not when you ask it to).

Managed languages (everything else: Java-family languages, C#/CLR languages, Go, all interpreted languages) have garbage collectors and usually additional runtime components. For example, you invoke java MyClass and the java executable (the JVM) loads and runs your compiled MyClass.class file. Every once in a while, it halts execution of your program and runs a GC. It also has its own housekeeping processes in the background: the JIT, for instance, is a runtime feature that can be considered independent of program execution.

Some languages (Go) compile the runtime into the final binary. This could be tenuously argued to mean that Go is a systems language, but importantly, you can’t get rid of the Go runtime if you don’t want it – the language doesn’t function.

There are some fascinating historical examples (Lisp Machines, Java Processors) of compiled, managed languages on bare-metal, but these are typically limited in the same way as interpreted languages.

Hardware Description Languages (HDL)

An HDL describes physical logic circuits, not a sequential program. The result can be implemented on an FPGA or taken further into an ASIC design flow. The most common HDLs are Verilog, SystemVerilog, and VHDL. Increasingly popular alternatives include nMigen (now Amaranth) and Chisel, among many others.

Since the description of physical circuits ultimately boils down to geometry, there’s a blurry boundary between programming and CAD as you work your way toward the bottom of the stack. Some frameworks embrace this whole-heartedly, such as CBA’s Asynchronous Logic Automata.

Parallel Computing on HPC Systems

Parallelization on CPUs used to require manual management of subprocesses and threads but is now easier thanks to improved libraries and tooling. Notably, in many cases OpenMP can parallelize your C/C++ code with the addition of a single-line preprocessor pragma.

- basic threading in C++

- CUDA hello world

- Satori hello world (including CUDA aware MPI)

Genetic algorithms

Genetic or evolutionary algorithms are an intriguing family of methods in which the parameters that solve a given problem are treated as a genome and undergo some form of mutation and natural selection. They can be applied to any problem in which a score (reward) is easily computed, while the problem itself can be arbitrarily complex. The term “evolutionary algorithm” remains, but newer methods make use of optimization to prune the search space significantly.
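The loop of scoring, selection, crossover and mutation can be sketched in a few lines. This is a minimal illustration, not a tuned implementation: the function name `evolve`, the bit-string genome, the keep-the-top-half selection rule and all default parameters are assumptions made for the example.

```python
import random


def evolve(score, genome_len=20, pop_size=30, generations=40,
           mutation_rate=0.05, seed=0):
    """Evolve bit-string genomes to maximize score(genome)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    history = []  # best score seen at the start of each generation

    for _ in range(generations):
        # Selection: rank by score and keep the better half unchanged.
        population.sort(key=score, reverse=True)
        history.append(score(population[0]))
        survivors = population[: pop_size // 2]

        children = []
        while len(survivors) + len(children) < pop_size:
            # Crossover: splice two distinct parents at a random cut point.
            a, b = rng.sample(survivors, 2)
            cut = rng.randrange(1, genome_len)
            child = a[:cut] + b[cut:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            children.append(child)

        population = survivors + children

    return max(population, key=score), history


if __name__ == "__main__":
    # Toy problem: maximize the number of 1 bits in the genome.
    best, history = evolve(sum)
    print(sum(best), history)
```

Because the survivors are carried over unmodified, the best score never decreases from one generation to the next; only the score function needs to change to target a different problem.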

Machine Learning

The closest thing we have to a general A.I., and by far the hottest topic in that field.

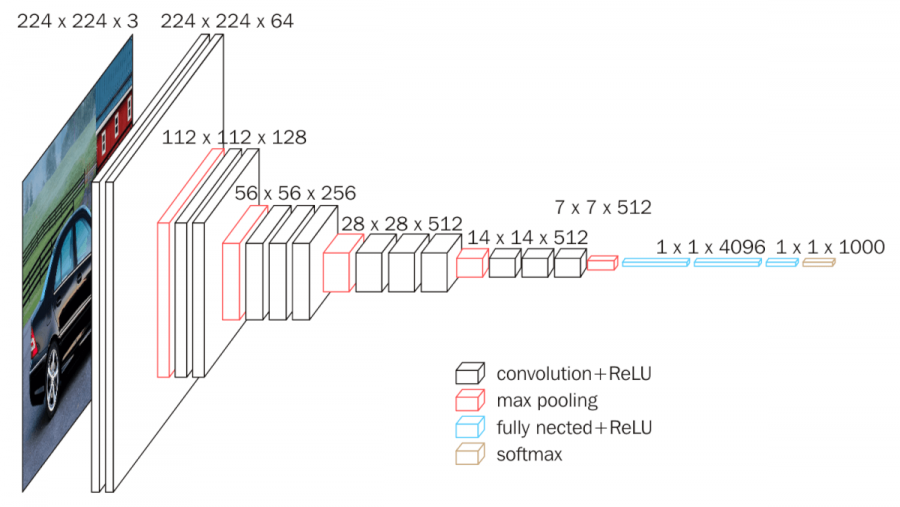

Backpropagation is the backbone of modern machine learning: it allows gradient evaluation on arbitrarily complex combinations of trainable functions, as long as each of them has a defined gradient function. For signals with time or spatial dimensions, convolutions are an efficient implementation for the trainable function, as they require fewer parameters to train.
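A hand-written example may help show what backpropagation computes. The sketch below assumes a deliberately tiny model, y = w2 * tanh(w1 * x) with a squared-error loss; the model, the function name `forward_backward` and the numeric values are illustrative choices, not taken from any particular framework.

```python
import math


def forward_backward(w1, w2, x, target):
    """Return the loss and its gradients with respect to w1 and w2."""
    # Forward pass: evaluate each function and keep the intermediates.
    h = math.tanh(w1 * x)
    y = w2 * h
    loss = (y - target) ** 2

    # Backward pass: apply the chain rule from the loss back to the weights.
    dloss_dy = 2.0 * (y - target)
    dloss_dw2 = dloss_dy * h               # y = w2 * h
    dloss_dh = dloss_dy * w2
    dloss_dw1 = dloss_dh * (1.0 - h * h) * x  # d tanh(u)/du = 1 - tanh(u)^2

    return loss, dloss_dw1, dloss_dw2


if __name__ == "__main__":
    w1, w2 = 0.5, -1.2
    for _ in range(200):
        loss, g1, g2 = forward_backward(w1, w2, x=0.8, target=0.3)
        # Plain gradient descent step with a fixed learning rate.
        w1 -= 0.1 * g1
        w2 -= 0.1 * g2
    print(loss)
```

Each stage only needs its own local derivative; frameworks automate exactly this bookkeeping for much larger compositions of functions.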

- supervised: input/output pairs are available as training datasets. There is a danger of overfitting if the dataset is too small, not representative or has high redundancy.

- unsupervised: no input/output pairs are available

- Classification:

- reinforcement learning: reward-based, we let the system learn by trial and error. Amazingly, this requires no dataset at all, only rules describing the problem and a well-crafted reward function. This is how DeepMind trained AlphaZero to master Go and chess from the games’ rules only. A related DeepMind system, AlphaStar, can even play StarCraft II.

- Generative Adversarial Networks (GAN): two sub-systems take turns refining their