Week 8

This week was input devices week, but I had previously implemented mutual capacitance sensing in Week 6 when I built a PCB sandwich for the arrow pads of my DDR machine.

So I decided to use this week to make more progress on the logic for my final project, the tiny DDR machine. I tried to flesh out in more detail how to implement the game logic and scoring (e.g. telling how close the user’s keypress is to the desired note timing), and how to run the game logic at the same time as the speaker plays the song. Here’s my documentation of my implementation of this pipeline. Here is my quaint little Serial Monitor reading my parsed data structure and printing every time an arrow is meant to be pressed, as a demo that my pipeline works:

I did the group assignment with Ben – he showed me how to use a logic analyzer, explained how I2C works, and we tried to understand the Adafruit Seesaw code for reading capacitive sensors.

Tiny DDR logic

This week I wanted to build a pipeline that goes from the most common file format for DDR songs / levels to playable game logic in Arduino. The game logic should detect how far off a user’s keypress is (in milliseconds) from the true timing of the note and map this to a score using the scoring guide.
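A minimal sketch of the scoring step, written in Python for brevity rather than as Arduino code. The window sizes and judgment names below are placeholders for illustration, not values taken from the scoring guide, and `judge` is a hypothetical helper name.

```python
# Hypothetical timing windows in milliseconds; the real values should come
# from the scoring guide. These are placeholders for illustration only.
JUDGMENT_WINDOWS_MS = [
    (20, "Marvelous"),
    (45, "Perfect"),
    (90, "Great"),
    (135, "Good"),
]


def judge(press_ms, note_ms):
    """Return (signed offset in ms, judgment name) for one keypress."""
    offset = press_ms - note_ms  # negative = early, positive = late
    for window, name in JUDGMENT_WINDOWS_MS:
        if abs(offset) <= window:
            return offset, name
    return offset, "Miss"
```

The signed offset is kept alongside the judgment so that the game could later tell the player whether they were early or late.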

First, I found the most downloaded simfile off zenius-i-vanisher and downloaded the .zip file for the level. Inside the file, I found:

- a few .png assets for the song name, background image, and “jacket” (assuming this is an icon for the song selection menu)

- .ogg file: compressed audio

- .avi file: audio + video

- .sm file: the Thing I Was Looking For

A bit more about the .sm file. The format of it looks like this:

//----- song ID: xeph -----//

#TITLE:Xepher;

#ARTIST:Tatsh;

#BANNER:Xepher.png;

#BACKGROUND:Xepher-bg.png;

#CDTITLE:./CDTITLES/BeatmaniaIIDX.png;

#MUSIC:Xepher.ogg;

#SAMPLESTART:33.9;

#SAMPLELENGTH:15;

#BPMS:0=170;

//---------------dance-single - ----------------

#NOTES:

dance-single:

:

Beginner:

4:

:

0000

0000

0000

0000

,

...

1000

0000

0000

1001

,

0000

0000

0000

0110

,

...

The BPMS property specifies each BPM and the beat at which that BPM begins (so that tempo changes mid-song can be handled). In the example above, 0=170 means a constant BPM of 170 starting at beat 0.
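To make the BPMS idea concrete, here is a small sketch of converting a beat number into seconds. It assumes the tempo list is sorted and starts at beat 0, and it ignores the song offset and stops that a real simfile may also define. The function name `beat_to_seconds` is my own, not something from a library.

```python
def beat_to_seconds(beat, bpms):
    """Convert a beat number to seconds given [(start_beat, bpm), ...].

    Assumes bpms is sorted by start_beat and begins at beat 0. Song offset
    and stops are ignored in this sketch.
    """
    seconds = 0.0
    for i, (start, bpm) in enumerate(bpms):
        # This tempo segment lasts until the next change, or until `beat`.
        end = bpms[i + 1][0] if i + 1 < len(bpms) else beat
        end = min(end, beat)
        if end <= start:
            break
        seconds += (end - start) * 60.0 / bpm
    return seconds


print(round(beat_to_seconds(24, [(0, 170)]), 3))  # 8.471
```

At a constant 170 BPM, beat 24 lands at 24 × 60 / 170 ≈ 8.471 seconds, which agrees with the time on the Beat(24) TimedNote shown later in this post.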

The notes are encoded in a way I can’t seem to find documentation for, but luckily there is a handy Python simfile library which contains a timing engine that decodes each note’s metadata and time (in seconds) from the note data.
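From what I can tell, the encoding works like this: commas separate measures, each measure spans four beats, the rows inside a measure are spaced evenly across it, and each character in a row is one arrow column. The sketch below is written under that assumption and is not a replacement for the library. The name `rows_to_beats` and the condensed `chart` string are made up for illustration.

```python
from fractions import Fraction


def rows_to_beats(note_text):
    """Yield (beat, row) for every non-empty row of .sm note data.

    Assumes 4 beats per measure, measures separated by commas, and rows
    spaced evenly within their measure.
    """
    for m, measure in enumerate(note_text.split(",")):
        rows = measure.split()
        for r, row in enumerate(rows):
            if set(row) != {"0"}:  # skip rows with no arrows
                yield Fraction(4 * m) + Fraction(4 * r, len(rows)), row


# Condensed, hypothetical chart: three measures of four rows each.
chart = "0000 0000 0000 0000 , 1000 0000 0000 1001 , 0000 0000 0000 0110"
print([(float(b), row) for b, row in rows_to_beats(chart)])
```

Fractions are used for the beat so that measures with 12 or 16 rows do not pick up floating-point rounding errors.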

Each of the TimedNotes looks like this:

TimedNote(time=8.471, note=Note(beat=Beat(24), column=0, note_type=NoteType.TAP))

We have the time (in seconds) and, inside the Note object, the beat, the column (arrow direction, where 0 is left, 1 is down, 2 is up, 3 is right), and the note type. For the beginner tracks the note type is always TAP, but it could also be, e.g., HOLD_HEAD or TAIL, which mark the beginning and end of a note held across multiple beats.

My code converts this into a plaintext file representing a table with three columns, where each row is one arrow: the timing of the arrow (in milliseconds since song start), the note type, and the arrow direction. Here is an example simfile which is the input to my parsing script and here is the .txt output for the beginner level of that simfile.
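As a rough illustration of that conversion step, the sketch below turns one arrow into one table row. It uses a plain named tuple as a stand-in for the library's TimedNote so that it runs without any dependencies, and the tab-separated layout is an assumption, not necessarily the layout of my actual .txt output. `Arrow`, `DIRECTIONS`, and `to_row` are hypothetical names.

```python
from collections import namedtuple

# Stand-in for the library's TimedNote, flattened to the fields used here.
Arrow = namedtuple("Arrow", "time_s column note_type")

DIRECTIONS = ["left", "down", "up", "right"]  # column index -> direction


def to_row(arrow):
    """Format one arrow as 'milliseconds<TAB>note type<TAB>direction'."""
    ms = round(arrow.time_s * 1000)  # seconds -> whole milliseconds
    return f"{ms}\t{arrow.note_type}\t{DIRECTIONS[arrow.column]}"


print(to_row(Arrow(8.471, 0, "TAP")))
```

Storing whole milliseconds keeps the Arduino side in integer arithmetic, which compares directly against a millisecond counter.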

To quickly test if this works, I wrote a small .ino file which simply loads the parsed data structure, starts a millisecond counter, and prints the arrow type and direction to the Serial Monitor when the arrows are meant to be pressed. Since I couldn’t figure out how to add a .txt file into Wokwi I just hardcoded the array into the code itself. It’s not a particularly exciting demo, but it works!
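The logic of that test can be sketched as follows. This is Python standing in for the Arduino loop, not the .ino file itself: the `range` loop plays the role of polling the millisecond counter, and the `CHART` values and the `due_arrows` helper are hypothetical.

```python
def due_arrows(chart, start_index, now_ms):
    """Return (arrows due by now_ms, next index to check).

    chart is a list of (time_ms, note_type, direction) sorted by time_ms.
    """
    i = start_index
    while i < len(chart) and chart[i][0] <= now_ms:
        i += 1
    return chart[start_index:i], i


# Hardcoded chart, as in the Wokwi test; these rows are made up.
CHART = [(8471, "TAP", "left"), (9529, "TAP", "left"), (9529, "TAP", "right")]

index = 0
for now_ms in range(0, 10000, 10):  # stand-in for polling the counter
    due, index = due_arrows(CHART, index, now_ms)
    for time_ms, note_type, direction in due:
        print(now_ms, note_type, direction)
```

Keeping an index into the sorted chart means each pass only looks at the next unplayed arrow instead of rescanning the whole array.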

Onwards…

Ben teaches me about I2C

I learned that I2C is both a hardware unit and a communication protocol. We discussed the nice metaphor that I2C is how microcontrollers talk to each other, and that to “talk” to another device, one needs:

- a transmitter (a mouth)

- a receiver (ears)

- an agreed-upon protocol (shared language)

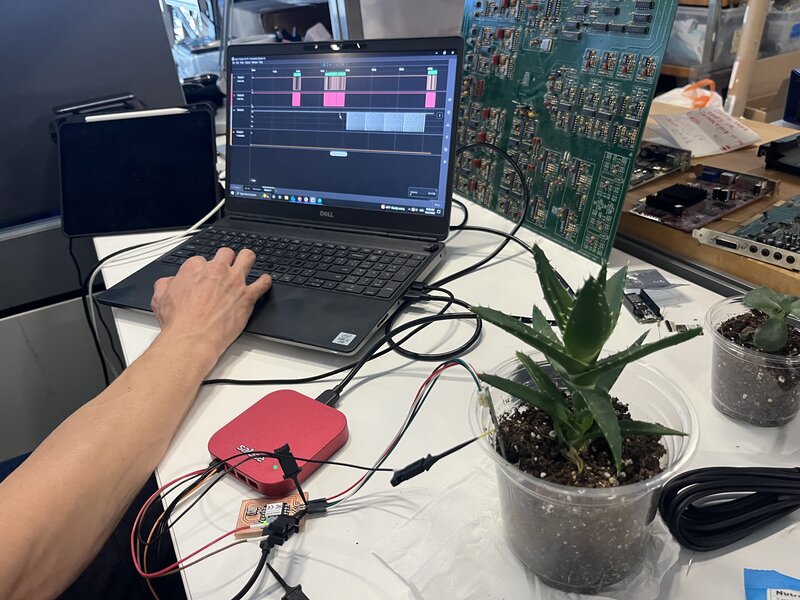

Using the Saleae logic analyzer, he showed me how a very simple I2C exchange he was running works between a capacitive soil moisture sensor and an RP2040. I observed the three lines: clock, data, and ground. Ben explained that the two devices do a sort of choreographed handshake and then dance in order to keep the data transfer reliable. First there is a START condition, followed by an address byte that the receiving device acknowledges (ACK), to indicate that data is about to be sent. Then the clock line pulses low / high while the data line sends bits. When the transfer ends with a STOP condition, the clock and data lines both return to 1 and no more data is sent.
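To convince myself of what the logic analyzer is doing when it decodes a capture, here is a rough sketch assuming the standard I2C framing: a START condition (data falls while clock is high), then 8 data bits plus one acknowledge bit per byte, then a STOP condition (data rises while clock is high). The waveform is idealised and generated in code, not a real capture, and the address 0x36 and the helper names are hypothetical.

```python
def encode_i2c(data_bytes):
    """Build an idealised waveform: START, each byte + ACK, STOP."""
    scl, sda = [1, 1], [1, 0]  # idle high, then START (SDA falls)
    for byte in data_bytes:
        for bit in [int(b) for b in f"{byte:08b}"] + [0]:  # trailing 0 = ACK
            scl += [0, 1]      # SDA changes while SCL is low
            sda += [bit, bit]
    scl += [0, 1, 1]
    sda += [0, 0, 1]           # STOP (SDA rises while SCL is high)
    return scl, sda


def decode_i2c(scl, sda):
    """Decode one transaction from equal-length lists of 0/1 samples.

    Returns a list of (byte, acked) tuples found between START and STOP.
    """
    bits, frames, started = [], [], False
    for i in range(1, len(scl)):
        if scl[i - 1] == 1 and scl[i] == 1:
            if sda[i - 1] == 1 and sda[i] == 0:    # START condition
                started, bits = True, []
            elif sda[i - 1] == 0 and sda[i] == 1:  # STOP condition
                break
        elif started and scl[i - 1] == 0 and scl[i] == 1:
            bits.append(sda[i])                    # sample on rising clock
            if len(bits) == 9:                     # 8 data bits + ACK bit
                byte = int("".join(map(str, bits[:8])), 2)
                frames.append((byte, bits[8] == 0))  # ACK = SDA held low
                bits = []
    return frames


# First byte: hypothetical 7-bit address 0x36 shifted left, write bit = 0.
scl, sda = encode_i2c([0x36 << 1, 0x0F])
print(decode_i2c(scl, sda))
```

The key rule the decoder relies on is that the data line only changes while the clock is low, so any change while the clock is high must be a START or STOP.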

It was so fun seeing this extremely low-level logic and communication in action – one of the last courses I took at my previous university was Compilers, and it was really cool to see the layers below the lowest layers I had previously encountered! I’m getting closer and closer to the wires, it seems…