Week 14

Robot Arms (Wildcard Week).

Engineering Files

Voxel Generation and Assembly

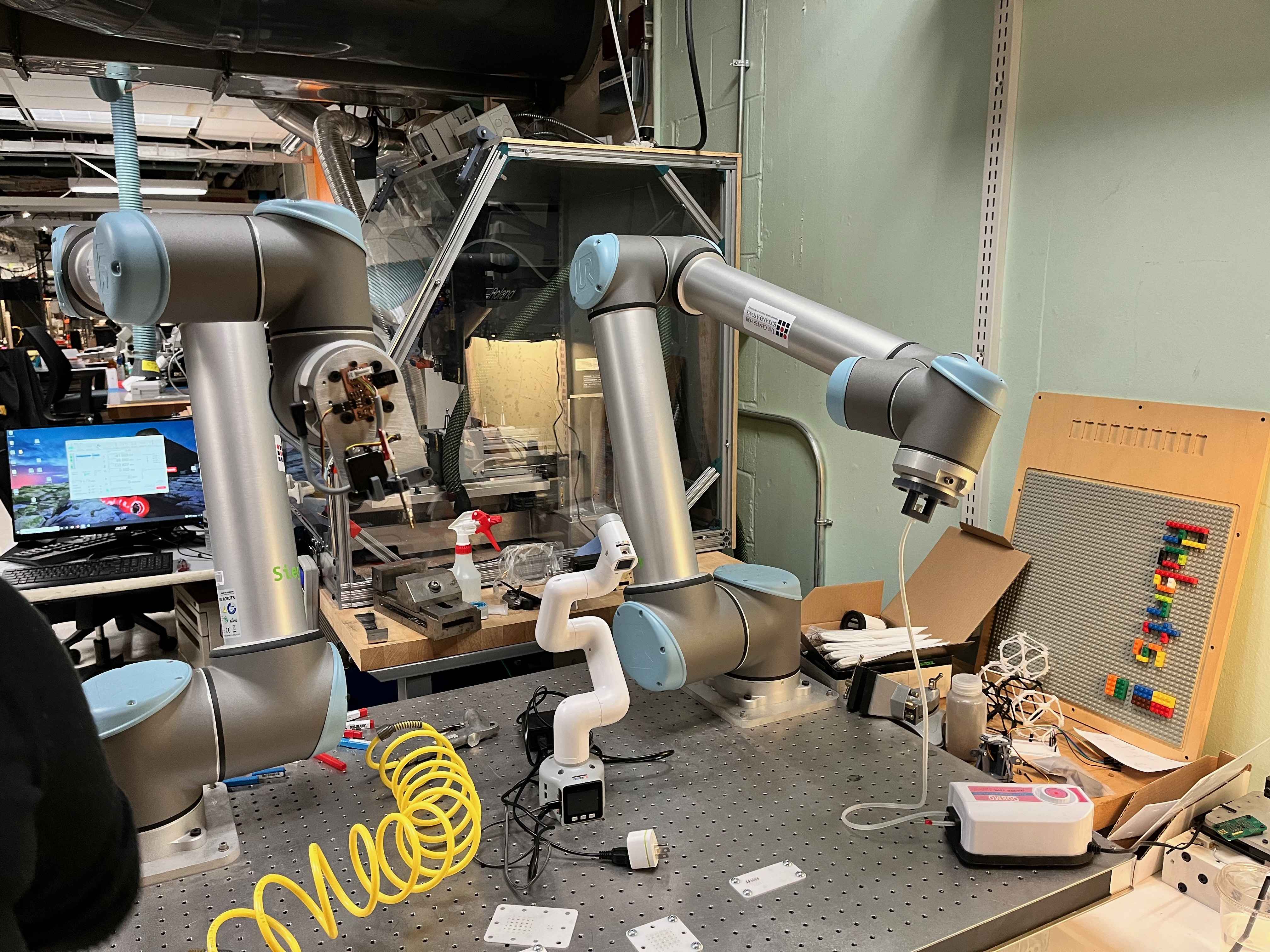

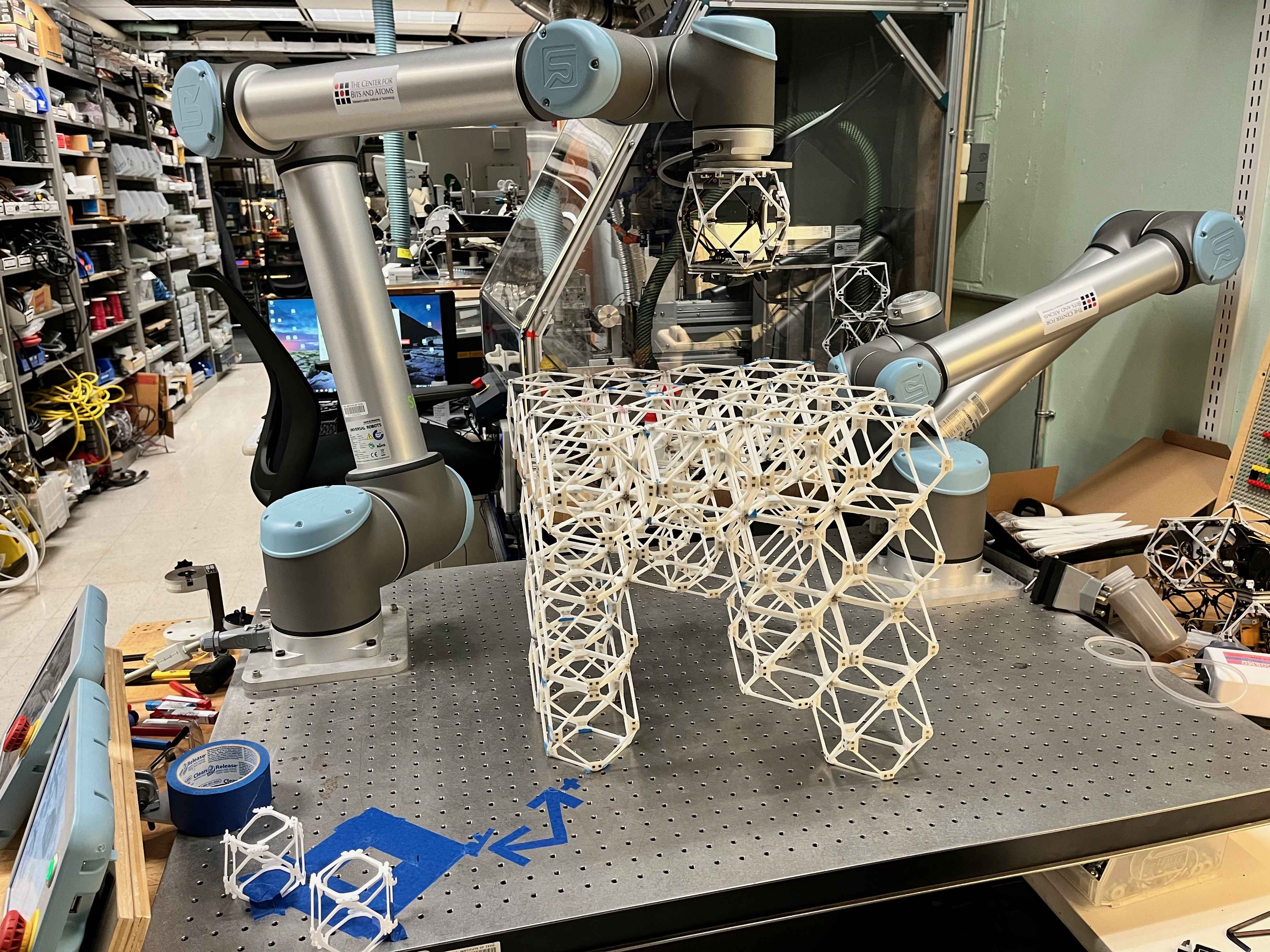

This week's project was a collaboration between me and the incredible and wonderful Alex Htet Kyaw. We decided to develop a speech-to-MeshGPT-to-mesh-to-voxel-to-physical prototype pipeline. His part was developing interfaces that take in speech from his laptop, use generative and online resources to find models of the requested objects, and then use Rhino/Grasshopper to convert those models into low-resolution voxels. My part was taking those voxels and commanding the UR5 robot arm to assemble them in 3D space, using the physical, 3D-printed voxel blocks available in the CBA lab.
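Alex's Rhino/Grasshopper definition isn't reproduced here. As a rough illustration of what the mesh-to-voxel step does, here is a minimal Python sketch that assumes the mesh has already been sampled into a list of (x, y, z) points. The `voxelize` helper and the choice of simply flooring each point into a cube of side `voxel_size` are my assumptions, not the Grasshopper workflow we actually used.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Point], voxel_size: float) -> Set[Voxel]:
    """Return the integer grid cells (i, j, k) that contain at least one point.

    Hypothetical helper: a coarse voxel_size gives the low-resolution
    occupancy grid needed when building with a small stock of blocks.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    pts = list(points)
    if not pts:
        return set()
    # Shift the grid so the model's minimum corner falls in cell (0, 0, 0).
    min_x = min(p[0] for p in pts)
    min_y = min(p[1] for p in pts)
    min_z = min(p[2] for p in pts)
    return {
        (
            math.floor((x - min_x) / voxel_size),
            math.floor((y - min_y) / voxel_size),
            math.floor((z - min_z) / voxel_size),
        )
        for x, y, z in pts
    }


# Example: four sample points with 1-unit voxels.
# voxelize([(0, 0, 0), (0.5, 0.5, 0.5), (1.2, 0, 0), (0, 0, 2.1)], 1.0)
# -> {(0, 0, 0), (1, 0, 0), (0, 0, 2)}
```

Because this only marks cells that contain a sample, points taken from the surface alone produce a hollow shell; a solid fill would need an inside/outside test on top of it.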

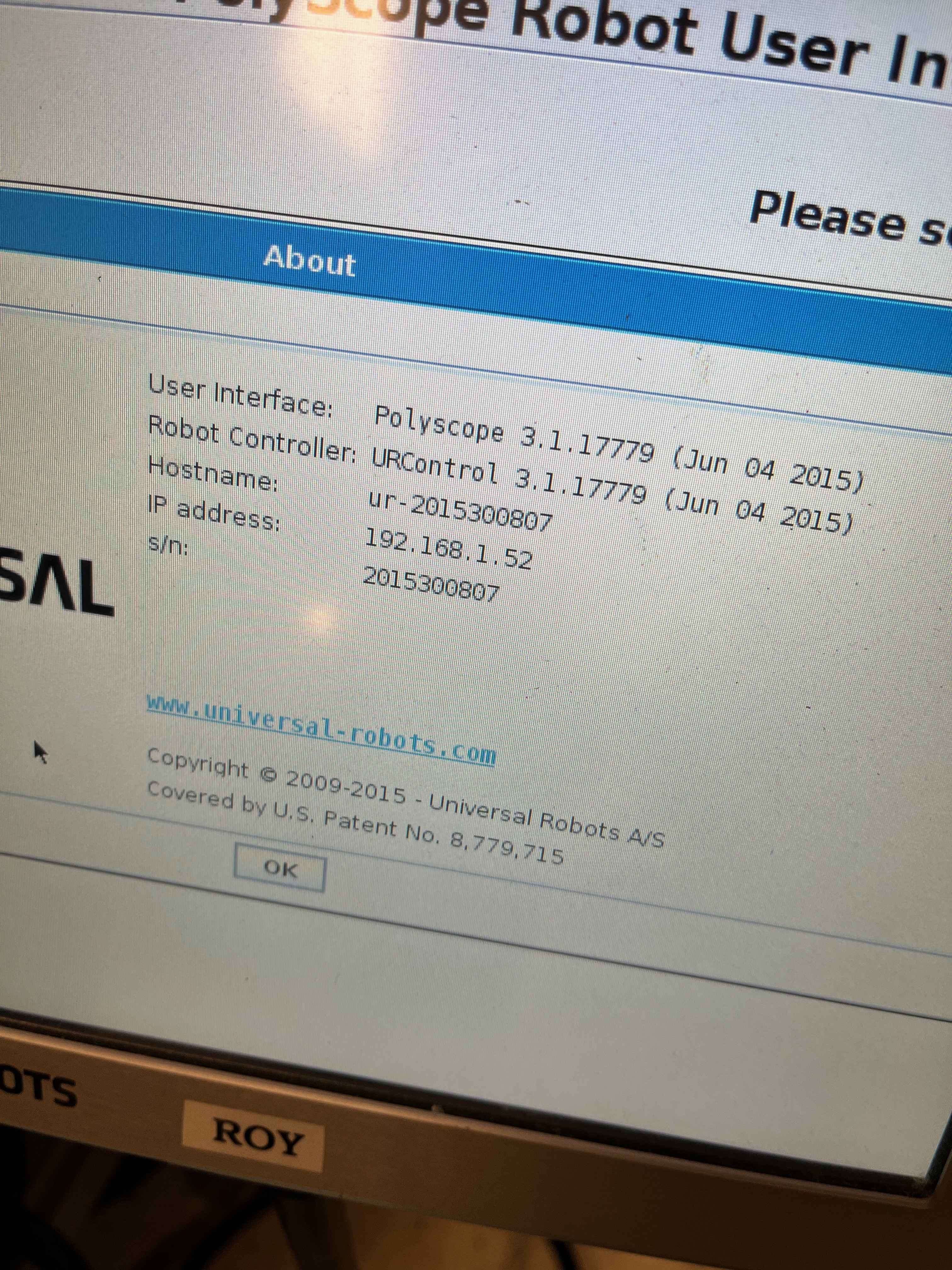

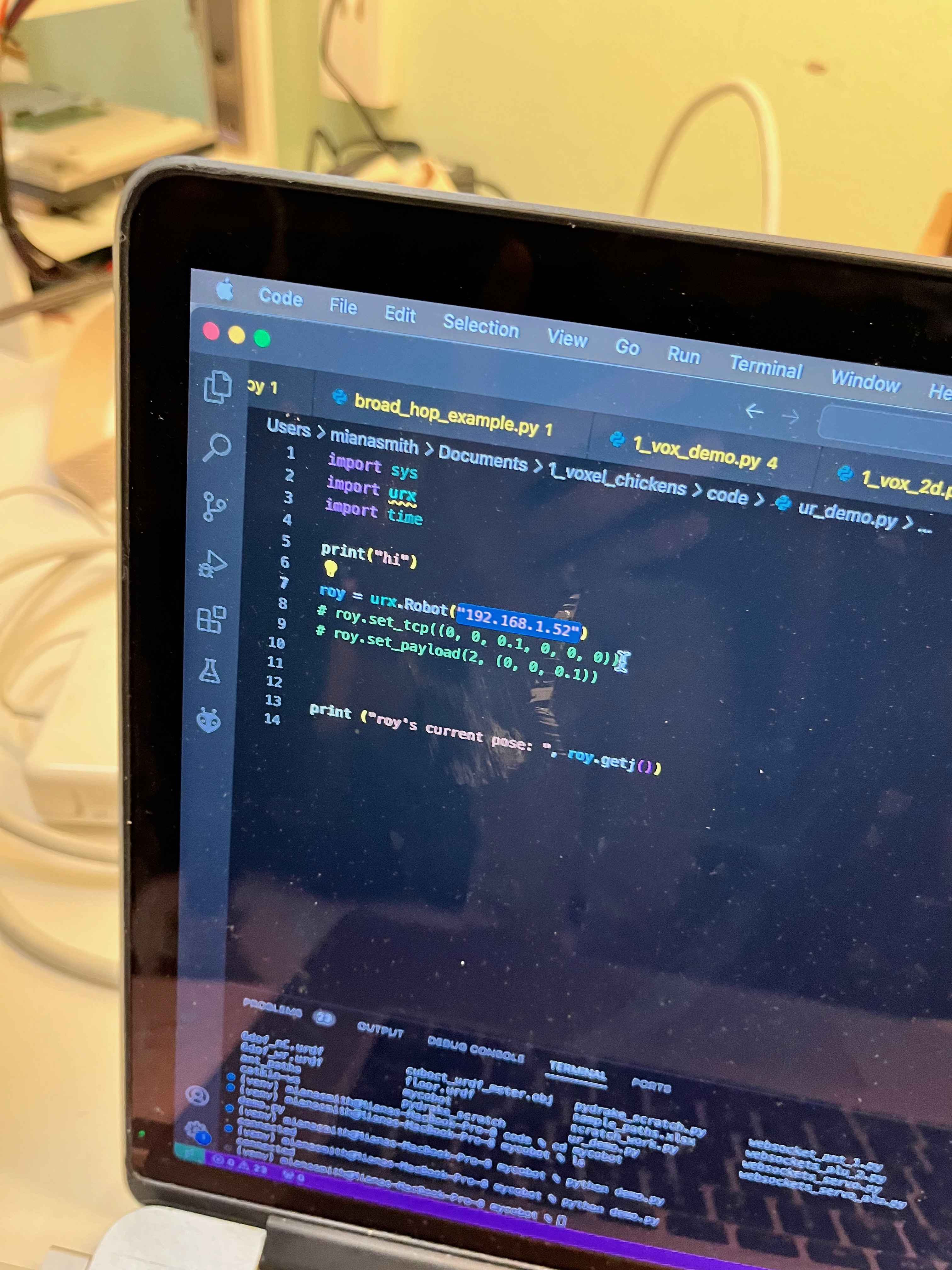

While I was worried about the complexity of controlling the UR5, the interfaces turned out to be fairly easy to manage. I used the Python urx package to control the UR5 and could connect directly to the robots over Wi-Fi.
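For reference, opening a connection with urx and issuing a linear move looks roughly like the sketch below. `urx.Robot`, `getl()`, `movel()` and `close()` come from python-urx, but the helper names, the IP address, and the speed values are placeholders chosen for illustration. The helper only needs an object with `getl()` and `movel()`, so it can be exercised without a robot attached.

```python
from typing import List


def connect(host: str):
    """Open a connection to a UR arm with python-urx (pip install urx)."""
    import urx  # imported lazily so raise_tool() can be used without urx installed

    return urx.Robot(host)


def raise_tool(robot, dz: float, acc: float = 0.05, vel: float = 0.05) -> List[float]:
    """Move the tool straight up by dz metres, keeping its orientation.

    `robot` only needs getl() and movel(), both of which urx.Robot provides.
    """
    pose = list(robot.getl())  # [x, y, z, rx, ry, rz] in metres / radians
    pose[2] += dz
    robot.movel(pose, acc=acc, vel=vel)  # urx waits for the move to finish by default
    return pose


# Usage on a real arm (the IP address is a placeholder):
# rob = connect("192.168.1.10")
# try:
#     raise_tool(rob, 0.05)
# finally:
#     rob.close()
```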

Unfortunately, the package's maintainers had recently tried to integrate a new Python library for transformation calculations, which broke much of the codebase's functionality. So first, I had to spend some time patching their source code so that the basic translation and rotation functions would work on the UR5s.

I debated making a proper pull request for this, but decided they don't deserve it.

After that, I immediately set up a number of safety features and checks to make sure the robot wouldn't crash into its surroundings or into itself. More than once, typos in my code led to scary E-stop moments and smacks against nearby structures.
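My exact checks aren't reproduced here, but a minimal version of the idea is to refuse any command whose target leaves an allowed box or jumps too far in a single move. The sketch assumes poses given as [x, y, z, rx, ry, rz] in the robot base frame (metres, radians); the `Workspace` class and every numeric limit are hypothetical and would have to be measured for a real cell.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Workspace:
    """Hypothetical safety envelope in the robot base frame (metres)."""

    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float  # keep the tool above the table
    z_max: float
    max_step: float  # longest straight-line move allowed in a single command

    def check(self, current: Sequence[float], target: Sequence[float]) -> None:
        """Raise ValueError if moving from current to target looks unsafe."""
        x, y, z = target[0], target[1], target[2]
        inside = (
            self.x_min <= x <= self.x_max
            and self.y_min <= y <= self.y_max
            and self.z_min <= z <= self.z_max
        )
        if not inside:
            raise ValueError(f"target ({x:.3f}, {y:.3f}, {z:.3f}) is outside the workspace")
        step = math.dist(current[:3], target[:3])
        if step > self.max_step:
            raise ValueError(f"move of {step:.3f} m exceeds max_step {self.max_step:.3f} m")


# Example limits (placeholders, not measured from the lab's robot cell):
# ws = Workspace(-0.5, 0.5, -0.6, -0.1, 0.02, 0.5, max_step=0.3)
# ws.check(robot.getl(), target)  # call before every robot.movel(target, ...)
```

Checking before every command means a typo in a coordinate raises an exception in Python instead of sending the arm into a table.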

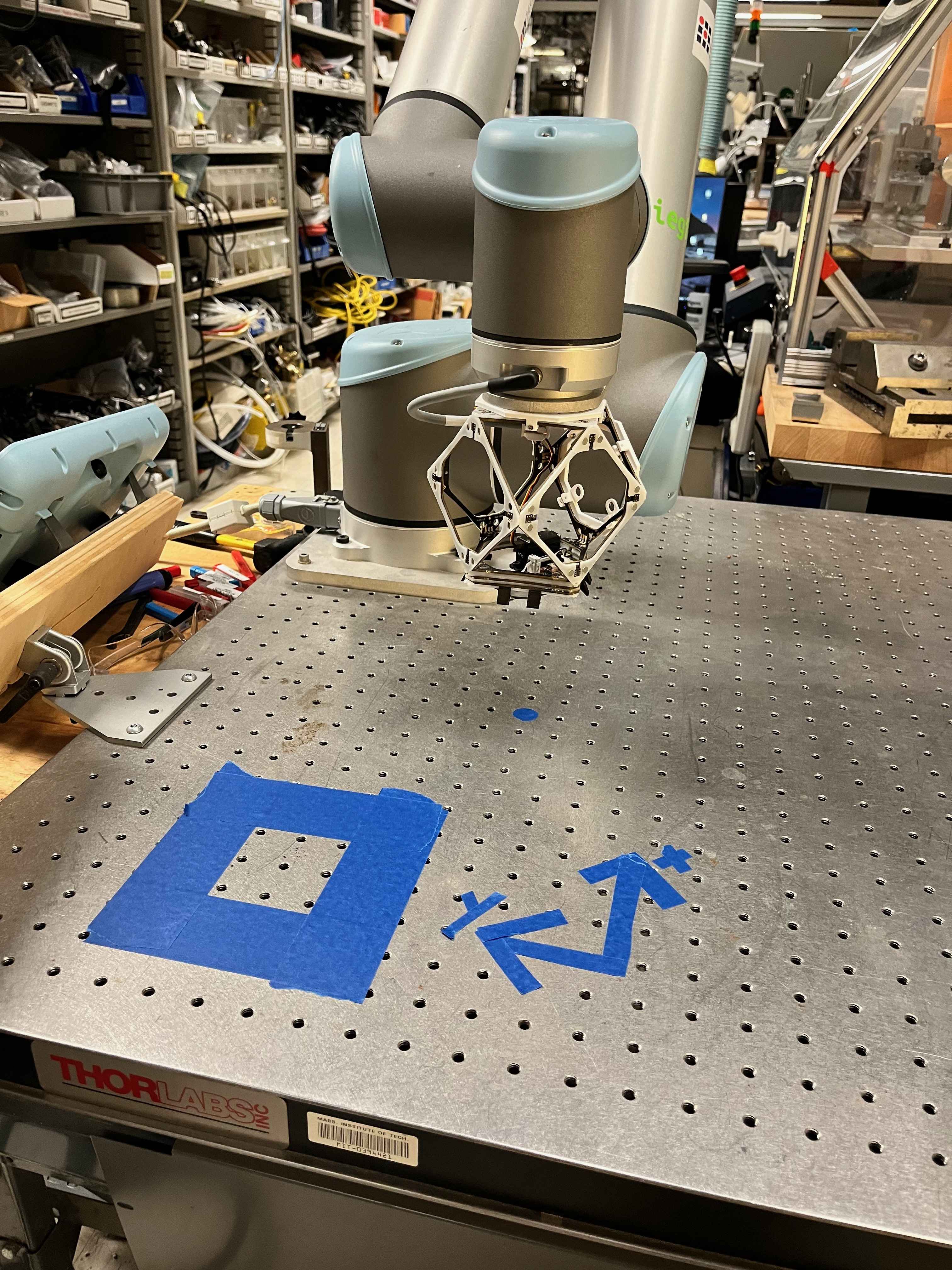

After defining a few custom functions to move the arm safely from one XYZ location to another, I could start calibrating the robot so that it initialized correctly, and actuating the attached servo end effector to pick up a voxel.
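One common way to make a point-to-point move "safe" is to lift to a clearance height, travel horizontally, and only then descend, so the tool never cuts diagonally through blocks that are already placed. The sketch below assumes that strategy; `safe_path`, `follow`, and the clearance `safe_z` are illustrative names, not my exact functions.

```python
from typing import List, Sequence

Pose = List[float]  # [x, y, z, rx, ry, rz]


def safe_path(start: Sequence[float], goal: Sequence[float], safe_z: float) -> List[Pose]:
    """Up, across, down: three waypoints that avoid diagonal moves near the build."""
    travel_z = max(safe_z, start[2], goal[2])  # never travel lower than either endpoint
    lift = [start[0], start[1], travel_z, *start[3:6]]
    over = [goal[0], goal[1], travel_z, *goal[3:6]]
    down = list(goal[:6])
    return [lift, over, down]


def follow(robot, path: Sequence[Pose], acc: float = 0.05, vel: float = 0.05) -> None:
    """Send each waypoint as a linear move; urx's movel() waits for completion by default."""
    for pose in path:
        robot.movel(pose, acc=acc, vel=vel)


# Usage with a urx.Robot (safe_z is a placeholder clearance in metres):
# follow(rob, safe_path(rob.getl(), target_pose, safe_z=0.25))
```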

Once each functional unit of my code was thoroughly tested, our progress sped up considerably. A simple for loop over our desired voxel locations, plus some logic for moving safely from point A to point B, let us construct the specified voxel structure very quickly.
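The assembly loop can be sketched as: sort the voxels bottom layer first so each block lands on support, convert each grid index to a tool pose, then pick a block from the hand-fed source location and place it. This assumes a fixed block pitch and a known placing pose for voxel (0, 0, 0); `pick` and `place` are hypothetical callables standing in for the safe-move and gripper logic, whose details depend on how the servo is driven.

```python
from typing import Callable, Iterable, List, Sequence, Tuple

Voxel = Tuple[int, int, int]
Pose = List[float]


def build_order(voxels: Iterable[Voxel]) -> List[Voxel]:
    """Sort by layer (z), then row (y), then column (x) so lower blocks go first."""
    return sorted(set(voxels), key=lambda v: (v[2], v[1], v[0]))


def voxel_to_pose(v: Voxel, origin: Sequence[float], pitch: float,
                  tool_rot: Sequence[float]) -> Pose:
    """Grid index -> tool pose; origin is the assumed placing pose for voxel (0, 0, 0)."""
    i, j, k = v
    return [origin[0] + i * pitch, origin[1] + j * pitch, origin[2] + k * pitch, *tool_rot]


def assemble(voxels: Iterable[Voxel], origin: Sequence[float], pitch: float,
             tool_rot: Sequence[float], source: Pose,
             pick: Callable[[Pose], None], place: Callable[[Pose], None]) -> List[Pose]:
    """Pick each block from the hand-fed source pose and place it at its grid cell."""
    placed = []
    for v in build_order(voxels):
        pick(source)  # someone must have loaded a fresh block here
        target = voxel_to_pose(v, origin, pitch, tool_rot)
        place(target)
        placed.append(target)
    return placed
```

Ordering by layer matters physically: a block in layer k can only be placed once the block beneath it exists.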

After construction, our final result (it's supposed to be a stool) looked like this:

The main downside of our project is that we had to feed the robot the "source blocks" by hand. If someone could design a clever mechanism to do this automatically, I think it would make a great final project for MIT's How To Make Almost Anything 2023 MAS 4.623 class.

How to Make Almost Anything 2023