Processing

Point cloud

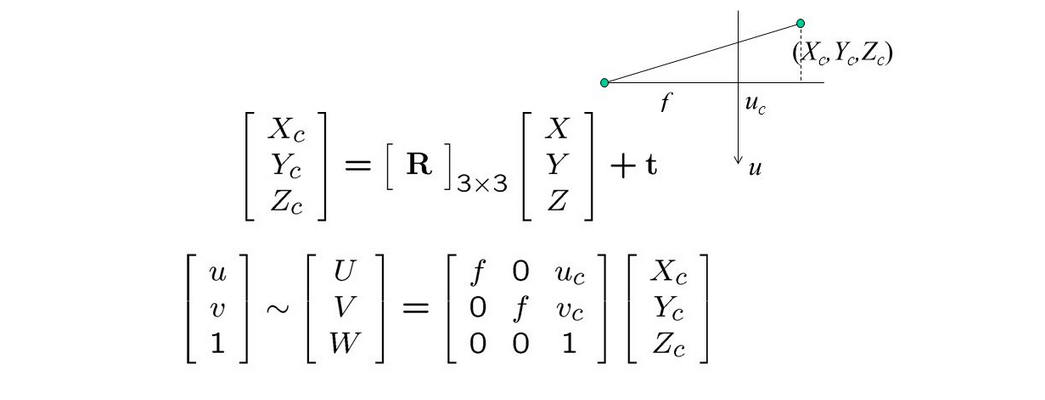

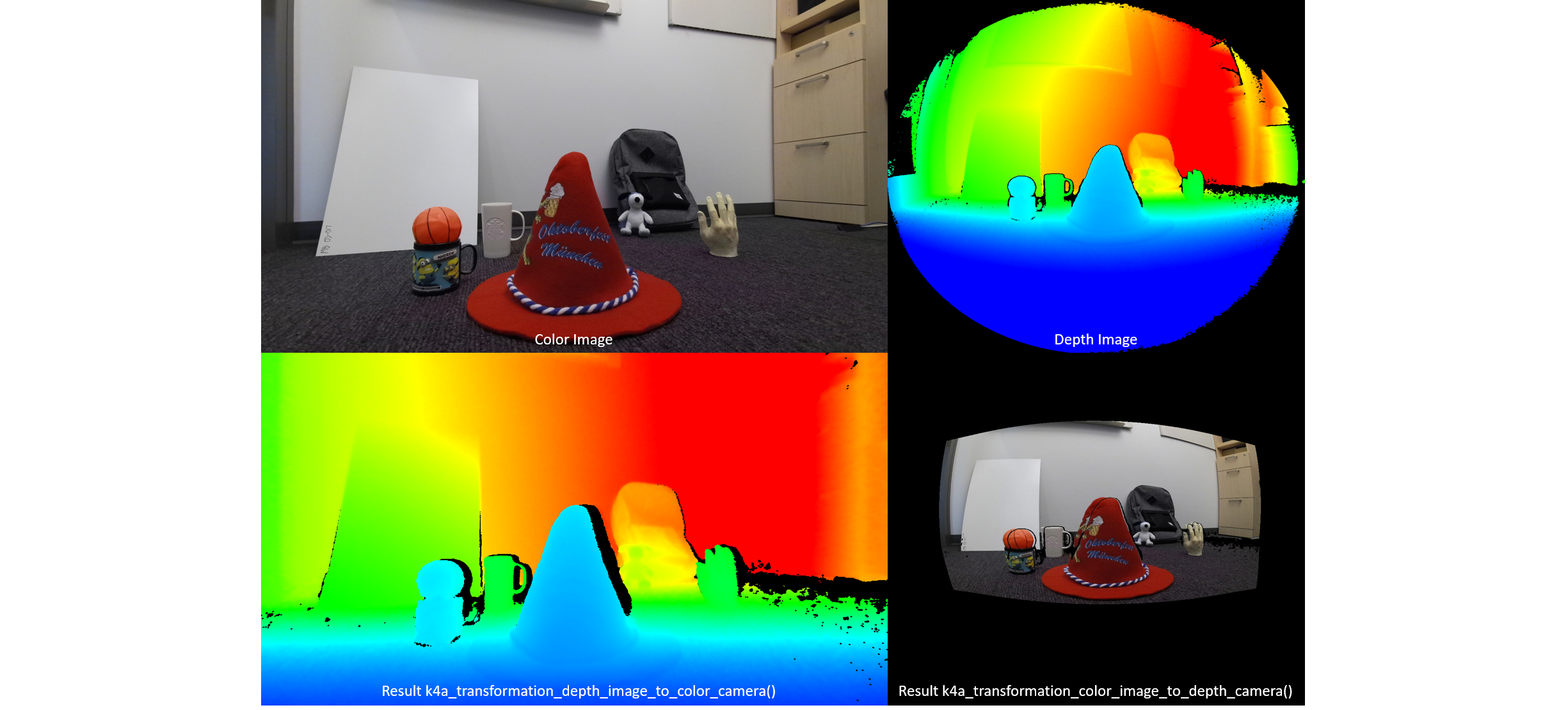

Any of the camera-based depth estimation methods discussed previously produces a depth map. Using the camera’s calibration parameters, this depth map can be back-projected into 3D space with the pinhole camera model.
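
As a rough illustration of this back-projection, here is a minimal NumPy sketch. The function name `depth_to_points` and the parameter names (`fx`, `fy` for focal lengths in pixels, `cx`, `cy` for the principal point) are assumptions made for this example. It also assumes that depth is measured along the optical axis and that a value of 0 marks a missing measurement.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project an H x W depth map to an N x 3 point cloud (pinhole model).

    Assumes depth is the Z coordinate along the optical axis, not the range along the ray.
    """
    h, w = depth.shape
    # u is the pixel column index, v is the pixel row index
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    # Assumption: depth 0 means "no measurement", so those pixels are dropped
    return points[points[:, 2] > 0]
```

If the sensor reports range along the viewing ray instead, the values would first need to be converted to Z before applying these formulas.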

If the lens distortion is significant, it can be corrected first so that the pinhole model applies again. There are common frameworks for calibrating the optical parameters of the camera. In many cases, a color camera is available to provide color, but its image must be warped to align with the depth map.
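
One way to picture the warping step is to project each 3D point into the color camera and look up the pixel it lands on. The sketch below is only an illustration: `colorize_points`, `K_color` (3x3 intrinsics of the color camera) and the extrinsics `R`, `t` (depth frame to color frame) are hypothetical names. It assumes an undistorted color image, ignores skew, and does no occlusion handling.

```python
import numpy as np

def colorize_points(points, color_image, K_color, R, t):
    """Look up a color for each 3D point by projecting it into the color camera.

    points: N x 3 in the depth camera frame.
    R (3x3), t (3,): assumed transform from the depth frame to the color frame.
    Returns (colors, valid): N x 3 colors and a boolean mask of points that
    project inside the image.
    """
    p = points @ R.T + t                    # points in the color camera frame
    z = p[:, 2]
    in_front = z > 0
    z_safe = np.where(in_front, z, 1.0)     # avoid dividing by zero or negative depth
    u = K_color[0, 0] * p[:, 0] / z_safe + K_color[0, 2]
    v = K_color[1, 1] * p[:, 1] / z_safe + K_color[1, 2]
    ui = np.rint(u).astype(int)             # nearest-pixel lookup
    vi = np.rint(v).astype(int)
    h, w = color_image.shape[:2]
    valid = in_front & (ui >= 0) & (ui < w) & (vi >= 0) & (vi < h)
    colors = np.zeros((len(points), 3), dtype=color_image.dtype)
    colors[valid] = color_image[vi[valid], ui[valid]]
    return colors, valid
```

Because occlusions are ignored, a point hidden from the color camera would still pick up the color of whatever surface sits in front of it.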

When a camera is not involved, each point is a single measurement (e.g. LiDAR or digitizer). In all cases, the geometrical information is just as important as the measurement itself, and this is still an active research field.

Registration

When merging several scans or modalities, finding the spatial transformation to apply to each scan is a major challenge.

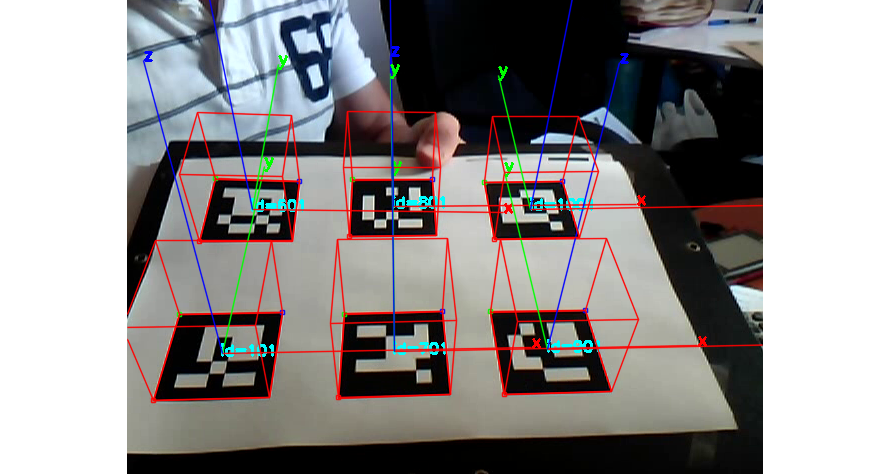

- Marker-based: the scene includes easy-to-detect marker points, with at least 3 visible per scan. The markers can either be simple checkerboards, or encode information (coded targets, ArUco markers). The latter is more robust as it makes each marker unique. Neural networks can lower the variance of the estimated marker location.

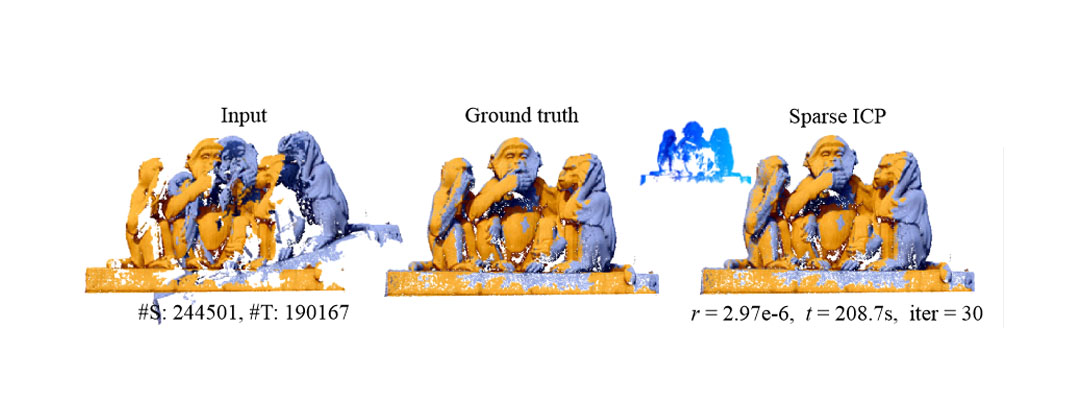
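
Once the same markers have been located in two scans, the rigid transform between them can be estimated in closed form. The sketch below uses the SVD-based Kabsch method as one common choice; the source does not specify a particular solver, and the function name `rigid_transform_from_markers` is hypothetical. It assumes the marker positions are already matched one-to-one and are not collinear.

```python
import numpy as np

def rigid_transform_from_markers(src, dst):
    """Least-squares rotation R and translation t so that R @ src[i] + t is close to dst[i].

    src, dst: N x 3 arrays of matched marker positions (N >= 3, not collinear).
    """
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)        # 3x3 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # guard against returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t
```

Applying the result as `src @ R.T + t` brings the first scan into the frame of the second.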

- Point cloud registration: in the general case, the transform that best aligns several overlapping point clouds can still be estimated with iterative methods such as Iterative Closest Point (ICP). For partial overlap or noisy scans, a Gaussian mixture model has been proposed to handle the local ambiguity.
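
To make the iterative idea concrete, here is a minimal point-to-point ICP sketch in NumPy. It is a simplified illustration, not a production implementation: the names `best_rigid_fit` and `icp` are hypothetical, nearest neighbours are found by brute force (only practical for small clouds), and there is no outlier rejection, so it assumes a good initial alignment and near-complete overlap.

```python
import numpy as np

def best_rigid_fit(src, dst):
    """Closed-form least-squares R, t mapping matched src points onto dst (SVD-based)."""
    sc, dc = src.mean(axis=0), dst.mean(axis=0)
    U, _, Vt = np.linalg.svd((src - sc).T @ (dst - dc))
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, dc - R @ sc

def icp(source, target, iterations=30, tol=1e-8):
    """Point-to-point ICP. Returns R, t such that source @ R.T + t aligns with target."""
    R_total, t_total = np.eye(3), np.zeros(3)
    moved = source.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Match every moved source point to its closest target point (O(N*M) memory and time)
        d2 = ((moved[:, None, :] - target[None, :, :]) ** 2).sum(axis=-1)
        nearest = d2.argmin(axis=1)
        err = d2[np.arange(len(moved)), nearest].mean()
        if abs(prev_err - err) < tol:       # stop when the error no longer changes
            break
        prev_err = err
        # Re-estimate the transform from the current matches and apply it
        R, t = best_rigid_fit(moved, target[nearest])
        moved = moved @ R.T + t
        # Accumulate: new total transform is the latest step applied after the previous total
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total
```

With a poor starting pose the nearest-neighbour matches are mostly wrong and the loop can settle in a local minimum, which is one reason probabilistic variants are used for harder cases.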

Noise removal

Any 3D scan will exhibit some form of noise, which can be handled in several ways:

- Outlier removal: remove points that are too distant or suspiciously noisy compared to the local area.

- Denoising: displace points based on an estimate of the local noise.
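
As an illustration of the outlier-removal option, the sketch below applies one common statistical criterion: a point is discarded when its mean distance to its nearest neighbours is much larger than is typical for the cloud. The function name `remove_statistical_outliers` and the parameters `k` and `std_ratio` are assumptions for this example, and the pairwise distance matrix makes it suitable for small clouds only.

```python
import numpy as np

def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbours is unusually large.

    Returns (filtered_points, keep_mask).
    """
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))   # N x N pairwise distances
    dist.sort(axis=1)
    # Column 0 is each point's zero distance to itself, so it is skipped
    mean_knn = dist[:, 1:k + 1].mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    keep = mean_knn <= threshold
    return points[keep], keep
```

A larger `std_ratio` keeps more points; a spatial index such as a k-d tree would be the usual replacement for the brute-force distances on real scans.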

Denoising makes use of the sparsity of the underlying data in some natural feature space. This requires knowledge about natural data; most recent approaches embed this knowledge in a deep learning model.

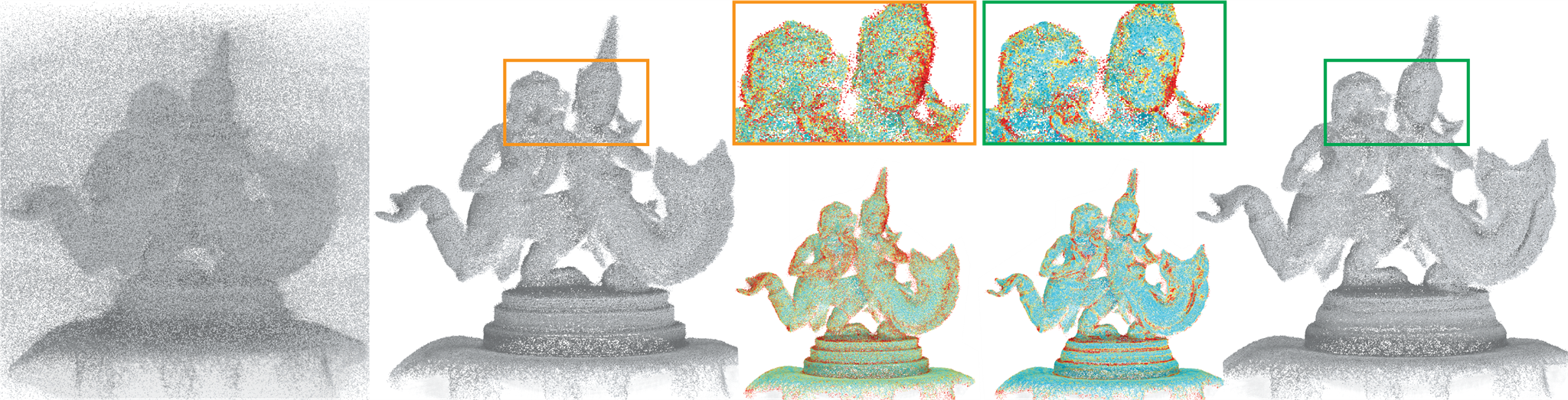

Here is the earlier ToF point cloud example before denoising:

Here it is after denoising with a residual neural network on the depth map:

Voxels

Voxels are the 3D equivalent of 2D pixels. In an occupancy map, each voxel contains a binary value (occupied or empty). More complex scanning can produce floating-point or vector values for each voxel: this is commonly the case in medical imaging. A binary occupancy map can be obtained by thresholding these values with application-specific criteria.
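
The two routes to an occupancy map can be sketched in a few lines of NumPy. The function names `points_to_occupancy` and `threshold_volume`, and the parameters `voxel_size`, `origin`, `shape` and `level`, are hypothetical and chosen for this example; a single fixed threshold is the simplest possible criterion and real pipelines may use something more elaborate.

```python
import numpy as np

def points_to_occupancy(points, voxel_size, origin, shape):
    """Binary occupancy grid: a voxel is True if at least one point falls inside it."""
    idx = np.floor((points - origin) / voxel_size).astype(int)
    # Ignore points that fall outside the grid bounds
    inside = np.all((idx >= 0) & (idx < np.array(shape)), axis=1)
    grid = np.zeros(shape, dtype=bool)
    grid[tuple(idx[inside].T)] = True
    return grid

def threshold_volume(volume, level):
    """Binary occupancy from a scalar volume: True where the value reaches the level."""
    return volume >= level
```

For example, `points_to_occupancy(points, 0.01, np.zeros(3), (128, 128, 128))` would bin points into 1 cm voxels, assuming coordinates in metres.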

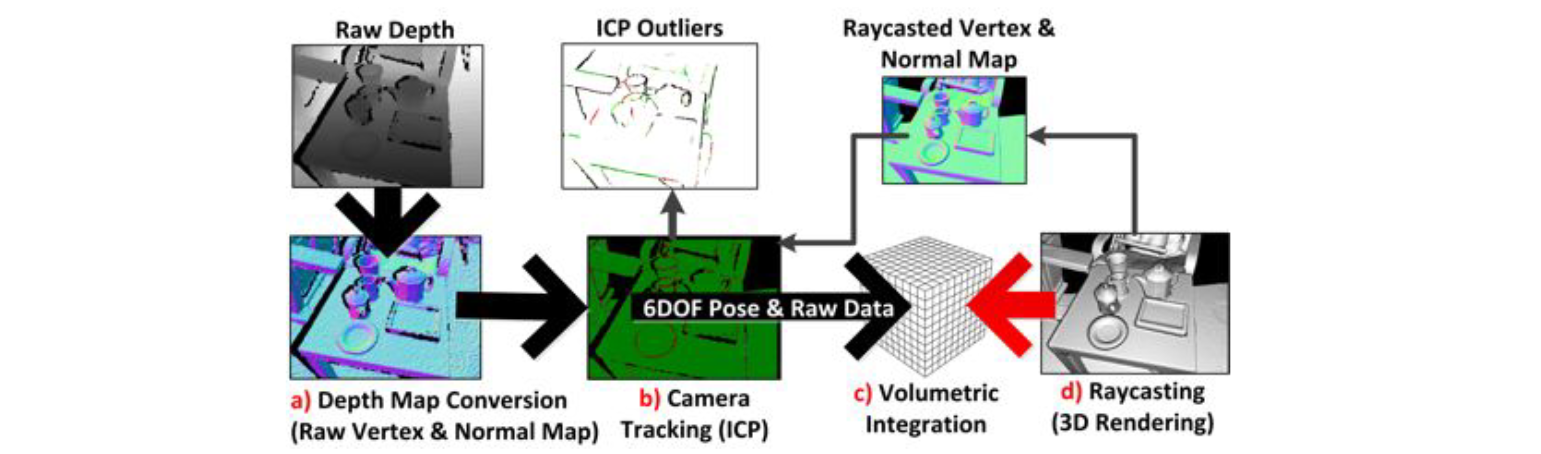

Kinect Fusion makes use of voxels as an intermediary step when merging estimates from several ToF cameras (or a single moving one).

Meshes

Meshes are by far the most popular representation for 3D models in the context of 3D scanning, 3D printing and gaming.

The key challenge in scanning workflows often lies in mesh construction and repair. Proprietary software such as Agisoft Metashape provides many meshing algorithms and repair/denoising tools. There are some open source alternatives:

or simpler tools targeting printability:

More details on mesh processing are available here.