Machine Learning Control

Machine Learning Control (MLC) is a subfield of control theory in which optimal control problems are solved with machine learning methods.

In robotics, machine learning can be used for things such as machine vision, imitation learning and self-supervised learning.

Evolutionary and Genetic Algorithms (EA & GA)

A popular approach is the use of Evolutionary Algorithms, and specifically Genetic Algorithms (GA). These algorithms simulate principles of natural evolution, such as mutation, crossover and selection. To find the best solution in a given solution domain, a population of candidate solutions evolves over multiple generations, with successful properties passed down from one generation to the next.
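To make the loop of selection, crossover and mutation concrete, here is a minimal sketch in Python. It is only an illustration: the bit-string genome, the "count the 1-bits" fitness function, tournament selection and every parameter value are assumptions chosen for brevity, not something taken from the projects discussed here.

```python
import random


def fitness(genome):
    # Toy objective (assumption): the more 1-bits, the fitter the genome.
    return sum(genome)


def tournament_select(population, k, rng):
    # Selection: the fittest of k randomly drawn individuals becomes a parent.
    return max(rng.sample(population, k), key=fitness)


def crossover(parent_a, parent_b, rng):
    # Single-point crossover: head of one parent, tail of the other.
    point = rng.randrange(1, len(parent_a))
    return parent_a[:point] + parent_b[point:]


def mutate(genome, rate, rng):
    # Mutation: flip each bit independently with probability `rate`.
    return [1 - gene if rng.random() < rate else gene for gene in genome]


def evolve(genome_length=20, pop_size=30, generations=40,
           mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Elitism: carry the current best over unchanged, so the best
        # fitness in the population never gets worse.
        children = [max(population, key=fitness)]
        while len(children) < pop_size:
            parent_a = tournament_select(population, 3, rng)
            parent_b = tournament_select(population, 3, rng)
            child = crossover(parent_a, parent_b, rng)
            children.append(mutate(child, mutation_rate, rng))
        population = children
    return max(population, key=fitness)
```

Calling `evolve()` returns the fittest genome of the final generation. In a real design problem the genome would encode something like hull dimensions or blend ratios, and `fitness` would be a simulation or a cost model instead of a bit count.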

This technique is used widely in industry, from the design of a boat hull that is hydrodynamic yet can carry a large freight to the composition of a coffee blend that is cost-efficient yet doesn’t taste disgusting.

I love to look at this now-ancient project called Evolved Virtual Creatures by Karl Sims, which illustrates the principle of GA quite literally.

Karl Sims, Evolved Virtual Creatures (1994) Source: YouTube.

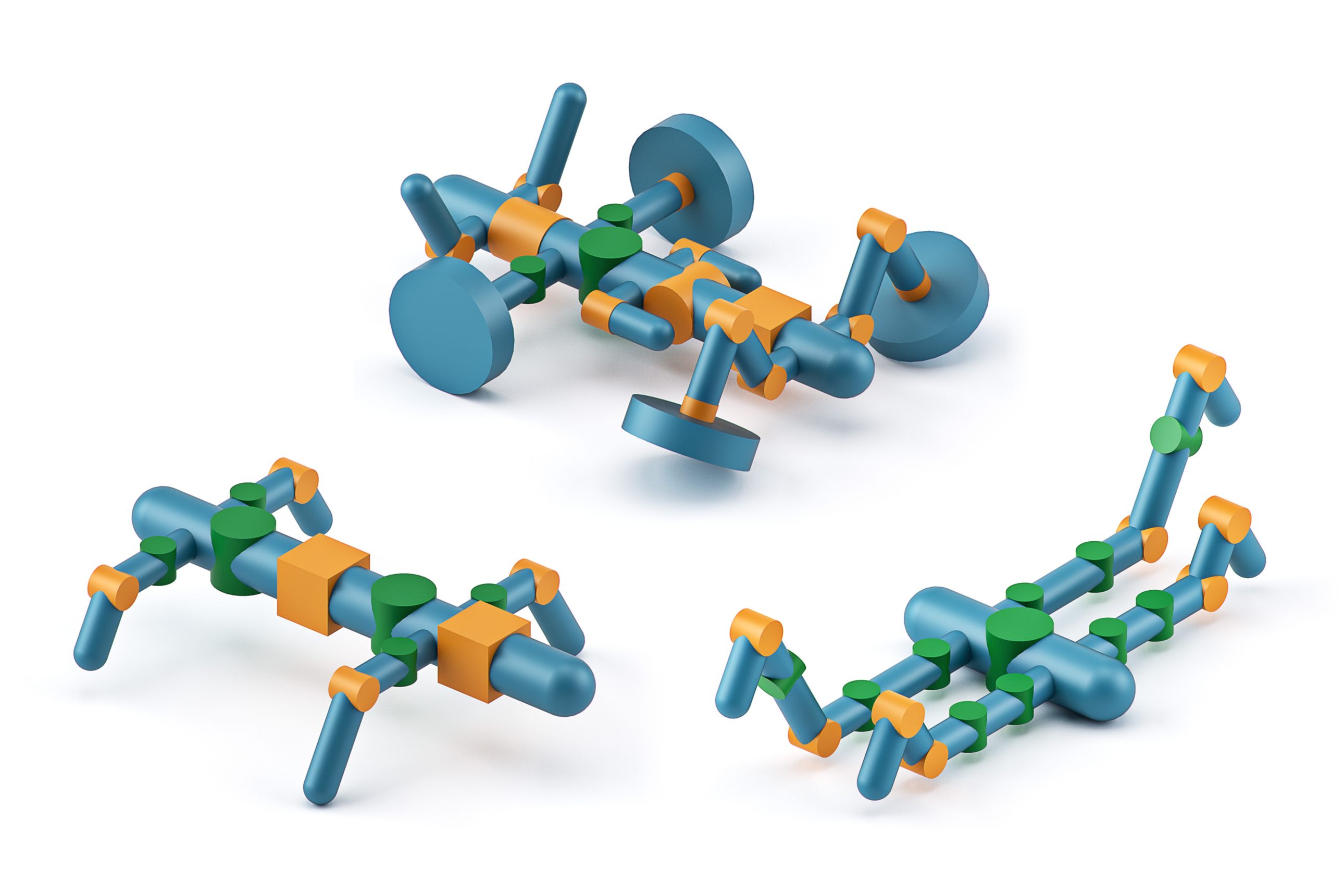

RoboGrammar

While Karl Sims's example is ancient, this principle of generation lives on in modern applications. One instance is RoboGrammar, a graph grammar for terrain-optimized robot design developed at MIT CSAIL. With it, it is possible to generate robots that are optimized for traversing given terrains. The graph grammar is set up so that it generates only designs that can realistically be fabricated.
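The idea that a grammar can only ever produce valid designs can be sketched with a toy example. The rules below are entirely hypothetical and far simpler than RoboGrammar's actual graph grammar (they derive a flat list of parts, not a graph); they only show how production rules constrain what can be generated.

```python
import random

# Hypothetical production rules, NOT RoboGrammar's real grammar.
# Uppercase symbols get expanded further; lowercase symbols are parts.
# The first option of every rule is non-recursive, which is what lets
# the derivation below terminate.
RULES = {
    "ROBOT": [["BODY"]],
    "BODY": [["segment", "LIMBS"], ["segment", "LIMBS", "BODY"]],
    "LIMBS": [["LIMB", "LIMB"]],  # every body segment gets two limbs
    "LIMB": [["joint", "link", "END"], ["joint", "link", "LIMB"]],
    "END": [["foot"], ["wheel"]],
}


def derive(symbol, rng, depth=0, max_depth=4):
    """Expand `symbol` into a flat list of parts using RULES."""
    if symbol not in RULES:
        return [symbol]  # a concrete part, nothing left to expand
    options = RULES[symbol]
    # Past max_depth, always take the first (non-recursive) option.
    option = options[0] if depth >= max_depth else rng.choice(options)
    parts = []
    for child in option:
        parts.extend(derive(child, rng, depth + 1, max_depth))
    return parts
```

Whatever random choices are made, `derive("ROBOT", random.Random(0))` yields a design in which every segment has two limbs and every limb ends in a foot or a wheel, because no rule allows anything else. An optimizer then only has to search among designs the grammar can produce.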

Isaac Gym

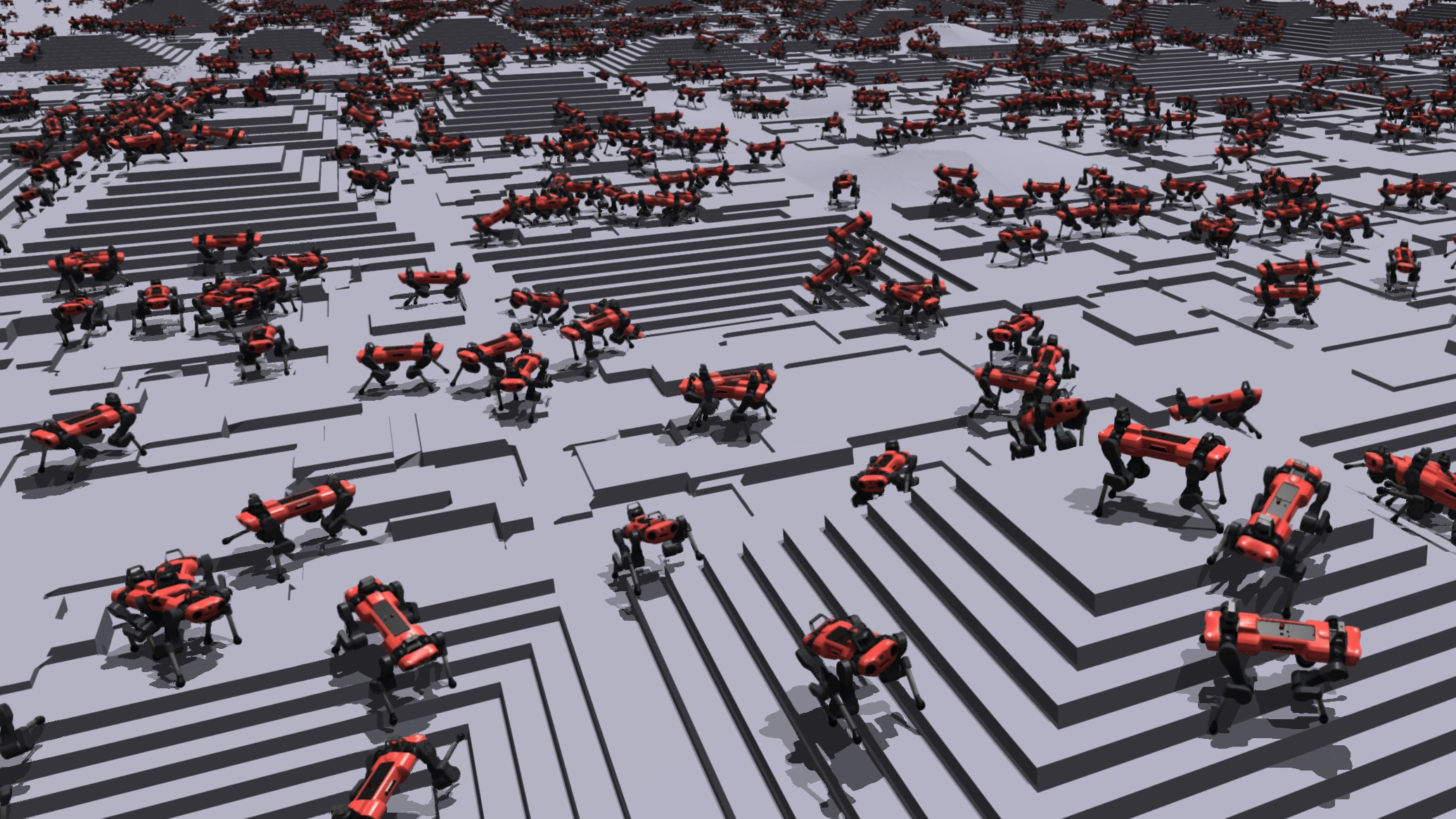

So how do you optimize these robots? Imagine an endless space, not far from where you are, with a seemingly infinite number of simulated objects that perform the same task or similar tasks. This is not a scenario from a bad trip on hallucinogenic substances nor a nightmare, no, it’s NVIDIA’s reinforcement learning physics simulation environment, Isaac Gym (December 17, 2020).

(I wonder if the researchers saw the Rick and Morty episode “A Rickle in Time” (July 26, 2015), which showed the two protagonists splitting time into multiple realities and figuring out which one works out best for them, and thought: this would be a great way to test my model. There is no proof of this whatsoever.)

Source: Rick and Morty Wiki.

Legged Gym

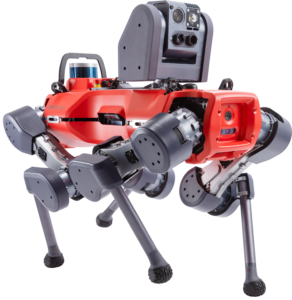

Legged Gym is an environment built on NVIDIA’s Isaac Gym that caters specifically to robots with legs. It is the environment used to train ANYmal (and other robots) to walk on rough terrain.

Source: anymal-research.com

Reinforcement Chair

Another example of a reinforcement learning project is this chair by Shintaro Inoue et al. (Body Design and Gait Generation of Chair-Type Asymmetrical Tripedal Low-rigidity Robot).

They generate the gait for the chair through reinforcement learning. So now it can do chair-like things such as walking or standing back up after being kicked over.

Source: Kento Kawaharazuka on YouTube

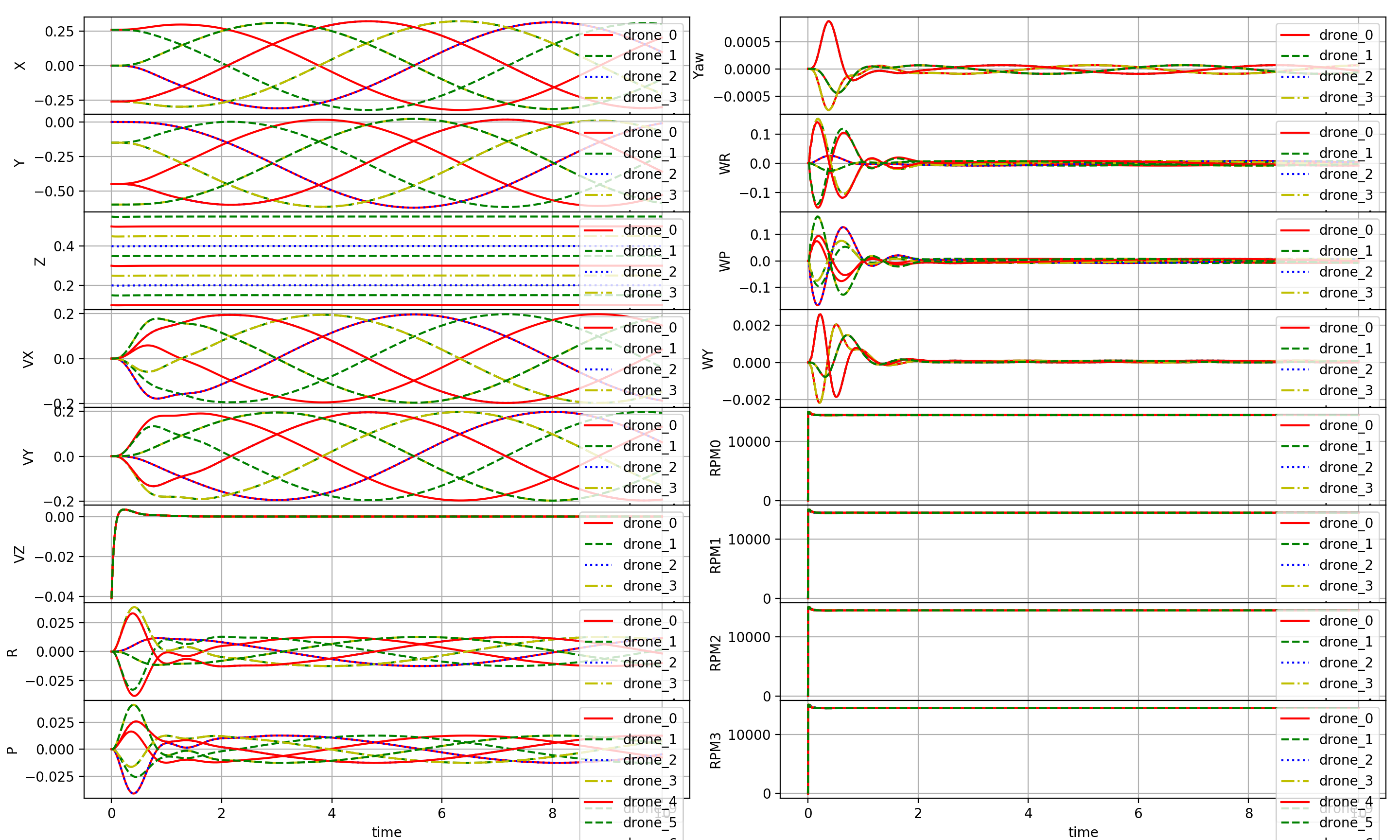

Gym PyBullet Drones

This is a single- or multi-agent reinforcement learning simulation environment specifically for nano-quadcopters.

Source: GitHub