Week 11 :: Interface and Application Programming

How do we incorporate interaction into video processing?

1. Write an application that interfaces a user with a device.

This week, I aimed to learn the basics I would need to build an application that interfaces with my final project.

First, I need a way to use the OpenCV libraries to process images from a camera.

In Networking Week, I tried using Python with "urllib" to access the video stream from the ESP32-CAM. It worked, but I'm not sure it will be fast enough for real-time processing of video from multiple cameras.
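Whatever language does the reading, the stream has to be cut back into individual images before OpenCV can decode them. Assume the camera runs something like the stock CameraWebServer example, which serves MJPEG as a multipart/x-mixed-replace HTTP response with one JPEG per part. Under that assumption, a minimal C++ sketch of the splitting step can scan for the JPEG start (0xFF 0xD8) and end (0xFF 0xD9) markers. The HTTP request and socket reading are left out. `extractJpegFrames` and `ExtractResult` are hypothetical names, not part of any library:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Result of scanning one chunk of MJPEG bytes (hypothetical helper type).
struct ExtractResult {
    std::vector<std::vector<uint8_t>> frames; // complete JPEG images, in arrival order
    std::size_t consumed;                     // bytes up to the end of the last complete frame
};

// Split raw MJPEG stream bytes into complete JPEG images by looking for
// the SOI (0xFF 0xD8) and EOI (0xFF 0xD9) markers.
ExtractResult extractJpegFrames(const std::vector<uint8_t>& buf) {
    ExtractResult result{{}, 0};
    std::size_t start = 0;
    bool inFrame = false;
    for (std::size_t i = 0; i + 1 < buf.size(); ++i) {
        if (!inFrame && buf[i] == 0xFF && buf[i + 1] == 0xD8) {
            start = i;      // SOI: a new image begins here
            inFrame = true;
            ++i;            // skip the second marker byte
        } else if (inFrame && buf[i] == 0xFF && buf[i + 1] == 0xD9) {
            // EOI: copy the whole image, both markers included
            result.frames.emplace_back(buf.begin() + start, buf.begin() + i + 2);
            result.consumed = i + 2;
            inFrame = false;
            ++i;
        }
    }
    return result;
}
```

Bytes after `consumed` belong to a frame that has not fully arrived yet and would be kept for the next read. Scanning for markers instead of parsing the multipart boundaries is a simplification. It assumes the camera's JPEGs do not embed thumbnails, which would carry their own markers.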

I'm testing openFrameworks because it seems to offer user-friendly ways to work with OpenCV quickly (through addons such as ofxOpenCv), and it's built specifically for real-time interactive applications.

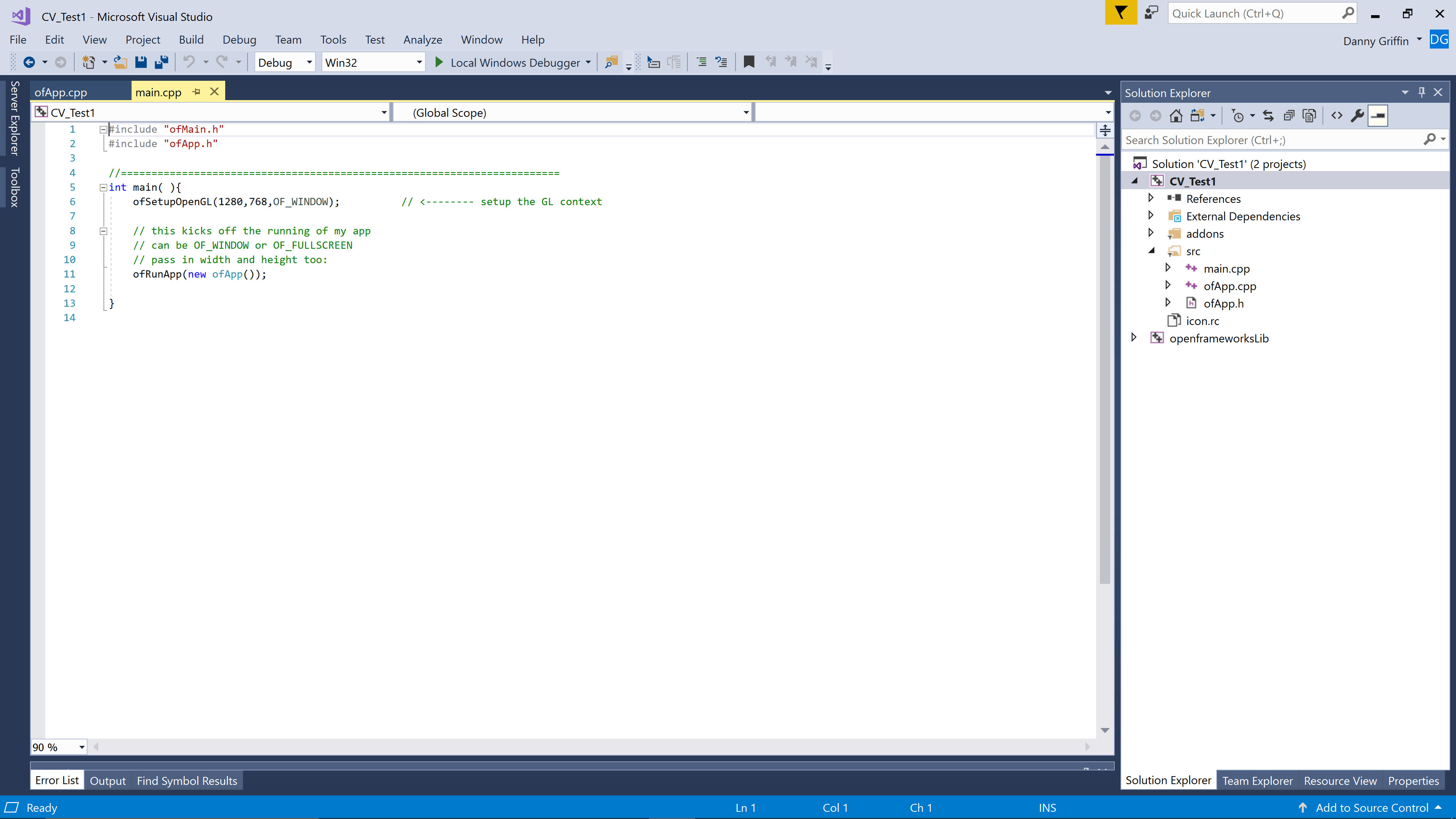

First test: a refresher on generating a project with openFrameworks.

Here, in main.cpp, the size of the application window is declared.

A window will pop up with a resolution of 1280 x 768 pixels.

This week, I'm trying to use ofxOpenCv for motion tracking. The general process is to grab frames from the camera, convert them to grayscale, and then threshold them. Thresholding pushes the contrast to pure black and white so that the outlines of the desired objects or features can be found.
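The grayscale and threshold steps can be sketched without any library. The sketch below roughly follows the sequence in the ofxOpenCv example bundled with openFrameworks: color image to grayscale, absolute difference against a stored background frame, then threshold. It stops before contour finding, which ofxCvContourFinder handles. The frame layout (packed 8-bit RGB, row-major) and the names `RgbFrame`, `toGray` and `motionMask` are assumptions for illustration:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// One camera frame as packed 8-bit RGB (3 bytes per pixel), row-major.
struct RgbFrame {
    int width;
    int height;
    std::vector<uint8_t> data; // width * height * 3 bytes
};

// RGB -> 8-bit grayscale with BT.601 luma weights (0.299, 0.587, 0.114),
// done in integer arithmetic scaled by 1000; +500 rounds to nearest.
std::vector<uint8_t> toGray(const RgbFrame& f) {
    std::vector<uint8_t> gray(static_cast<std::size_t>(f.width) * f.height);
    for (std::size_t p = 0; p < gray.size(); ++p) {
        int r = f.data[3 * p];
        int g = f.data[3 * p + 1];
        int b = f.data[3 * p + 2];
        gray[p] = static_cast<uint8_t>((299 * r + 587 * g + 114 * b + 500) / 1000);
    }
    return gray;
}

// Binary motion mask: 255 where brightness differs from the background
// by more than `threshold`, 0 elsewhere. Both images must be the same size.
std::vector<uint8_t> motionMask(const std::vector<uint8_t>& background,
                                const std::vector<uint8_t>& current,
                                int threshold) {
    std::vector<uint8_t> mask(current.size(), 0);
    for (std::size_t p = 0; p < current.size(); ++p) {
        int diff = std::abs(static_cast<int>(current[p]) - static_cast<int>(background[p]));
        mask[p] = diff > threshold ? 255 : 0;
    }
    return mask;
}
```

The threshold value acts as the contrast knob. Raise it and only strong changes survive as white pixels; lower it and sensor noise starts to leak into the mask. The grayscale weights are the BT.601 luma coefficients, the same ones OpenCV uses for its RGB-to-gray conversion.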

To build this demo app, I'm drawing on bits of code from several of Kyle McDonald's examples.

Demo screen capture.

This isn't much of a UI design, but it works well enough as a proof of concept to convince me that openFrameworks will work for my final project.

As I finalize my schematic design for the final weeks, I have a few challenges in mind for the interface development:

1. Connect to the ESP32-CAM rather than a webcam.

2. Connect to an output device.

3. Track the center of an object.
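For the third challenge, one common approach treats the thresholded image as a binary mask and takes its centroid from the raw image moments. m00 counts the white pixels, m10 and m01 sum their x and y coordinates, and the center is (m10/m00, m01/m00). The blobs returned by ofxCvContourFinder should already carry a centroid, so in openFrameworks this may come for free. The plain C++ sketch below just shows the arithmetic. It assumes a row-major 8-bit mask like the one a threshold step produces, and `Centroid` and `maskCentroid` are hypothetical names:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Center of the white region of a mask, if there is one.
struct Centroid {
    bool found; // false when the mask has no white pixels
    double x;
    double y;
};

// Centroid of all non-zero pixels in a width x height row-major mask,
// computed from the raw image moments m00, m10 and m01.
Centroid maskCentroid(const std::vector<uint8_t>& mask, int width, int height) {
    double m00 = 0.0, m10 = 0.0, m01 = 0.0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (mask[static_cast<std::size_t>(y) * width + x] != 0) {
                m00 += 1.0; // area: number of white pixels
                m10 += x;   // sum of x coordinates
                m01 += y;   // sum of y coordinates
            }
        }
    }
    if (m00 == 0.0) return Centroid{false, 0.0, 0.0}; // nothing detected
    return Centroid{true, m10 / m00, m01 / m00};
}
```

If several objects are in view, the mask has to be split into separate blobs first, which is the contour finder's job. Otherwise this returns the center of all of them combined.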