Week 12: Interface and Application Programming

Machine Learning Web Interface using Webcam

For my final project, I want my camera sensor to send a video stream to a mobile application, and I want that application to recognize whether specific people or objects are in the image (that is, can be "seen" by the camera). My first step is to test the method and libraries I want to use before trying this on a mobile device. I modified a JavaScript application built on the DeepLearnJS library and its machine learning tutorials. The application lets the user "train" the computer on sets of images or image features, so that the machine learning algorithm can then "classify" whether the features it was trained on appear in new images.

To do this, I used a neural network called SqueezeNet. For more information, you can read the original SqueezeNet paper, but the TLDR is that SqueezeNet is a neural network architecture made up of many convolutional layers and is known for being comparatively small in terms of size and training time. I used a pretrained version of SqueezeNet provided by the DeepLearnJS library that has already been trained on the ImageNet database, a huge set of labeled images commonly used in machine learning for visual object recognition. I feed each image into SqueezeNet and get back a 1000-dimensional logits vector (one score per ImageNet class), which serves as a high-level numerical summary of the notable features of that image, based on what the pretrained SqueezeNet learned from ImageNet. I give my system labeled training data (for example, 50 images of my face via the webcam and 50 images of the background environment), and then use a technique called K-Nearest Neighbors to decide how each new, unlabeled image should be classified: the new image's logits vector is compared against the stored training vectors, and the image takes the label that is most common among its closest matches.
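The K-Nearest Neighbors step can be sketched in plain JavaScript. This is only an illustration under stated assumptions, not the code from the DeepLearnJS demo: the function names `euclideanDistance` and `knnClassify` are hypothetical, the 4-dimensional vectors are made-up stand-ins for the 1000-dimensional logits, and reporting confidence as the share of neighbor votes is one plausible choice rather than necessarily what the library does.

```javascript
// Minimal K-Nearest Neighbors sketch over feature vectors (plain JavaScript).
// Assumption: each training example is a { label, vector } pair, where the
// vector stands in for the 1000-dimensional logits output by SqueezeNet.

// Straight-line distance between two vectors of equal length.
function euclideanDistance(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

// Classify `query` by majority vote among its k nearest training examples.
function knnClassify(examples, query, k) {
  const nearest = examples
    .map((ex) => ({ label: ex.label, dist: euclideanDistance(ex.vector, query) }))
    .sort((a, b) => a.dist - b.dist)
    .slice(0, k);

  // Count how many of the k neighbors carry each label.
  const votes = {};
  for (const n of nearest) {
    votes[n.label] = (votes[n.label] || 0) + 1;
  }

  // Pick the label with the most votes.
  let best = null;
  for (const label of Object.keys(votes)) {
    if (best === null || votes[label] > votes[best]) {
      best = label;
    }
  }

  // Confidence here is simply the fraction of neighbors that agree.
  return { label: best, confidence: votes[best] / nearest.length };
}

// Toy training set: made-up 4-dimensional vectors for the three classes.
const examples = [
  { label: 'me', vector: [0.9, 0.1, 0.0, 0.2] },
  { label: 'me', vector: [0.8, 0.2, 0.1, 0.1] },
  { label: 'soda can', vector: [0.1, 0.9, 0.2, 0.0] },
  { label: 'soda can', vector: [0.2, 0.8, 0.1, 0.1] },
  { label: 'background', vector: [0.0, 0.1, 0.9, 0.8] },
  { label: 'background', vector: [0.1, 0.0, 0.8, 0.9] },
];

const result = knnClassify(examples, [0.85, 0.15, 0.05, 0.1], 3);
console.log(result.label, result.confidence);
```

With k = 3, two of the three closest examples to the query are labeled "me", so the sketch classifies the query as "me" with two of three votes. Because KNN only stores the labeled vectors and compares against them, "training" amounts to adding examples, which fits with how quickly the demo can be taught a new class.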

I built the program and currently have it running locally at localhost:3000. In the demo video below, I train the program to recognize 3 classes using training and testing images fed through my computer's webcam: me, a soda can, and the background. Once training is complete, the program reports, with a percent confidence, how it thinks each new image should be classified. You can see that it trains fairly quickly and classifies pretty accurately.

I still need to port this functionality to mobile and make sure that SqueezeNet is compact enough for a smartphone to run at a reasonable speed, but this serves as a nice proof of concept.

Mobile App Using MIT App Inventor

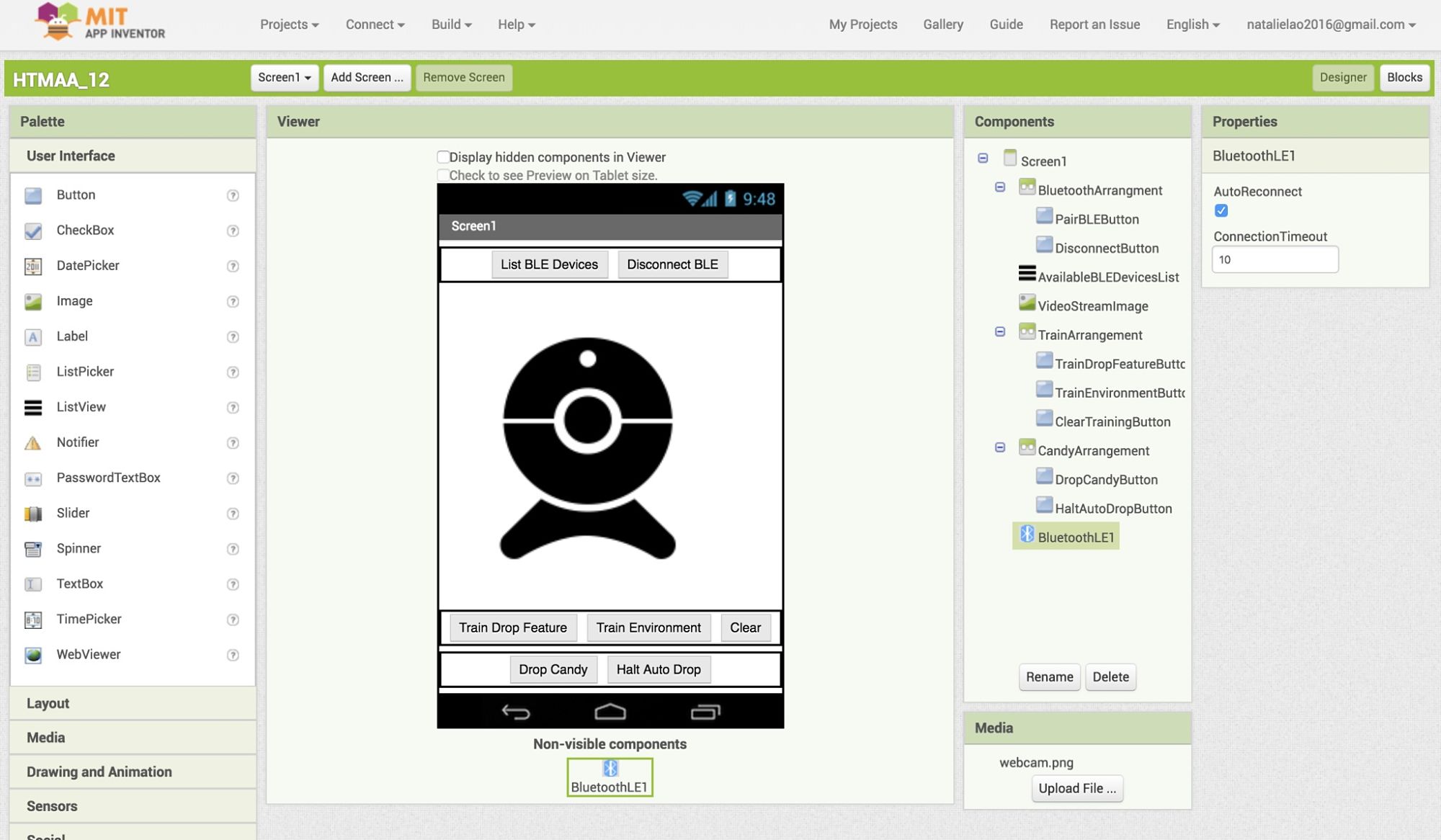

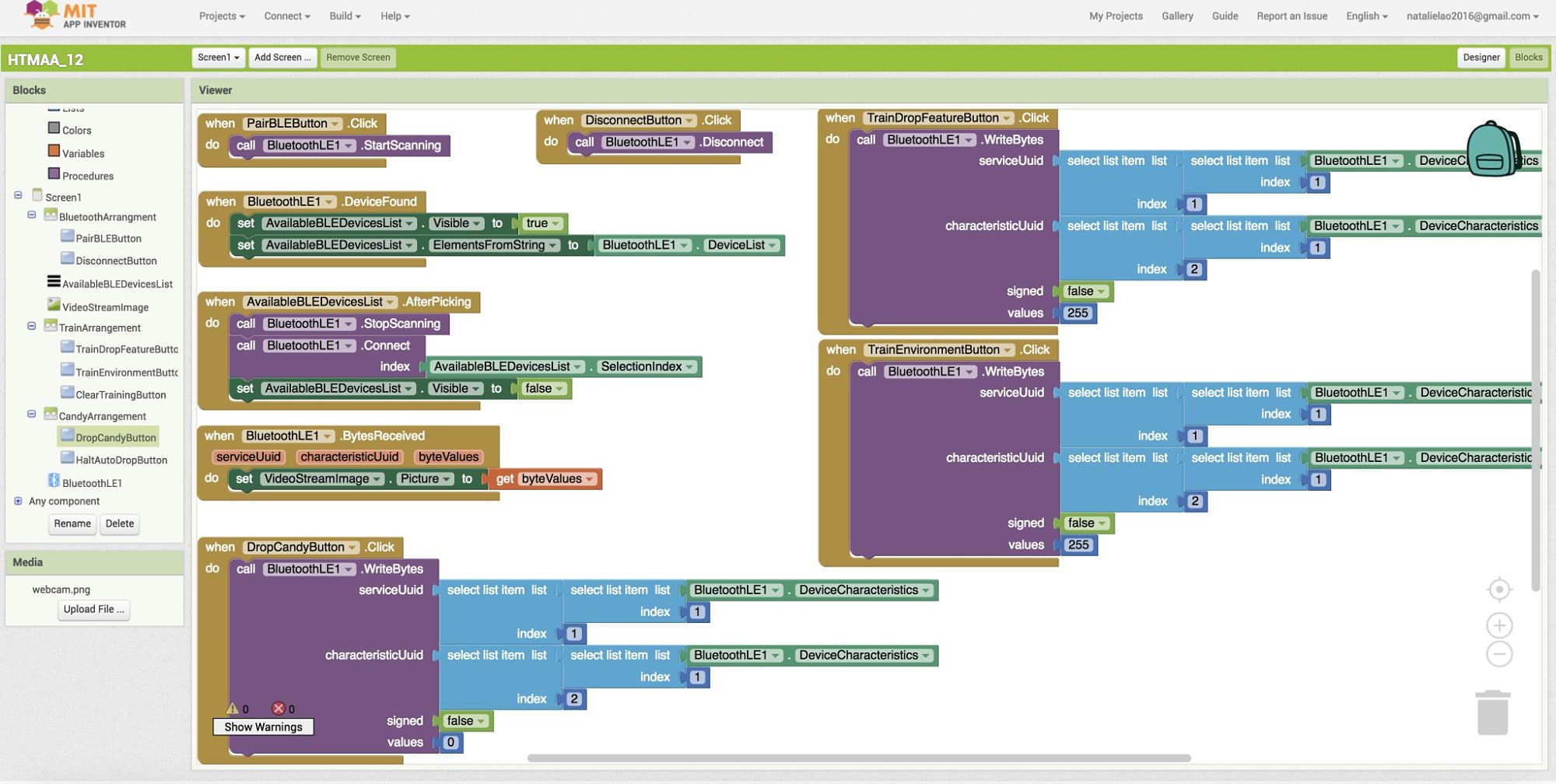

I started building what I could of the mobile app for my final project using MIT App Inventor. I haven't finished building the image recognition block for App Inventor, and I don't yet know the specifics of the BLE unit or how it will actually transmit the video data, so I started with everything else: the user interface, the logic for pairing to a BLE device, and the setup for training and the output device.

Note that I am using the new BLE extension (AIX file) that can be found in the App Inventor extensions library instead of the BluetoothClient and BluetoothServer components currently available in the MIT App Inventor "Connectivity" side shelf.

Screenshots of the Designer and Blocks pages for my app are shown above.

I plan to connect a BLE device to my app for next week's assignment. I don't expect my image classification block to be ready by then, so I will likely just have my app write to the nRF52 and trigger some arbitrary response when it receives the streamed image data from the BLE device.