Goal

- Write an application that interfaces a user with an input and/or output device that you made

Result:

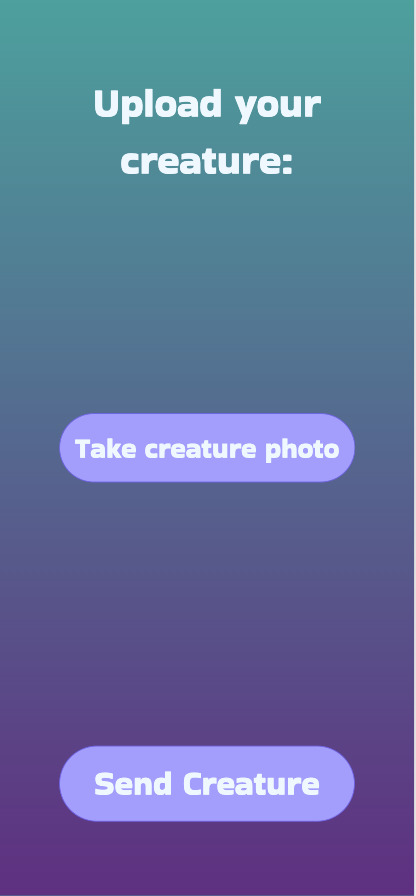

I decided to use this week to work on an app that would be part of my final project. I wanted people to be able to draw their own creatures and send them to the tidepool projection by taking a picture of the drawing. My plan was to use OpenCV to process the picture, extract just the creature, and bring it to life in the projection.

I'm very used to programming in the Node.js ecosystem, but I wanted to try something new, so I chose Flask, a micro web framework for Python. Since I was doing this on my Raspberry Pi, I followed this tutorial. For handling photo uploads, I also followed this and this. Getting a basic site with a file input up and running went smoothly, and I added some CSS styling to make it look nicer.
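For reference, a minimal Flask upload page looks roughly like the sketch below. It is not the project's code: the route, the form field name `photo`, the `uploads` folder, and the allowed extensions are all hypothetical choices for illustration.

```python
import os

from flask import Flask, abort, request
from werkzeug.utils import secure_filename

app = Flask(__name__)
# Hypothetical destination folder; point it wherever uploaded photos should go.
app.config["UPLOAD_FOLDER"] = "uploads"

ALLOWED_EXTENSIONS = {"png", "jpg", "jpeg"}

UPLOAD_FORM = """<!doctype html>
<form method="post" enctype="multipart/form-data">
  <input type="file" name="photo" accept="image/*">
  <input type="submit" value="Send to the tidepool">
</form>"""


def allowed_file(filename):
    """True if the file name ends in one of the accepted image extensions."""
    return "." in filename and filename.rsplit(".", 1)[1].lower() in ALLOWED_EXTENSIONS


@app.route("/", methods=["GET", "POST"])
def upload():
    if request.method == "GET":
        return UPLOAD_FORM
    photo = request.files.get("photo")  # key matches <input name="photo">
    if photo is None or not photo.filename or not allowed_file(photo.filename):
        abort(400)
    # secure_filename strips path separators and other unsafe characters.
    name = secure_filename(photo.filename)
    if not name:
        abort(400)
    folder = app.config["UPLOAD_FOLDER"]
    os.makedirs(folder, exist_ok=True)
    photo.save(os.path.join(folder, name))
    return "Thanks! Your creature is on its way.", 201


if __name__ == "__main__":
    # host="0.0.0.0" makes the dev server reachable from phones on the same network.
    app.run(host="0.0.0.0", port=5000)
```

The `enctype="multipart/form-data"` attribute on the form is what makes the browser send the file itself rather than just its name.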

After that, I had to do the actual image processing. Compiling OpenCV on the Raspberry Pi took many hours. After many failed attempts caused by operating system and version mismatches, I got it to work by following this. The key was downgrading Raspbian to Buster.

While that was compiling, I did some reading on how to use OpenCV to take a photo of a creature drawing and output only the creature, with the background removed. The steps are basically:

- Make sure the image is in the right format (in my case, I needed to add an alpha channel)

- Convert the image to grayscale and threshold it (turn each pixel pure black or pure white depending on whether it is darker or lighter than a threshold)

- Remove noise from the image

- Run a contour finder and pick the contour with the largest area

- Create a mask of that maximum-area contour to remove everything else from the image

- Use the maximum-area contour to define a minimum bounding rectangle and crop the image to it

You can see the details of this procedure in Python OpenCV here.

Finding the right parameters took some fiddling; you can see them in my code. Using adaptive thresholding helped a lot. I drew some creatures and ran them through my app to see how it was working. Unfortunately, I lost all the images from my tinkering phase when I accidentally ran rm -rf on everything on my Raspberry Pi later in the week... Here are some of the creatures visitors drew during Open House instead!

Another key part of this app is that I saved the processed images to a directory within the openFrameworks project that would eventually become my Tidepool projection. I followed this example to implement my own version of an Image File Watcher. When the app starts, it loads all the images already in the directory (for debugging); once a new image arrives, it "starts over" and keeps only the images added from then on (code here).
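The watcher itself was written in C++ for openFrameworks. As a hypothetical Python analogue of the same "load everything, then start over" behaviour, assuming the folder is simply polled (for example once per frame) rather than watched through any particular file-system notification API, the logic could look like this. The class and method names are made up for illustration.

```python
import os
import tempfile

IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg")


class ImageDirWatcher:
    """Hypothetical polling watcher: show startup images until the first new one arrives."""

    def __init__(self, directory):
        self.directory = directory
        # Debug behaviour: everything already in the folder is shown at startup.
        self.seen = set(self._list_images())
        self.active = sorted(self.seen)
        self.showing_startup_images = True

    def _list_images(self):
        return [name for name in os.listdir(self.directory)
                if name.lower().endswith(IMAGE_EXTENSIONS)]

    def poll(self):
        """Pick up new files and return the file names that should currently be displayed."""
        new = sorted(set(self._list_images()) - self.seen)
        if new:
            self.seen.update(new)
            if self.showing_startup_images:
                # First real arrival: drop the startup images and start over.
                self.active = []
                self.showing_startup_images = False
            self.active.extend(new)
        return list(self.active)


if __name__ == "__main__":
    folder = tempfile.mkdtemp()
    open(os.path.join(folder, "old_crab.png"), "w").close()
    watcher = ImageDirWatcher(folder)
    print(watcher.poll())  # ['old_crab.png']
    open(os.path.join(folder, "new_fish.png"), "w").close()
    print(watcher.poll())  # ['new_fish.png']
```

Tracking a `seen` set means each file triggers the reset at most once, and non-image files dropped into the folder are ignored.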